Hyper-V 3.0 versus ... or a suicidal holy war

Good day, everyone!

Today's topic is (in my personal opinion) very relevant, if not downright painful...

Yes, yes: I decided to make a functional and economic comparison of the two leading hypervisor platforms, Hyper-V 3.0 and VMware ESXi 5.0/5.1... And then, to complete the picture, I decided to add Citrix XenServer 6 to the comparison as well...

I am already expecting rocks to fly into my garden, but I will hold the defense to the end. Please take whatever position, side or camp suits you, and let the fight begin under the cut...


Comparison criteria

Before we start a battle to the death, I propose we define the criteria, that is, the domains within which features will be grouped and compared across the platforms:

1) Scalability and performance

2) Security and network

3) Infrastructure flexibility

4) Fault tolerance and business continuity

The categories are set, so let's get started!

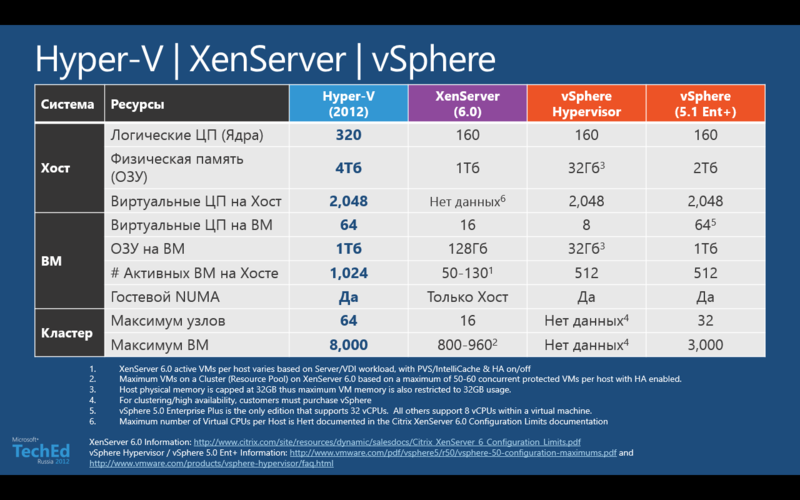

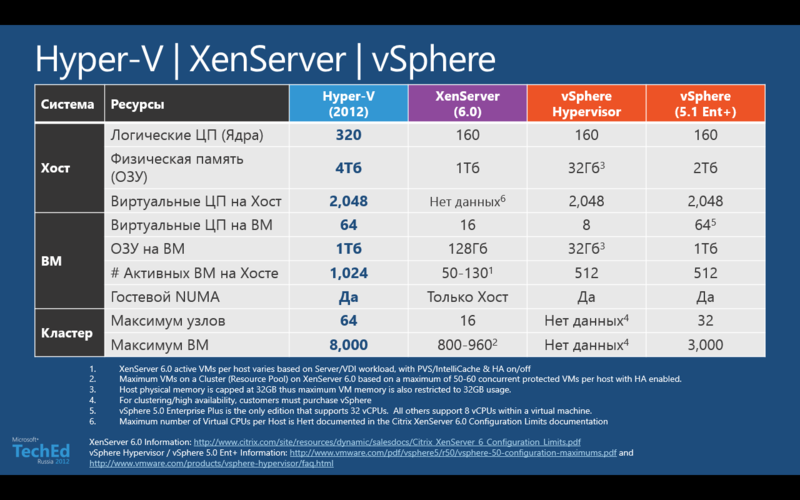

Scalability and performance

For a start, let's look at the comparative scalability table for our test subjects:

As the table shows, the leader here is clear and unambiguous: Hyper-V 3.0. It is worth mentioning that the performance and scalability of Microsoft's hypervisor do not depend on whether you pay for it. With VMware it is the other way round: the more evergreen dollars you pay, the more features you get.

That is why VMware gets two columns in the comparison. XenServer is clearly neither here nor there; it is stuck somewhere in the middle...

However, if you recall Citrix's numerous statements that "we are not a company that makes virtualization solutions, but a company that makes traffic optimization solutions," then XenServer is simply a tool for accomplishing that task. Still, given that Hyper-V and Citrix Xen are distant relatives, such a difference in scale is depressing.

What really annoyed me about VMware was the back-and-forth of its RAM licensing policy, the so-called vRAM, WHICH IN ESSENCE CAN ONLY BE CALLED EXTORTION. Not only did you have to license the amount of RAM consumed by virtual machines, but when upgrading you also had to pay for the delta of this very vRAM parameter (since ESX/ESXi 4.x simply had no such parameter), which means people were out of pocket. True, with the release of 5.1 VMware changed its mind and dropped this absurd model, but such abrupt pricing convulsions do not bode well...
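To make the vRAM complaint concrete, here is a back-of-the-envelope calculator. It assumes the pooled-vRAM model as I understand it: each per-CPU license carries a fixed vRAM entitlement, and you need enough licenses to cover both the physical sockets and the vRAM configured for powered-on VMs. The entitlement per license is a parameter you would have to look up for your edition; the 48 GB in the example is an assumption, not VMware's price list.

```python
import math


def licenses_needed(sockets: int, total_vram_gb: float, vram_per_license_gb: float) -> int:
    """Per-CPU licenses required under a pooled-vRAM model (simplified).

    Assumption: at least one license per physical socket, and the pooled
    entitlement (licenses * vram_per_license_gb) must cover all configured vRAM.
    """
    by_sockets = sockets
    by_vram = math.ceil(total_vram_gb / vram_per_license_gb)
    return max(by_sockets, by_vram)


def upgrade_delta(sockets: int, total_vram_gb: float, vram_per_license_gb: float) -> int:
    """Extra licenses on top of the socket count when moving from a
    per-socket model (no vRAM parameter) to the pooled-vRAM model."""
    return licenses_needed(sockets, total_vram_gb, vram_per_license_gb) - sockets


# Hypothetical cluster: 4 dual-socket hosts, VMs configured for 1 TB of vRAM,
# and an ASSUMED entitlement of 48 GB per license.
print(licenses_needed(sockets=8, total_vram_gb=1024, vram_per_license_gb=48))
print(upgrade_delta(sockets=8, total_vram_gb=1024, vram_per_license_gb=48))
```

With these made-up numbers the socket count alone would call for 8 licenses, but the vRAM pool pushes it to 22, so the upgrade delta is 14 licenses.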

From a technology standpoint, I personally expected a neck-and-neck race, but no, that did not happen, and my interest faded a little... I think the first comparison block needs little further comment; it is fairly "transparent".

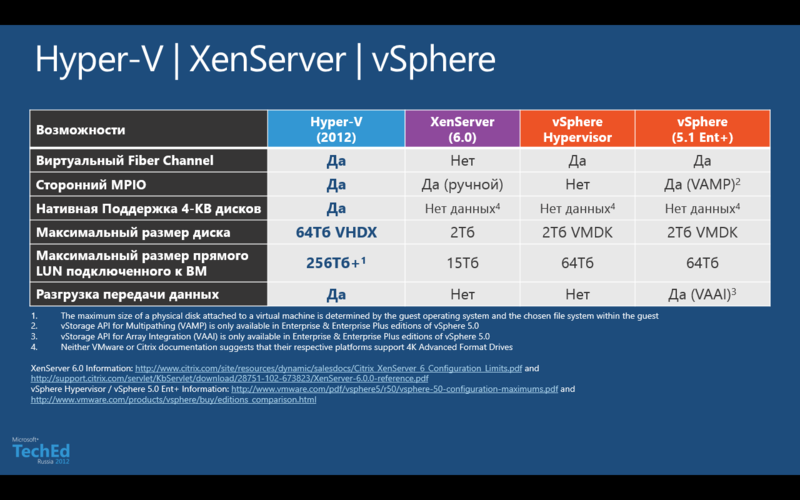

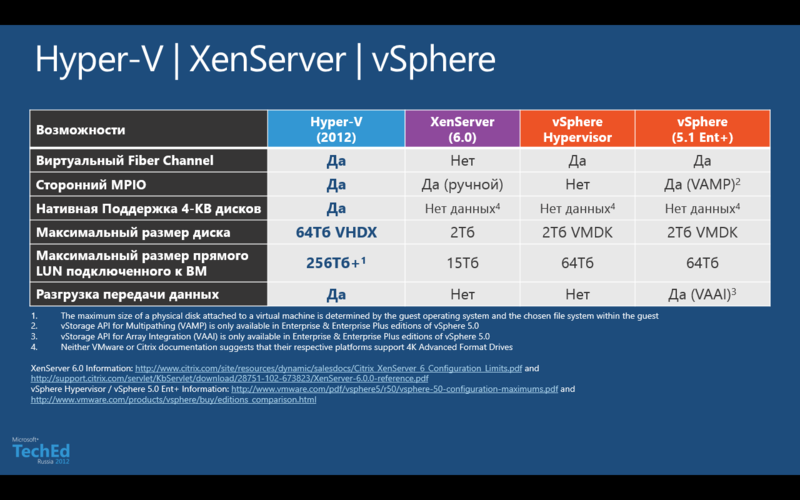

Let's move on to the next part of this block, namely some features related to performance and scalability. Consider how each platform works with the disk subsystem, in the table below:

Looking at this table, the following points stand out:

1) Native support for 4K sectors in virtual disks is quite critical for demanding applications: high-performance DBMSs are usually placed on disks with a large stripe size. Support for this mechanism is officially present only in Hyper-V; I could not find any mention of it in the competing vendors' official documentation.

2) The maximum size of a LUN connected directly to a VM. Here the situation is as follows: in Citrix and VMware this limit is set by the hypervisor itself, while in Hyper-V it depends mostly on the guest OS. With Windows Server 2012 as the guest OS, you can pass through LUNs larger than 256 TB, whereas the other hypervisors top out at 64 TB (VMware) and 15 TB (Citrix).
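Why native 4K matters can be shown with a little arithmetic. On a "512e" disk (4 KB physical sectors emulating 512-byte logical ones), a write that does not start and end on a 4 KB boundary forces the storage stack to read the whole physical sector, patch it and write it back (read-modify-write). The sketch below is a simplified model that ignores caching: it only counts which physical sectors a write touches and whether it triggers RMW.

```python
PHYSICAL_SECTOR = 4096  # bytes: Advanced Format physical sector size


def physical_sectors_touched(offset: int, length: int, sector: int = PHYSICAL_SECTOR) -> range:
    """Indices of the physical sectors covered by a write of `length` (> 0) bytes at `offset`."""
    first = offset // sector
    last = (offset + length - 1) // sector
    return range(first, last + 1)


def needs_read_modify_write(offset: int, length: int, sector: int = PHYSICAL_SECTOR) -> bool:
    """True if the write only partially covers at least one physical sector."""
    return offset % sector != 0 or (offset + length) % sector != 0


# An 8 KB database page written at a 4 KB-aligned offset: two sectors, no RMW.
print(list(physical_sectors_touched(8192, 8192)), needs_read_modify_write(8192, 8192))
# The same page shifted by one 512-byte logical sector: three sectors, RMW needed.
print(list(physical_sectors_touched(8192 + 512, 8192)), needs_read_modify_write(8192 + 512, 8192))
```

A single 512-byte misalignment turns one clean two-sector write into a three-sector write with two partial sectors that must be read first, which is exactly the overhead native 4K support in the virtual disk avoids.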

In terms of functionality, XenServer looks very weak. Virtual Fibre Channel support should be the norm by now, at least at the platform level, but XenServer is not a positive example here. Everyone has long since grown used to VMware's policy of paid features: the ESXi platform itself can do this, and the only question is whether you are ready to pay that much for it. vSphere Enterprise Plus is far from cheap, and you must also buy support.

Support for offloading data operations to the SAN in hardware is also a very nice bonus. Previously this was an exclusive VMware feature, vStorage API for Array Integration (VAAI), but Hyper-V 3.0 now has a similar technology in its arsenal, Offloaded Data Transfer (ODX). Most storage vendors have either already released firmware supporting this technology or are about to. Citrix is in a sad position here: XenServer has no technologies for optimizing work with SAN systems.
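The point of VAAI and ODX is that a copy inside the same array no longer has to travel through the host. ODX works with tokens (as far as I know it builds on the SCSI POPULATE TOKEN and WRITE USING TOKEN commands): the host asks the array for a token representing the source range, then hands that token to the destination write. The toy model below makes the difference in host traffic visible; `ToyArray` and its method names are invented for illustration and are not the Windows or SCSI API.

```python
import secrets


class ToyArray:
    """Hypothetical storage array that supports token-based offloaded copies."""

    def __init__(self, size: int):
        self.blocks = bytearray(size)
        self._tokens = {}          # token -> (offset, length) of the source range
        self.bytes_over_host = 0   # bytes that crossed the host <-> array link

    # Classic path: the host reads the data and writes it back.
    def read(self, offset: int, length: int) -> bytes:
        self.bytes_over_host += length
        return bytes(self.blocks[offset:offset + length])

    def write(self, offset: int, data: bytes) -> None:
        self.bytes_over_host += len(data)
        self.blocks[offset:offset + len(data)] = data

    # Offloaded path: only a small opaque token crosses the link.
    def populate_token(self, offset: int, length: int) -> bytes:
        token = secrets.token_bytes(16)
        self._tokens[token] = (offset, length)
        self.bytes_over_host += len(token)
        return token

    def write_using_token(self, token: bytes, dst_offset: int) -> None:
        self.bytes_over_host += len(token)
        src, length = self._tokens.pop(token)
        # The copy happens entirely inside the array.
        self.blocks[dst_offset:dst_offset + length] = self.blocks[src:src + length]


def host_copy(array: ToyArray, src: int, dst: int, length: int) -> None:
    array.write(dst, array.read(src, length))


def offloaded_copy(array: ToyArray, src: int, dst: int, length: int) -> None:
    array.write_using_token(array.populate_token(src, length), dst)


MB = 1 << 20
plain, odx = ToyArray(4 * MB), ToyArray(4 * MB)
plain.blocks[:4] = odx.blocks[:4] = b"VHDX"
host_copy(plain, 0, 2 * MB, MB)
offloaded_copy(odx, 0, 2 * MB, MB)
print(plain.bytes_over_host, odx.bytes_over_host)
```

Copying 1 MB the classic way moves 2 MB over the host link (read plus write), while the offloaded copy moves only the two 16-byte token exchanges of this model.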

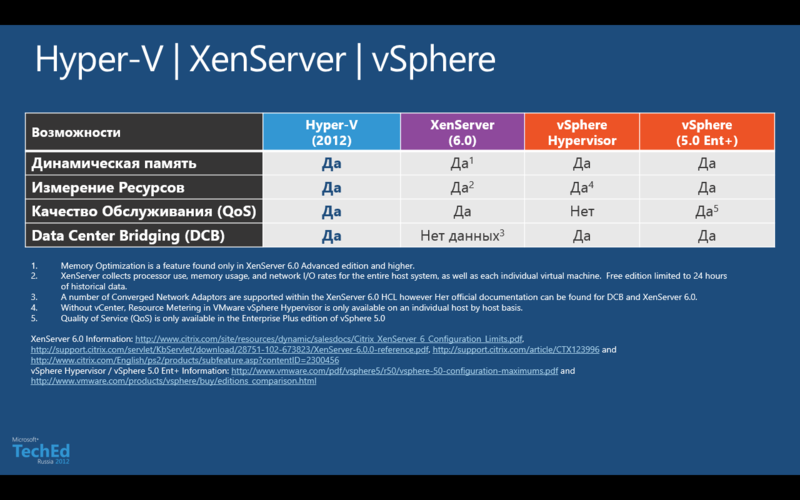

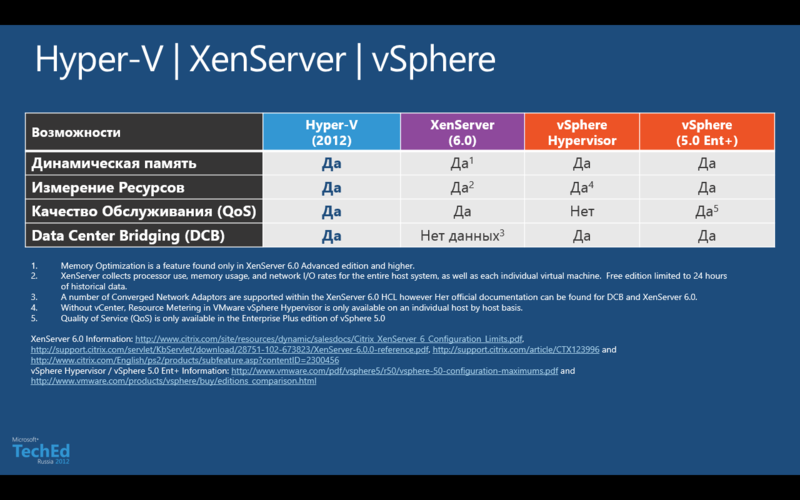

Now that we have dealt with the disk subsystem, let's see what resource management mechanisms our contestants offer.

Feature-wise, the picture here is more or less even, but a few points are worth noting:

1) Data Center Bridging (DCB). Behind this mysterious abbreviation is a set of IEEE 802.1 Ethernet extensions that, in practice, boils down to support for converged network adapters (CNA). A converged network adapter has hardware support and acceleration for both conventional Ethernet networks and SANs at the same time. The idea is that the transmission medium becomes unified (optics or twisted pair), while different protocols run over the same physical link: SMB, FCoE, iSCSI, NFS, HTTP and others. From a management point of view, this lets us dynamically reassign such adapters, redistributing their capacity according to demand and load type. As the table shows, Citrix does not even have DCB support: there is a list of supported CNA adapters, but the official documentation for Citrix XenServer 6.0 does not say a word about DCB.

2) Quality of service (QoS) support: essentially mechanisms for shaping traffic and guaranteeing the quality of the data channel. Everything is basically fine here, except for the free ESXi.

3) Dynamic Memory. Here the story gets very interesting. According to Microsoft, Dynamic Memory has been significantly reworked, improving memory utilization and thereby increasing VM density. On top of that comes a Smart Paging file configured for each individual VM. Honestly, I find it hard to judge how effective this mechanism is, but when I compared the density of identical VMs (running Windows Server 2008 R2) on Hyper-V 2.0 and Hyper-V 3.0, the difference was almost twofold in favor of the latter. This raises a question: VMware has a whole arsenal of memory optimization features, including deduplication of memory pages, which is said to work much more efficiently. But let's look at this more closely. VMware has four memory optimization technologies: Memory Ballooning, Transparent Page Sharing, Compression and Swapping. However, all four kick in only when the host is under memory pressure, i.e. they are reactive rather than proactive. Now add the fact that most modern server platforms support large pages (2 MB instead of 4 KB), which improve performance, and the picture changes: ESXi cannot deduplicate such large blocks, so it first has to break them into 4 KB pages and only then deduplicate them. All these operations cost resources and time, so the usefulness of these technologies is not so clear-cut.
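The large-page argument is easier to see in a toy simulation of content-based page sharing, the idea behind Transparent Page Sharing: hash each page's contents and keep only one physical copy of identical pages. Guest memory here is modeled as zero-filled with a single unique byte in each 2 MB region. Backed by 2 MB pages, that one byte makes every large page unique, so nothing can be shared until the pages are broken into 4 KB pieces. This is a simplified model of the principle, not how ESXi actually scans memory.

```python
import hashlib

SMALL = 4 * 1024          # 4 KB page
LARGE = 2 * 1024 * 1024   # 2 MB large page


def pages(memory: bytes, page_size: int) -> list:
    """Split a memory image into fixed-size pages."""
    return [memory[i:i + page_size] for i in range(0, len(memory), page_size)]


def shareable_bytes(vms: list, page_size: int) -> int:
    """Bytes saved if every distinct page content is stored only once.

    Assumes every memory image is a whole multiple of page_size.
    """
    seen = set()
    total = 0
    for memory in vms:
        for page in pages(memory, page_size):
            total += len(page)
            seen.add(hashlib.sha256(page).digest())
    return total - len(seen) * page_size


def make_vm(tag: int, size: int = 2 * LARGE) -> bytes:
    """Hypothetical guest: zero-filled memory whose first byte in each 2 MB
    region holds a value unique to this VM and region."""
    mem = bytearray(size)
    for i, region in enumerate(range(0, size, LARGE)):
        mem[region] = tag + i
    return bytes(mem)


vms = [make_vm(1), make_vm(3)]
print(shareable_bytes(vms, LARGE))  # 0: no two 2 MB pages are identical
print(shareable_bytes(vms, SMALL))  # 8368128: all but 5 distinct 4 KB pages are shared
```

Sharing only appears after the split to 4 KB, and the split itself (plus hashing and comparing) is the work the paragraph above says costs resources and time.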

Security and network

It seems we have covered performance and scalability; now it is time to compare the security and isolation capabilities of the virtualized environments.

The following points deserve attention here:

1) Single Root I/O Virtualization (SR-IOV) is essentially a mechanism for passing a physical adapter through into a virtual machine, but in my opinion it is not quite fair to compare VMware and Microsoft technologies here. DirectPath I/O, the technology VMware uses, really does pass through the whole PCI Express device, but the list of compatible devices is extremely short, and you effectively tie your VM to the host, losing in the process almost all the benefits of memory optimization, high availability, network traffic monitoring and other goodies. The exception may be Cisco UCS-based platforms, and even then only in certain configurations. Citrix is in exactly the same situation: despite SR-IOV support, a VM with this feature enabled loses all VM placement options and the ability to configure VM access lists (after all, the card bypasses the virtual switch), so its use is extremely limited. Microsoft, by contrast, virtualizes and profiles such an adapter: the VMs can remain highly available and can migrate from host to host, but this requires SR-IOV-capable cards in every host; the SR-IOV adapter settings then move with the VM to the new host.

2) Disk encryption and IPsec offload. The picture here is unequivocal: nobody except Hyper-V works with these mechanisms. Naturally, IPsec offload requires a card with hardware support for it, but if you run VMs that make heavy use of IPsec, you will benefit from this technology. Disk encryption is implemented with BitLocker, but its use in our country is strictly limited by regulations. In general the idea is interesting (encrypting the storage where the VMs reside to prevent data leakage), but unfortunately it will not become widespread until the legal and regulatory issues are resolved.

3) Virtual Machine Queue (VMQ): a technology that optimizes the handling of VM traffic at the level of the host and its network interfaces. Honestly, it is hard for me to judge how much better Dynamic VMQ (D-VMQ) is than plain VMQ, since I have not had the chance to compare them in practice. It is claimed, however, that only Hyper-V has D-VMQ. Well, so be it, that is probably a good thing, but I will leave this point without comment, because for me personally there is no big difference beyond the letters. I would be glad if someone could shed light on the difference and on the effect of the "dynamism" of the notorious VMQ.
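For anyone puzzling over the same question: as far as I understand it, with plain VMQ the NIC sorts incoming traffic into per-VM hardware queues by destination MAC address, and each queue's processing stays bound to a fixed CPU core; Dynamic VMQ lets the host move queues between cores as load changes, spreading busy queues out and packing idle ones onto fewer cores. The toy model below contrasts a fixed round-robin binding with a greedy longest-first rebalancing. The load figures and the greedy policy are illustrative assumptions, not the actual Windows algorithm.

```python
def static_vmq(queue_loads: list, cores: int) -> list:
    """Plain VMQ (toy model): queue i is bound to core i % cores for good."""
    per_core = [0] * cores
    for i, load in enumerate(queue_loads):
        per_core[i % cores] += load
    return per_core


def dynamic_vmq(queue_loads: list, cores: int) -> list:
    """Dynamic VMQ (toy model): reassign queues greedily, busiest first,
    each to the currently least-loaded core."""
    per_core = [0] * cores
    for load in sorted(queue_loads, reverse=True):
        target = per_core.index(min(per_core))
        per_core[target] += load
    return per_core


# Hypothetical receive load of 8 VM queues, in % of one core, on a 4-core host.
loads = [90, 80, 5, 5, 70, 5, 5, 5]
print(static_vmq(loads, 4))   # [160, 85, 10, 10]: core 0 is overloaded
print(dynamic_vmq(loads, 4))  # [90, 80, 70, 25]: no core above 100 %
```

In this model the static binding happens to put two busy queues on the same core, and nothing can fix that at run time; the dynamic variant simply reshuffles.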

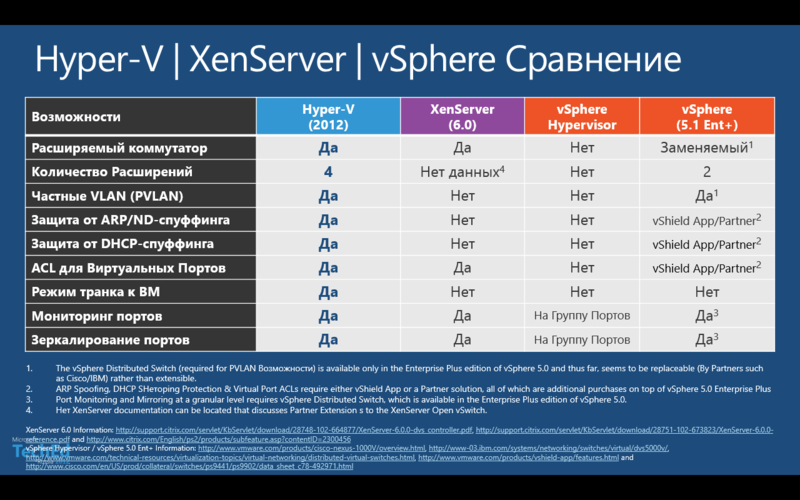

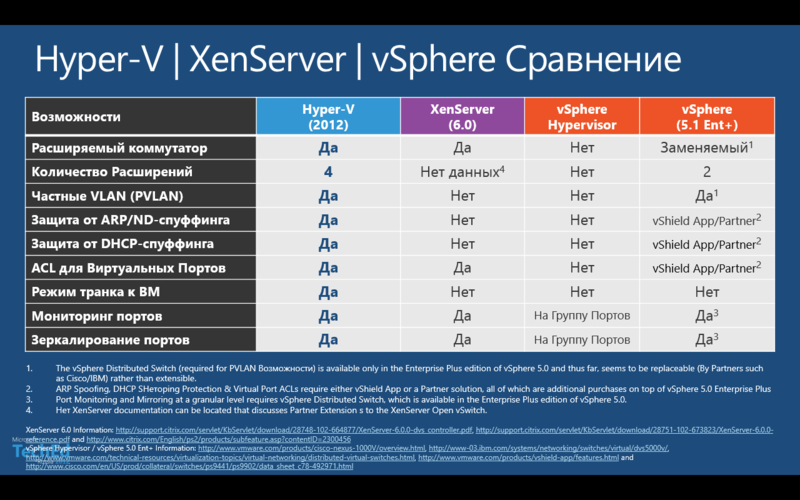

Moving on, let's look at the capabilities of the virtual switches our hypervisors offer:

1) Extensible switches. First we need to agree on what "extensibility" means. In my understanding, it is the ability to add to and extend the functionality of an existing solution while keeping it intact. In this sense, Hyper-V and XenServer follow exactly this ideology. With VMware, however, I would call the switch not "extensible" but "replaceable": once you install, say, a Cisco Nexus 1000V, it replaces the vDS rather than extending its capabilities.

2) Protection against spoofing and unauthorized packets. The situation here is simple: only Hyper-V gives you protection against unwanted packets and attacks for free. Citrix, unfortunately, has no such functionality, while with VMware you will have to purchase vShield App or a solution from third-party partners.
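The "extensible versus replaceable" distinction is really an architectural one. In an extensible design (the Hyper-V Extensible Switch, for example, accepts capture, filtering and forwarding extensions), third-party modules are chained into the existing switch's packet path; in a replaceable design the whole switch is swapped for another one. Here is a minimal sketch of the extensible variant; the class, the extensions and the dict-based frame are invented for illustration.

```python
from typing import Callable, Optional

Frame = dict  # toy frame: {"src": ..., "dst": ..., "vlan": ...}
Extension = Callable[[Frame], Optional[Frame]]  # return None to drop the frame


class ExtensibleSwitch:
    """Toy virtual switch: built-in forwarding plus a chain of pluggable extensions."""

    def __init__(self):
        self.extensions = []   # list of Extension callables, run in order
        self.mac_table = {}    # MAC address -> port name

    def add_extension(self, ext: Extension) -> None:
        # The extension joins the pipeline; the switch itself stays in place.
        self.extensions.append(ext)

    def receive(self, frame: Frame) -> Optional[str]:
        for ext in self.extensions:
            frame = ext(frame)
            if frame is None:
                return None    # an extension dropped the frame
        # Built-in forwarding: known MAC -> its port, unknown -> flood.
        return self.mac_table.get(frame["dst"], "flood")


def capture(log: list) -> Extension:
    """Hypothetical monitoring extension: records the source, never modifies the frame."""
    def ext(frame: Frame) -> Frame:
        log.append(frame["src"])
        return frame
    return ext


def block_vlan(vlan: int) -> Extension:
    """Hypothetical filtering extension: drops frames on one VLAN."""
    return lambda frame: None if frame.get("vlan") == vlan else frame


seen = []
sw = ExtensibleSwitch()
sw.mac_table["00:15:5d:00:00:02"] = "vm2"
sw.add_extension(capture(seen))    # monitoring extension
sw.add_extension(block_vlan(666))  # filtering extension
frame = {"src": "00:15:5d:00:00:01", "dst": "00:15:5d:00:00:02", "vlan": 10}
print(sw.receive(frame))                   # vm2
print(sw.receive({**frame, "vlan": 666}))  # None: dropped by the filter
print(len(seen))                           # 2: capture ran before the filter
```

In the "replaceable" model, by contrast, you would not add to `extensions` at all; you would throw away `ExtensibleSwitch` and plug in a different switch object.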

Among the other features, it is worth noting that everyone offers access control on the virtual switch port except the free ESXi, i.e. with VMware this again comes only at extra cost. Finally, I would like to mention trunk mode directly to the VM, which only Hyper-V has.
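The per-port protections discussed in this part (MAC spoofing protection, a guard against rogue DHCP servers, port ACLs and trunk mode with a list of allowed VLANs) all come down to checks the switch performs on each frame a VM sends. Below is a minimal sketch of such a check; `PortPolicy`, its fields and the dict-based frame are hypothetical, and real switches do considerably more.

```python
from dataclasses import dataclass, field
from ipaddress import ip_address, ip_network


@dataclass
class PortPolicy:
    """Hypothetical settings of one virtual switch port."""
    assigned_mac: str
    allow_mac_spoofing: bool = False
    dhcp_guard: bool = True
    denied_remote_nets: list = field(default_factory=list)  # port ACL (deny list)
    trunk_vlans: set = field(default_factory=set)           # empty set = access port
    access_vlan: int = 0


def allow_egress(policy: PortPolicy, frame: dict) -> bool:
    """Decide whether a frame sent by the VM may enter the virtual switch."""
    # 1. MAC spoofing protection: the source MAC must be the one assigned to the vNIC.
    if not policy.allow_mac_spoofing and frame["src_mac"] != policy.assigned_mac:
        return False
    # 2. DHCP guard: the VM must not answer as a DHCP server (UDP source port 67).
    if policy.dhcp_guard and frame.get("udp_src") == 67:
        return False
    # 3. Port ACL: drop traffic to denied remote networks.
    dst = ip_address(frame["dst_ip"])
    if any(dst in ip_network(net) for net in policy.denied_remote_nets):
        return False
    # 4. VLAN check: trunk ports accept only listed VLANs, access ports only their own.
    #    (Untagged frames are treated as the access VLAN in this toy model.)
    vlan = frame.get("vlan", policy.access_vlan)
    allowed = policy.trunk_vlans or {policy.access_vlan}
    return vlan in allowed


port = PortPolicy(assigned_mac="00:15:5d:00:00:01",
                  denied_remote_nets=["10.0.99.0/24"],
                  trunk_vlans={10, 20})
ok = {"src_mac": "00:15:5d:00:00:01", "dst_ip": "10.0.1.5", "vlan": 10}
print(allow_egress(port, ok))                                      # True
print(allow_egress(port, {**ok, "src_mac": "00:15:5d:00:00:99"}))  # False: spoofed MAC
print(allow_egress(port, {**ok, "udp_src": 67}))                   # False: rogue DHCP server
print(allow_egress(port, {**ok, "dst_ip": "10.0.99.7"}))           # False: port ACL
print(allow_egress(port, {**ok, "vlan": 30}))                      # False: VLAN not trunked
```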

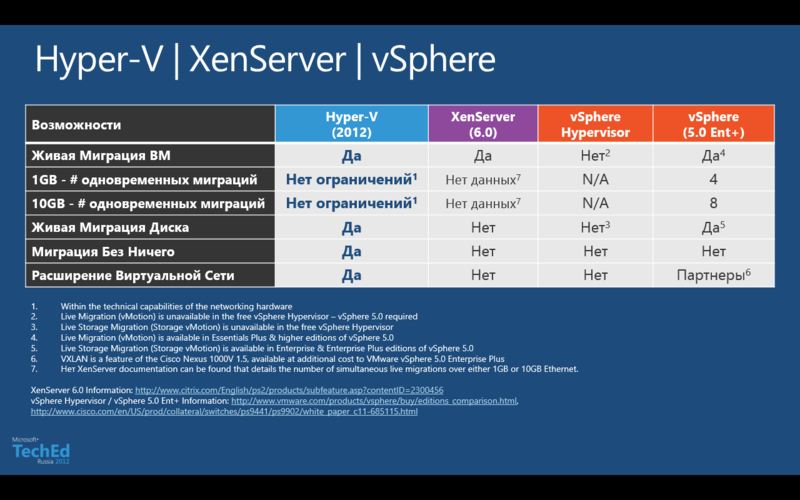

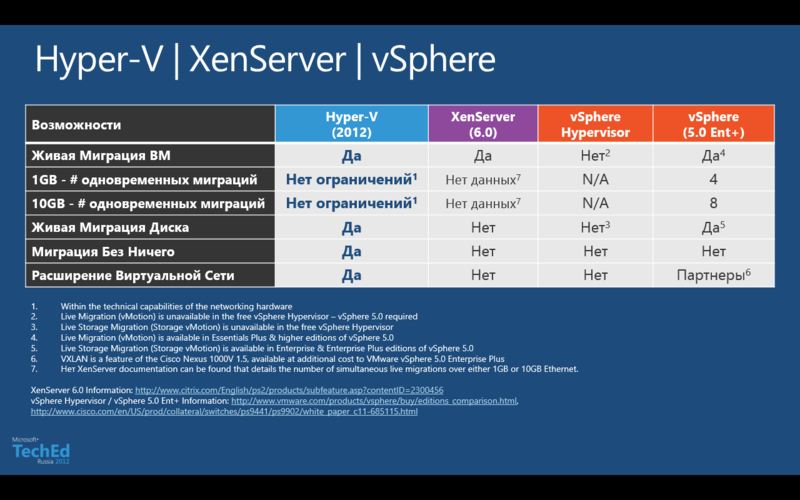

Infrastructure flexibility

Now let's compare VM migration mechanisms and VXLAN support.

1) Live migration is supported by everyone except the free ESXi. The number of simultaneous migrations is a very delicate parameter. In VMware it is limited technically at the hypervisor level, while in Hyper-V it is limited only by common sense and by the capabilities of your physical infrastructure. Citrix has no clear official figures on the number of supported simultaneous migrations. Although Hyper-V's approach looks more attractive, it is also rather alarming: it is of course very cool that you can migrate even 1000 VMs at once, but that will simply bring your network to its knees. This can happen by accident, but if you have your head on your shoulders, you will set the appropriate limit yourself. What saves the day is that the default number of simultaneous migrations is 2, so be careful. As for live migration of VM storage, only Hyper-V and vSphere Enterprise Plus can boast of it. And migrating from host to host (or anywhere else) without shared storage is possible only with Hyper-V; it is a pity that such an important and useful function is available in only one hypervisor.

2) VXLAN is a mechanism for abstracting and isolating virtual networks while allowing them to scale dynamically. It is essential for cloud environments and very large, heavily virtualized data centers. Today only Hyper-V supports this out of the box (strictly speaking, Hyper-V Network Virtualization in Windows Server 2012 uses NVGRE encapsulation, a sibling of VXLAN); VMware can implement it too, but only for an extra fee, since you will have to buy a third-party extension from Cisco.
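The "number of simultaneous migrations" knob discussed above is essentially a concurrency limit on a shared link. On Hyper-V in Windows Server 2012 it is a host setting (exposed, as far as I know, through the `-MaximumVirtualMachineMigrations` parameter of `Set-VMHost`). The Python sketch below only models the idea with a semaphore: migrations beyond the limit wait their turn instead of all competing for the network at once. The VM names and the simulated duration are made up.

```python
import asyncio


async def migrate(vm: str, limit: asyncio.Semaphore, stats: dict) -> None:
    """Pretend to live-migrate one VM while holding a slot in the limit."""
    async with limit:
        stats["active"] += 1
        stats["peak"] = max(stats["peak"], stats["active"])
        await asyncio.sleep(0.01)  # stands in for copying memory over the network
        stats["active"] -= 1
        stats["done"].append(vm)


async def migrate_all(vms: list, max_simultaneous: int = 2) -> dict:
    """Migrate every VM, never running more than `max_simultaneous` at once."""
    limit = asyncio.Semaphore(max_simultaneous)
    stats = {"active": 0, "peak": 0, "done": []}
    await asyncio.gather(*(migrate(vm, limit, stats) for vm in vms))
    return stats


stats = asyncio.run(migrate_all([f"vm{i:02d}" for i in range(10)], max_simultaneous=2))
print(stats["peak"], len(stats["done"]))  # 2 10
```

All ten migrations are queued at once, yet at most two ever run concurrently; raising `max_simultaneous` to 1000 would remove the protection, which is exactly the risk described above.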

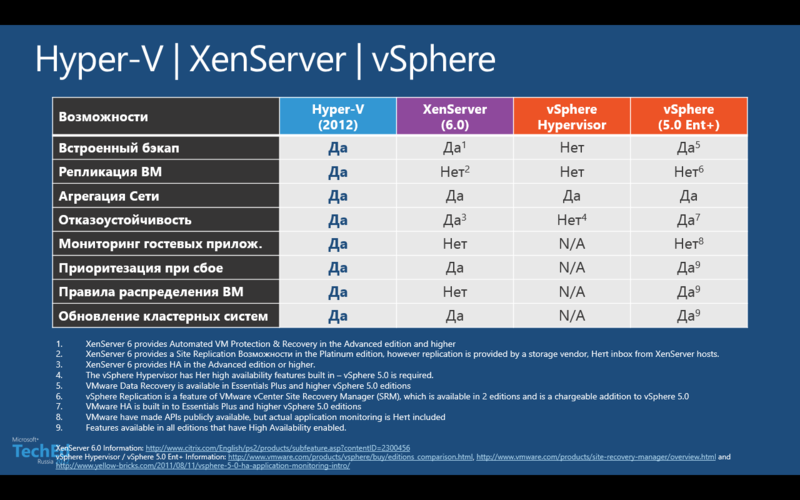

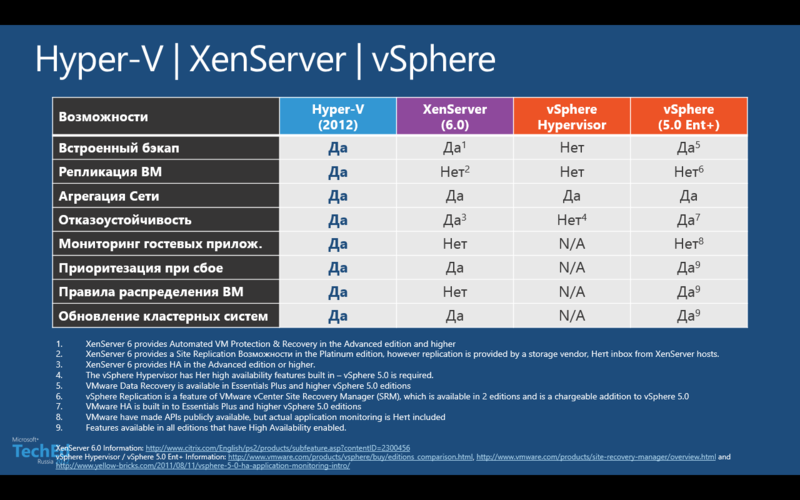

Fault tolerance and business continuity

Now let's look at the failover and disaster recovery mechanisms available in these virtualization platforms.

1) Built-in backup. This is available for free only in Hyper-V; XenServer offers it starting with the Advanced edition, and VMware starting with Essentials Plus. In my opinion, it is a bit strange not to build this in and give it away for free. Imagine: you have tested virtualization, deployed your VMs, and then, bang, that's it, game over!!! That doesn't look very cool, I think; if you really want to push your platform into the market, do it with care for your customers (smile).

2) VM replication is a solution for disaster recovery scenarios where you need to be able to bring up current VM instances in another data center. VMware has a separate product for this, Site Recovery Manager (SRM), and it costs money. Citrix is in the same situation: buy the Platinum Edition and you get this functionality. In Hyper-V 3.0 it is free.

3) Guest application monitoring: a mechanism that monitors the state of an application or service inside a VM and, based on that data, can take action on the VM, for example restart it if necessary. Hyper-V has built-in functionality both for collecting these parameters and for acting on them; VMware has an API, but no ready-made solution that uses it; and Citrix has nothing in this area.

4) VM placement rules: a mechanism that distributes VMs across a cluster so that certain VMs never end up on the same host or, conversely, are never separated. Citrix cannot pull off such tricks, VMware can do it in all paid editions that include HA, and, as always, in Hyper-V it costs 0 rubles.
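Rules of the "never together" and "always together" kind can be sketched as a greedy placement: treat each "always together" group as one indivisible bundle, and put each bundle on the host with the most free capacity that does not already run a VM it must be kept apart from. This is an illustrative algorithm with made-up host and VM names and memory-only capacity, not the placement logic of any of the three products.

```python
def _violates_anti_affinity(bundle, host, placement, anti_affinity):
    """True if putting `bundle` on `host` would co-locate VMs that must be apart."""
    for group in anti_affinity:
        members = [vm for vm in bundle if vm in group]
        if not members:
            continue
        if len(members) > 1:
            return True  # the bundle itself contains two VMs that must be apart
        if any(placement.get(other) == host for other in group if other not in bundle):
            return True
    return False


def place(vms, hosts, anti_affinity=(), affinity=()):
    """Greedy placement honoring 'never together' and 'always together' rules.

    vms:   dict of VM name -> memory demand (GB)
    hosts: dict of host name -> free capacity (GB)
    anti_affinity: groups whose members must run on different hosts
    affinity:      groups whose members must run on the same host
    Returns a dict VM -> host, or raises ValueError if the rules cannot be met.
    """
    grouped = {vm for group in affinity for vm in group}
    bundles = [list(group) for group in affinity] + [[vm] for vm in vms if vm not in grouped]
    free = dict(hosts)
    placement = {}
    # Place the largest bundles first so they are not squeezed out later.
    for bundle in sorted(bundles, key=lambda b: sum(vms[v] for v in b), reverse=True):
        need = sum(vms[v] for v in bundle)
        candidates = [h for h in free
                      if free[h] >= need
                      and not _violates_anti_affinity(bundle, h, placement, anti_affinity)]
        if not candidates:
            raise ValueError(f"no host can take {bundle}")
        host = max(candidates, key=lambda h: free[h])  # spread load: most free capacity
        free[host] -= need
        for vm in bundle:
            placement[vm] = host
    return placement


vms = {"dc1": 4, "dc2": 4, "sql": 32, "app": 8, "web1": 8, "web2": 8}
hosts = {"h1": 64, "h2": 64, "h3": 64}
plan = place(vms, hosts,
             anti_affinity=[("dc1", "dc2"), ("web1", "web2")],
             affinity=[("sql", "app")])
print(plan)
```

The two domain controllers and the two web servers land on different hosts, while the database and its application server stay together.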

Otherwise the platforms are more or less equal in functionality, with the exception of the free ESXi, of course.

During the IT Camps across Russia and in Moscow, I talked to very different audiences, so I want to address a few more points right away.

1) "When will Hyper-V learn to pass USB keys from the host through into a VM?" Most likely never. There are architectural limitations of the hypervisor here, as well as security and service availability considerations.

2) "VMware has Fault Tolerance technology; when will Hyper-V get something like it?" The answer is similar to the previous one, but... I personally consider this technology crippled. Judge for yourself: you have turned it on, but what will you do with a virtual machine that can have only 1 processor and no more than 4 GB of RAM? Put a domain controller there?! Holy, holy, holy, begone! You should always have at least 2 domain controllers anyway, and FT will not save you from a crash at the guest OS or guest application level: if a BSOD comes, it comes on both instances of the VM. Raising the limits on processors and RAM has been promised for about three releases now, if not more, and nothing has moved.

3) "VMware is better adapted to Linux environments; what about Hyper-V?" Yes, VMware indeed supports Linux guests much better, that's a fact. But Hyper-V is not standing still: the number of supported distributions keeps growing, and so do the capabilities of the Integration Services.

So, let's sum up.

In terms of functionality, all the hypervisors are roughly comparable, but I would single out VMware and Hyper-V as the two leaders. Comparing these two comes down to money and functionality, and to your IT infrastructure: which do you have more of, Linux or Windows? At VMware all the tasty features cost money; at Microsoft they do not. If your environment is built on Microsoft, VMware will be an additional cost for you, and a serious one: licenses + support + maintenance...

If you have more Linux, VMware is probably the choice; if the environment is mixed, the question remains open.

P.S. I heard somewhere that in the latest Gartner Magic Quadrant Hyper-V is among the leaders and has overtaken vSphere, but I could not find such a picture, so no Gartner this time. If anyone has info on this, please share. I did find the Gartner quadrant from June, but that is not the one...

P.P.S. Well, I'm ready for the hellish shootout))) What to use and how is up to you, as they say; as for me, I'm waiting for the ammo!

Respectfully,

Fireman,

George A. Gadzhiev

Information Infrastructure Expert

Microsoft Corporation

Today, the topic for conversation will be (as I personally think) very relevant, if not even painful ...

Yes, yes, I decided to make a functional-economic comparison of the 2 leading hypervisor platforms - Hyper-V 3.0 and VMware ESXi 5.0 / 5.1 between themselves ... But then I decided to add XenServer 6 from Citrix to complete the picture to the comparison ...

I am already in anticipation of rockfall in my garden, but still we will keep the defense to the end - please take the appropriate position, side, camp - as it is convenient for anyone - and under the cat to start the fight ...

')

Comparison criteria

Before you start a battle for life and death, I propose to define the criteria, domains, zones within which features will be allocated and compared between platforms. So:

1) Scalability and performance

2) Security and network

3) Infrastructure flexibility

4) Fault tolerance and business continuity

Categories are set - let's get started!

Scalability and performance

For a start, I propose to take a look at the comparative table of scalability of experimental:

As the table shows, the leader here is clear and unambiguous - this is Hyper-V 3.0. And here it should be mentioned that the performance and scalability of the hypervisor from MS does not depend on its chargeability. In VMware, the situation is the opposite - depending on how much of the ever-green digital you pay, you will get as many chips.

So for comparison, VMware we have 2 columns already. XenServer is clearly neither here nor there — it somehow stuck in the middle ...

However, if you recall the numerous statements by Citrix that “we are not a company that makes virtualization decisions, but a company that makes traffic optimization solutions,” then XenServer is a tool to accomplish this task. However, the fact that Hyper-V and Citrix Xen - distant relatives - and as a result, such a difference in scale - depresses.

About VMware, the oscillating factor of their RAM licensing policy was very annoying - the so-called. vRAM - WHAT IS ACTUALLY CAN BE CALLED BY EXTENSION. Not only did it have to license the amount of RAM that virtual users consume, as well as, if necessary, an upgrade was made on this very vRAM parameter delta (because there was simply no such parameter in ESX / ESXi 4.x) it means people got money. True, with the release of 5.1, VMware changed its mind and refused such a delusional format - however, such tough pricing convulsions cannot testify to anything good ...

From the technological point of view - I personally personally expected the situation “nostrils-to-nostrils” - but no, this did not happen, and the interest a little faded ... I think the first comparison block doesn’t need much more commentary - it is quite “transparent”.

I propose to go to the next part of this comparison block - namely, to review some features that relate to performance and scalability. Consider the functional interaction with the disk subsystem, the table below:

If you look at this table, here you can note the following points:

1) Native support for 4K sectors in virtual disks is quite a critical thing for demanding applications. High-performance DBMS is usually placed on disks with a large stripe size. Support for this mechanism is officially present only in Hyper-V - I did not manage to find competing vendors in the official documentation about support for this mechanism.

2) The maximum size of the LUN connected directly to the VM - here the situation is as follows. In Citrix and VMware, this is a parameter that depends on the hypervisor itself, while in Hyper-V it is more likely on which OS is the guest OS. In the case of using Windows Server 2012 as a guest OS, it is possible to forward LUNs of more than 256 TB in size, with other hypervisors this parameter ends at 64 TB in VMware and does not rise above the mark of 15 TB in Citrix, respectively.

In terms of functionality, XenServer looks very weak - support for virtualization of the Fiber Channel should already become the norm - at least at the platform level, but the situation in this case is not a positive example. The fact that VMware’s “paid” policy of features has already become accustomed to everything, so at the level of the platform itself ESXi can do this - the only question is whether you are ready to pay so much for it? The vSphere Enterprise Plus edition is not extremely cheap, and you also need to buy support without fail.

Having support for hardware offloading data on SANs is also a very good bonus. If earlier it was an exclusive VMware feature - vStorage API for Array Integration (VAAI) , then Hyper-V 3.0 has in its arsenal similar technology - Offload Data Transfer (ODX) . Most storage vendors have either already released firmware for their systems supporting this technology, or are ready to do this soon. It’s very sad for Citrix in this particular case - XenServer doesn’t have any technologies for optimizing work with SAN systems.

Well, we figured out the disk subsystem - let's now see what the resource management mechanisms of our review participants are.

From the point of view of features, the picture in this situation is more or less even, but there are points that should be noted:

1) Data Center Bridging (DCB) - under this mysterious abbreviation hides nothing more than support for converged network adapters - Converged Network Adapter (CNA) . In other words, a converged network adapter is an adapter that simultaneously has hardware support and acceleration for operation in both conventional Ethernet Ethernet networks and SANs — that is, the idea is that the data transmission medium, the media type becomes unified (it can be optics or twisted pair), and different protocols are used on the physical channel — SMB, FCoE, iSCSI, NFS, HTTP and others — that is, in terms of management, we have the ability to dynamically reassign such adapters by redistributing them depending on the need and type of load. If you look at the table, Citrix doesn’t even have DCB support — there is some list of CNA adapters that are supported — but the official documentation for Citrix XenServer 6.0 does not contain a word about DCB support.

2) Quality of service, QoS support are essentially mechanisms for optimizing traffic and ensuring the quality of the data transmission channel. Here, everything is in principle all normal, except for the free ESXi.

3) Dynamic memory - here the story turns out extremely interesting. According to Microsoft, the memory optimization mechanisms using Dynamic Memory have been significantly reworked and allowed us to obtain optimization in working with memory - and thereby increase the density of virtual machines. Added to this is an intelligent paging file that is configured for each individual VM. Honestly, I find it difficult to judge how effective this mechanism is, but when comparing the density of placement of identical VMs on Hyper-V 2.0 and Hyper-V 3.0, the difference was almost 2 times in favor of the second (The machines were with virtual Windows Server 2008 R2). And here the question arises - VMware has a whole arsenal of features for optimizing work with memory, including the deduplication of memory pages, which works much more efficiently. But let's take a closer look at this situation. VMware has 4 memory optimization technologies - Memory Balooning, Transparent Page Sharing, Compression and Swapping. However, all 4 technologies start my work only under conditions of increased load on the resources of the host system, i.e. in their manner of action, they are reactive, rather than proactive or optimizing. If we also take into account the fact that most modern server platforms support large tables, Large Page Tables, which by default are 2 MB in size - this increases performance, the picture changes - because ESXi does not know how to deduplicate such large data blocks - it starts to break them into smaller blocks, 4 KB, but now they deduplicate them - but all these operations cost resources and time - the picture is not so unambiguous in terms of the usefulness of these technologies.

Security and network

Well, it seems that we figured out with performance and scalability - now is the time to move on to comparing the security and isolation capabilities of virtualized environments.

Here it is necessary to highlight the following points:

1) Single Root I / O Virtualization (SRIOV) is actually a mechanism for forwarding a physical adapter inside a virtual machine, however, in my opinion, it is a little incorrect to compare VMware and Microsoft technologies in this area. DirectPath I / O, the technology that VMware applies, really completely forwards the PCI Express slot, or rather the device that is in it - however, the list of devices that are compatible with this feature is extremely small, and you actually tie your VM to the host in the appendix almost all bonuses in the field of memory optimization, high availability, network traffic monitoring and other goodies. The exception, perhaps, in this situation may be platforms based on Cisco UCS - and even then - only in certain configurations. Exactly the same situation with Citirix - despite the support for SRIOV, the VM in which this feature is activated loses all possibilities in the area of VM allocation, the ability to configure VM access lists (after all, a card will go around this virtual switch) - so extremely limited. Microsoft is more likely to virtualize and profile such an adapter - because VMs can be highly available, and can migrate from host to host, but this requires the presence of maps with SRIOV support in each host — then the SRIOV adapter parameters will be transferred along with the VM to the new host.

2) Disk Encryption and IPSec Offload - the picture here is clearly unequivocal, no one except Hyper-V works with such mechanisms. Naturally, for IPSec Offload you need a card with hardware support for this technology, however, if you start a VM with intensive interaction with IPSec mechanisms, then you will benefit from this technology. As for disk encryption, this mechanism is implemented using BitLocker, but its application in our country is strongly limited by regulations. In general, the mechanism is interesting - to encrypt the space where the VMs are located in order to prevent data leakage, but, unfortunately, this mechanism will not receive wide distribution until legal and regulatory issues are resolved.

3) Virtual Machine Queue (VMQ) - Technology optimizing work with VM traffic at the host level and network interfaces. Here, for ches_noku, it is difficult to judge how much DMVq is better than VMq - for in practice I have not had the opportunity to compare these technologies. However, it is stated that only Hyper-V has DMVq. Well, there is so, probably it is good, but in this case I will leave this point without any comments - because for me personally there is no strong difference, except for letters - I will be glad if someone sheds light on the difference and the effect of the dynamism of the notorious VMq.

Let's continue further - now I propose to take a look at the capabilities of virtual switches that our hypervisors have in their arsenal:

1) Expandable switches - here it is necessary to immediately understand what is meant by "extensibility". In my understanding, this means an opportunity to add, expand the functionality of an existing solution, while maintaining its integrity. In this case, Hyper-V and XenServer are precisely this ideology and correspond. But with VMware, I would call their switch not “extensible”, but “replaceable” - because once, say, put a Cisco 1000V Nexus , it replaces the vDS, rather than expanding its capabilities.

2) Protection against spoofing and unauthorized packages - the situation here is simple. Free to take advantage of protection against unwanted packages and attacks can only be in Hyper-V. Citrix, unfortunately, doesn’t have such functionality, while with VMware you will have to purchase the vShield App solution either from third-party partners.

Of the other features, it is probably worth noting that everyone has control over access to the virtual network port, except for the free ESXi - i.e. with VMware, this can be applied again only at an additional cost. And lastly, I would like to mention the direct trunk mode to the VM, which only Hyper-V has.

Infrastructure flexibility

Now let's consider in comparison the mechanisms of VM migration, as well as the availability of support for VXLAN .

1) Live migration - it is supported by all but free ESXi. The number of simultaneous migrations is a very thin parameter. In VMware, it is limited technically at the hypervisor level, and Hyper-V at the level of robust logic and physical infrastructure capabilities. Citrix has no clear official data on the number of simultaneous migrations supported. Although Hyper-V has this option and looks more attractive, but this approach is very alarming - of course, very cool, that at least 1000 VMs can be migrated at the same time - but it will just hang your network. Unknowingly, this can happen, but if you have a friendship with your head, then you set the desired parameter yourself - it is saved that by default the number of simultaneous migrations is 2 nd - so be careful. As for the live migration of virtual storage VM - this can only boast of Hyper-V and vSphere Enterprise Plus. But to migrate from host to host (or where else) without shared storage is possible only by Hyper-V - although the function is really important and useful, it is a pity that only one hypervisor has this mechanism.

2) VXLAN is a mechanism for abstracting and isolating virtual networks that can be expanded dynamically. It is essential for large-scale cloud environments and very large data centers with extensive virtualization. Today only Hyper-V supports this mechanism out of the box; VMware can implement it, but only at additional cost, because you will have to buy a third-party extension from Cisco.
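What makes VXLAN-style isolation scale is the 24-bit segment ID (VNI) carried in front of every encapsulated Ethernet frame, which allows about 16.7 million segments versus roughly 4,000 VLAN IDs. The sketch below builds and parses the 8-byte header described in RFC 7348; it shows the wire format only and says nothing about how any particular hypervisor implements it. The function names are illustrative.

```python
import struct

VXLAN_FLAG_VALID_VNI = 0x08  # "I" flag: the VNI field is valid (RFC 7348)
VXLAN_UDP_PORT = 4789        # IANA-assigned UDP destination port for VXLAN


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit segment ID (VNI)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # 1 flags byte + 3 reserved bytes, then the VNI in the top 24 bits of a
    # 32-bit word whose low byte is reserved
    return struct.pack("!B3xI", VXLAN_FLAG_VALID_VNI, vni << 8)


def vxlan_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header."""
    flags, word = struct.unpack("!B3xI", header[:8])
    if not flags & VXLAN_FLAG_VALID_VNI:
        raise ValueError("VNI flag not set")
    return word >> 8


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    # The result becomes the payload of an outer UDP datagram sent to VXLAN_UDP_PORT
    return vxlan_header(vni) + inner_frame
```

Two tenants can reuse the same inner MAC and IP addresses because the receiving endpoint delivers each frame only into the segment whose VNI it carries.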

Fault tolerance and business continuity

Now let's look at the failover and disaster recovery mechanisms available in each virtualization platform.

1) Built-in backup is available for free only in Hyper-V; in XenServer it starts with the Advanced edition, and in VMware with Essentials Plus. In my opinion, it is a bit strange not to build this mechanism in and give it away for free. Imagine the situation: you have tested virtualization, you have deployed your VMs, and then, crash, that's it, the end!!! I don't think that looks very good. If you really want to push your platform into the market, do it with customer care (smile).

2) VM replication is a solution for disaster recovery scenarios, when you need to be able to bring up current copies of your VMs in another data center. VMware has a separate, paid product for this: Site Recovery Manager (SRM). Citrix is in the same situation: you get this functionality only with the Platinum Edition. In Hyper-V 3.0 it is free.
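The core idea of asynchronous VM replication can be shown with a toy model: the primary site records which disk blocks changed, and each replication cycle ships only those blocks to the replica. This is a minimal sketch under that assumption; `PrimaryDisk`, `ReplicaDisk` and `replication_cycle` are hypothetical and do not reflect the log format or scheduling of Hyper-V Replica, SRM or XenServer.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class PrimaryDisk:
    blocks: Dict[int, bytes] = field(default_factory=dict)
    dirty: Set[int] = field(default_factory=set)

    def write(self, block: int, data: bytes) -> None:
        self.blocks[block] = data
        self.dirty.add(block)  # remember what changed since the last cycle

    def collect_changes(self) -> Dict[int, bytes]:
        changes = {b: self.blocks[b] for b in self.dirty}
        self.dirty.clear()
        return changes


@dataclass
class ReplicaDisk:
    blocks: Dict[int, bytes] = field(default_factory=dict)

    def apply(self, changes: Dict[int, bytes]) -> None:
        self.blocks.update(changes)


def replication_cycle(primary: PrimaryDisk, replica: ReplicaDisk) -> int:
    """Ship changed blocks to the replica; return how many were sent."""
    changes = primary.collect_changes()
    replica.apply(changes)  # in reality this crosses the WAN to the second data center
    return len(changes)
```

Because only changed blocks travel, a block rewritten several times between cycles is sent once; the price is that the replica lags the primary by up to one cycle.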

3) Guest application monitoring is a mechanism that watches the state of an application or service inside a VM and, based on that data, can act on the VM, for example restart it if necessary. Hyper-V has built-in functionality both for collecting these parameters and for taking action; VMware has an API but no ready-made solution that uses it; Citrix has nothing of the kind.
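The monitoring loop described above boils down to "probe, count consecutive failures, act past a threshold". Here is a minimal sketch assuming hypothetical `check` and `restart` callbacks supplied by the caller; it is not how any of the three products wires this into its cluster service.

```python
from typing import Callable


def monitor(check: Callable[[], bool], restart: Callable[[], None],
            probes: int, max_failures: int = 3) -> int:
    """Run `probes` health checks and restart the VM after `max_failures`
    consecutive failures. Returns how many restarts were triggered."""
    failures = 0
    restarts = 0
    for _ in range(probes):
        if check():
            failures = 0  # a healthy probe resets the failure streak
            continue
        failures += 1
        if failures >= max_failures:
            restart()  # e.g. ask the host to restart the VM
            restarts += 1
            failures = 0
    return restarts
```

Requiring several consecutive failures keeps a single slow probe from bouncing a healthy VM.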

4) VM distribution rules let you place VMs across a cluster so that certain VMs never land on the same host or, conversely, are never separated. Citrix cannot pull off such tricks; VMware can do it in all paid editions that include HA; and, as always, in Hyper-V it costs 0 rubles.
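Affinity ("never split up") and anti-affinity ("never meet on one host") rules can be illustrated with a greedy placement routine. This is a minimal sketch, assuming affinity groups do not overlap and ignoring host capacity; `place` and its parameters are hypothetical and not any vendor's placement algorithm. Affinity groups become single placement units, and a host is rejected if putting a unit there would put two members of an anti-affinity rule together.

```python
from typing import Dict, List, Set


def place(vms: List[str], hosts: List[str],
          keep_apart: List[Set[str]], keep_together: List[Set[str]]) -> Dict[str, str]:
    """Greedy placement honoring affinity (keep_together) and anti-affinity (keep_apart)."""
    # Each affinity group is one unit; every other VM is a unit of its own
    units: List[Set[str]] = []
    seen: Set[str] = set()
    for group in keep_together:
        units.append(set(group))
        seen |= group
    units += [{vm} for vm in vms if vm not in seen]

    placement: Dict[str, str] = {}
    load = {h: 0 for h in hosts}
    for unit in units:
        # Try the least-loaded hosts first
        for host in sorted(hosts, key=lambda h: load[h]):
            residents = {vm for vm, h in placement.items() if h == host}
            # Reject the host if any anti-affinity rule would have two members on it
            if any(len(rule & (residents | unit)) > 1 for rule in keep_apart):
                continue
            for vm in unit:
                placement[vm] = host
            load[host] += len(unit)
            break
        else:
            raise RuntimeError(f"no host satisfies the rules for {sorted(unit)}")
    return placement
```

A typical use is keeping two domain controllers apart while keeping a web server next to its database: `place(["dc1", "dc2", "web", "db"], ["h1", "h2"], [{"dc1", "dc2"}], [{"web", "db"}])`.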

The rest of the functionality is more or less the same across the platforms, with the exception of ESXi, of course.

Miscellaneous

During the IT Camps across Russia and in Moscow, I talked to very different audiences, so I want to address a few more points right away.

1) “When will Hyper-V learn to pass USB keys from the host through to a VM?” Most likely never. There are architectural limitations in the hypervisor that prevent it, as well as security and service-availability considerations.

2) “VMware has Fault Tolerance technology; when will Hyper-V get something like it?” The answer is similar to the previous one, but... Personally, I consider this technology crippled. Judge for yourself: you've turned it on, but what will you run in a virtual machine limited to one processor and no more than 4 GB of RAM? A domain controller!? Heaven forbid! You should always have at least two domain controllers anyway, and FT will not save you from a failure at the guest OS or guest application level: if a BSOD comes, it comes on both instances of the VM. VMware has been promising to raise the CPU and RAM limits for three releases now, if not more, and things are still where they were.

3) “VMware is better adapted to Linux environments; what about Hyper-V?” Yes, VMware does support Linux guests much better; that's a fact. But Hyper-V is not standing still: the number of supported distributions keeps growing, and so do the capabilities of the integration services.

Conclusion

So, let's sum up.

In terms of functionality, all the hypervisors are roughly comparable, but I would single out VMware and Hyper-V as the two leaders. Between those two, it comes down to money, functionality, and your IT infrastructure: what do you have more of, Linux or Windows? With VMware, all the tasty features cost money; with Microsoft, they don't. If your environment is built on Microsoft, VMware will be an additional and serious expense for you: licenses + support + maintenance...

If you run mostly Linux, VMware is probably the way to go; if the environment is mixed, the question remains open.

PS> I heard somewhere that in the latest Gartner Magic Quadrant Hyper-V is among the leaders and has overtaken vSphere, but I couldn't find that picture, so this time there's no Gartner. If anyone has info on this, please share. I found the Gartner quadrant from June, but that's not the one...

PPS> Well, I'm ready for the hellish shooting))) What to use and how is up to you, as they say, and I'm waiting for the ammo!

Respectfully,

Fireman,

George A. Gadzhiev

Information Infrastructure Expert

Microsoft Corporation

Source: https://habr.com/ru/post/161267/
