Life in the era of "dark" silicon. Part 2

Other parts: Part 1, Part 3.

This post continues the series "Life in the era of 'dark' silicon". The previous part explained what "dark" silicon is and why it appeared, and covered two of the four main approaches that allow microelectronics to flourish in the era of "dark" silicon. It discussed the role of new discoveries in manufacturing technology, how parallelism can improve energy efficiency, and why shrinking the processor die area seems unlikely. This time the next approach is on the agenda.

"The Dim Horseman", or energy and temperature management

“We will fill the chip with homogeneous cores

that would exceed the power budget

but we will underclock them (spatial dimming),

or use them all in bursts (temporal dimming)

... “dim silicon”.


Since shrinking the die area seems unlikely, let us consider how to use the "dark" areas of silicon effectively. Designers face a choice here: general-purpose or special-purpose logic? This time we consider general-purpose logic that is active only a small fraction of the time and that can serve a wide range of tasks. Logic that runs at reduced frequency most of the time is called "dim" silicon. [1]

Let us look at several design techniques that rely on "dim" silicon.

Near-Threshold Voltage processors. One recently emerged approach is to run logic at a reduced supply voltage close to the transistor threshold voltage (Near-Threshold Voltage, NTV) [2]. Logic operating in this mode loses absolute performance but delivers better performance per unit of power consumed.

In the previous part this idea was illustrated with a single comparator. As for wider use, NTV implementations of SIMD processors have recently attracted attention [3]. SIMD is the form of parallelism best suited to this approach. Multi-core [4] and x86 (IA-32) [5] NTV processors have also been studied.

The performance of NTV processors drops faster than the energy spent per operation. For example, an 8x drop in performance comes with a 40x reduction in total power consumption, which is only a 5x reduction in energy per operation. The lost performance can be recovered by using more processors in parallel, but this works only under perfect parallelization, which fits SIMD better than other architectures.

Returning to Moore's law: NTV can offer a 5x performance increase at the same power consumption, but at the cost of 40 times more area (about eleven process generations).
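The figures quoted above follow from simple ratios, and a short Python sketch makes the arithmetic explicit. The function name and parameters are illustrative. The growth factor of 1.4x per generation is an assumption that is not stated at this point in the text; it is used here only because it roughly reproduces the "about eleven generations" figure.

```python
import math

def ntv_scaling(perf_drop=8.0, power_drop=40.0, growth_per_gen=1.4):
    """Derive the NTV trade-off figures from the two measured ratios.

    growth_per_gen is an assumed per-generation growth of spare area.
    """
    # Energy per operation is power divided by throughput,
    # so the saving is the ratio of the two factors.
    energy_per_op_gain = power_drop / perf_drop
    # At a fixed power budget we can afford power_drop times more cores.
    cores = power_drop
    # Each core is perf_drop times slower; assumes perfect parallelization.
    throughput_gain = cores / perf_drop
    # Generations needed for the available area to grow by a factor of `cores`.
    generations = math.log(cores) / math.log(growth_per_gen)
    return energy_per_op_gain, throughput_gain, cores, generations

if __name__ == "__main__":
    energy, throughput, area, gens = ntv_scaling()
    print(f"energy per operation: {energy:.0f}x lower")
    print(f"throughput at equal power: {throughput:.0f}x higher")
    print(f"area: {area:.0f}x larger, about {gens:.1f} generations")
```

Note that the 5x energy saving per operation and the 5x throughput gain at constant power are the same ratio seen from two sides.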

Using NTV creates a variety of technical problems. One of them is the circuit's increased sensitivity to manufacturing process variation. Fabrication builds the chip up as many patterned layers on the silicon surface (lithography, doping). The thickness and width of the lines in the resulting layers can vary slightly, which leads to variation in the threshold voltage of the transistors. As the operating voltage is lowered, the spread of frequencies at which the transistors can operate widens. This is a serious inconvenience: SIMD usually relies on tightly synchronized parallel units, which is hard to achieve under these conditions.
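One rough way to see why a lower supply voltage widens the frequency spread is the alpha-power delay model, in which the maximum frequency scales approximately as (Vdd - Vth)^alpha / Vdd. The sketch below is only an illustration: the choice of model, alpha = 1.3, the nominal threshold of 0.30 V and the variation of 30 mV in either direction are all assumptions, and the model itself becomes inaccurate very close to the threshold.

```python
def relative_frequency(vdd, vth, alpha=1.3):
    """Alpha-power law: frequency is proportional to (Vdd - Vth)**alpha / Vdd."""
    if vdd <= vth:
        raise ValueError("this simple model only applies above threshold")
    return (vdd - vth) ** alpha / vdd

def frequency_spread(vdd, vth_nominal=0.30, vth_delta=0.03, alpha=1.3):
    """Ratio of fastest to slowest transistor when Vth varies by +/- vth_delta."""
    fast = relative_frequency(vdd, vth_nominal - vth_delta, alpha)
    slow = relative_frequency(vdd, vth_nominal + vth_delta, alpha)
    return fast / slow

if __name__ == "__main__":
    # The same threshold variation matters far more as Vdd approaches Vth.
    for vdd in (1.0, 0.6, 0.4):
        print(f"Vdd = {vdd:.1f} V: fastest/slowest = {frequency_spread(vdd):.2f}x")
```

Under these assumed numbers the same threshold variation that is barely visible at nominal voltage produces a much larger gap between the fastest and slowest transistors near threshold.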

There are other problems as well, for example the difficulty of building SRAM that operates at reduced voltages.

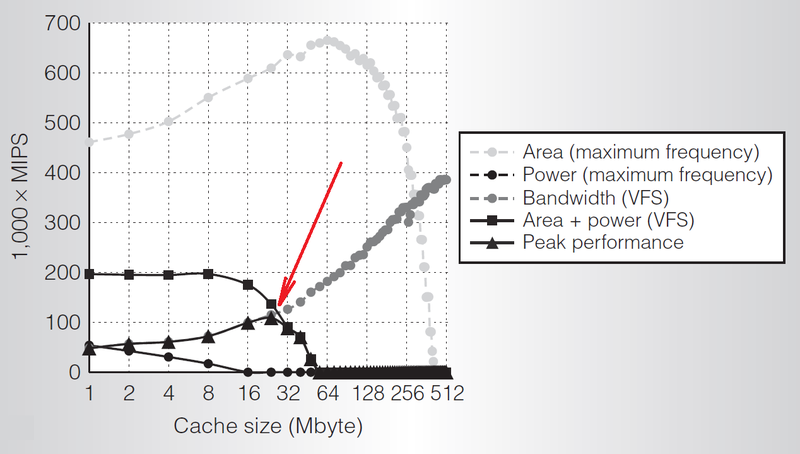

Larger caches. A frequently proposed alternative is simply to use the dark silicon area for cache. It is easy to imagine the cache growing by 1.4-2x per process generation. For workloads with frequent cache misses, a larger cache can improve both performance and energy efficiency, since off-chip memory accesses cost a lot of energy. The cache miss rate largely determines whether enlarging the cache is worthwhile. According to a recent study, the optimal cache size lies at the point where system performance stops being limited by bandwidth and becomes limited by power consumption. [6]

Performance versus cache size under various constraints

However, off-chip memory interfaces are becoming more energy efficient, and 3D memory integration speeds up access to memory. This is likely to reduce the future benefit of large caches.

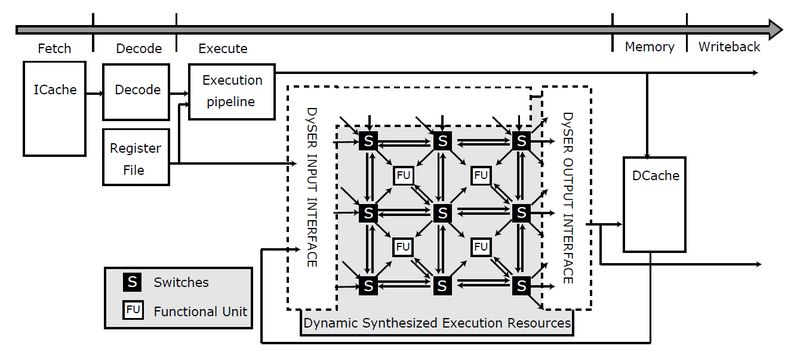

Coarse-Grained Reconfigurable Arrays. Reconfigurable logic is not a new idea, but reconfiguring at the bit level, as FPGAs do, incurs a high energy overhead. The most promising option is coarse-grained reconfigurable arrays (CGRAs). Such arrays are configured to perform a particular operation on an entire word. The idea is to arrange the logic elements in the natural order of the computation, which shortens signal paths and reduces the cost of repeatedly multiplexing communication lines. [7] In addition, the CGRA duty cycle is very low: its logic is inactive most of the time, which makes it attractive for use as dim logic.

Research on CGRAs began earlier and continues now, in the era of dark silicon. The technology's commercial success has been very limited, but new problems often force us to take a fresh look at old ideas. :)

Use of reconfigurable arrays in the processor

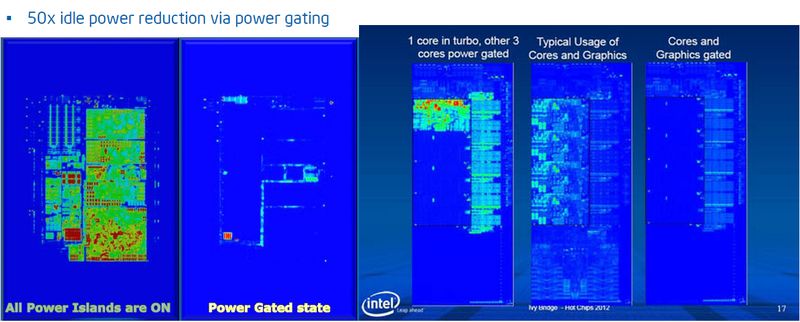

Computational Sprinting and Turbo Boost. The approaches described above rely on spatial dimming of silicon, where some area of logic is designed to always run at very low frequency or to sit idle most of the time. But there are also techniques based on temporal dimming. For example, a processor may deliver a short (<1 min) but significant performance boost by raising the clock frequency. The thermal budget is temporarily exceeded (~1.2-1.3 TDP), and the design relies on the thermal capacity of the chip and heat sink to absorb the extra heat. After some time the frequency returns to its original value, allowing the heat sink to cool down. Turbo Boost [8] uses this approach to raise performance when it is needed.
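How long such a boost can last may be estimated with a lumped thermal model, treating the chip and heat sink as one thermal resistance and one thermal capacitance. This is a minimal sketch under stated assumptions: the single RC stage, the 95 W TDP, the 0.5 K/W resistance and the 60 J/K capacitance are illustrative values, not figures from any real processor.

```python
import math

def sprint_duration(p_sprint, p_before, tdp, r_th, c_th):
    """Seconds the chip can hold p_sprint before hitting the TDP temperature.

    Assumes the chip starts in steady state at p_before (p_before <= tdp)
    and that the temperature limit equals the steady-state value at TDP.
    """
    if p_sprint <= tdp:
        return math.inf  # steady state stays at or below the limit
    # Steady-state temperature rises above ambient for each power level.
    rise_limit = tdp * r_th
    rise_start = p_before * r_th
    rise_final = p_sprint * r_th
    tau = r_th * c_th  # thermal time constant in seconds
    # Solve the exponential RC response for the time to reach rise_limit.
    return tau * math.log((rise_final - rise_start) / (rise_final - rise_limit))

if __name__ == "__main__":
    tdp = 95.0
    for factor in (1.2, 1.25, 1.3):
        t = sprint_duration(factor * tdp, 0.3 * tdp, tdp, r_th=0.5, c_th=60.0)
        print(f"{factor:.2f} x TDP from a light load: about {t:.0f} s")
```

With these assumed values a boost of about 1.25 TDP starting from a light load lasts some tens of seconds, and a higher boost power or a warmer starting point shortens the interval.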

Computational sprinting, and with it Turbo Boost 2.0, goes a step further than the original idea, providing a much larger (~10x) performance boost, but only for a fraction of a second.

Now a bit of practice, to back this up: what can modern processors achieve with these and other approaches? By effectively combining a variety of techniques, both spatial and temporal dimming, power consumption can vary by more than 50x depending on the load.

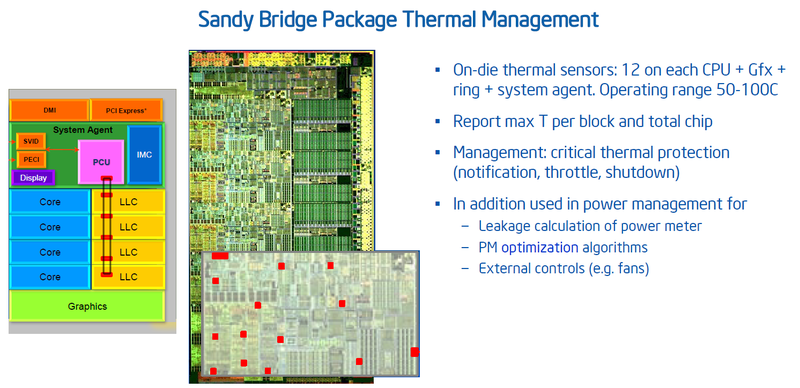

Infrared snapshots of processors in various power configurations

However, the system that makes this possible is very complex. Besides the corresponding design decisions, the microprocessor contains many temperature sensors (red dots on the right side of the figure). In Sandy Bridge, for example, there are 12 sensors per processor core, and a considerable number more are located outside the cores. The processor also includes a dedicated unit, the PCU (Package Control Unit), which runs the firmware that manages power consumption. The temperature sensor readings are used not only to monitor temperature but also, for example, to estimate leakage currents. The PCU also has an interface to the outside world, which lets the operating system and user applications monitor data and manage power consumption.

Another interesting feature of multi-core processors is the following. At low load, unneeded processor cores can be switched off to save power. But the Uncore (the part of the processor outside the cores: the interconnect fabric, the shared cache, the memory controller, and so on) cannot be switched off, since that would stop the processor entirely. At the same time, Uncore power consumption is comparable to that of several processor cores.

Uncore

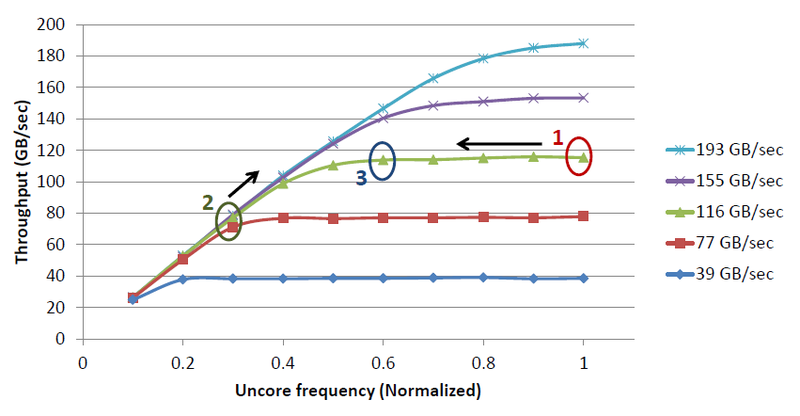

The desired energy savings can be achieved by controlling the Uncore clock frequency. When the frequency is higher than necessary (point 1), Uncore performance requirements are fully met, but energy is wasted and the frequency can be lowered without sacrificing performance. When the frequency is lower than necessary (point 2), performance suffers and the frequency should be raised. The goal is to find the optimal point for the current load (point 3) and hold it. This is not easy: to work effectively, performance requirements must be predicted and the clock frequency changed in advance.
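The three points described above can be illustrated with a very simple reactive controller. This is a hypothetical sketch, not the algorithm used in any real PCU firmware: the function names, the 100 MHz step and the frequency limits are assumptions, and the "needed" frequency is passed in directly, whereas a real controller would have to estimate or predict it.

```python
def next_uncore_mhz(freq_mhz, needed_mhz, step_mhz=100, f_min=800, f_max=3000):
    """One control step toward the lowest frequency that still meets demand."""
    if freq_mhz < needed_mhz:
        # Point 2: performance suffers, so raise the frequency.
        freq_mhz += step_mhz
    elif freq_mhz - step_mhz >= needed_mhz:
        # Point 1: there is slack, so lower the frequency to save energy.
        freq_mhz -= step_mhz
    # Otherwise we are at point 3: the lowest step that still meets demand.
    return max(f_min, min(f_max, freq_mhz))

def settle(freq_mhz, needed_mhz, max_steps=100):
    """Repeat control steps until the frequency stops changing."""
    for _ in range(max_steps):
        new_freq = next_uncore_mhz(freq_mhz, needed_mhz)
        if new_freq == freq_mhz:
            break
        freq_mhz = new_freq
    return freq_mhz

if __name__ == "__main__":
    print(settle(3000, 1750))  # starts with slack, steps down
    print(settle(1000, 2400))  # starts starved, steps up
```

Because this controller only reacts after the load has changed, it needs several steps to settle and loses performance or energy in the meantime, which is why prediction matters.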

Uncore performance versus clock frequency curves for various loads

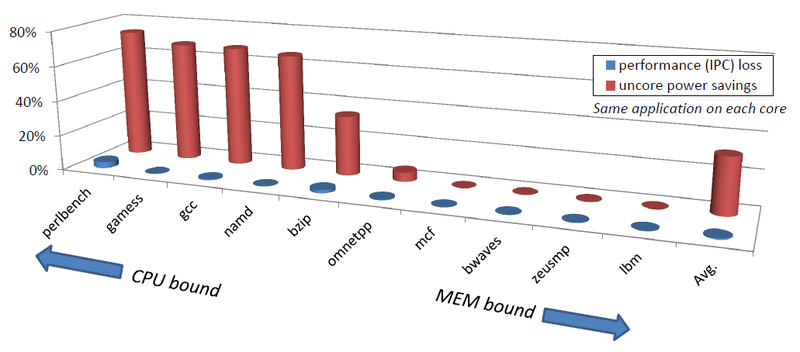

Loss of performance and energy savings on various tasks

Different Uncore frequency control algorithms make it possible, for example, to achieve 31% energy savings while losing only 0.6% of performance, or 73% savings at the cost of 3.5% of performance.

To be continued.

Sources

1. W. Huang, K. Rajamani, M. Stan, and K. Skadron. "Scaling with design constraints: Predicting the future of big chips." IEEE Micro, July-Aug. 2011.

2. R. Dreslinski et al. "Near-threshold computing: Reclaiming Moore's law through energy efficient integrated circuits." Proceedings of the IEEE, Feb. 2010.

3. Hsu, Agarwal, Anders et al. "A 280mV-to-1.2V 256b reconfigurable SIMD vector permutation engine with 2-dimensional shuffle in 22nm CMOS." In ISSCC, Feb. 2012.

4. D. Fick et al. "Centip3De: A 3930 DMIPS/W configurable near-threshold 3D stacked system with 64 Cortex-M3 cores." In ISSCC, Feb. 2012.

5. Jain, Khare, Yada et al. "A 280mV-to-1.2V wide-operating-range IA-32 processor in 32nm CMOS." In ISSCC, Feb. 2012.

6. N. Hardavellas et al. "Toward dark silicon in servers." IEEE Micro, 2011.

7. V. Govindaraju, C.-H. Ho, and K. Sankaralingam. "Dynamically specialized datapaths for energy efficient computing." In HPCA, 2011.

8. E. Rotem. "Intel Core microarchitecture, formerly codenamed Sandy Bridge." In Proceedings of Hot Chips, 2011.

Source: https://habr.com/ru/post/160451/
