Improving data center reliability (with thermal imager photos inside)

Almost all incidents in a properly planned data center are predictable and can be caught at the “pre-incident” stage. But how do you know in advance where to “spread the straw” (as the Russian saying goes, if you knew where you would fall, you would spread some straw there)? Below is our experience improving reliability at the data center on Prishvina Street (e-Style Telecom).

A data center's infrastructure has to be serviced and monitored, and shutdowns, of course, are not acceptable. How do you achieve this?

How do you eliminate a potential problem before it affects the system's performance?

In our country, the real reliability of a data center comes down to just three factors:

1. the degree of indifference and incompetence of the data center's designers and builders;

2. external risks related to the company, the premises, and the connections;

3. the degree of carelessness and negligence of the data center staff.

Through painful and expensive experience, learning from our own mistakes and those of others, we found a significant number of shortcomings and bad decisions at the planning, design, and equipment stages of the data center. And, most importantly, we fixed them in time.

As for the risks related to the company, the premises, and the connections, everything worked out: the building and the transformer were built “for ourselves”, we own everything, and our company is part of one of the largest IT holdings, R-Style / e-Style.

All that remained was to provide competent maintenance and operation... easier said than done! How? Here are the steps we took:

Step one, the basics: two parallel monitoring systems, a common SNMP interface, and an isolated management network. Every single piece of equipment in the e-Style Telecom data center was fitted with self-diagnostic and monitoring tools. This alone gave us enough information to understand the current state of the systems.
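The article does not say how the two monitoring systems are reconciled. A minimal sketch of one way to use that redundancy, assuming both systems' readings have already been collected (for example over SNMP) into dictionaries keyed by a shared metric name: flag every metric that only one system reports, or where the two disagree by more than a tolerance. The metric names, the `cross_check` function, and the single tolerance for all units are hypothetical simplifications.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Discrepancy:
    metric: str
    value_a: Optional[float]
    value_b: Optional[float]
    reason: str


def cross_check(system_a: Dict[str, float], system_b: Dict[str, float],
                tolerance: float = 1.0) -> List[Discrepancy]:
    """Compare the same metrics as reported by two independent monitoring systems."""
    issues: List[Discrepancy] = []
    # Walk the union of metric names so gaps in either system are caught too.
    for metric in sorted(system_a.keys() | system_b.keys()):
        a = system_a.get(metric)
        b = system_b.get(metric)
        if a is None or b is None:
            issues.append(Discrepancy(metric, a, b, "missing in one system"))
        elif abs(a - b) > tolerance:
            # A single tolerance for every unit is a simplification (hypothetical).
            issues.append(Discrepancy(metric, a, b, "values disagree"))
    return issues


if __name__ == "__main__":
    # Hypothetical metric names and values.
    system_a = {"ups1.load_pct": 42.0, "crac2.return_temp_c": 27.5, "pdu7.current_a": 12.0}
    system_b = {"ups1.load_pct": 42.3, "crac2.return_temp_c": 31.0}
    for issue in cross_check(system_a, system_b):
        print(issue.metric, issue.reason, issue.value_a, issue.value_b)
```

A disagreement does not tell you which system is wrong, only that a sensor, a poller, or the equipment itself deserves a look.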

Step two: we added hundreds of extra temperature sensors at different points and in different zones of the machine room. The picture became much more informative: we could see how power and temperature were distributed and how they changed when air-conditioning units were switched. From this stage on, we no longer placed new equipment blindly “according to the design”; instead, we could see and compare the real thermal picture and plan how to load the hardware.
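With hundreds of sensors, raw readings only become useful once they are aggregated. A minimal sketch, assuming each reading arrives as a `(sensor_id, zone, temperature_c)` tuple (a hypothetical format): summarize each zone's mean and peak temperature, then list the zones whose peak exceeds a limit. The 27 °C default is the upper bound of the ASHRAE recommended server inlet range, used here only as an assumed example threshold. Taking a summary before and after switching air-conditioning units shows how the thermal picture shifts.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

# (sensor_id, zone, temperature in °C); the format is a hypothetical example.
Reading = Tuple[str, str, float]
# zone -> (mean temperature, peak temperature, id of the hottest sensor)
ZoneSummary = Dict[str, Tuple[float, float, str]]


def zone_summary(readings: List[Reading]) -> ZoneSummary:
    """Group readings by zone and compute each zone's mean, peak and hottest sensor."""
    by_zone: Dict[str, List[Tuple[str, float]]] = defaultdict(list)
    for sensor_id, zone, temp_c in readings:
        by_zone[zone].append((sensor_id, temp_c))
    summary: ZoneSummary = {}
    for zone, values in by_zone.items():
        hottest_id, peak = max(values, key=lambda v: v[1])
        summary[zone] = (mean(t for _, t in values), peak, hottest_id)
    return summary


def hot_zones(summary: ZoneSummary, limit_c: float = 27.0) -> List[str]:
    """Zones whose hottest sensor exceeds limit_c (27 °C is an assumed example limit)."""
    return sorted(zone for zone, (_avg, peak, _sensor) in summary.items() if peak > limit_c)


if __name__ == "__main__":
    # Hypothetical readings from four sensors in two rows.
    readings = [("s1", "row-A", 22.0), ("s2", "row-A", 24.0),
                ("s3", "row-B", 29.5), ("s4", "row-B", 25.5)]
    summary = zone_summary(readings)
    print(summary)
    print(hot_zones(summary))
```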

Step three: regular inspections of the infrastructure and server equipment with a thermal imager. We were very pleased when we discovered this method: a thermal imager lets you gather a lot of information for analysis very quickly.
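Thermal imager rounds become more useful when each round is compared with the previous one rather than judged in isolation. A minimal sketch, assuming spot temperatures from each round are recorded into a dictionary keyed by a hypothetical object label (cabinet, cable, battery block): report every object whose temperature rose by more than a threshold since the last round. The 5 °C default and the `rising_spots` name are assumptions, not values or tools from the article.

```python
from typing import Dict, List, Tuple


def rising_spots(previous: Dict[str, float], current: Dict[str, float],
                 max_rise_c: float = 5.0) -> List[Tuple[str, float]]:
    """Objects that got hotter by more than max_rise_c since the previous round."""
    rises = []
    # Only objects measured in both rounds can be compared.
    for obj in current.keys() & previous.keys():
        rise = current[obj] - previous[obj]
        if rise > max_rise_c:
            rises.append((obj, rise))
    # Largest rise first; ties broken by label so the order is deterministic.
    return sorted(rises, key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    # Hypothetical object labels and readings from two rounds.
    last_round = {"cab12/feed-A": 31.0, "cab12/feed-B": 30.0, "ups/battery-3": 25.0}
    this_round = {"cab12/feed-A": 38.0, "cab12/feed-B": 30.5, "ups/battery-3": 26.0}
    for obj, rise in rising_spots(last_round, this_round):
        print(f"{obj}: +{rise:.1f} °C since last round")
```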

Batteries, terminals, connections, disks in the storage system, wires, filters, fans, airflow, and air leaking between the aisles are now visible in advance. As a rule, each round turns up something suspicious, which we then fix. Today, for example, we found a cable in one cabinet running 7 degrees hotter than normal: the customer was feeding a 5 kW load through a single cable and ignoring the other outlets on the PDU.
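The cable example is easy to check with basic arithmetic. A rough sketch, assuming a 230 V single-phase feed and a power factor close to 1 (neither is stated in the article): 5 kW through one cord is about 21.7 A, more than the 10 A rating of an IEC 60320 C13 connector and the 16 A IEC rating of a C19. Because resistive heating in a cord grows with the square of the current (I²R), splitting the same load evenly over two cords cuts the heat generated in each cord to a quarter. The function names are hypothetical.

```python
def current_amps(power_w: float, voltage_v: float = 230.0, power_factor: float = 1.0) -> float:
    """Single-phase current drawn by a load: I = P / (V * PF)."""
    return power_w / (voltage_v * power_factor)


def per_cable_current(power_w: float, n_cables: int, voltage_v: float = 230.0,
                      power_factor: float = 1.0) -> float:
    """Current in each cord when the load is split evenly over n_cables identical cords."""
    if n_cables < 1:
        raise ValueError("need at least one cable")
    return current_amps(power_w, voltage_v, power_factor) / n_cables


def relative_heating(n_cables: int) -> float:
    """I^2 R heat per cord, relative to a single cord carrying the whole load."""
    return 1.0 / n_cables ** 2


if __name__ == "__main__":
    C19_RATING_A = 16.0  # IEC 60320 C19 rating; adjust to the actual PDU outlets
    for n in (1, 2, 3):
        amps = per_cable_current(5000, n)
        status = "over" if amps > C19_RATING_A else "within"
        print(f"{n} cable(s): {amps:.1f} A each, {status} a {C19_RATING_A:.0f} A rating, "
              f"heat per cable x{relative_heating(n):.2f}")
```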

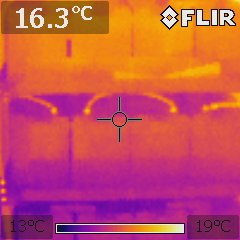

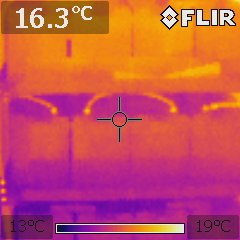

Thermal image of a cold aisle: the lower parts of cabinets without equipment stand out immediately, because air from the hot aisles flows through them.

An engineer in the cold aisle:

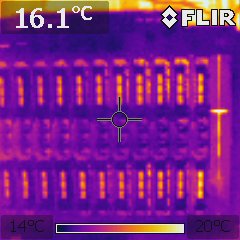

Thermal image of an IBM blade chassis with evenly loaded blades:

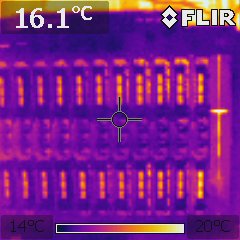

Thermal image of a battery cabinet during battery testing:

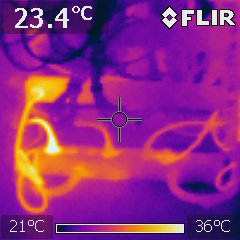

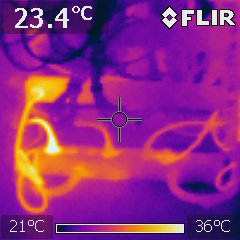

Power cables in cabinets:

Excessive heat is often a good early warning of problems; the main thing is to spot it in time. We did what we could to know in advance where to spread the straw.

Source: https://habr.com/ru/post/160307/
