Progress in the development of neural networks for machine learning

The Friday edition of the NY Times published an article about the significant successes that developers of self-learning neural network algorithms have been demonstrating in recent years. Deep architectures have several hidden layers, which have traditionally been difficult to train. That changed with the use of a stack of restricted Boltzmann machines (RBMs) for pre-training, after which the weights can conveniently be fine-tuned with backpropagation. Together with the arrival of fast GPUs, this has led to the significant progress we have seen in recent years. The developers themselves avoid loud statements so as not to fuel hype around neural networks like the hype that surrounded cybernetics in the 1960s. Nevertheless, it is fair to speak of a revival of interest in research in this area.

Neural networks were actively researched in the 1960s, after which the field receded into the shadows for a while. In 2011-2012, however, a number of excellent results were shown in speech recognition, computer vision, and artificial intelligence. Here are some recent successes:

• In 2011, a road sign recognition competition was won by a program created by specialists from the Swiss AI Lab at the University of Lugano. On a set of 50,000 images of German road signs it achieved 99.46% accuracy, ahead not only of the other programs but even of the best of the 32 people who took part in the competition (99.22%). The human average was 98.84%. The Swiss AI Lab's neural network has also won other contests, including one for the most accurate recognition of handwritten Chinese characters.

• In the summer of 2012, Google specialists built a cluster of 16,000 processors for a self-learning neural network that was trained on 14 million images covering 20,000 object categories. Although its recognition accuracy was not very high (15.8%), it was much higher than that of previous systems built for the same purpose.

• In October 2012, a program based on neural networks won the Merck Molecular Activity Challenge, a competition in statistically predicting the activity of molecules for the development of new drugs. It was developed by a University of Toronto team led by Professor Geoffrey E. Hinton, a well-known neural network expert.

• In November 2012, the head of Microsoft’s research division gave an impressive demonstration at Microsoft Research Asia’s 21st Century Computing conference in China: speech recognition, simultaneous translation into Chinese, and real-time speech synthesis in the other language in his own voice.

It is believed that it was the aforementioned Geoffrey Hinton who brought backpropagation, a method invented in 1969 but little known before his work, into neural network training.

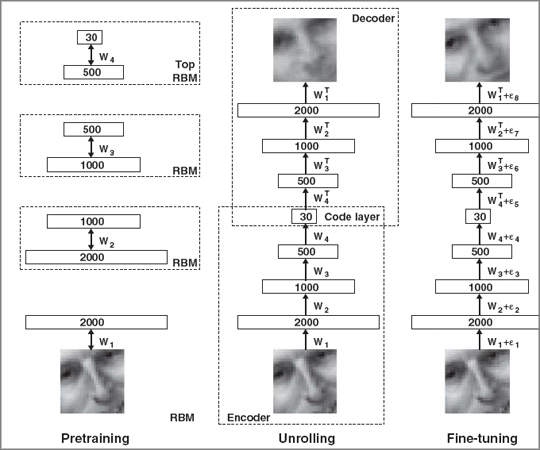

A breakthrough in training neural networks came after Hinton and his colleague Ruslan Salakhutdinov published the 2006 paper “Reducing the Dimensionality of Data with Neural Networks”. In it they described a technique of pre-training the network with a stack of restricted Boltzmann machines (RBMs) and then fine-tuning it with the backpropagation method. The illustration shows this technique schematically.
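The pretrain-then-fine-tune scheme can be sketched in a few dozen lines of NumPy. This is a minimal illustration under our own assumptions, not the authors' code: binary (Bernoulli) units, one step of contrastive divergence (CD-1) per RBM update, plain full-batch gradient descent for backpropagation, and made-up toy data. The names `RBM`, `pretrain_stack` and `fine_tune_autoencoder`, the layer sizes and all hyperparameters are hypothetical. Following the paper's idea, the pretrained stack is "unrolled" into an encoder and a mirrored decoder, and the whole autoencoder is then fine-tuned on reconstruction error.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class RBM:
    """Binary (Bernoulli) restricted Boltzmann machine trained with CD-1 (illustrative sketch)."""

    def __init__(self, n_visible, n_hidden, rng):
        self.W = rng.normal(0.0, 0.01, size=(n_visible, n_hidden))
        self.b_vis = np.zeros(n_visible)
        self.b_hid = np.zeros(n_hidden)
        self.rng = rng

    def hidden_probs(self, v):
        return sigmoid(v @ self.W + self.b_hid)

    def visible_probs(self, h):
        return sigmoid(h @ self.W.T + self.b_vis)

    def cd1_step(self, v0, lr):
        # Positive phase: hidden unit probabilities driven by the data.
        h0 = self.hidden_probs(v0)
        h0_sample = (self.rng.random(h0.shape) < h0).astype(float)
        # Negative phase: one Gibbs step down to the visible layer and back up.
        v1 = self.visible_probs(h0_sample)
        h1 = self.hidden_probs(v1)
        n = v0.shape[0]
        # CD-1 update: data correlations minus reconstruction correlations.
        self.W += lr * (v0.T @ h0 - v1.T @ h1) / n
        self.b_vis += lr * (v0 - v1).mean(axis=0)
        self.b_hid += lr * (h0 - h1).mean(axis=0)


def pretrain_stack(data, layer_sizes, epochs, lr, rng):
    """Greedy layer-wise pretraining: each RBM is trained on the previous one's hidden probabilities."""
    rbms, x = [], data
    for n_hidden in layer_sizes:
        rbm = RBM(x.shape[1], n_hidden, rng)
        for _ in range(epochs):
            rbm.cd1_step(x, lr)
        rbms.append(rbm)
        x = rbm.hidden_probs(x)  # input "data" for the next RBM in the stack
    return rbms


def fine_tune_autoencoder(data, rbms, epochs, lr):
    """Unroll the stack into encoder + decoder and fine-tune all weights with backpropagation."""
    weights = [r.W.copy() for r in rbms] + [r.W.T.copy() for r in reversed(rbms)]
    biases = [r.b_hid.copy() for r in rbms] + [r.b_vis.copy() for r in reversed(rbms)]
    n = data.shape[0]
    losses = []
    for _ in range(epochs):
        # Forward pass, keeping every layer's activations for the backward pass.
        acts = [data]
        for W, b in zip(weights, biases):
            acts.append(sigmoid(acts[-1] @ W + b))
        out = acts[-1]
        losses.append(np.mean((out - data) ** 2))
        # Backward pass: squared-error gradient (up to a constant factor) through sigmoid units.
        delta = (out - data) * out * (1 - out)
        for i in reversed(range(len(weights))):
            grad_W = acts[i].T @ delta / n
            grad_b = delta.mean(axis=0)
            if i > 0:
                # Propagate the error to the layer below before updating weights[i].
                delta = (delta @ weights[i].T) * acts[i] * (1 - acts[i])
            weights[i] -= lr * grad_W
            biases[i] -= lr * grad_b
    return weights, biases, losses


# Toy data (made up for illustration): noisy copies of two random 20-bit patterns.
rng = np.random.default_rng(0)
prototypes = (rng.random((2, 20)) < 0.5).astype(float)
data = prototypes[rng.integers(0, 2, size=200)]
noise = rng.random(data.shape) < 0.05
data = np.abs(data - noise)  # flip about 5% of the bits

rbms = pretrain_stack(data, layer_sizes=[16, 8], epochs=200, lr=0.1, rng=rng)
weights, biases, losses = fine_tune_autoencoder(data, rbms, epochs=300, lr=0.1)
print(f"reconstruction MSE: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

During pretraining no gradient has to travel through the whole deep stack: each RBM only models the layer directly below it. Backpropagation then only adjusts weights that already start from a sensible point, which is the usual explanation for why this combination trains deep networks better than backpropagation from random weights alone. The 2006 paper used its own unit types and optimizer settings; this sketch simplifies both.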

Mark Watson published on his blog a couple of links to useful resources for getting started in this area.

- Hinton's online Neural Networks for Machine Learning course on Coursera.

- Deep Learning Tutorial, a very large presentation (PDF, 184 slides) that provides all the necessary theoretical background, the mathematics, and Python code examples.

Source: https://habr.com/ru/post/160115/

All Articles