Windows Azure benchmark shows high performance for large-scale computing

151.3 TFlops on 8064 cores with 90.2 percent efficiency.

Windows Azure offers customers a cloud platform that meets the requirements of large-scale computing (Big Compute) reliably and cost-effectively. With a powerful, scalable infrastructure, new instance configurations, and the new HPC Pack 2012, Windows Azure is designed to be the best platform for large-scale computing applications. To demonstrate this, we ran the LINPACK benchmark on Windows Azure. The result was impressive: 151.3 TFlops on 8,064 cores with 90.2 percent efficiency. The results were submitted to the TOP500 list, and the system was certified as one of the world's 500 most powerful supercomputers.

Large-scale computing equipment

Reflecting Microsoft's focus on large-scale computing, we introduced hardware designed to meet customer demand for high-performance computing. There are two high-performance configurations: the first with 8 cores and 60 GB of RAM, the second with 16 cores and 120 GB of RAM. Both configurations also support an InfiniBand network with RDMA (remote direct memory access) for MPI (Message Passing Interface) applications. The hardware includes:

• Dual Intel Sandy Bridge processors at 2.6 GHz;

• DDR3 memory at 1600 MHz;

• 10 Gbps network for storage and Internet access;

• 40 Gbps InfiniBand (IB) network with RDMA.

The InfiniBand network introduced by Microsoft supports remote direct memory access (RDMA) between compute nodes. For applications that use MPI (Message Passing Interface) libraries, RDMA lets memory on multiple computers act as a single pool. With RDMA, applications in the cloud can approach the theoretical peak performance of the hardware, which is especially important for large-scale computing.

The new high-performance configurations with RDMA support are ideal for HPC and other demanding applications that must scale across many computers, such as engineering simulation and weather forecasting. Fast processors and low network latency mean that larger models can be run and simulations complete faster.

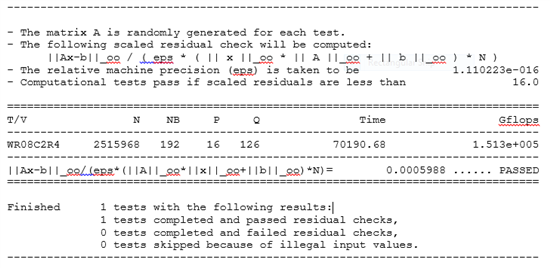

LINPACK Benchmark

To demonstrate the capabilities of this large-scale computing hardware, Microsoft ran the LINPACK benchmark, and on the basis of the results the system was certified as one of the world's 500 most powerful supercomputers. LINPACK measures floating-point computing power by timing how quickly a system solves a dense n × n system of linear equations Ax = b, a common engineering problem. This gives an approximate estimate of performance on real-world problems.
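The core operation of the benchmark can be sketched in a few lines. The Python below is a minimal single-node illustration under our own assumptions, not the actual LINPACK/HPL code (which uses blocked LU factorization, optimized BLAS, and MPI across nodes): it solves a random dense system by Gaussian elimination with partial pivoting and converts the elapsed time into a Flop rate using a standard LINPACK operation count, 2n³/3 + 2n². For large n the 2n³/3 term dominates; the lower-order term differs slightly between LINPACK variants. The function names and the default n = 120 are hypothetical.

```python
import random
import time


def linpack_flops(n: int) -> float:
    """Nominal operation count for solving an n x n dense system (classic LINPACK)."""
    return 2.0 * n**3 / 3.0 + 2.0 * n**2


def solve_dense(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve Ax = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    # Work on copies so the caller's matrix and vector are left untouched.
    m = [row[:] for row in a]
    x = b[:]
    for k in range(n):
        # Partial pivoting: move the row with the largest |m[i][k]| into row k.
        p = max(range(k, n), key=lambda i: abs(m[i][k]))
        m[k], m[p] = m[p], m[k]
        x[k], x[p] = x[p], x[k]
        # Eliminate column k below the pivot.
        for i in range(k + 1, n):
            factor = m[i][k] / m[k][k]
            for j in range(k, n):
                m[i][j] -= factor * m[k][j]
            x[i] -= factor * x[k]
    # Back substitution on the resulting upper-triangular system.
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (x[i] - s) / m[i][i]
    return x


def run_benchmark(n: int = 120, seed: int = 0) -> tuple[float, float]:
    """Time one solve; return (GFlop/s, max residual |Ax - b|)."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = time.perf_counter() - start
    residual = max(abs(sum(a[i][j] * x[j] for j in range(n)) - b[i]) for i in range(n))
    return linpack_flops(n) / elapsed / 1e9, residual


if __name__ == "__main__":
    gflops, res = run_benchmark()
    print(f"{gflops:.4f} GFlop/s, max residual {res:.2e}")
```

Pure Python is orders of magnitude slower than tuned BLAS, so the Flop rate here only illustrates how the metric is computed; the residual check mirrors the correctness check real LINPACK runs perform.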

Microsoft achieved 151.3 TFlops on 8,064 cores with 90.2 percent efficiency. The efficiency figure shows how close the system comes to its maximum theoretical performance, which is calculated as the processor clock frequency in Hz multiplied by the number of floating-point operations each core can perform per cycle and by the number of cores. One of the factors that most affects performance and efficiency in cluster computing is the interconnect between nodes. That is why Windows Azure uses InfiniBand with RDMA for large-scale computing.
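The efficiency definition can be checked with a quick calculation. This sketch assumes that each Sandy Bridge core at 2.6 GHz performs 8 double-precision floating-point operations per cycle (a 4-wide AVX add plus a 4-wide AVX multiply) and that all 8,064 cores (504 of the 16-core instances) count toward the peak; the article does not state these inputs, so treat them as assumptions.

```python
def peak_tflops(cores: int, clock_ghz: float, flops_per_cycle: int) -> float:
    """Theoretical peak (Rpeak) in TFlop/s: cores x clock x FLOPs per cycle."""
    return cores * clock_ghz * 1e9 * flops_per_cycle / 1e12


def efficiency(rmax_tflops: float, rpeak_tflops: float) -> float:
    """LINPACK efficiency: measured Rmax as a fraction of Rpeak."""
    return rmax_tflops / rpeak_tflops


# Assumption: 8 double-precision FLOPs per cycle per core (AVX on Sandy Bridge).
rpeak = peak_tflops(cores=8064, clock_ghz=2.6, flops_per_cycle=8)
eff = efficiency(151.3, rpeak)
print(f"Rpeak = {rpeak:.1f} TFlops, efficiency = {eff:.1%}")
```

Under these assumptions the theoretical peak is about 167.7 TFlops, and the measured 151.3 TFlops works out to the reported 90.2 percent.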


Below are the LINPACK benchmark results, showing performance of 151.3 TFlops.

Remarkably, this result was achieved with Windows Server 2012 running in virtual machines hosted on Windows Azure with Hyper-V. Thanks to an efficient implementation, your high-performance application can get the same performance on Windows Azure as on your own dedicated HPC cluster.

Windows Azure is the first public cloud provider to offer virtualized InfiniBand RDMA networking for MPI applications. If your code is latency-sensitive, our cluster can send a 4-byte packet between machines in 2.1 microseconds. InfiniBand also provides high bandwidth. As a result, applications scale better, delivering results faster and at lower cost.
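The interplay of latency and bandwidth can be illustrated with the simple latency-plus-bandwidth (Hockney) model, t = latency + size / bandwidth. This sketch plugs in the 2.1-microsecond latency and the nominal 40 Gbps link rate quoted in this article; real MPI transfers add software overhead, and the usable data rate of a 40 Gbps InfiniBand link is below its signaling rate, so the numbers are rough estimates. The helper names are hypothetical.

```python
def transfer_time_us(message_bytes: int, latency_us: float = 2.1,
                     bandwidth_gbps: float = 40.0) -> float:
    """Estimated one-way message time in microseconds (Hockney model)."""
    bits = message_bytes * 8
    # 1 Gbit/s carries 1,000 bits per microsecond.
    return latency_us + bits / (bandwidth_gbps * 1e3)


def crossover_bytes(latency_us: float = 2.1, bandwidth_gbps: float = 40.0) -> float:
    """Message size at which transmission time equals the fixed latency."""
    return latency_us * bandwidth_gbps * 1e3 / 8


if __name__ == "__main__":
    for size in (4, 1024, 64 * 1024, 1024 * 1024):
        print(f"{size:>8} bytes: {transfer_time_us(size):8.2f} us")
    print(f"latency and bandwidth terms are equal at ~{crossover_bytes():.0f} bytes")
```

In this model, messages well below roughly 10 KB are dominated by latency, which is why low latency matters so much for tightly coupled MPI codes that exchange many small messages.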

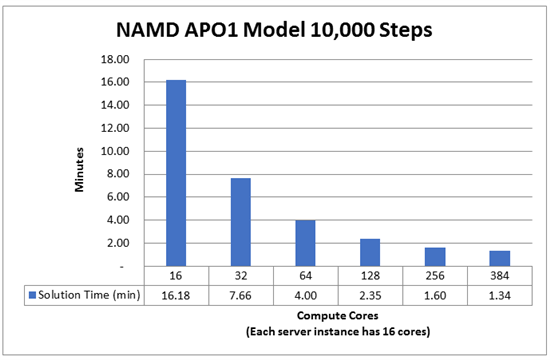

Application performance

The graphs below show how the NAMD molecular dynamics simulation program scales across different numbers of cores on Windows Azure using the newly announced configuration. The application ran on 16-core instances, so runs on 32 or more cores required communication over the network. On the RDMA network, NAMD performed very well, and time to solution dropped significantly as cores were added.

Of course, how well a simulation scales depends on the application, the specific model, and the problem being solved.

The high-performance hardware is currently being tested with a select group of partners, and Microsoft will make it broadly available in 2013.
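Scaling results like the NAMD graphs are usually summarized as speedup and parallel efficiency relative to the smallest run. The sketch below computes both for strong scaling (a fixed problem size on a growing number of cores); the function name is hypothetical, and the timings in the usage block are made-up placeholders, not NAMD measurements.

```python
def strong_scaling(times_by_cores: dict[int, float]) -> dict[int, tuple[float, float]]:
    """Map each core count to (speedup, parallel efficiency) vs. the smallest run."""
    base_cores = min(times_by_cores)
    base_time = times_by_cores[base_cores]
    result = {}
    for cores, seconds in sorted(times_by_cores.items()):
        speedup = base_time / seconds
        ideal_speedup = cores / base_cores
        result[cores] = (speedup, speedup / ideal_speedup)
    return result


if __name__ == "__main__":
    # Hypothetical wall-clock times in seconds, for illustration only.
    example = {16: 100.0, 32: 52.0, 64: 27.0, 128: 15.0}
    for cores, (speedup, eff) in strong_scaling(example).items():
        print(f"{cores:>4} cores: speedup {speedup:5.2f}, efficiency {eff:.0%}")
```

An efficiency that stays close to 100 percent as cores are added is what "scales well" means in practice; when network communication starts to dominate, efficiency falls.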

Windows Azure supports large-scale computing using Microsoft HPC Pack 2012

Microsoft began supporting large-scale computing on Windows Azure two years ago. Resource-intensive applications require a great deal of computing power, which often means waiting hours or days for results. Examples of large-scale computing include modeling complex engineering problems, understanding financial risk, researching disease, weather forecasting, transcoding media, and analyzing large data sets. Customers running large-scale computations are increasingly turning to the cloud to supply and grow the computing power they need, which offers greater flexibility and cost savings than doing all the work locally (“on-premises”).

In December 2010, Microsoft HPC Pack first gave customers the ability to “burst” from on-premises compute clusters to the cloud: to meet demand, they could instantly draw additional resources from the cloud, for example at times of peak use. This made it easy for customers to use Windows Azure to handle peak requirements. HPC Pack takes care of provisioning and job scheduling, and many customers have seen a direct return on investment from using Windows Azure compute resources.
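The bursting idea can be reduced to a simple sizing rule: add just enough cloud nodes to cover the work the on-premises cluster cannot absorb, up to a cap. This is a hypothetical illustration of the concept, not HPC Pack's actual policy or API; the function name, the 16-core node size, and the cap of 100 nodes are assumptions.

```python
import math


def cloud_nodes_to_add(pending_cores: int, free_onprem_cores: int,
                       cores_per_node: int = 16, max_cloud_nodes: int = 100) -> int:
    """Hypothetical burst rule: cloud nodes needed to cover the on-premises shortfall."""
    shortfall = max(0, pending_cores - free_onprem_cores)
    # Round up so the whole shortfall is covered, then respect the cap.
    return min(max_cloud_nodes, math.ceil(shortfall / cores_per_node))


if __name__ == "__main__":
    # 512 cores requested while 128 on-premises cores are free.
    print(cloud_nodes_to_add(pending_cores=512, free_onprem_cores=128))  # prints 24
```

When the queue drains, the same rule returns zero, which corresponds to releasing cloud nodes so they are active only when needed.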

Today, Microsoft is pleased to announce HPC Pack 2012, the fourth release of its cluster computing solution since 2006. HPC Pack 2012 can be used to manage compute clusters of dedicated servers, part-time servers, and desktop computers, as well as hybrid deployments with Windows Azure. Clusters can run entirely on-premises, be extended to the cloud on a schedule or on demand, or run entirely in the cloud and be active only when needed.

The new release supports Windows Server 2012 and includes Windows Azure VPN integration for access to on-premises resources such as license servers, new controls for job execution and job dependencies, a new job scheduling policy based on memory and cores, new monitoring tools, and utilities that help manage data staging.
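Dependency-aware job execution, one of the features in this release, means a job starts only after the jobs it depends on have finished. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) to group hypothetical jobs into waves that could run in parallel; it illustrates the general idea only and is not how HPC Pack 2012 implements or configures job dependencies.

```python
from graphlib import TopologicalSorter


def schedule_waves(dependencies: dict[str, set[str]]) -> list[list[str]]:
    """Group jobs into waves; a job is ready once all of its prerequisites are done."""
    sorter = TopologicalSorter(dependencies)  # maps job -> set of prerequisite jobs
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # jobs whose prerequisites have finished
        waves.append(ready)
        sorter.done(*ready)  # mark the whole wave as completed
    return waves


if __name__ == "__main__":
    # Hypothetical job names, for illustration only.
    jobs = {
        "stage_data": set(),
        "simulate": {"stage_data"},
        "validate": {"stage_data"},
        "report": {"simulate", "validate"},
    }
    print(schedule_waves(jobs))
    # [['stage_data'], ['simulate', 'validate'], ['report']]
```

Jobs within a wave have no dependencies on one another, so a scheduler is free to run them concurrently on whatever cores are available.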

Microsoft HPC Pack 2012 will be available in December 2012.

Large-scale computing on Windows Azure today

From the start, Windows Azure has been designed to support large-scale computing. With Microsoft HPC Pack 2012 or their own applications, customers and partners can quickly spin up large-scale computing environments with tens of thousands of cores. As the following examples illustrate, customers are already putting these Windows Azure capabilities to the test.

Risk reporting for Solvency II regulators

Milliman is one of the world's largest providers of actuarial and related products and services. Its MG-ALFA application is widely used by insurance and financial companies for risk modeling, and it is integrated with Microsoft HPC Pack to distribute calculations across an HPC cluster or to "burst" work to Windows Azure.

To help insurance companies produce risk reports for Solvency II regulators, Milliman also offers MG-ALFA as a service running on Windows Azure. This lets its clients perform complex risk calculations without capital investment or the need to manage an on-premises cluster. Milliman's solution has been in production for over a year, with customers using up to 8,000 Windows Azure cores.

MG-ALFA can scale reliably to tens of thousands of Windows Azure cores. To test new models, Milliman used 45,500 Windows Azure cores to run 5,800 tasks with a 100 percent success rate in just 24 hours. Because the application can scale this far, customers get results quickly and can have more confidence in them, without resorting to approximations or proxy modeling techniques. Many companies must run complex, time-consuming projections every quarter. Without significant computing power, they must either accept long waits for results or reduce the size of the models they run. Windows Azure changes that equation.
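A quick back-of-the-envelope calculation puts the Milliman test in core-hours. The sketch assumes that all 45,500 cores were busy for the full 24 hours, which the article does not state, so the per-task figure is an upper bound on the average.

```python
def core_hours(cores: int, hours: float) -> float:
    """Total compute capacity: cores multiplied by wall-clock hours."""
    return cores * hours


total = core_hours(45_500, 24)  # assumes every core is busy for all 24 hours
per_task = total / 5_800        # average core-hours available per task
print(f"{total:,.0f} core-hours, at most ~{per_task:.0f} core-hours per task on average")
```

That is roughly a million core-hours, or about 125 years of a single core running continuously, compressed into one day.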

The cost of insuring the world

Towers Watson is a global professional services company. Its MoSes financial modeling software is widely used by international insurance companies to develop new products and manage their financial risks. MoSes is integrated with Microsoft HPC Pack to distribute projects across a cluster, which also makes it easy to extend to Windows Azure. Last month, Towers Watson announced that it would adopt Windows Azure as its preferred cloud platform.

One of Towers Watson's first projects as a partner was to test the scalability of the Windows Azure compute environment by modeling the cost of life insurance for the entire world. The team used MoSes to perform individual, policy-by-policy calculations of the cost of issuing whole life insurance policies to all seven billion people on Earth. The calculations were repeated 1,000 times under different economic risk scenarios. To complete the calculations quickly, MoSes used HPC Pack to distribute them across 50,000 parallel cores on Windows Azure.

Towers Watson was impressed that 100,000 hours of computing could be completed in just a few hours of real time. Insurers face growing demands on the frequency and complexity of financial modeling, and this example shows the extraordinary opportunities Windows Azure gives them. With Windows Azure, insurance companies can run their financial models with greater accuracy and speed, enabling more effective risk and capital management.
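With perfect parallel scaling, wall-clock time is simply total compute time divided by the number of cores. The sketch applies that to the Towers Watson figures (100,000 compute hours on 50,000 cores) and also counts the valuations implied by seven billion people and 1,000 repetitions, assuming one valuation per person per scenario. Perfect scaling is an idealization: real runs add scheduling and data-movement overhead, so the ideal figure is a lower bound.

```python
def ideal_wall_clock_hours(compute_hours: float, cores: int) -> float:
    """Wall-clock hours if the work divides perfectly across all cores."""
    return compute_hours / cores


people = 7_000_000_000
scenarios = 1_000
valuations = people * scenarios  # assumption: one valuation per person per scenario
print(f"{valuations:.1e} policy-scenario valuations")
print(f"ideal wall-clock time: {ideal_wall_clock_hours(100_000, 50_000):.1f} hours")
```

The ideal comes out at 2 hours, consistent with the "few hours of real time" reported.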

Accelerating analysis of the human genome

Cloud computing is broadening the horizons of science and helping us better understand the human genome and disease. One example is the genome-wide association study (GWAS), which identifies genetic markers associated with human disease.

David Heckerman and the eScience research group at Microsoft Research have developed a new algorithm called FaST-LMM that can find new genetic links to disease by analyzing data sets several orders of magnitude larger than was previously possible. It can also detect subtler signals in the data than before.

The Windows Azure research team helped them put the application to the test. They used Microsoft HPC Pack with FaST-LMM on 27,000 Windows Azure compute cores to analyze data from a UK population study conducted as part of a Wellcome Trust project. They analyzed 63,524,915,020 pairs of genetic markers, looking for relationships between these markers and ischemic heart disease, hypertension, inflammatory bowel disease (Crohn's disease), rheumatoid arthritis, and type 1 and type 2 diabetes.

More than 1,000,000 tasks were scheduled by HPC Pack over 72 hours, equivalent to 1.9 million compute hours. The same calculations would take 25 years on a single 8-core server. The result: newly discovered connections between genes and these diseases could lead to breakthroughs in prevention and treatment.

Researchers in this field will have free access to the results for use in their own laboratories, including results for individual marker pairs, and the FaST-LMM algorithm will be available free of charge, on demand, through the Windows Azure Data Marketplace.
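The GWAS figures can be cross-checked the same way. Assuming all 27,000 cores ran for the full 72 hours (the article does not say so explicitly), capacity comes to about 1.94 million core-hours, consistent with the quoted 1.9 million compute hours. Dividing 1.9 million hours across a single 8-core server running around the clock gives roughly 27 years, the same range as the article's 25-year estimate, whose exact assumptions are not stated.

```python
HOURS_PER_YEAR = 24 * 365


def core_hours(cores: int, hours: float) -> float:
    """Compute capacity: cores multiplied by wall-clock hours."""
    return cores * hours


def years_on_one_server(total_core_hours: float, server_cores: int) -> float:
    """Years needed if a single server's cores run continuously."""
    return total_core_hours / server_cores / HOURS_PER_YEAR


capacity = core_hours(27_000, 72)      # assumes all cores busy for 72 hours
years = years_on_one_server(1.9e6, 8)  # 1.9 million hours on one 8-core server
print(f"capacity: {capacity:,.0f} core-hours; single server: ~{years:.0f} years")
```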

Computing at scale

With a large, powerful, and scalable infrastructure, new instance configurations, and Microsoft HPC Pack 2012, Windows Azure is designed to be the best platform for large-scale computing applications. If you are interested in large-scale computing, contact Microsoft at bigcompute@microsoft.com.

- Bill Hilf, General Manager, Windows Azure Product Marketing

- Translated by Vladimir Khlyzov, graduate student at MGUPI, vlkhlyzov@gmail.com.

Source: https://habr.com/ru/post/159895/
