A live example: Google now crawls AJAX

I decided to rework the site's navigation menu, which is packed with links to different sections, to make it less "spammy" for search engines and more convenient for visitors. The idea was simple:

- The main page links to every section of the site in plain HTML.

- Menus inside a section link only to thematically related sections.

- All other links, to unrelated sections, are generated by JavaScript from a separate file.

I will skip the implementation details, but it turned out quite well, and I was happy with the result.

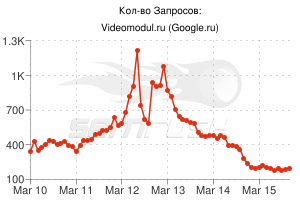

Then I suddenly noticed a sharp drop in traffic from Google. What? How? Why?

I was trying to make things better and point search engines down the right path, but it turns out things are not that smooth.

Recently, Google began to crawl and index AJAX sites!

Nobody seems to have heard much about it, yet Google has outdone everyone again.

It works quite simply:

- The site supports the AJAX crawling scheme.

- For every AJAX URL, the server provides an HTML snapshot of what a user sees in the browser. An AJAX URL contains a hash fragment: for example, in example.com/index.html#mystate, #mystate is the hash fragment. An HTML snapshot is all the content that appears on the page after its JavaScript has run.

- The search engine indexes the HTML snapshot and shows the original AJAX URLs in its search results.
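The post does not spell out how the crawler gets from an AJAX URL to its snapshot. In Google's AJAX crawling scheme as it was documented at the time, only fragments starting with `#!` (a "hashbang") were treated as crawlable, and the crawler requested the snapshot by moving the fragment into an `_escaped_fragment_` query parameter, e.g. `example.com/ajax.html#!key=value` became `example.com/ajax.html?_escaped_fragment_=key=value`. Below is a minimal sketch of that mapping and its reverse. The function names are hypothetical, and the set of characters left unescaped is an approximation of the scheme's escaping rules, not an exact copy.

```python
from urllib.parse import quote, unquote, urlsplit, urlunsplit

PARAM = "_escaped_fragment_="
# Approximation: '&', '#', '%', '+', spaces and non-ASCII get percent-encoded,
# while '=' and a few other URL-safe characters are left as they are.
SAFE_CHARS = "=/:,;@!$'()*"


def to_escaped_fragment(url: str) -> str:
    """Hypothetical helper: map a '#!' AJAX URL to the URL the crawler requests."""
    parts = urlsplit(url)
    if not parts.fragment.startswith("!"):
        return url  # plain '#fragment' URLs were not crawlable under the scheme
    state = quote(parts.fragment[1:], safe=SAFE_CHARS)
    query = f"{parts.query}&{PARAM}{state}" if parts.query else PARAM + state
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, ""))


def from_escaped_fragment(url: str) -> str:
    """Hypothetical helper: rebuild the '#!' URL that appears in search results."""
    parts = urlsplit(url)
    kept, state = [], None
    for pair in parts.query.split("&") if parts.query else []:
        if pair.startswith(PARAM):
            state = unquote(pair[len(PARAM):])
        else:
            kept.append(pair)
    if state is None:
        return url  # not a snapshot request
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "&".join(kept), "!" + state))


if __name__ == "__main__":
    print(to_escaped_fragment("http://example.com/index.html#!mystate"))
    # http://example.com/index.html?_escaped_fragment_=mystate
```

On the server side, a site following the scheme would check incoming requests for `_escaped_fragment_` and, when it is present, return the pre-rendered HTML snapshot instead of the JavaScript shell. That snapshot contains every link the scripts would have added, which is how links hidden in a separate JavaScript file can still end up in front of the crawler.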

In other words, Google saw every page of the site with its full set of links to all sections, drew its own conclusions, and reindexed the site its own way.


A live example, a source on the subject.

Source: https://habr.com/ru/post/158845/
