I/O virtualization to increase data center scalability and protect existing hardware investments

Dear Habr residents,

We are opening a series of publications on an innovative server I/O virtualization technology. In it we briefly compare the technology with FCoE and iSCSI, examine in detail the I/O virtualization device and a data center architecture built on it, describe the main characteristics of I/O virtualization devices and how they are connected and operated, and present the results of comparative testing.

This is the first article in the series.

Are there enough resources in our data center to solve current and future tasks?

In data centers that allocate resources statically, as is traditional, a significant part of the equipment is usually underutilized, while a few critical points are constantly overloaded. In keeping with Murphy's law, eliminating one bottleneck immediately exposes others. Most data centers today consolidate storage systems and servers and use server virtualization management systems, which make it possible to allocate resources dynamically and to predict when additional server capacity, switching nodes, or storage resources will be needed. You can now estimate with confidence the date by which more RAM, another server, a certain number of hard drives, or a disk shelf will be required. As long as this fits within the expansion limits of the existing equipment, the costs are relatively small. However, adding a new server rack, especially one with significantly more powerful servers, affects many more data center subsystems: first of all the LAN and SAN cores, in which a great deal has already been invested and which one would like to preserve.
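The kind of forecast described above can be illustrated with a small sketch. It fits a straight line to periodic utilization samples and extrapolates to the day on which a resource reaches its capacity. The sample values, the start date, the function name `days_until_exhausted`, and the assumption of linear growth are all illustrative; they are not taken from any particular management system.

```python
from datetime import date, timedelta


def days_until_exhausted(samples, capacity):
    """Estimate the day index at which usage reaches `capacity`.

    samples: list of (day_index, used_amount) pairs, e.g. RAM in use in GB.
    Fits an ordinary least-squares line through the samples.
    Returns None when usage is flat or shrinking (no exhaustion predicted).
    """
    n = len(samples)
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in samples)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    if sxx == 0:
        return None  # all samples taken on the same day
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    return (capacity - intercept) / slope


# Hypothetical data: RAM in use (GB), sampled every 30 days, 512 GB installed.
ram_samples = [(0, 200), (30, 230), (60, 260), (90, 290)]
days = days_until_exhausted(ram_samples, capacity=512)

first_sample_date = date(2012, 9, 1)  # assumed date of the first sample
print("RAM expected to run out around",
      first_sample_date + timedelta(days=round(days)))
```

A real forecast would also account for seasonality and step changes such as the launch of a new service; the straight line only shows the principle.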


What architectures and technologies can be used to protect the investment in data center equipment?

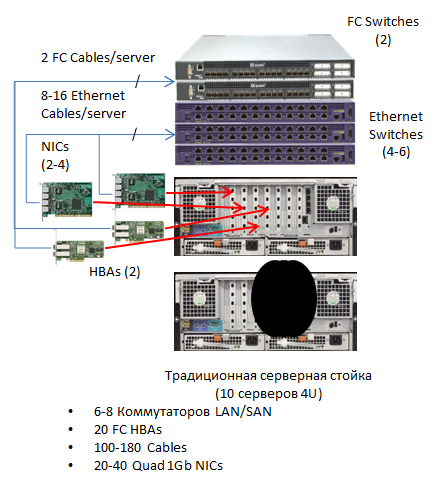

Classic Architecture (Ethernet + FC)

In the classic, generally accepted approach, the data center has two completely independent networks, a LAN and a SAN, each with its own core and access switches. Servers carry both types of network adapter, and the adapters are duplicated when redundancy is required. The LAN and SAN core switches are usually located in a dedicated area of the data center.

Access switches, the so-called TOR (Top of Rack) switches, are installed in the server cabinets. As a rule, one switch of each type is used per cabinet, or two of each for fault tolerance and redundancy. The access switches are connected to the core by trunk links. When a new cabinet or rack of servers has to be added, such a system scales easily: a rack with servers and TOR switches is added, the necessary interface modules are installed in the core switches, and new connecting links are laid.

This approach is most justified if spare capacity was built into the core in advance (that is, the core devices still have free slots).
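As a rough illustration of that condition, the sketch below counts the core ports that new racks consume in the classic design and checks them against the free ports in each core. Only the layout (separate LAN and SAN TOR switches, doubled for redundancy) comes from the description above; the number of uplinks per TOR switch and the free-port figures are hypothetical.

```python
def core_ports_needed(racks, tor_per_network=2, uplinks_per_tor=2):
    """Core ports consumed in ONE network (LAN or SAN) by `racks` new racks.

    tor_per_network: TOR switches of that network type per rack
                     (2 when redundancy is required).
    uplinks_per_tor: trunk links from each TOR switch to the core (assumed).
    """
    return racks * tor_per_network * uplinks_per_tor


def fits_in_core(racks, free_lan_ports, free_san_ports, **layout):
    """True if both cores have enough free ports for the new racks."""
    needed = core_ports_needed(racks, **layout)
    return needed <= free_lan_ports and needed <= free_san_ports


# Hypothetical case: three new racks, 16 free LAN core ports, 8 free SAN core ports.
print(core_ports_needed(3))
print(fits_in_core(3, free_lan_ports=16, free_san_ports=8))
```

In this made-up case the LAN core could absorb the racks but the SAN core could not, which is exactly the situation in which expanding the classic design becomes expensive.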

Converged architecture based on Ethernet protocol extensions

Because the classic scheme has to be built with significant spare capacity from the start, serious attempts have recently been made to converge the LAN and SAN: on the one hand with 10 Gbit/s iSCSI, on the other with FCoE. iSCSI lets you keep the organization's existing LAN core switches but requires complete replacement of the storage systems in the SAN (10G iSCSI interfaces are needed). FCoE preserves the investment in the FC SAN but requires complete replacement of the core and access switches. Both technologies provide convergence and savings on switches and adapters, but they are economically justified only when one of the data center subsystems is being replaced completely.

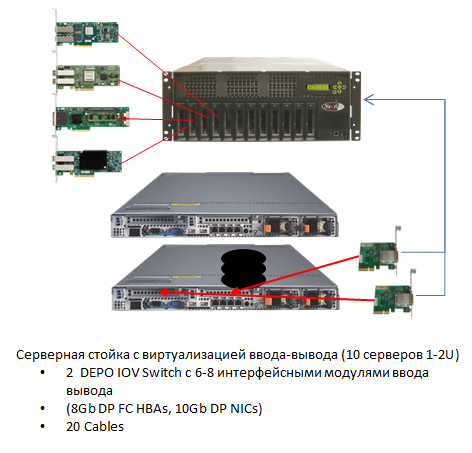

Architecture using innovative server I/O virtualization technology

If you have already invested heavily in the data center (both the SAN and the LAN) but need more server resources, you can use a new I/O virtualization technology that switches traffic at the PCI-E bus level. This is the most natural switching method for servers, because in modern servers based on Intel Xeon E5 processors the PCI-E controller is integrated directly into the processor. Such processors can handle I/O requests directly in their own cache without going to RAM, which improves I/O performance by up to 80%.

The I/O virtualization switch (hereinafter IOV, Input/Output Virtualization) has ports for connecting servers over the PCI-E bus and interface modules for connecting to the LAN and SAN. The drivers of hypervisors (virtual machine monitors) and operating systems recognize these interface modules as standard LAN and SAN adapters (in effect, the adapters become shared by the whole server farm), and LAN and SAN bandwidth is distributed dynamically. Bandwidth allocation, prioritization, and traffic aggregation are also supported. Dynamic sharing of trunk link bandwidth yields savings, for example by reducing the number of interfaces and, consequently, the number of ports occupied on the core switches. This protects existing investment and lowers the cost of ownership. In addition, latency to the LAN core and to storage is significantly reduced because the TOR switching stage is eliminated.

A description of the DEPO Switch IOV device can be found here.
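How a shared uplink might be divided dynamically among servers can be sketched with weighted max-min fair sharing, a common textbook scheme. This is not a description of the DEPO Switch IOV's internal algorithm, which the article does not specify; the function name, server names, demands, and weights are hypothetical.

```python
def share_bandwidth(capacity, demands, weights=None):
    """Weighted max-min fair allocation of `capacity` among consumers.

    demands: dict name -> requested bandwidth (Gbps)
    weights: dict name -> priority weight (defaults to 1 for everyone)
    Returns a dict name -> granted bandwidth (Gbps).
    """
    weights = weights or {name: 1.0 for name in demands}
    allocation = {name: 0.0 for name in demands}
    active = {name for name, demand in demands.items() if demand > 0}
    remaining = float(capacity)

    while active and remaining > 1e-9:
        total_weight = sum(weights[name] for name in active)
        # Consumers whose demand fits inside their weighted share
        # of the remaining capacity are satisfied in full.
        satisfied = {
            name for name in active
            if demands[name] <= remaining * weights[name] / total_weight
        }
        if not satisfied:
            # Nobody can be fully satisfied: split what is left by weight.
            for name in active:
                allocation[name] = remaining * weights[name] / total_weight
            break
        for name in satisfied:
            allocation[name] = demands[name]
            remaining -= demands[name]
        active -= satisfied

    return allocation


# Hypothetical farm: three servers sharing one 10 Gbps uplink.
print(share_bandwidth(10, {"srv-a": 2, "srv-b": 6, "srv-c": 8}))
# Same link, two saturating servers, srv-a given three times the priority.
print(share_bandwidth(10, {"srv-a": 10, "srv-b": 10},
                      weights={"srv-a": 3, "srv-b": 1}))
```

In the first call the lightly loaded server gets everything it asks for (2 Gbps) and the two heavier ones split the rest evenly (4 Gbps each); in the second, the weights produce a 7.5 / 2.5 Gbps split. Unused bandwidth is never reserved for an idle server, which is the point of dynamic distribution.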

Full DEPO Switch IOV specifications

Chassis

Ports for connecting servers: 15 non-blocking PCIe x8 ports.

Performance per port: 20 Gbps.

Cables for connecting to servers: PCIe x8 (1, 2 or 3 m).

Slots for I/O modules: 8 slots that support any combination of FC and 10GE Ethernet I/O modules (support for SAS2 and GPU I/O modules is planned).

10 Gigabit Ethernet I/O Module

I/O module interfaces: two 10G Ethernet ports (SFP+ connector; 10GBASE-SR modules and DA cables supported).

MAC address allocation: each virtual network adapter (vNIC) receives a unique MAC address; MAC addresses migrate dynamically when a vNIC moves between physical servers.

Offload capabilities: Tx/Rx IP, SCTP, TCP, and UDP checksum offload, Tx TCP segmentation offload, IPsec offload, and MACsec (IEEE 802.1AE).

VLAN support: supports IEEE 802.1Q VLANs and trunks.

IPv6 support.

Interprocess communication (IPC) support.

Quality of Service (QoS): yes.

8 Gigabit Fibre Channel I/O Module

I/O module interfaces: two 8 Gb Fibre Channel ports (SFP+, LC connector, multimode).

Supported protocols: FC-PI-4, FC-FS-2, FC-FS-2/AM1, FC-LS, FC-AL-2, FC-GS-6, FC-FLA, FC-PLDA, FC-TAPE, FC-DA, FCP through FCP-4, SBC-3, FC-SP, FC-HBA, and SMI-S v1.1.

World Wide Name support: each virtual HBA (vHBA) receives a unique WWN; WWNs can move dynamically between physical servers.

Boot from SAN: supported; a vHBA can be configured to boot from SAN.

Supported operating systems and hypervisors

Windows 2008 (32-bit and 64-bit), Windows 2008 R2.

Linux: RHEL 5.3, 5.4, 5.5, and 6.0; CentOS; SLES 11.x.

VMware ESXi 4.1 update 1.

Management console

Management through a dedicated Ethernet port 10/100/1000 Mbps.

Management Interface:

- Web-based GUI management (Firefox 3.5, Firefox 4, IE8, IE9, Chrome 11+).

- CLI via Telnet / SSH and Open API for integration with other management systems.

Lights-out management: SNMPv3 trap configuration with 2 user accounts and 3 trap destinations.

Environment monitoring and chassis alerts.

High availability

Redundant, hot-swappable power supplies and fan modules (FRUs).

10 Gbps Ethernet NIC teaming and failover.

Fibre Channel multipathing (MPIO).

Power Options

110–240 V, 47–63 Hz.

Maximum power consumption: 600 watts.

Overall dimensions and weight

172.7 x 482.6 x 508 (H x W x D, mm)

31.8 kg

Operating conditions

Operating temperature: +10 °C to +35 °C.

Permissible humidity: 8% to 80% (non-condensing).

Altitude: 0 to 2000 m above sea level.
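The chassis figures above allow a quick back-of-the-envelope check of how server-side bandwidth compares with uplink bandwidth. The sketch below uses only the port counts and nominal rates from the specification; the module mixes passed in are hypothetical examples, and nominal rates ignore encoding and protocol overhead.

```python
# Figures taken from the specification above.
SERVER_PORTS = 15
GBPS_PER_SERVER_PORT = 20
IO_SLOTS = 8
PORTS_PER_MODULE = 2
NOMINAL_GBPS = {"10GE": 10, "8G FC": 8}  # nominal per-port rates


def uplink_capacity(modules):
    """Total nominal uplink bandwidth (Gbps) for a mix of I/O modules.

    modules: dict module type -> number of installed modules.
    """
    if sum(modules.values()) > IO_SLOTS:
        raise ValueError("chassis has only %d I/O slots" % IO_SLOTS)
    return sum(count * PORTS_PER_MODULE * NOMINAL_GBPS[kind]
               for kind, count in modules.items())


def oversubscription(modules):
    """Ratio of server-side bandwidth to uplink bandwidth."""
    return SERVER_PORTS * GBPS_PER_SERVER_PORT / uplink_capacity(modules)


# Hypothetical configurations: all-Ethernet, and a half/half LAN + SAN mix.
for mix in ({"10GE": 8}, {"10GE": 4, "8G FC": 4}):
    print(mix, uplink_capacity(mix), "Gbps uplink,",
          round(oversubscription(mix), 2), ": 1 oversubscription")
```

Even a fully populated chassis offers less uplink bandwidth than the 300 Gbps available on the server side, so the design relies on servers not saturating their links simultaneously, as any access-layer switch does.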

How do you integrate a server farm with I/O virtualization into your existing data center infrastructure?

Adding a server farm with I/O virtualization differs little from adding servers with physical adapters. The only differences are in how IOV devices and TOR switches are configured. IOV has an advantage here: when virtual servers are moved (live-migrated) within a server farm connected to an IOV device, no additional configuration is required. IOV also lets you split administration of the server farm and of the network core between different administrative groups. If, by the time the new server farm is introduced, the core switches have no free ports but still have performance headroom, a costly core upgrade can be avoided: it suffices to replace the TOR switches in the existing racks with IOV devices and divide the existing core switch ports among them. Connecting existing servers to IOV has only one requirement: one or two free PCI-E 1.0 or 2.0 slots with an x8 connector.

How can you get to know the new I/O virtualization technology today?

We offer training that covers deploying a test farm, connecting it to a LAN, a SAN, and VMware vCenter, moving a virtual machine between farm servers, transferring settings to a second I/O switch, backing up and restoring those settings, adding a new physical server, and much more. After the training we can hand over equipment for testing, including new servers that are preconfigured and ready to connect to the customer's data center (two demo units are available).

What are the advantages of I/O virtualization devices over other solutions?

The first advantage is that this is probably the most effective way to increase the maximum number of virtual machines that can run on a single physical server, thanks to the high PCI-E bandwidth and the interface aggregation capabilities (a 2.5-fold gain compared with 10GbE adapters).

The second significant advantage is a more than twofold reduction in network I/O latency. This can be decisive for using IOV in data center subsystems that host financial systems (transaction processing time falls because network traffic between servers travels over the high-speed PCI-E bus), as well as in telecommunication systems, where it helps deliver the required quality of service.

Other benefits

- Simpler administration. With this device, the system administrator can assign any I/O interface to any server with one click. Dynamic resource allocation helps keep the configuration matched to changing load conditions.

- Fewer intermediate switches, network adapters, and cables overall, which can reduce capital and operating costs (CAPEX by up to 40% and OPEX by up to 60%).

In addition, the investment in existing LAN and SAN equipment is protected when a new server farm is added, and other I/O modules can be used. Interface modules for connecting to SAS2 networks and for sharing GPU resources are planned for release in the near future.

When building a private cloud from scratch on VMware and Microsoft virtualization platforms, I/O virtualization is the right choice, because it lets you take full advantage of everything that modern virtualization and cloud computing platforms offer.

DEPO Switch IOV devices are used, in particular, in the standard DEPO Cloud 4000 solution for building private clouds that serve more than 2,000 workplaces.

senko ,

Lead Product Manager DEPO Computers

Source: https://habr.com/ru/post/158033/
