How we automated testing of applications on canvas

My colleagues have already written about the development of TeamLab online editors on canvas. Today, we will look at the workflow through the eyes of testing specialists, since not only the product was innovative from the developers' point of view due to the selected technology, but the task of verifying the quality of the product turned out to be new, not solved by anyone before.

My colleagues and I spent the last 8 months of my life testing a completely new document editor written in HTML5. Testing innovative products is difficult, if only because no one has done this before us.

To implement all our ideas, we needed third-party tools:

')

And then it went the most important and interesting. Pressing the button and entering the text is quite simple, but how to verify what was created? How to check that everything done by automated tests is done correctly?

Many copies were broken in the process of finding the desired method of comparison with the standard. As a result, we came to the conclusion that information about the actions taken and, accordingly, about the success of passing the tests can be obtained in two ways:

1. Using the API of the application itself.

2. Using your own document parser.

Positive sides:

Negative sides:

Positive sides:

Negative sides:

As a result, we chose both options, but used them in different proportions: about 90% of the test verification is implemented by the parser and only 10% by the API. This allowed us to quickly write the first tests using the API and gradually develop functional tests in conjunction with the development of the parser.

DOCX was chosen as the format for parsing. This is due to the fact that:

We could not find a third-party DOCX parsing library that would support the required set of functions. Subsequently, a similar situation arose with a different format, when, when testing the table editor, we needed the XLSX parser.

Only one specialist is responsible for the parser. The basic backbone of the functionality has been implemented for about a month, but is still constantly maintained - fixes bugs (which, by the way, almost no existing functionality remains), and also adds support for the new functionality introduced in the document editor.

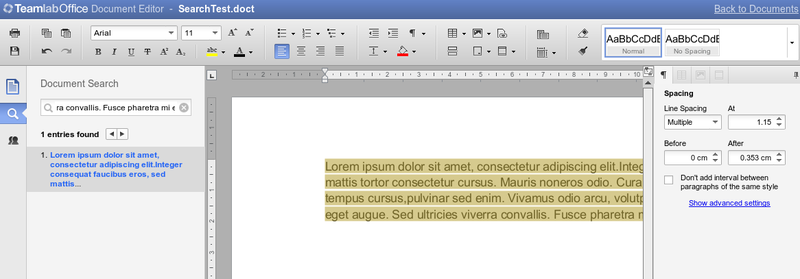

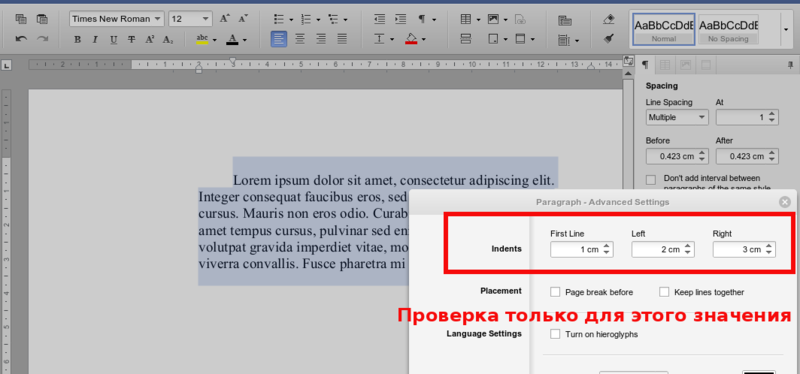

Example:

Consider a simple example: a single paragraph document with a text size of 20, with a Times New Roman font and Bold and Italic options. For such a document, the parser will return the following structure:

A document consists of a set of elements (elements) - these can be paragraphs of text, images, tables.

Each paragraph consists of several character_styles - pieces of text with exactly the same properties in this segment. Thus, if we have all the text in one paragraph typed in the same style, then it will have only one character_style. If the first word of a paragraph is, for example, bold and the rest in italics, then the paragraph will consist of two character_style: one for the first word, the second for all the others.

Inside character_style are all the necessary text properties, such as size = 20 - font size, font = "Times New Roman" - font type, font_style - class with font style properties (Bold, Italic, Underlined, Strikeout), as well as all other properties document.

As a result, in order to verify this document, we should write this code:

doc.elements.first.character_styles_array.first.size.should == 20

doc.elements.first.character_styles_array.first.font.should == "Times New Roman"

doc.elements.first.character_styles_array.first.font_style.should == FontStyle.new (true, true, false, false)

In order to have a representative selection of files in DOCX format, it was decided that you also need to write a DOCX file generator - an application that would allow you to create documents with arbitrary content and with arbitrary parameter values. For this, too, was selected one specialist. The generator was not considered as a key part of the testing system, but rather as some kind of auxiliary utility that would allow us to increase test coverage.

Based on its development, the following conclusions were made:

When creating or automating tests, do not try to cover all possible format functionality at once. This will create additional difficulties, especially at an early stage of developing the application under test.

It is difficult to distinguish incorrect results from tests, the functionality of which is not yet supported by the application.

And as a consequence of the previous points: the generator should be written closer to the end of the development of the tested application (and not at the beginning, as we did), when the maximum number of functions in the editor will be implemented.

Notwithstanding the foregoing, the generator allowed us to find errors that were almost impossible to find with manual testing, for example, incorrect processing of parameters whose values are not included in the set of acceptable values.

The basis for the framework was taken by Selenium Webdriver. On Ruby, there is an excellent alternative for it - Watir, but it so happened that at the time of the creation of the framework we did not know about it. The only time we regretted not using Watir was when integrating tests into the most favorite and best browser, IE. Selenium simply refused to click on the buttons and do something, in the end I had to write a crutch so that in case of running the tests on IE, Watir methods were called.

Before the development of this framework, testing of web applications was built in Java, and all XPath elements and other object identifiers were moved to a separate xml file, so that when changing XPath, it was not necessary to recompile the whole project.

Ruby didn’t have such a problem with recompilation, therefore in our next automation project we have abandoned a single XML file.

The functions in Selenium Webdriver were overloaded because I had to work with a file containing 1200 xPath elements. We reworked them:

It was

It became

In our opinion, it is very important before starting automation, analyzing object identifiers on a page, and if you can see that they are generated automatically (id like “docmenu-1125”), be sure to ask developers to add id that will not change, otherwise will have to redo the XPath with each new build.

More specific functions have been added to the SeleniumCommands class. Some of them were already present in Watir by default, but then we did not know it yet, for example, by clicking on the ordinal number of one of several elements, receiving one attribute from several elements at once. It is important that only one testing specialist be allowed to work with such a key class as SeleniumCommands, otherwise if an even insignificant error occurs, the whole project may fall, and, of course, this will happen exactly when it is necessary to drive out all the tests as quickly as possible.

A higher level of the framework is to work with the program menu, application interface. This is a very large (about 300) set of functions, divided into classes. Each of them implements a specific function in the interface that is accessible to the user (for example, font selection, line spacing, etc.).

All these functions have the most simple visual names and take arguments in the simplest form (for example, when there is an opportunity to transfer not any class, but just String objects, you must pass them). This makes writing tests much easier so that even manual testers without programming experience can handle it. This, by the way, is not a joke and not an exaggeration: we have work tests written by a man who is a complete zero in programming.

In the process of writing these functions, the first category of Smoke tests was created, the script of which for all functions looks like this:

1. A new document is being created.

2. One (exactly one) written function is called, to which some concrete correct value is transferred (not random, but concrete and correct, in order to discard the functionality that works correctly only on a part of possible values)

3. Download the document

4. Verify

5. Recorded results in the reporting system

6. PROFIT!

So, summing up, let's deal with the categories of tests that are present in our project.

1. Smoke tests

Their goal is to test the product on some small amount of input data and, most importantly, to verify that the Framework itself works correctly on the new build (whether xPath elements have changed, if the logic of building the application interface menu has changed.

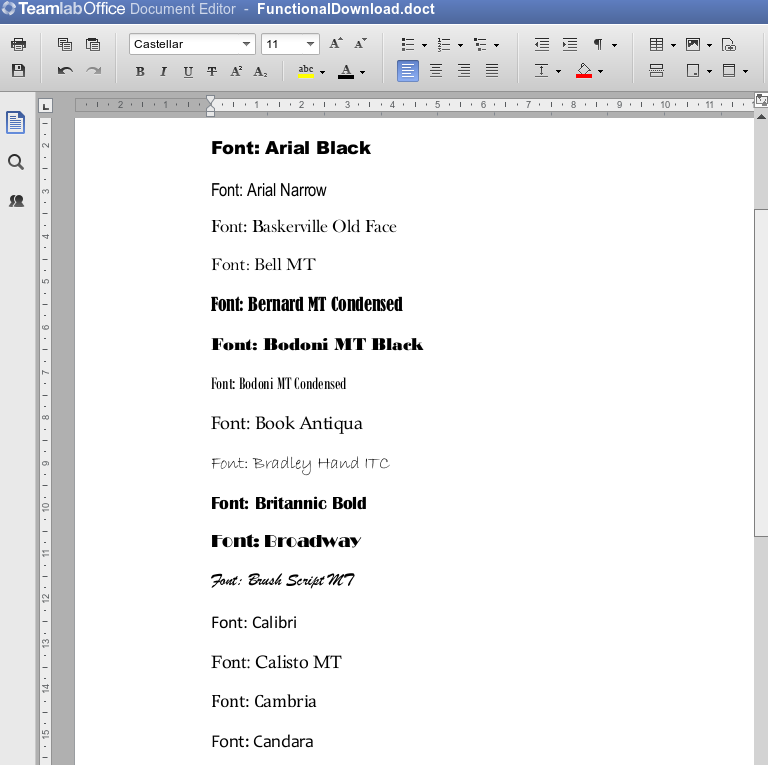

This category of tests works well, but sometimes the tests hang at odd moments while waiting for some interface elements. When repeating the test script manually, it turned out that the tests were stuck due to errors in the menu interface, for example, the list itself did not appear when the button to open the font list was pressed. And so there was a second category of tests.

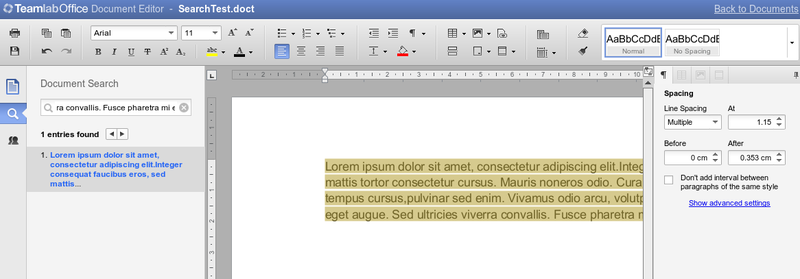

2. Interface Tests

This is a very simple set of tests whose task is to verify that when you click on all controls, an action occurs for which this control is responsible (at the interface level). That is, if we press the Bold button, we do not check whether the selection has worked in bold, we only check that the button has become displayed as pressed. Similarly, we check that all drop-down menus and all lists are opened.

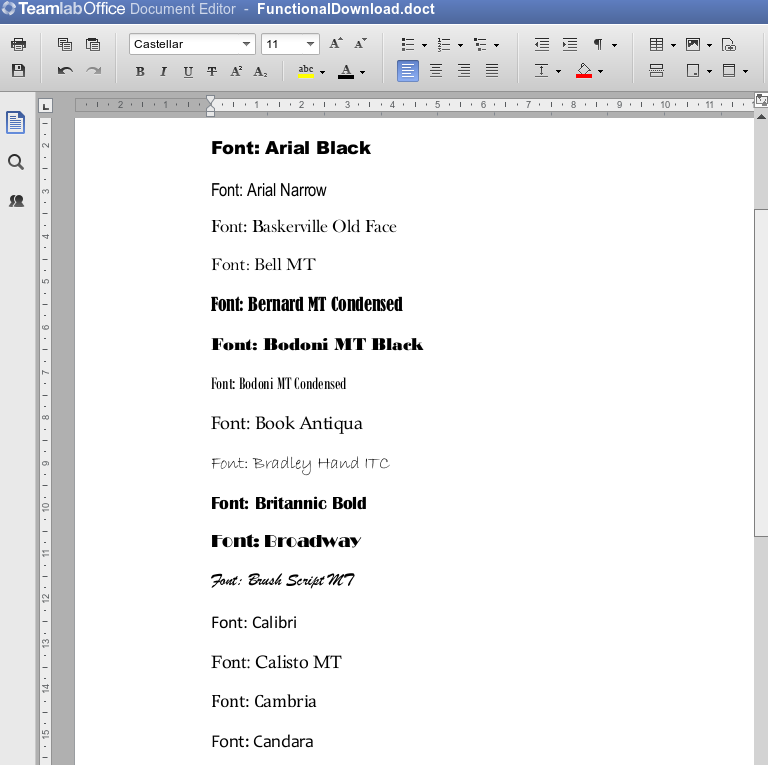

3. Enumerate all parameter values.

For each parameter, all possible values are set (for example, all font sizes are sorted), and then this is verified. In a single document, only changes of one parameter should be present, no bundles are tested. Subsequently, another test subcategory emerged from these tests.

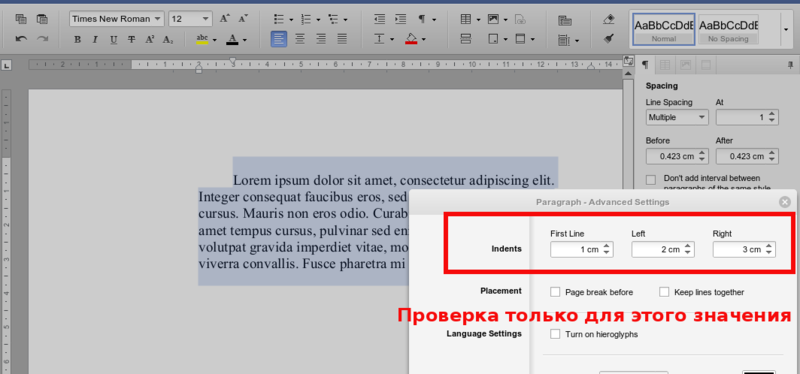

3.1 Visual verification of rendering

In the process of running tests on the enumeration of all parameter values, we realized that it was necessary to verify the rendering of all parameter values visually. The test sets the value of the parameter (for example, the font is selected), makes a screenshot. At the exit we have a group of screenshots (about 2 thousand) and a disgruntled handbrake, who will have to watch a large slideshow.

Subsequently, the verification of the rendering was done using a system of standards, which allowed us to track the regression.

4. Functional tests

One of the most important categories of tests. Covers all the functionality of the application. For best results, scripting should be done by test designers.

5. Pairwise

Tests for checking parameter bundles. For example, a complete check of typefaces with all styles and sizes (two or more functions with parameters). Generated automatically. They run very rarely due to their duration (at least 4-5 hours, some even longer).

6. Regular tests on the production server

The latest category of tests, which appeared after the first release. Basically, this is a check of the correctness of the operation of the communication components, namely the fact that files of all formats are opened for editing and correctly downloaded to all formats. Tests are short, but run twice a day.

At the beginning of the project, all reporting was collected manually. Tests were run directly from RubyMine from memory (or marks, comments in the code), it was determined whether the new build became worse or worse than the previous one, and in words the results were transferred to the test manager.

But when the number of tests grew (which happened quickly enough), it became clear that it could not last long. The memory is not rubber, it is very inconvenient to keep a lot of data on pieces of paper or to enter it manually in an electronic tablet. Therefore, it was decided to choose Testrail as the reporting server, which is almost ideally suited for our task - to keep reports on each version of the editor.

Reporting is added via the testrail API. At the beginning of each test on Rspec, a line is added that is responsible for initializing TestRun to Testrail. If a new test is written that is not yet present on Testrail, it is automatically added there. Upon completion of the test, the result is automatically determined and added to the database.

In essence, the entire reporting process is fully automated; you should only go to Testrail to get a result. It looks like this:

In conclusion, some statistics:

Project age - 1 year

The number of tests - about 2000

The number of cases completed - about 350 000

Team - 3 people

The number of found bugs - about 300

My colleagues and I spent the last 8 months of my life testing a completely new document editor written in HTML5. Testing innovative products is difficult, if only because no one has done this before us.

To implement all our ideas, we needed third-party tools:

')

- Ruby language for automation. It is scripted, works fine with large amounts of data, and, I will not dissemble, there was little experience with it.

- Gem rspec for test run

- driver Selenium Webdriver to work with browsers

1. Verification

Many copies were broken in the process of finding the desired method of comparison with the standard. As a result, we came to the conclusion that information about the actions taken and, accordingly, about the success of passing the tests can be obtained in two ways:

1. Using the API of the application itself.

2. Using your own document parser.

API

Positive sides:

- Quick results, because there is no need for unnecessary access to the server when downloading documents.

- The ability to work directly with the functions of the API interface. For us, this was the best solution - we work in a friendly climate with programmers, and they quickly provided us with these functions.

Negative sides:

- The inability to clearly locate the bug in the program, i.e. in the code of editors or when calling / implementing an interface, ie in the interface code

- Dependence on programmers. If the developers have such a situation, the API methods will change a lot or stop working altogether, the automatic testing process will also arise. But server-side API fixing can be far from a priority task (considering that this is not a public API and is used only for tests).

- The impossibility of automating the testing of all the functionality in the existing application architecture.

Parsing the downloaded document

Positive sides:

- Reliable approach, because we check the final result of the application.

- Independence from the developers. We wrote the opening of the docx format with our own tools, relatively speaking we created our own small document converter.

- The ability to implement tests of any levels of complexity, because all existing application functionality is supported.

Negative sides:

- Difficulty in implementation.

Final Verification

As a result, we chose both options, but used them in different proportions: about 90% of the test verification is implemented by the parser and only 10% by the API. This allowed us to quickly write the first tests using the API and gradually develop functional tests in conjunction with the development of the parser.

DOCX was chosen as the format for parsing. This is due to the fact that:

- In the editor itself, the support for the DOCX format was implemented best of all, the maximum number of functions was supported and the preservation into it was of the highest quality and most reliable.

- The DOCX format itself, unlike DOC, is more open and has a simpler structure. In essence, this is a ZIP archive with XML files.

We could not find a third-party DOCX parsing library that would support the required set of functions. Subsequently, a similar situation arose with a different format, when, when testing the table editor, we needed the XLSX parser.

Only one specialist is responsible for the parser. The basic backbone of the functionality has been implemented for about a month, but is still constantly maintained - fixes bugs (which, by the way, almost no existing functionality remains), and also adds support for the new functionality introduced in the document editor.

Example:

Consider a simple example: a single paragraph document with a text size of 20, with a Times New Roman font and Bold and Italic options. For such a document, the parser will return the following structure:

A document consists of a set of elements (elements) - these can be paragraphs of text, images, tables.

Each paragraph consists of several character_styles - pieces of text with exactly the same properties in this segment. Thus, if we have all the text in one paragraph typed in the same style, then it will have only one character_style. If the first word of a paragraph is, for example, bold and the rest in italics, then the paragraph will consist of two character_style: one for the first word, the second for all the others.

Inside character_style are all the necessary text properties, such as size = 20 - font size, font = "Times New Roman" - font type, font_style - class with font style properties (Bold, Italic, Underlined, Strikeout), as well as all other properties document.

As a result, in order to verify this document, we should write this code:

doc.elements.first.character_styles_array.first.size.should == 20

doc.elements.first.character_styles_array.first.font.should == "Times New Roman"

doc.elements.first.character_styles_array.first.font_style.should == FontStyle.new (true, true, false, false)

2. Document Generator

In order to have a representative selection of files in DOCX format, it was decided that you also need to write a DOCX file generator - an application that would allow you to create documents with arbitrary content and with arbitrary parameter values. For this, too, was selected one specialist. The generator was not considered as a key part of the testing system, but rather as some kind of auxiliary utility that would allow us to increase test coverage.

Based on its development, the following conclusions were made:

When creating or automating tests, do not try to cover all possible format functionality at once. This will create additional difficulties, especially at an early stage of developing the application under test.

It is difficult to distinguish incorrect results from tests, the functionality of which is not yet supported by the application.

And as a consequence of the previous points: the generator should be written closer to the end of the development of the tested application (and not at the beginning, as we did), when the maximum number of functions in the editor will be implemented.

Notwithstanding the foregoing, the generator allowed us to find errors that were almost impossible to find with manual testing, for example, incorrect processing of parameters whose values are not included in the set of acceptable values.

3. Framework for running tests

Interaction with Selenium

The basis for the framework was taken by Selenium Webdriver. On Ruby, there is an excellent alternative for it - Watir, but it so happened that at the time of the creation of the framework we did not know about it. The only time we regretted not using Watir was when integrating tests into the most favorite and best browser, IE. Selenium simply refused to click on the buttons and do something, in the end I had to write a crutch so that in case of running the tests on IE, Watir methods were called.

Before the development of this framework, testing of web applications was built in Java, and all XPath elements and other object identifiers were moved to a separate xml file, so that when changing XPath, it was not necessary to recompile the whole project.

Ruby didn’t have such a problem with recompilation, therefore in our next automation project we have abandoned a single XML file.

The functions in Selenium Webdriver were overloaded because I had to work with a file containing 1200 xPath elements. We reworked them:

It was

get_attribute(xpath, attribute) @driver.find_element(:xpath, xpath_value) attribute_value = element.attribute(attribute) return attribute_value end get_attribute('//div/div/div[5]/span/div[3]', 'name') It became

get_attribute(xpath_name, attribute) xpath_value =@@xpaths.get_value(xpath_name) elementl = @driver.find_element(:xpath, xpath_value) attribute_value = element.attribute(attribute) return attribute_value end get_attribute('admin_user_name_xpath', 'name') In our opinion, it is very important before starting automation, analyzing object identifiers on a page, and if you can see that they are generated automatically (id like “docmenu-1125”), be sure to ask developers to add id that will not change, otherwise will have to redo the XPath with each new build.

More specific functions have been added to the SeleniumCommands class. Some of them were already present in Watir by default, but then we did not know it yet, for example, by clicking on the ordinal number of one of several elements, receiving one attribute from several elements at once. It is important that only one testing specialist be allowed to work with such a key class as SeleniumCommands, otherwise if an even insignificant error occurs, the whole project may fall, and, of course, this will happen exactly when it is necessary to drive out all the tests as quickly as possible.

Menu interaction

A higher level of the framework is to work with the program menu, application interface. This is a very large (about 300) set of functions, divided into classes. Each of them implements a specific function in the interface that is accessible to the user (for example, font selection, line spacing, etc.).

All these functions have the most simple visual names and take arguments in the simplest form (for example, when there is an opportunity to transfer not any class, but just String objects, you must pass them). This makes writing tests much easier so that even manual testers without programming experience can handle it. This, by the way, is not a joke and not an exaggeration: we have work tests written by a man who is a complete zero in programming.

In the process of writing these functions, the first category of Smoke tests was created, the script of which for all functions looks like this:

1. A new document is being created.

2. One (exactly one) written function is called, to which some concrete correct value is transferred (not random, but concrete and correct, in order to discard the functionality that works correctly only on a part of possible values)

3. Download the document

4. Verify

5. Recorded results in the reporting system

6. PROFIT!

4. Tests

So, summing up, let's deal with the categories of tests that are present in our project.

1. Smoke tests

Their goal is to test the product on some small amount of input data and, most importantly, to verify that the Framework itself works correctly on the new build (whether xPath elements have changed, if the logic of building the application interface menu has changed.

This category of tests works well, but sometimes the tests hang at odd moments while waiting for some interface elements. When repeating the test script manually, it turned out that the tests were stuck due to errors in the menu interface, for example, the list itself did not appear when the button to open the font list was pressed. And so there was a second category of tests.

2. Interface Tests

This is a very simple set of tests whose task is to verify that when you click on all controls, an action occurs for which this control is responsible (at the interface level). That is, if we press the Bold button, we do not check whether the selection has worked in bold, we only check that the button has become displayed as pressed. Similarly, we check that all drop-down menus and all lists are opened.

3. Enumerate all parameter values.

For each parameter, all possible values are set (for example, all font sizes are sorted), and then this is verified. In a single document, only changes of one parameter should be present, no bundles are tested. Subsequently, another test subcategory emerged from these tests.

3.1 Visual verification of rendering

In the process of running tests on the enumeration of all parameter values, we realized that it was necessary to verify the rendering of all parameter values visually. The test sets the value of the parameter (for example, the font is selected), makes a screenshot. At the exit we have a group of screenshots (about 2 thousand) and a disgruntled handbrake, who will have to watch a large slideshow.

Subsequently, the verification of the rendering was done using a system of standards, which allowed us to track the regression.

4. Functional tests

One of the most important categories of tests. Covers all the functionality of the application. For best results, scripting should be done by test designers.

5. Pairwise

Tests for checking parameter bundles. For example, a complete check of typefaces with all styles and sizes (two or more functions with parameters). Generated automatically. They run very rarely due to their duration (at least 4-5 hours, some even longer).

6. Regular tests on the production server

The latest category of tests, which appeared after the first release. Basically, this is a check of the correctness of the operation of the communication components, namely the fact that files of all formats are opened for editing and correctly downloaded to all formats. Tests are short, but run twice a day.

5. Reporting

At the beginning of the project, all reporting was collected manually. Tests were run directly from RubyMine from memory (or marks, comments in the code), it was determined whether the new build became worse or worse than the previous one, and in words the results were transferred to the test manager.

But when the number of tests grew (which happened quickly enough), it became clear that it could not last long. The memory is not rubber, it is very inconvenient to keep a lot of data on pieces of paper or to enter it manually in an electronic tablet. Therefore, it was decided to choose Testrail as the reporting server, which is almost ideally suited for our task - to keep reports on each version of the editor.

Reporting is added via the testrail API. At the beginning of each test on Rspec, a line is added that is responsible for initializing TestRun to Testrail. If a new test is written that is not yet present on Testrail, it is automatically added there. Upon completion of the test, the result is automatically determined and added to the database.

In essence, the entire reporting process is fully automated; you should only go to Testrail to get a result. It looks like this:

In conclusion, some statistics:

Project age - 1 year

The number of tests - about 2000

The number of cases completed - about 350 000

Team - 3 people

The number of found bugs - about 300

Source: https://habr.com/ru/post/156979/

All Articles