Intel PCI 910 PCI-E SSD Features

Previously, for a long time, we used intel 320 series to cache random IO. It was moderately fast, in principle, allowed to reduce the number of spindles. At the same time, ensuring high write performance required, to put it mildly, an unreasonable amount of SSDs.

Finally, at the end of the summer, the Intel 910 came to us. To say that I am deeply impressed - to say nothing. All my previous skepticism about the effectiveness of the SSD to write dispelled.

However, first things first.

')

The Intel 910 is a PCI-E card of fairly solid footprint (to match the discrete graphics cards). However, I don’t like unpacks, so let's get to the most important thing - performance.

The numbers are real, yes, this is a hundred thousand IOPSs for random recording. Details under the cut.

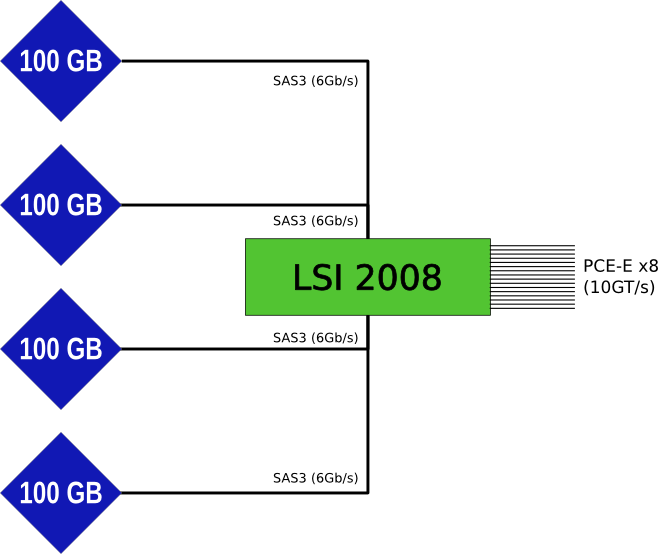

But first, we'll play Alchemy Classic, in which dragging one LSI over 4 Hitachi will result in Intel.

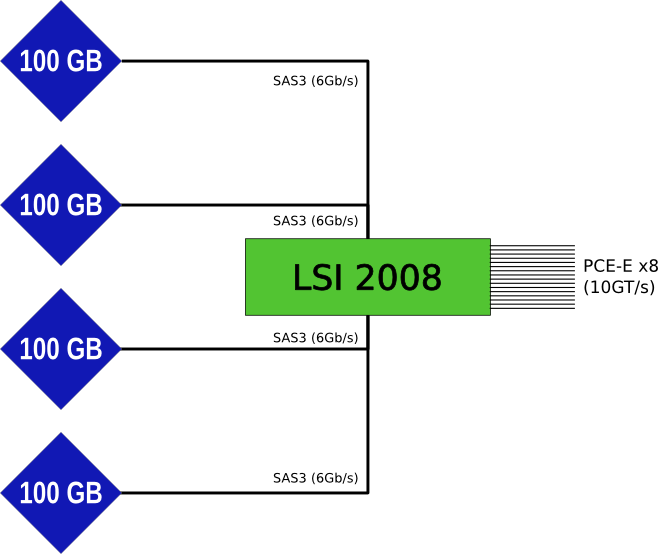

The device is a specially adapted LSI 2008, each port of which is connected to one SSD device with a capacity of 100GB. In fact, all the connections are made on the board itself, so the connection is visible only when analyzing the relationship of the devices.

The approximate scheme is as follows:

Note that the LSI controller is overwhelmed very much - it does not have its own BIOS, it does not know how to boot. In lspci, it looks like this:

The structure of the device (4 SSD's for 100GB) implies that the user decides how to use the device — raid0 or raid1 (for thin connoisseurs — raid5, although with high probability this will be the biggest nonsense that can be done with a device of this class) .

It is serviced by the mpt2sas driver.

4 scsi devices are connected to it, which declare themselves hitachi:

They do not support any extended sata-commands (as well as most extended SAS service commands) - only the minimum necessary for full-fledged work as a block device. Although, fortunately, it supports sg_format with the resize option, which allows you to make full reservations for less impact of housekeeping with active recording.

In total, we did 5 different tests to evaluate the characteristics of the device:

In general, these tests are of no interest to anyone, to ensure the “flow” of HDDs are much better suited, since they have higher capacity, lower price and very decent linear speed. A simple server with 8-10 SAS disks (or even fast SATA) in raid0 is quite capable of clogging a ten-gigabit channel.

But, after all, here are the indicators:

For maximum performance, we set 2 streams of 256k per device. Final performance: 1680MB / s, without hesitation (the deviation was only 40 μs). Lantency at the same time was 1.2ms (for block 256k, this is more than good).

In fact, this means that, alone, this device for reading is capable of completely “heading in” to the 10 Gbit / s channel and showing more than impressive results on a 20 Gbit / s channel. In this case, it will show a constant speed regardless of load. Note that Intel itself promises up to 2GB / s.

To get the highest digits per entry, we had to reduce the queue depth - one thread per write to each device. The remaining parameters were similar (block 256k)

Peak speed (second samples) was 1800MB / s, the minimum - about 600MB / s. The average write speed of 100% was 1228MB / s. A sudden decrease in recording speed is a birth injury of SSD due to housekeeping. In this case, the drop was up to 600MB / s (about three times), which is better than in older generations of SSDs, where degradation could go up to 10-15 times. Intel promises a speed of about 1.6GB / s with linear recording.

Of course, linear performance does not interest anyone. Everyone is interested in performance under heavy load. And what could be the hardest for SSD? Writing in 100% of the volume, in small blocks, in many streams, without interruption for several hours. On the 320th series, this led to a performance drop from 2000 IOPS to 300.

Test parameters: raid0 from 4 parts of the device, linux-raid (3.2) is made, 64-bit. Each task with randread or randwrite mode, for mixed load, 2 tasks are described.

Note, unlike many utilities that correlate the number of read and write operations at a fixed percentage, we run two independent streams, one of which reads all the time, the other writes all the time (this allows the equipment to be loaded more completely - if the device has problems with writing , it can still continue to serve the read). The remaining parameters: direct = 1, buffered = 0, io mode - libaio, block 4k.

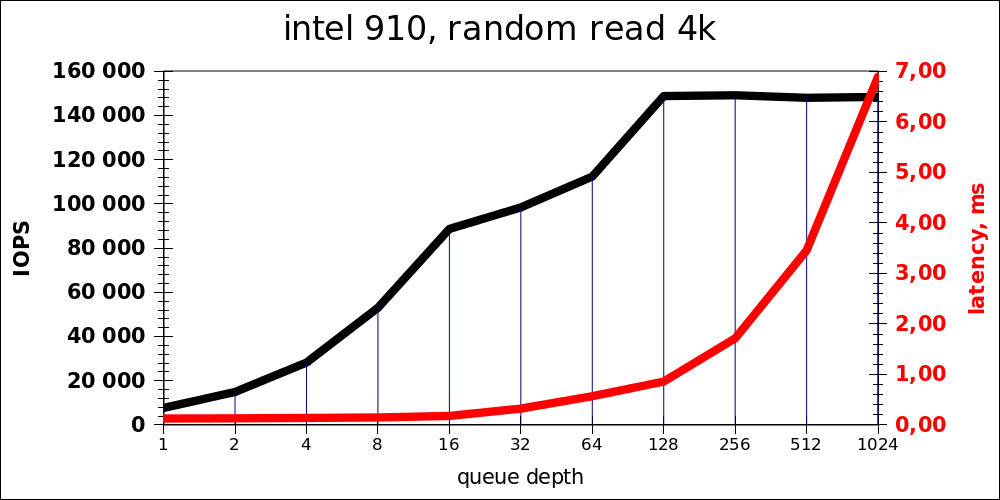

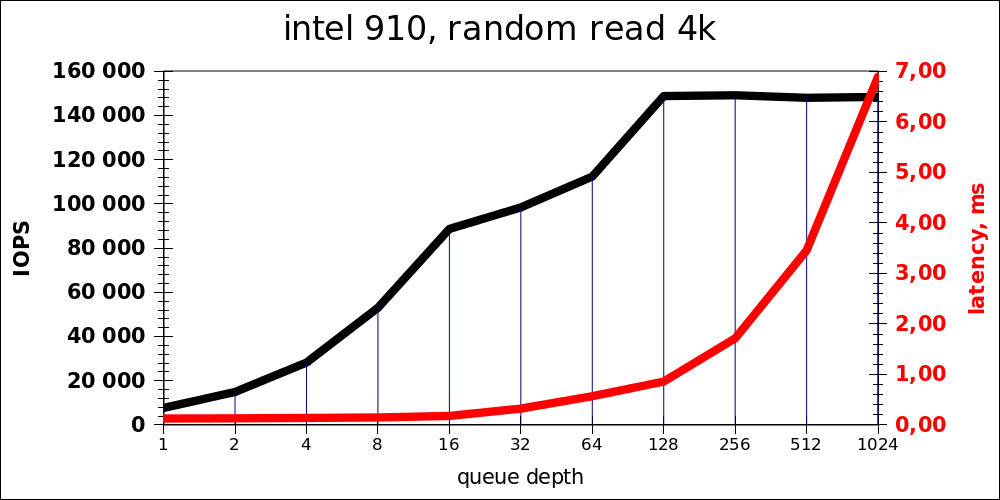

It is noticeable that the optimal load is something of the order of 16-32 operations simultaneously. The queue length of 1024 is added from sports interest, of course, this is not an adequate performance for the product (but even in this case, the latency is obtained at the level of a fairly fast HDD).

It can also be noted that the point at which the speed practically stops growing is 128. Taking into account that there are 4 pieces inside, this is the usual queue depth of 32 for each controller.

Similarly, the optimum is in the region of 16-32 simultaneous operations, by a very significant (10-fold growth) latency, you can “squeeze” another 10k IOPS.

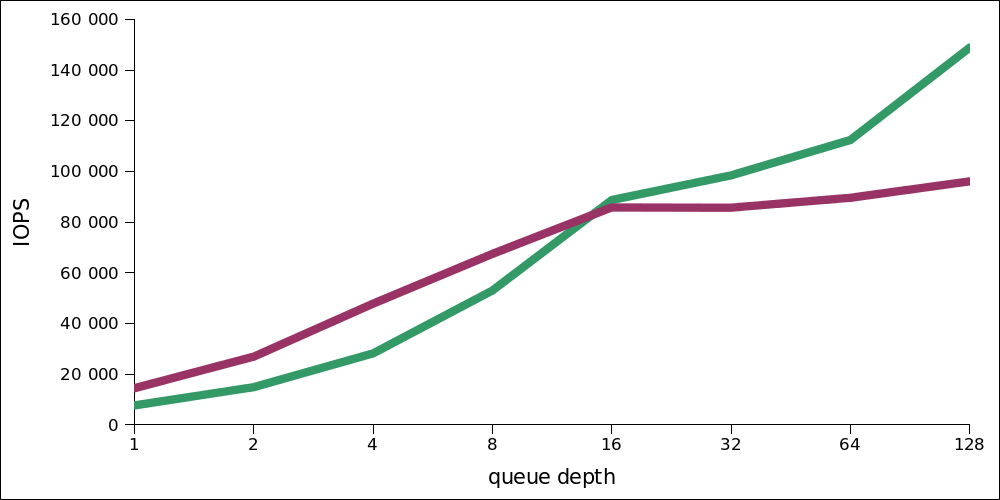

Interestingly, at low load, write performance is higher. Here is a comparison of two graphs - reading and writing in one scale (reading - green):

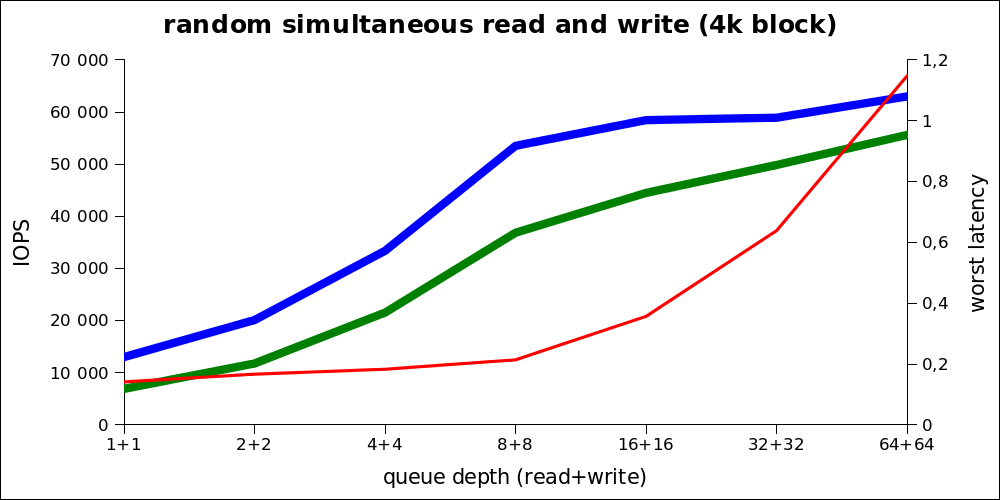

The heaviest type of load that can be considered as obviously exceeding any practical load in the product environment (including OLAP).

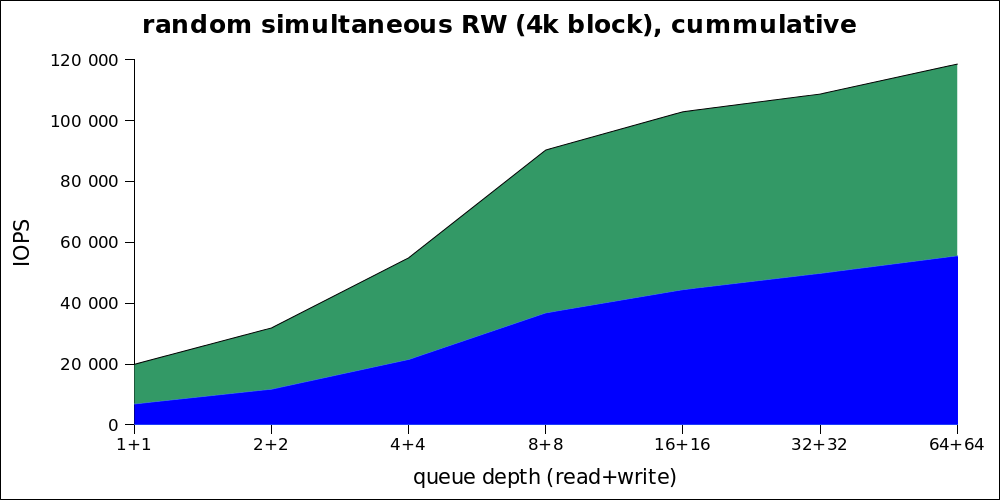

Since real graphics do not understand real graphics, here are the same figures in a cumulative way:

It can be seen that the optimal load is also in the region from 8 + 8 (that is, 16) to 32. Thus, despite the very high maximum rates, we need to talk about a maximum of ~ 80k IOPS under normal load.

Note that the resulting numbers are more than intel promises. On the site, they state that this model is capable of 35 kIOPS per record, which roughly corresponds (on the performance graph) to a point with an iodepth of about 6. It is also possible that this figure corresponds to the worst case for housekeeping.

The only disadvantage of this device are certain problems with hot-swapping - PCI-E devices require de-energizing the server before replacing.

Finally, at the end of the summer, the Intel 910 came to us. To say that I am deeply impressed - to say nothing. All my previous skepticism about the effectiveness of the SSD to write dispelled.

However, first things first.

')

The Intel 910 is a PCI-E card of fairly solid footprint (to match the discrete graphics cards). However, I don’t like unpacks, so let's get to the most important thing - performance.

Picture to attract attention

The numbers are real, yes, this is a hundred thousand IOPSs for random recording. Details under the cut.

Device description

But first, we'll play Alchemy Classic, in which dragging one LSI over 4 Hitachi will result in Intel.

The device is a specially adapted LSI 2008, each port of which is connected to one SSD device with a capacity of 100GB. In fact, all the connections are made on the board itself, so the connection is visible only when analyzing the relationship of the devices.

The approximate scheme is as follows:

Note that the LSI controller is overwhelmed very much - it does not have its own BIOS, it does not know how to boot. In lspci, it looks like this:

04: 00.0 Serial Attached SCSI controller: LSI Logic / Symbios Logic SAS2008 PCI-Express Fusion-MPT SAS-2 [Falcon] (rev 03)

Subsystem: Intel Corporation Device 3700

The structure of the device (4 SSD's for 100GB) implies that the user decides how to use the device — raid0 or raid1 (for thin connoisseurs — raid5, although with high probability this will be the biggest nonsense that can be done with a device of this class) .

It is serviced by the mpt2sas driver.

4 scsi devices are connected to it, which declare themselves hitachi:

sg_inq / dev / sdo Vendor identification: HITACHI Product identification: HUSSL4010ASS600

They do not support any extended sata-commands (as well as most extended SAS service commands) - only the minimum necessary for full-fledged work as a block device. Although, fortunately, it supports sg_format with the resize option, which allows you to make full reservations for less impact of housekeeping with active recording.

Testing

In total, we did 5 different tests to evaluate the characteristics of the device:

- random read test

- random write test

- a test for mixed parallel reading / writing (note that we don’t have to talk about the proportions of “read / write”, because each stream was threshing separately from each other, competing for resources, as is often the case in real life).

- test for maximum linear read performance

- test for maximum linear write performance

Tests for linear read and write

In general, these tests are of no interest to anyone, to ensure the “flow” of HDDs are much better suited, since they have higher capacity, lower price and very decent linear speed. A simple server with 8-10 SAS disks (or even fast SATA) in raid0 is quite capable of clogging a ten-gigabit channel.

But, after all, here are the indicators:

Linear reading

For maximum performance, we set 2 streams of 256k per device. Final performance: 1680MB / s, without hesitation (the deviation was only 40 μs). Lantency at the same time was 1.2ms (for block 256k, this is more than good).

In fact, this means that, alone, this device for reading is capable of completely “heading in” to the 10 Gbit / s channel and showing more than impressive results on a 20 Gbit / s channel. In this case, it will show a constant speed regardless of load. Note that Intel itself promises up to 2GB / s.

Linear recording

To get the highest digits per entry, we had to reduce the queue depth - one thread per write to each device. The remaining parameters were similar (block 256k)

Peak speed (second samples) was 1800MB / s, the minimum - about 600MB / s. The average write speed of 100% was 1228MB / s. A sudden decrease in recording speed is a birth injury of SSD due to housekeeping. In this case, the drop was up to 600MB / s (about three times), which is better than in older generations of SSDs, where degradation could go up to 10-15 times. Intel promises a speed of about 1.6GB / s with linear recording.

random IO

Of course, linear performance does not interest anyone. Everyone is interested in performance under heavy load. And what could be the hardest for SSD? Writing in 100% of the volume, in small blocks, in many streams, without interruption for several hours. On the 320th series, this led to a performance drop from 2000 IOPS to 300.

Test parameters: raid0 from 4 parts of the device, linux-raid (3.2) is made, 64-bit. Each task with randread or randwrite mode, for mixed load, 2 tasks are described.

Note, unlike many utilities that correlate the number of read and write operations at a fixed percentage, we run two independent streams, one of which reads all the time, the other writes all the time (this allows the equipment to be loaded more completely - if the device has problems with writing , it can still continue to serve the read). The remaining parameters: direct = 1, buffered = 0, io mode - libaio, block 4k.

Random read

| iodepth | Iops | avg.latency |

|---|---|---|

| one | 7681 | 0.127 |

| 2 | 14893 | 0.131 |

| four | 28203 | 0.139 |

| eight | 53011 | 0.148 |

| sixteen | 88700 | 0.178 |

| 32 | 98419 | 0.323 |

| 64 | 112378 | 0.568 |

| 128 | 148845 | 0.858 |

| 256 | 149196 | 1,714 |

| 512 | 148067 | 3,456 |

| 1024 | 148445 | 6,895 |

It is noticeable that the optimal load is something of the order of 16-32 operations simultaneously. The queue length of 1024 is added from sports interest, of course, this is not an adequate performance for the product (but even in this case, the latency is obtained at the level of a fairly fast HDD).

It can also be noted that the point at which the speed practically stops growing is 128. Taking into account that there are 4 pieces inside, this is the usual queue depth of 32 for each controller.

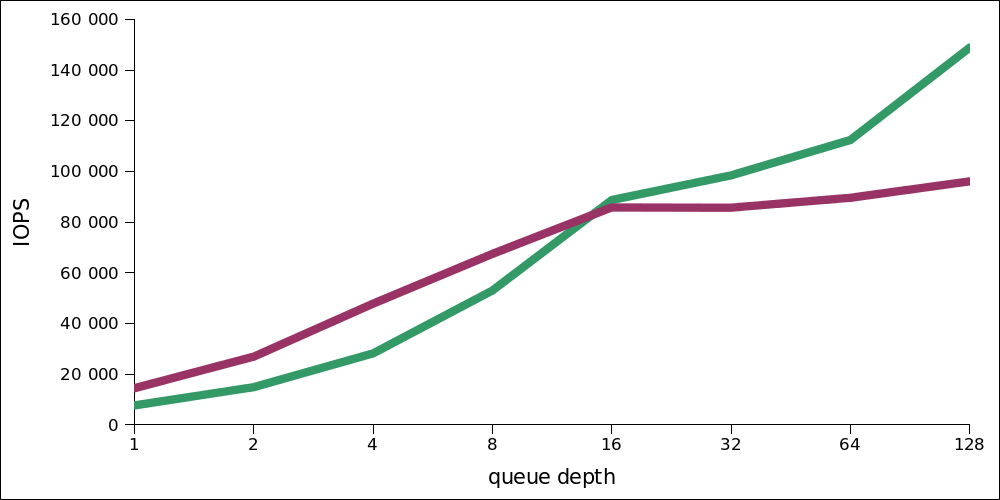

Random write

| iodepth | Iops | avg.latency |

|---|---|---|

| one | 14480 | 0.066 |

| 2 | 26930 | 0.072 |

| four | 47827 | 0.081 |

| eight | 67451 | 0.116 |

| sixteen | 85790 | 0.184 |

| 32 | 85692 | 0.371 |

| 64 | 89589 | 0.763 |

| 128 | 96076 | 1,330 |

| 256 | 102496 | 2,495 |

| 512 | 96658 | 5,294 |

| 1024 | 97243 | 10.52 |

Similarly, the optimum is in the region of 16-32 simultaneous operations, by a very significant (10-fold growth) latency, you can “squeeze” another 10k IOPS.

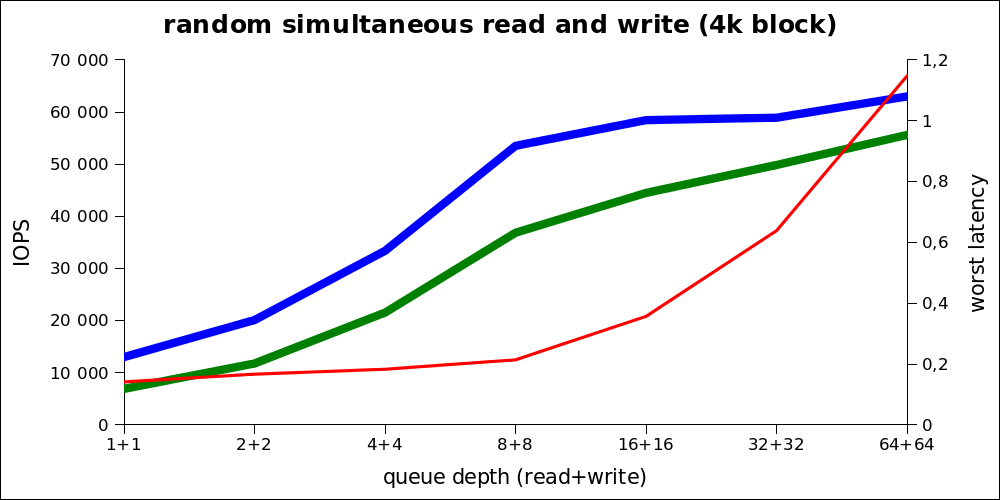

Interestingly, at low load, write performance is higher. Here is a comparison of two graphs - reading and writing in one scale (reading - green):

Mixed load

The heaviest type of load that can be considered as obviously exceeding any practical load in the product environment (including OLAP).

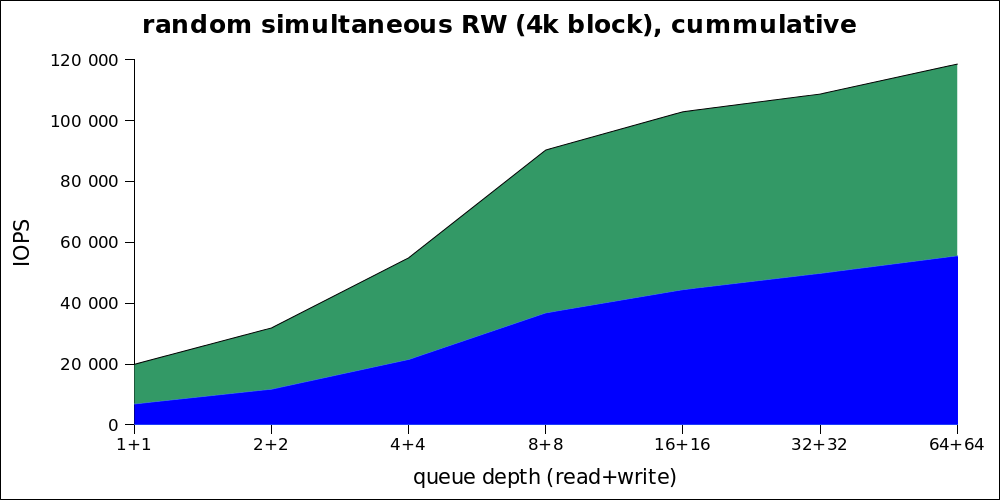

Since real graphics do not understand real graphics, here are the same figures in a cumulative way:

| iodepth | IOPS read | IOPS write | avg.latency |

|---|---|---|---|

| 1 + 1 | 6920 | 13015 | 0.141 |

| 2 + 2 | 11777 | 20110 | 0.166 |

| 4 + 4 | 21541 | 33392 | 0.18 |

| 8 + 8 | 36865 | 53522 | 0.21 |

| 16 + 16 | 44495 | 58457 | 0.35 |

| 32 + 32 | 49852 | 58918 | 0.63 |

| 64 + 64 | 55622 | 63001 | 1.14 |

It can be seen that the optimal load is also in the region from 8 + 8 (that is, 16) to 32. Thus, despite the very high maximum rates, we need to talk about a maximum of ~ 80k IOPS under normal load.

Note that the resulting numbers are more than intel promises. On the site, they state that this model is capable of 35 kIOPS per record, which roughly corresponds (on the performance graph) to a point with an iodepth of about 6. It is also possible that this figure corresponds to the worst case for housekeeping.

The only disadvantage of this device are certain problems with hot-swapping - PCI-E devices require de-energizing the server before replacing.

Source: https://habr.com/ru/post/156147/

All Articles