Active appearance models

Active Models of Appearance (Active Appearance Models, AAM) are statistical models of images that can be adapted to a real image by various kinds of deformations. This type of model in a two-dimensional version was proposed by Tim Kutes and Chris Taylor in 1998 [1]. Initially, active models of appearance were used to estimate the parameters of facial images, but then they were actively used in other areas, in particular, in medicine in the analysis of X-ray images and images obtained using magnetic resonance imaging.

This article discusses a brief description of how active models of appearance and the associated mathematical apparatus function, as well as an example of their implementation.

')

Over the past years, the mathematical apparatus of active models of appearance has been actively developed and at the moment there are 2 approaches to constructing such models: the classical one (the one that was originally proposed by Kutes) and based on the so-called reverse composition (proposed by Matthews and Baker in 2003 [2]).

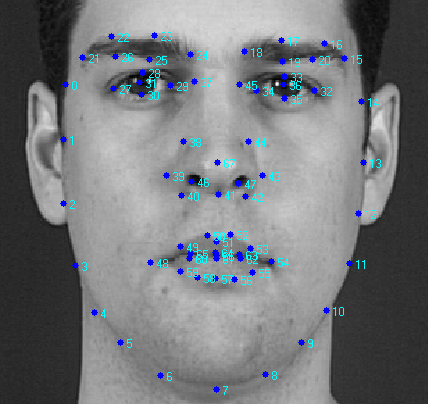

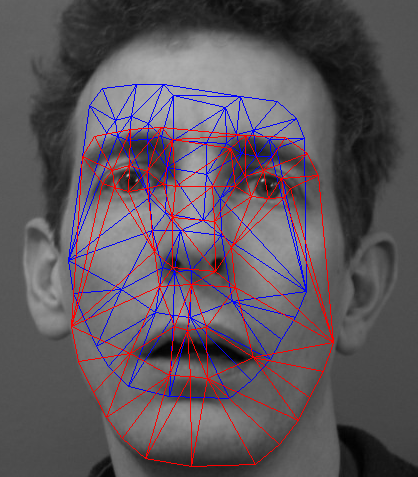

We first consider the common parts of the two approaches. In active appearance models, two types of parameters are modeled: parameters associated with the form (shape parameters), and parameters related to the statistical model of the image or texture (appearance parameters). Before using the model should be trained on a set of pre-marked images. The marking of images is done manually or in semi-automatic mode, when using some algorithm are the approximate location of the tags, and then they are specified by an expert. Each label has its own number and determines the characteristic point that the model will have to find when adapting to a new image. An example of such markup (XM2VTS face database) is shown in the figure below.

In the presented example, the image shows 68 marks that form the shape of the model of the active appearance. This form indicates the outer contour of the face, the contours of the mouth, eyes, nose, eyebrows. This character of the markup allows us to further determine the various parameters of the face from its image, which can be used for further processing by other algorithms. For example, these can be personality identification algorithms, audio-visual speech recognition, and the determination of the emotional state of a subject.

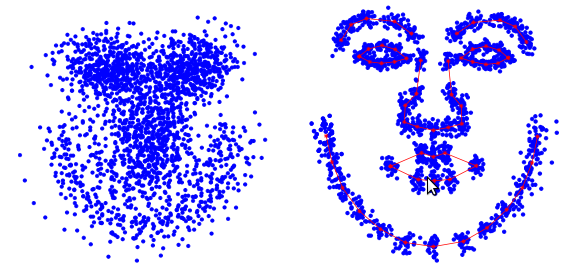

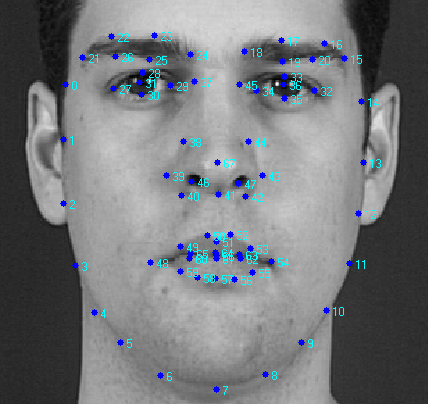

The procedure for learning the appearance of active models begins with the normalization of the position of all forms in order to compensate for differences in scale, tilt and offset. For this, the so-called generalized Procrustes analysis is used. Here we will not give a detailed description of it, and an interested reader can read the corresponding article in Wikipedia. Here is how many labels look like before and after normalization (according to [3]).

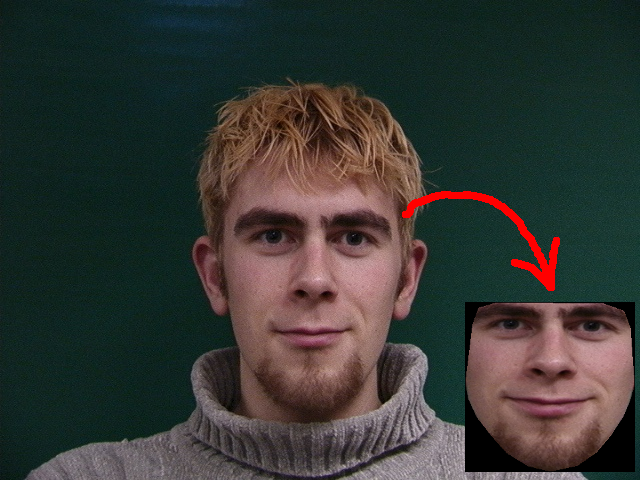

After all forms are normalized, a matrix is formed from the components of their points. where

where  . After selecting the main components of the specified matrix, we obtain the following expression for the synthesized form:

. After selecting the main components of the specified matrix, we obtain the following expression for the synthesized form:

.

.

Here - the form averaged over all implementations of the training sample (basic form),

- the form averaged over all implementations of the training sample (basic form),  - matrix of main vectors,

- matrix of main vectors,  - form parameters. The above expression means that the form

- form parameters. The above expression means that the form  can be expressed as the sum of the base form

can be expressed as the sum of the base form  and linear combinations of proper forms contained in a matrix

and linear combinations of proper forms contained in a matrix  . By changing the parameter vector

. By changing the parameter vector  we can get various kinds of deformation of the form to fit it to the real image. Below is an example of such a form [7]. The blue and red arrows show the directions of the main components.

we can get various kinds of deformation of the form to fit it to the real image. Below is an example of such a form [7]. The blue and red arrows show the directions of the main components.

It should be noted that there are models of active appearance with a hard and not hard deformation. Models with rigid deformation can undergo only affine transformations (rotation, shift, scaling), while models with non-rigid deformation can be subjected to other types of deformations. In practice, a combination of both types of deformations is used. In this case, the layout parameters (rotation angle, scale, offset or affine transform coefficients) are also added to the form parameters.

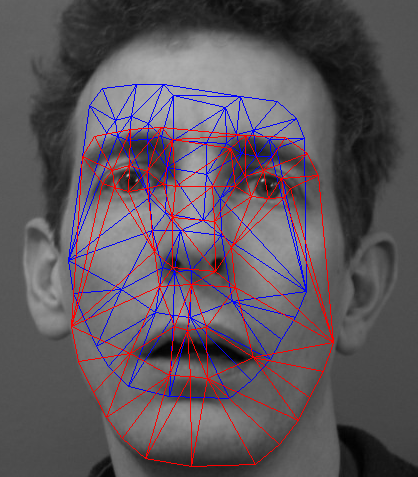

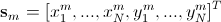

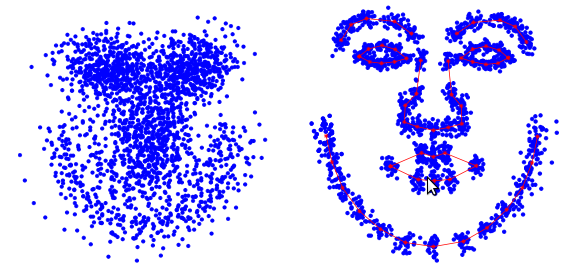

The learning procedure for the components of the appearance is performed after the components of the form (the basic form and the matrix of the main components) are calculated. The learning process here consists of three steps. The first step is to extract textures from the training images that best fit the basic form. For this, a triangulation of the marks of the basic form and the form consisting of the marks of the training image is performed. Then, using piecewise interpolation, the mapping of the training image regions resulting from the triangulation into the corresponding regions of the generated texture is performed. As an example, the figure below shows the result of such a conversion for one of the images of the IMM database.

After all the textures have been formed, in the second step, their photometric normalization is performed in order to compensate for the different lighting conditions. Currently, a large number of methods have been developed to allow this. The simplest of them is the subtraction of the average value and the normalization of the dispersion of pixel brightness.

Finally, in the third step, a matrix is formed from the textures, such that each column of it contains the pixel values of the corresponding texture (similar to the matrix ). It is worth noting that the textures used for learning can be either single-channel (grayscale) or multi-channel (for example, RGB color space or another). In the case of multichannel textures, the vectors of pixels are formed separately for each of the channels, and then they are concatenated. After finding the main components of the texture matrix, we obtain the expression for the synthesized texture:

). It is worth noting that the textures used for learning can be either single-channel (grayscale) or multi-channel (for example, RGB color space or another). In the case of multichannel textures, the vectors of pixels are formed separately for each of the channels, and then they are concatenated. After finding the main components of the texture matrix, we obtain the expression for the synthesized texture:

.

.

Here - basic texture obtained by averaging over all textures of the training set,

- basic texture obtained by averaging over all textures of the training set,  - Matrix own textures

- Matrix own textures  - vector of active appearance parameters. Below is an example of a synthesized texture [7].

- vector of active appearance parameters. Below is an example of a synthesized texture [7].

In practice, to reduce the overtraining effect of the model, only 95-98% of the most significant vectors are left in the matrices of the main components. Moreover, this number may be different for the main components of the form and the main components of the appearance. Refined figures can be selected already in the process of experimental studies or when testing a model using the cross-validation procedure.

This is where the common part of different types of active models of appearance ends and now we consider the differences between the two approaches.

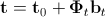

In a model of this type, we also need to calculate the vector of combined parameters, which is given by the following formula:

.

.

Here - a diagonal matrix of weight values, which allows to balance the contribution of the distances between pixels and pixel intensities. For each element of the training set (texture-form pair), its own vector is calculated

- a diagonal matrix of weight values, which allows to balance the contribution of the distances between pixels and pixel intensities. For each element of the training set (texture-form pair), its own vector is calculated  . Then the resulting set of vectors is combined into a matrix and its main components are found. In this case, the synthesized vector of the combined shape and texture parameters is defined by the following expression:

. Then the resulting set of vectors is combined into a matrix and its main components are found. In this case, the synthesized vector of the combined shape and texture parameters is defined by the following expression:

.

.

Here - the matrix of the main components of the combined parameters

- the matrix of the main components of the combined parameters  - vector of combined appearance parameters. From here we can get new expressions for the synthesized form and texture:

- vector of combined appearance parameters. From here we can get new expressions for the synthesized form and texture:

.

.

In practice, the matrix It also removes noise components to reduce the effect of retraining and reduce the amount of computation.

It also removes noise components to reduce the effect of retraining and reduce the amount of computation.

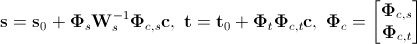

After the shape, appearance and combined parameters have been calculated, we need to find the so-called prediction matrix. which, in the sense of minimum rms error, would satisfy the following linear equation:

which, in the sense of minimum rms error, would satisfy the following linear equation:

.

.

Here , but

, but  - perturbation of the position vector and combined appearance parameters. Various methods have been developed to solve the above equation. A detailed review of them was carried out in [3 - 6].

- perturbation of the position vector and combined appearance parameters. Various methods have been developed to solve the above equation. A detailed review of them was carried out in [3 - 6].

Adaptation of the considered active model of appearance to the analyzed image occurs, in the general case, as follows.

Various modifications and improvements have been proposed to this algorithm, but its overall structure and essence remains the same.

The above algorithm is quite effective, but it has a rather serious drawback, which limits its use in real-time applications: it slowly converges and requires a large amount of computation. To overcome these shortcomings, a new type of active appearance models was proposed in [2, 7], which will be discussed in the next section.

Matthews and Baker proposed a computationally efficient algorithm for adapting the active model of appearance, which depends only on the shape parameters (the so-called “project-out” model). Due to this, it was possible to significantly increase its speed. The adaptation algorithm, which was based on the Lucas-Canada approach, uses the Newton method to find the minimum of the error function.

The Lucas-Canada algorithm is trying to find a locally best fit in the sense of the minimum of the root-mean-square error between the template and the real image. In this case, the pattern is subjected to deformation (affine and / or piecewise) specified by the parameter vector which maps its pixels to the pixels of the real image.

which maps its pixels to the pixels of the real image.

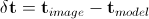

Direct finding of parameters is a nonlinear optimization problem. To solve it using linear methods, the Lucas-Canada algorithm assumes that the initial value of the deformation parameters is known and then iteratively finds the increments of the parameters

is a nonlinear optimization problem. To solve it using linear methods, the Lucas-Canada algorithm assumes that the initial value of the deformation parameters is known and then iteratively finds the increments of the parameters  by updating the vector on each iteration

by updating the vector on each iteration  .

.

The active model of the appearance of the reverse composition uses a similar approach to update its own parameters during the adaptation process, except that the non-basic texture undergoes deformation , and the analyzed image.

, and the analyzed image.

At the stage of learning the active model of the appearance of the reverse composition, so-called images of the fastest descent and their Hessian are calculated. Adaptation of the model takes place in a manner similar to the classical model of appearance, except that in this case only the update of the form parameters and (optionally) the location parameters takes place.

It is worth noting that Matthews and Baker offered a large number of possible variations with different properties of the models they developed. An interested reader can refer to the works [2, 7 - 9] for more information.

For the practical implementation and research of the above learning algorithms and adaptation of active models of appearance, the author has developed a specialized software library called AAMToolbox. The library is distributed under the GPLv3 license and is intended for use only for non-commercial and research purposes. Source codes are available at this link .

AAMToolbox requires the library OpenCV 2.4, boost 1.42 or higher, IDE NetBeans 6.9. Ubuntu Linux OS 10.04 and 10.10 are currently supported. Performance and collection on other platforms has not been verified.

AAMToolbox implements algorithms for working with both the classic active appearance model and the active appearance model of the reverse composition. Access to both types of algorithms is carried out through a single interface that provides training of the model on a given training sample, saving and restoring the trained model from the file, and adapting the model to the real image. Both color images (in three-channel color) and images in grayscale are supported.

In order to train a model, you must first prepare a training set. The sample should consist of two types of files. The first type is the actual images that the model will be trained on. Files of the second type are text markup files and contain form marks indicated on the corresponding images of the training sample. Below is a fragment of such a file.

Here, the first column is the label number, the second column is the X coordinate of the label, the third column is the Y coordinate of the label. Each image must have its own markup file.

The learning code for the active appearance model is fairly simple.

As a result of executing the presented code fragment, it allows you to train the active model of the appearance of a given type and save it to a file. It is worth noting that during training, all data, including images, are in RAM, so when loading a large number of images (several hundred), you should take care to ensure that there are enough of it available (2 - 3 GB). As an example of code that conducts the training procedure for different types of active models of appearance, you can look at the “AAM Estimator test” unit test of the library project. If it is launched for execution, it will train and save each of the supported types in the appropriate files in the variant for color images and grayscale (total 4 different models).

The adaptation code of the active model of appearance to the image will look as follows:

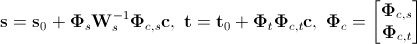

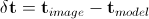

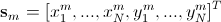

In order to see a demonstration of the operation of adaptation algorithms for active appearance models, it is necessary to run the “Aply model test” and “Aply model IC test” unit tests, which adapt the models of supported types to the image. The figure below shows an example of one of the results obtained.

These tests clearly demonstrate the difference in the rate of convergence of the classical active model of appearance and the active model of the appearance of the reverse composition. However, the lack of the latter can be attributed to the divergence of the algorithm of its adaptation in some cases. Several approaches have been proposed for its elimination, but they are not implemented in the AAMToolbox library under review (at least for the time being).

The article briefly reviewed the active models of appearance and the basic concepts and mathematical apparatus associated with them. Also considered is the author-developed AAMToolbox software library that implements the algorithms outlined in the article. Examples of its use are given.

Behind a frame there were three-dimensional models of active appearance and the algorithms connected with them. Perhaps they will be discussed in the following articles.

Description of the illustration

The figure shows the result of the adaptation of the active model of appearance to the image of the face. The blue grid shows the initial state of the model, and the red one shows what happened.

This article discusses a brief description of how active models of appearance and the associated mathematical apparatus function, as well as an example of their implementation.

')

Understanding Active Appearance Models

Over the past years, the mathematical apparatus of active models of appearance has been actively developed and at the moment there are 2 approaches to constructing such models: the classical one (the one that was originally proposed by Kutes) and based on the so-called reverse composition (proposed by Matthews and Baker in 2003 [2]).

We first consider the common parts of the two approaches. In active appearance models, two types of parameters are modeled: parameters associated with the form (shape parameters), and parameters related to the statistical model of the image or texture (appearance parameters). Before using the model should be trained on a set of pre-marked images. The marking of images is done manually or in semi-automatic mode, when using some algorithm are the approximate location of the tags, and then they are specified by an expert. Each label has its own number and determines the characteristic point that the model will have to find when adapting to a new image. An example of such markup (XM2VTS face database) is shown in the figure below.

In the presented example, the image shows 68 marks that form the shape of the model of the active appearance. This form indicates the outer contour of the face, the contours of the mouth, eyes, nose, eyebrows. This character of the markup allows us to further determine the various parameters of the face from its image, which can be used for further processing by other algorithms. For example, these can be personality identification algorithms, audio-visual speech recognition, and the determination of the emotional state of a subject.

The procedure for learning the appearance of active models begins with the normalization of the position of all forms in order to compensate for differences in scale, tilt and offset. For this, the so-called generalized Procrustes analysis is used. Here we will not give a detailed description of it, and an interested reader can read the corresponding article in Wikipedia. Here is how many labels look like before and after normalization (according to [3]).

After all forms are normalized, a matrix is formed from the components of their points.

where

where  . After selecting the main components of the specified matrix, we obtain the following expression for the synthesized form:

. After selecting the main components of the specified matrix, we obtain the following expression for the synthesized form: .

.Here

- the form averaged over all implementations of the training sample (basic form),

- the form averaged over all implementations of the training sample (basic form),  - matrix of main vectors,

- matrix of main vectors,  - form parameters. The above expression means that the form

- form parameters. The above expression means that the form  can be expressed as the sum of the base form

can be expressed as the sum of the base form  and linear combinations of proper forms contained in a matrix

and linear combinations of proper forms contained in a matrix  . By changing the parameter vector

. By changing the parameter vector  we can get various kinds of deformation of the form to fit it to the real image. Below is an example of such a form [7]. The blue and red arrows show the directions of the main components.

we can get various kinds of deformation of the form to fit it to the real image. Below is an example of such a form [7]. The blue and red arrows show the directions of the main components.

It should be noted that there are models of active appearance with a hard and not hard deformation. Models with rigid deformation can undergo only affine transformations (rotation, shift, scaling), while models with non-rigid deformation can be subjected to other types of deformations. In practice, a combination of both types of deformations is used. In this case, the layout parameters (rotation angle, scale, offset or affine transform coefficients) are also added to the form parameters.

The learning procedure for the components of the appearance is performed after the components of the form (the basic form and the matrix of the main components) are calculated. The learning process here consists of three steps. The first step is to extract textures from the training images that best fit the basic form. For this, a triangulation of the marks of the basic form and the form consisting of the marks of the training image is performed. Then, using piecewise interpolation, the mapping of the training image regions resulting from the triangulation into the corresponding regions of the generated texture is performed. As an example, the figure below shows the result of such a conversion for one of the images of the IMM database.

After all the textures have been formed, in the second step, their photometric normalization is performed in order to compensate for the different lighting conditions. Currently, a large number of methods have been developed to allow this. The simplest of them is the subtraction of the average value and the normalization of the dispersion of pixel brightness.

Finally, in the third step, a matrix is formed from the textures, such that each column of it contains the pixel values of the corresponding texture (similar to the matrix

). It is worth noting that the textures used for learning can be either single-channel (grayscale) or multi-channel (for example, RGB color space or another). In the case of multichannel textures, the vectors of pixels are formed separately for each of the channels, and then they are concatenated. After finding the main components of the texture matrix, we obtain the expression for the synthesized texture:

). It is worth noting that the textures used for learning can be either single-channel (grayscale) or multi-channel (for example, RGB color space or another). In the case of multichannel textures, the vectors of pixels are formed separately for each of the channels, and then they are concatenated. After finding the main components of the texture matrix, we obtain the expression for the synthesized texture: .

.Here

- basic texture obtained by averaging over all textures of the training set,

- basic texture obtained by averaging over all textures of the training set,  - Matrix own textures

- Matrix own textures  - vector of active appearance parameters. Below is an example of a synthesized texture [7].

- vector of active appearance parameters. Below is an example of a synthesized texture [7].

In practice, to reduce the overtraining effect of the model, only 95-98% of the most significant vectors are left in the matrices of the main components. Moreover, this number may be different for the main components of the form and the main components of the appearance. Refined figures can be selected already in the process of experimental studies or when testing a model using the cross-validation procedure.

This is where the common part of different types of active models of appearance ends and now we consider the differences between the two approaches.

Classic Active Appearance Model

In a model of this type, we also need to calculate the vector of combined parameters, which is given by the following formula:

.

.Here

- a diagonal matrix of weight values, which allows to balance the contribution of the distances between pixels and pixel intensities. For each element of the training set (texture-form pair), its own vector is calculated

- a diagonal matrix of weight values, which allows to balance the contribution of the distances between pixels and pixel intensities. For each element of the training set (texture-form pair), its own vector is calculated  . Then the resulting set of vectors is combined into a matrix and its main components are found. In this case, the synthesized vector of the combined shape and texture parameters is defined by the following expression:

. Then the resulting set of vectors is combined into a matrix and its main components are found. In this case, the synthesized vector of the combined shape and texture parameters is defined by the following expression: .

.Here

- the matrix of the main components of the combined parameters

- the matrix of the main components of the combined parameters  - vector of combined appearance parameters. From here we can get new expressions for the synthesized form and texture:

- vector of combined appearance parameters. From here we can get new expressions for the synthesized form and texture: .

.In practice, the matrix

It also removes noise components to reduce the effect of retraining and reduce the amount of computation.

It also removes noise components to reduce the effect of retraining and reduce the amount of computation.After the shape, appearance and combined parameters have been calculated, we need to find the so-called prediction matrix.

which, in the sense of minimum rms error, would satisfy the following linear equation:

which, in the sense of minimum rms error, would satisfy the following linear equation: .

.Here

, but

, but  - perturbation of the position vector and combined appearance parameters. Various methods have been developed to solve the above equation. A detailed review of them was carried out in [3 - 6].

- perturbation of the position vector and combined appearance parameters. Various methods have been developed to solve the above equation. A detailed review of them was carried out in [3 - 6].Adaptation of the considered active model of appearance to the analyzed image occurs, in the general case, as follows.

- Based on the initial approximation, all model parameters and affine transformations of the form are calculated;

- Calculates the error vector

. The texture is extracted from the analyzed image using its piecewise deformation;

. The texture is extracted from the analyzed image using its piecewise deformation; - Calculate the perturbation vector

;

; - The vector of combined parameters and affine transformations is updated by summing their current values with the corresponding components of the perturbation vector;

- The shape and texture are updated;

- We proceed to the implementation of paragraph 2 until, until we reach convergence.

Various modifications and improvements have been proposed to this algorithm, but its overall structure and essence remains the same.

The above algorithm is quite effective, but it has a rather serious drawback, which limits its use in real-time applications: it slowly converges and requires a large amount of computation. To overcome these shortcomings, a new type of active appearance models was proposed in [2, 7], which will be discussed in the next section.

Active model of the appearance of the reverse composition

Matthews and Baker proposed a computationally efficient algorithm for adapting the active model of appearance, which depends only on the shape parameters (the so-called “project-out” model). Due to this, it was possible to significantly increase its speed. The adaptation algorithm, which was based on the Lucas-Canada approach, uses the Newton method to find the minimum of the error function.

The Lucas-Canada algorithm is trying to find a locally best fit in the sense of the minimum of the root-mean-square error between the template and the real image. In this case, the pattern is subjected to deformation (affine and / or piecewise) specified by the parameter vector

which maps its pixels to the pixels of the real image.

which maps its pixels to the pixels of the real image.Direct finding of parameters

is a nonlinear optimization problem. To solve it using linear methods, the Lucas-Canada algorithm assumes that the initial value of the deformation parameters is known and then iteratively finds the increments of the parameters

is a nonlinear optimization problem. To solve it using linear methods, the Lucas-Canada algorithm assumes that the initial value of the deformation parameters is known and then iteratively finds the increments of the parameters  by updating the vector on each iteration

by updating the vector on each iteration  .

.The active model of the appearance of the reverse composition uses a similar approach to update its own parameters during the adaptation process, except that the non-basic texture undergoes deformation

, and the analyzed image.

, and the analyzed image.At the stage of learning the active model of the appearance of the reverse composition, so-called images of the fastest descent and their Hessian are calculated. Adaptation of the model takes place in a manner similar to the classical model of appearance, except that in this case only the update of the form parameters and (optionally) the location parameters takes place.

It is worth noting that Matthews and Baker offered a large number of possible variations with different properties of the models they developed. An interested reader can refer to the works [2, 7 - 9] for more information.

Software implementation

For the practical implementation and research of the above learning algorithms and adaptation of active models of appearance, the author has developed a specialized software library called AAMToolbox. The library is distributed under the GPLv3 license and is intended for use only for non-commercial and research purposes. Source codes are available at this link .

AAMToolbox requires the library OpenCV 2.4, boost 1.42 or higher, IDE NetBeans 6.9. Ubuntu Linux OS 10.04 and 10.10 are currently supported. Performance and collection on other platforms has not been verified.

AAMToolbox implements algorithms for working with both the classic active appearance model and the active appearance model of the reverse composition. Access to both types of algorithms is carried out through a single interface that provides training of the model on a given training sample, saving and restoring the trained model from the file, and adapting the model to the real image. Both color images (in three-channel color) and images in grayscale are supported.

In order to train a model, you must first prepare a training set. The sample should consist of two types of files. The first type is the actual images that the model will be trained on. Files of the second type are text markup files and contain form marks indicated on the corresponding images of the training sample. Below is a fragment of such a file.

1 228 307 2 232 327 3 239 350 5 270 392 6 294 406 7 314 410 8 343 403 9 361 388 10 372 370 11 382 349 12 388 331 13 393 312 14 374 243 Here, the first column is the label number, the second column is the X coordinate of the label, the third column is the Y coordinate of the label. Each image must have its own markup file.

The learning code for the active appearance model is fairly simple.

#include "aam/AAMEstimator.h" void trainAAM() { // aam::AAMEstimator estimator; // , // // aam::ModelPathType // std::pair<std::string, std::string>, // , // - . std::vector<aam::ModelPathType> modelPaths; // - ...................................................... // // aam::TrainOptions options; // . 0 1. options.setPCACutThreshold(0.95); // , : // true - , false - options.setGrayScale(true); // . // // . options.setMultithreading(true); // : // aam::algorithm::conventional - , // aam::algorithm::inverseComposition - options.setAAMAlgorithm(aam::algorithm::conventional); // . . // , // . triangles // std::vector<cv::Vec3i> // ( 0. options.setTriangles(triangles); // . // , // . options.setScales(4); estimator.setTrainOptions(options); // estimator.train(modelPaths); // estimator.save("data/aam_test.xml"); } As a result of executing the presented code fragment, it allows you to train the active model of the appearance of a given type and save it to a file. It is worth noting that during training, all data, including images, are in RAM, so when loading a large number of images (several hundred), you should take care to ensure that there are enough of it available (2 - 3 GB). As an example of code that conducts the training procedure for different types of active models of appearance, you can look at the “AAM Estimator test” unit test of the library project. If it is launched for execution, it will train and save each of the supported types in the appropriate files in the variant for color images and grayscale (total 4 different models).

The adaptation code of the active model of appearance to the image will look as follows:

#include "aam/AAMEstimator.h" void aplyAAM() { // . // aam::AAMEstimator estimator; estimator.load("<___>"); // cv::Mat im = cv::imread("<___>"); // std::vector<cv::Rect> faces; cv::cvtColor(im, im, CV_BGR2GRAY); cascadeFace.detectMultiScale(im, faces, 1.1, 2, 0 |CV_HAAR_FIND_BIGGEST_OBJECT //|CV_HAAR_DO_ROUGH_SEARCH |CV_HAAR_SCALE_IMAGE , cv::Size(30, 30) ); if (faces.empty()) { return; } cv::Rect r = faces[0]; aam::Point2D startPoint(rx + r.width * 0.5 + 20, ry + r.height * 0.5 + 40); // , aam::Vertices2DList foundPoints; // . verbose // . estimator.estimateAAM(im, startPoint, foundPoints, true); } In order to see a demonstration of the operation of adaptation algorithms for active appearance models, it is necessary to run the “Aply model test” and “Aply model IC test” unit tests, which adapt the models of supported types to the image. The figure below shows an example of one of the results obtained.

These tests clearly demonstrate the difference in the rate of convergence of the classical active model of appearance and the active model of the appearance of the reverse composition. However, the lack of the latter can be attributed to the divergence of the algorithm of its adaptation in some cases. Several approaches have been proposed for its elimination, but they are not implemented in the AAMToolbox library under review (at least for the time being).

Conclusion

The article briefly reviewed the active models of appearance and the basic concepts and mathematical apparatus associated with them. Also considered is the author-developed AAMToolbox software library that implements the algorithms outlined in the article. Examples of its use are given.

Behind a frame there were three-dimensional models of active appearance and the algorithms connected with them. Perhaps they will be discussed in the following articles.

Bibliography

- T. Cootes, G. Edwards, and C. Taylor. Active appearance models. In Proceedings of the European Conference on Computer Vision, volume 2, pages 484–498, 1998.

- S. Baker, R. Gross, and I. Matthews. Lucas-Kanade 20 years on: A unifying framework: Part 3. Technical Report CMU-RI-TR-03-35, Carnegie Mellon University Robotics Institute, 2003.

- Stegmann Analysis and Segmentation of MB Images using Linear Subspace Techniques. Technical report IMM-REP-2002-22, Informatics and Mathematical Modeling, Technical University of Denmark, 2002

- TF Cootes, GJ Edwards, and CJ Taylor. Active appearance models. IEEE Trans. on Pattern Recognition and Machine Intelligence, 23 (6): 681–685, 2001.

- TF Cootes and CJ Taylor. Statistical analysis and computer vision. In Proc. SPIE Medical Imaging 2001, volume 1, pages 236–248. SPIE, 2001.

- TF Cootes and CJ Taylor. Constrained active appearance models. Computer Vision, 2001. ICCV 2001. Proceedings. Eighth IEEE International Conference on, 1: 748–754 vol.1, 2001.

- Iain Matthews and Simon Baker Active Appearance Models Revisited. International Journal of Computer Vision, Vol. 60, No. 2, November, 2004, pp. 135 - 164.

- S. Baker, R. Gross, and I. Matthews. Lucas-Kanade 20 years on: A unifying framework: Part 1. Technical Report CMU-RI-TR-02-16, Carnegie Mellon University Robotics Institute, 2002.

- S. Baker, R. Gross, and I. Matthews. Lucas-Kanade 20 years on: A unifying framework: Part 2. Technical Report CMU-RI-TR-03-01, Carnegie Mellon University Robotics Institute, 2003.

Source: https://habr.com/ru/post/155759/

All Articles