First look at Fusion-IO ioDrive2

Introduction

Habr has already covered FusionIO products ( one , two ), but the situation has changed somewhat in the four years since. For those who are not familiar with them, here is a brief rundown of what they are and what they are good for.

FusionIO is a company that makes PCI-E SSD cards with outstanding performance and an astronomical price.

I will cover why you would need one, where to get it, how much it costs, and how it performs.

Why is it needed


It is not hard to guess that these cards are meant for applications that are sensitive to disk speed. In our case, that means hosting MS SQL Server 2012 databases. Before this we used an iSCSI disk shelf with a RAID10 array of 14 SAS disks. Under heavy load, however, log file write latency for the busiest databases reached 10 ms, which was completely unacceptable.

Why FusionIO? Because of Steve Wozniak :), and because of the companies that use FusionIO: Facebook, Apple iCloud, Salesforce and a bunch of others. Seriously, though, two factors decided it: price and speed. For the money, there is simply nothing faster.
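To illustrate why 10 ms per log write hurts, here is a rough back-of-the-envelope sketch. It assumes a single session that commits transactions one after another and waits for each log write to finish before starting the next; real workloads with many sessions and grouped log writes will behave differently. The function name is made up for this illustration.

```python
def max_serial_commits_per_second(log_write_latency_ms: float) -> float:
    """Upper bound on commits per second for ONE session that waits for
    every log write to complete before issuing the next commit.

    Assumption (not from the article): commits are strictly serial.
    """
    if log_write_latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / log_write_latency_ms


if __name__ == "__main__":
    # 10 ms is the figure from the text; 1 ms is shown only for comparison.
    for latency_ms in (10.0, 1.0):
        rate = max_serial_commits_per_second(latency_ms)
        print(f"{latency_ms:4.0f} ms per log write: at most {rate:.0f} serial commits/s")
```

Under this simplified model, a tenfold drop in log write latency raises the ceiling for a serial writer tenfold.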

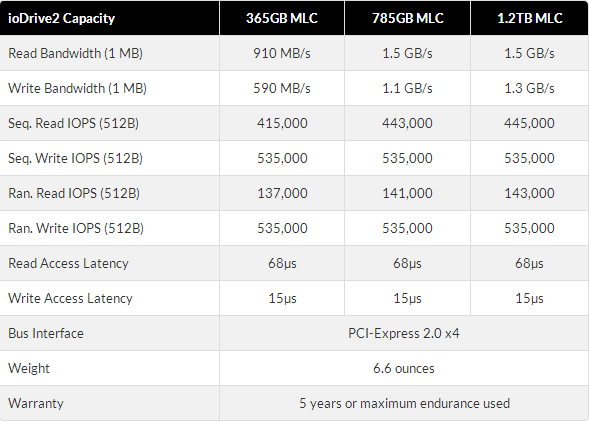

For example, consider the declared performance of the ioDrive2, the model we settled on:

Where to get it

In short: order it from your server hardware supplier.

We started our search with our server hardware supplier. The guys there asked for a day to think it over and in the end promised delivery in 6-8 weeks. A long wait, of course, but what can you do.

Next, I wrote to the FusionIO sales department with a simple question: where can I buy one in Russia? The managers responded with a barrage of questions along the lines of “why do you need it” and “let us tell you all about it”. I assured them that I knew what I needed and asked who I should pay. They quoted me a price and referred me to a reseller who "sells in your region". Looking ahead, I’ll say that we eventually bought the cards from our supplier along with the servers. Then John contacted me and offered to come to Moscow, explain all the benefits and lend us a card for testing. A couple of weeks later we met, and I learned that they are opening a sales office in Moscow, probably within six months. This means that it will soon be possible to buy these cards by bank transfer, with proper support and reasonable lead times, and also to get cards for testing.

Meanwhile, our cards were on their way to us through Supermicro channels. By the way, most of these cards are now sold through vendors (HP, Dell, Supermicro and others; even Cisco plans to install them in its UCS).

How much it costs

A 1.2 TB ioDrive2 costs $14,000. To quote a 2008 article: "At the moment, this product is intended exclusively for corporate users: the 80 GB version will cost $3,000, quietly growing to $14,000 for the 320 GB super-fast model."

That article, by the way, was about the first-generation ioDrive, which is much slower.

In a couple of years prices will fall further, and the same money will buy a 5-terabyte Octal or some ioDrive 3 of similar capacity.
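To put the quoted prices side by side, here is a small sketch that converts them to dollars per gigabyte. The prices and capacities are the ones mentioned in the text; treating 1.2 TB as 1200 GB is my assumption, and the labels and function name are only for illustration.

```python
# (label, price in USD, capacity in GB); figures as quoted in the text.
# Assumption: 1.2 TB is treated as 1200 GB (decimal units).
CARDS = [
    ("ioDrive 80 GB (2008 quote)", 3_000, 80),
    ("ioDrive 320 GB (2008 quote)", 14_000, 320),
    ("ioDrive2 1.2 TB", 14_000, 1_200),
]


def price_per_gb(price_usd: float, capacity_gb: float) -> float:
    """Return the price of one gigabyte in USD."""
    if capacity_gb <= 0:
        raise ValueError("capacity must be positive")
    return price_usd / capacity_gb


if __name__ == "__main__":
    for label, price_usd, capacity_gb in CARDS:
        print(f"{label:<28} ${price_per_gb(price_usd, capacity_gb):6.2f} per GB")
```

Under these assumptions the 1.2 TB ioDrive2 comes out at roughly $11.67 per GB, against $37.50 and $43.75 per GB for the two first-generation cards from the 2008 quote.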

MLC or SLC

Fusion-IO engineers say that it is not worth buying SLC for reliability alone, since MLC is reliable enough. If the extra speed matters, then it may be worth it.

How does it work

Fast. Very fast.

Here are the SQLIO results compared to our current shelf:

Megabytes per second (the shelf, of course, was limited by the network):

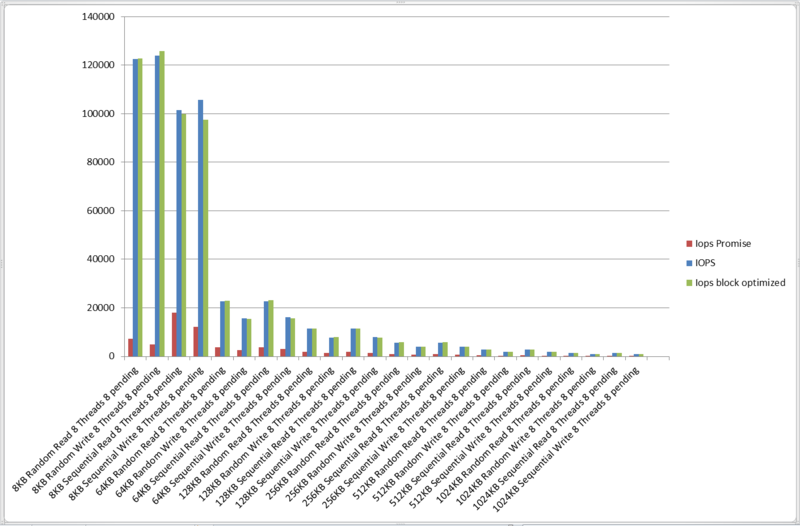

IOPS:

Amarao will probably show up in the comments and say that for writes an SSD is not much faster than a good RAID, and that latency is what really matters, and I partly agree with him. I probably won't run a day-long random-write test against a terabyte-sized file (what if it really does turn out badly :)), but I will tell you about latency.

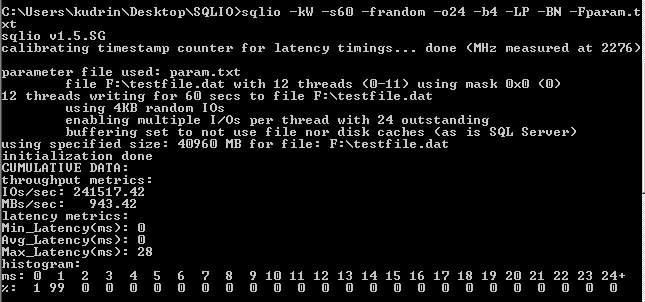

A simple benchmark: random writes in 4 KB blocks from 12 threads with a queue depth of 24 per thread (288 outstanding requests in total). See the latency histogram below.

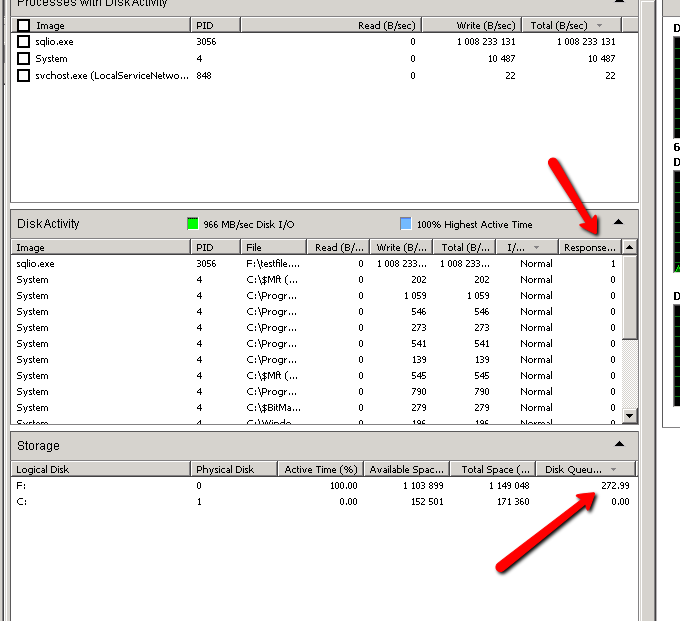

If we look at Resource Monitor, the disk queue length looks terrifying. However, because requests are processed very quickly, latency does not exceed 1 ms. By the way, if you reduce the queue to 200, 99% of requests complete in under 1 ms.
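The link between queue depth, latency and throughput in this benchmark can be estimated with Little's law (outstanding requests = throughput × mean latency). The sketch below is only a back-of-the-envelope estimate: it assumes a steady state and treats 1 ms as the mean latency, which the text does not state. The resulting numbers are implied by those assumptions and are not measurements from this article; the function names are made up for the illustration.

```python
def total_outstanding(threads: int, queue_per_thread: int) -> int:
    """Total number of requests in flight across all threads."""
    return threads * queue_per_thread


def implied_iops(outstanding: int, mean_latency_ms: float) -> float:
    """Little's law rearranged: throughput = outstanding / mean latency."""
    if mean_latency_ms <= 0:
        raise ValueError("latency must be positive")
    return outstanding * 1000.0 / mean_latency_ms


def implied_latency_ms(outstanding: int, iops: float) -> float:
    """Little's law rearranged: mean latency = outstanding / throughput."""
    if iops <= 0:
        raise ValueError("iops must be positive")
    return outstanding * 1000.0 / iops


if __name__ == "__main__":
    in_flight = total_outstanding(threads=12, queue_per_thread=24)
    print(f"requests in flight: {in_flight}")
    # Assumption: 1 ms is used as the MEAN latency for this estimate.
    print(f"IOPS needed for 1 ms mean latency: {implied_iops(in_flight, 1.0):.0f}")
```

The same functions show why a very long queue does not have to mean high latency: for a fixed queue depth, latency depends on how quickly the device drains the queue, not on the queue length alone.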

What can be customized

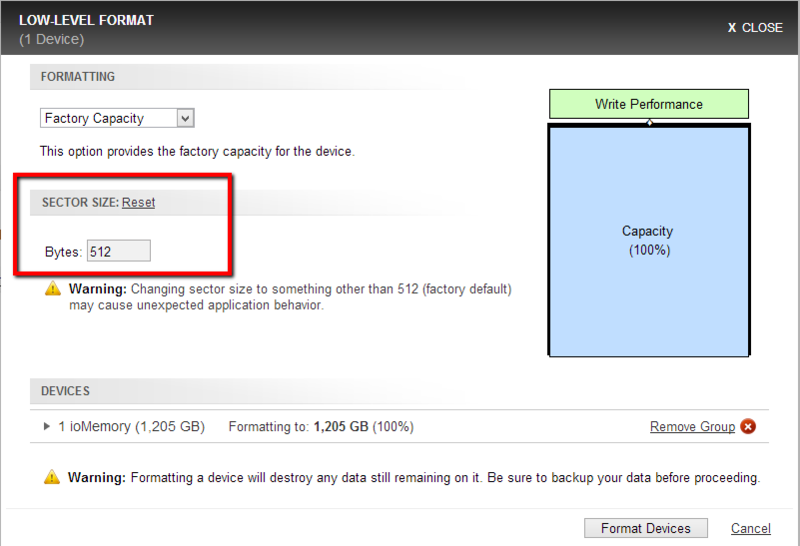

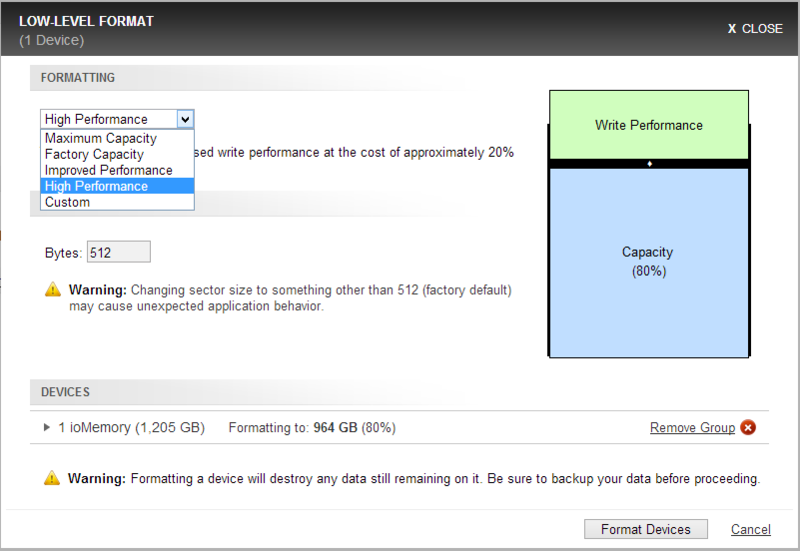

Well, first of all, the sector size. By default the cards ship with 512-byte sectors; the maximum size is 4 KB, which, by the way, is what HP recommends setting. HP, like everyone else, also recommends setting the NTFS allocation unit size to 64 KB.

The sector size is changed through a low-level format, which destroys all data.

Having tested different sector sizes, I can say that the difference in results is so small that it can be neglected.

It is also possible to format the device with less usable space to increase speed.

I have not tested this: we are more than happy with the current speed, and I don’t want to lose precious gigabytes. Here are some tests .

Perhaps there is some low-level tuning as well; I am only just starting to read the documentation. If I find anything interesting, I will be sure to share it.

Instead of conclusion

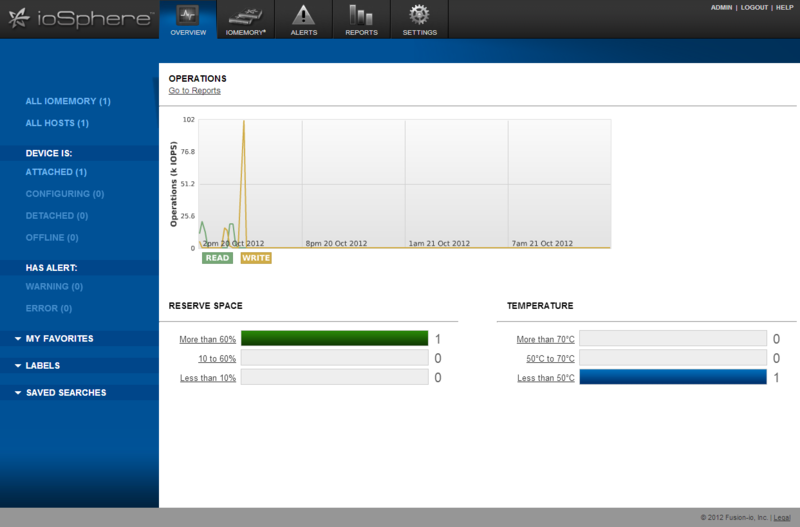

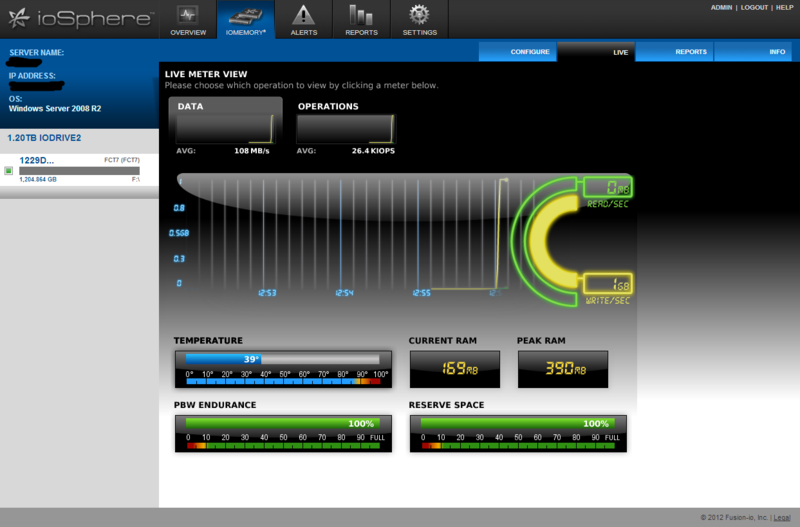

A couple of screenshots of the ioSphere interface, the software for managing and monitoring Fusion devices.

And here is Supermicro's public FTP , where you can download various PDFs (as well as drivers) for free, without registration or SMS.

I’ll say right away that, according to the manufacturer, it should work under Linux, but I have had no chance to verify this.

I hope it was interesting.

UPD1

An excerpt from the documentation on cell wear:

The ioMemory VSL manages block retirement using pre-determined retirement thresholds. The ioSphere and the fio-status utilities show a health indicator that starts at 100 and counts down to 0. As certain thresholds are crossed, various actions are taken.

At the 10% healthy threshold, a one-time warning is issued. See the 'monitoring' section below for methods for capturing this alarm event.

At 0%, the device is considered unhealthy. It enters write-reduced mode, which somewhat prolongs its lifespan so data can be safely migrated off. In this state the ioMemory device behaves normally, except for the reduced write performance.

After the 0% threshold, the device will soon enter read-only mode; any attempt to write to the ioMemory device causes an error. Some filesystems may require special mount options in order to mount a read-only block device, in addition to specifying that the mount should be read-only.

For example, under Linux, ext3 requires that "-o ro,noload" is used; the "noload" option tells the filesystem to not try and replay the journal.

Read-only mode should be considered a final opportunity to migrate data off the device, as device failure is more likely with continued use.
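The thresholds from the excerpt can be summarized in a small sketch. This is only my reading of the quoted text, not the vendor's API: the names `HealthState` and `classify_health` are hypothetical, and the later read-only stage is not modelled because the excerpt gives no percentage for it.

```python
from enum import Enum


class HealthState(Enum):
    HEALTHY = "healthy"
    WARNING = "10% threshold crossed, one-time warning issued"
    WRITE_REDUCED = "unhealthy, write-reduced mode"


# Thresholds taken from the documentation excerpt above.
WARNING_THRESHOLD = 10   # percent
UNHEALTHY_THRESHOLD = 0  # percent


def classify_health(health_percent: float) -> HealthState:
    """Map the health indicator (100 counting down to 0) to a state.

    Hypothetical helper for illustration. The read-only stage that follows
    the 0% threshold is not represented here.
    """
    if not 0 <= health_percent <= 100:
        raise ValueError("health indicator must be between 0 and 100")
    if health_percent <= UNHEALTHY_THRESHOLD:
        return HealthState.WRITE_REDUCED
    if health_percent <= WARNING_THRESHOLD:
        return HealthState.WARNING
    return HealthState.HEALTHY


if __name__ == "__main__":
    for value in (100, 50, 10, 3, 0):
        print(f"{value:3d}% -> {classify_health(value).value}")
```

A monitoring script could poll the indicator and plan data migration once the state leaves `HEALTHY`; how the indicator is actually read from the device is outside the scope of this sketch.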

Source: https://habr.com/ru/post/155627/
