Top Habr comments: the service, implementation details, and some statistics (C#)

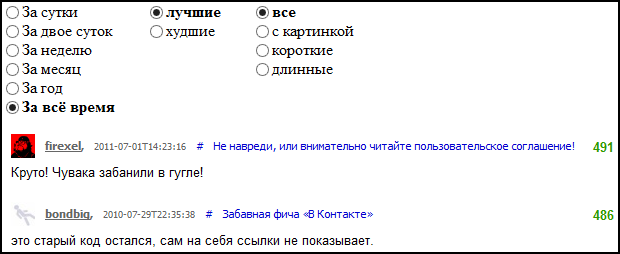

Some time ago, the "Best Comments" page was removed from Habr (details here: habrahabr.ru/qa/18401).

Still, I used to enjoy looking at it, partly for the lulz and partly because it sometimes surfaced interesting articles I had missed in the feed. So I decided to build my own small service. I hope the administration will not mind.

Current service URL: habrastats.comyr.com

The first "version" displayed the top comments from the last N posts; it was written in LINQPad in two hours and fit on one screen (pastebin). It quickly became clear that generating the page on request is unrealistic even for the posts of the last 24 hours (posts download at 1-2 per second), so the data has to be refreshed periodically. Hence the idea: run the service on a home machine that is always on and upload static results to free hosting.

Briefly about implementation

Code: code.google.com/p/habra-stats

- Windows Service in C# (.NET 4.5, VS2012; no new features are used, so it can be built for 4.0)

- Parsing with regular expressions (and yes, I know: you can't parse HTML with regex, but it will do here)

- MS SQL Express + Entity Framework (a really convenient ORM)

- XSLT to generate HTML (layout and CSS taken from Habr; forgive me once more, administration)

Once every two hours the service wakes up, parses the habrahabr.ru/posts/collective/new page, and gets the Id of the newest post. It then loads posts in reverse order until the publication date passes the threshold (older than 3 days). Each post is parsed and stored in the database.
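The backward walk described above can be sketched roughly as follows. This is only an illustration, not the project's actual code: the `Post` class, the `Crawler.LoadRecent` method, and the `fetch` callback are hypothetical names, and the sketch assumes that post Ids decrease one by one and that a missing Id simply yields `null`.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical minimal post model; the project's real entities are not shown in the article.
public sealed class Post
{
    public int Id { get; set; }
    public DateTime Published { get; set; }
}

public static class Crawler
{
    // Walks post Ids downward from newestId and stops at the first post
    // that is older than maxAge relative to now.
    // fetch is an assumed callback that downloads and parses one post and
    // returns null when the Id does not exist (deleted or hidden post).
    public static List<Post> LoadRecent(int newestId, Func<int, Post> fetch,
                                        DateTime now, TimeSpan maxAge)
    {
        var result = new List<Post>();
        for (int id = newestId; id > 0; id--)
        {
            Post post = fetch(id);
            if (post == null) continue;               // gap in the Id sequence
            if (now - post.Published > maxAge) break; // threshold reached
            result.Add(post);
        }
        return result;
    }
}
```

With a two-hour timer, a call such as `Crawler.LoadRecent(newestId, DownloadAndParse, DateTime.UtcNow, TimeSpan.FromDays(3))` would refresh the last three days on every tick, where `DownloadAndParse` stands for whatever download-and-parse routine is used.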

Before that, all existing posts were loaded into the database (this took two days).

Then “reports” are generated from the database, such as “best of the day”, “worst of the month with a picture”, and so on. The data for a report is simply a collection of “Comment” objects, which are serialized and transformed with XSLT. The results are uploaded to the hosting via FTP.
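A minimal sketch of the serialize-then-transform step, using the standard `XmlSerializer` and `XslCompiledTransform` classes. The `Comment` properties, the `ReportRenderer` class, and the sample stylesheet are assumptions made for illustration; the project's real model and templates are not shown in the article.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Xml;
using System.Xml.Serialization;
using System.Xml.Xsl;

// Hypothetical comment model with just enough fields for the example.
public class Comment
{
    public string Author { get; set; }
    public int Score { get; set; }
    public string Text { get; set; }
}

public static class ReportRenderer
{
    // Illustrative stylesheet. XmlSerializer names the root element of a
    // List<Comment> "ArrayOfComment", which the template matches on.
    public const string SampleXslt = @"<xsl:stylesheet version=""1.0"" xmlns:xsl=""http://www.w3.org/1999/XSL/Transform"">
  <xsl:output method=""html"" />
  <xsl:template match=""/ArrayOfComment"">
    <ul>
      <xsl:for-each select=""Comment"">
        <li><xsl:value-of select=""Author"" />: <xsl:value-of select=""Score"" /></li>
      </xsl:for-each>
    </ul>
  </xsl:template>
</xsl:stylesheet>";

    public static string Render(List<Comment> comments, string xslt)
    {
        // 1. Serialize the comment collection to XML.
        var serializer = new XmlSerializer(typeof(List<Comment>));
        var xml = new StringWriter();
        serializer.Serialize(xml, comments);

        // 2. Compile the stylesheet.
        var transform = new XslCompiledTransform();
        using (XmlReader xsltReader = XmlReader.Create(new StringReader(xslt)))
        {
            transform.Load(xsltReader);
        }

        // 3. Apply the stylesheet and collect the HTML output.
        var html = new StringWriter();
        using (XmlReader input = XmlReader.Create(new StringReader(xml.ToString())))
        {
            transform.Transform(input, null, html);
        }
        return html.ToString();
    }
}
```

The returned string can then be written to a file and uploaded as a static page, which keeps the hosting side free of any server-side logic.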

Report generation and navigation between reports rely on a small trick: each filtering method (BackDown, Week, Best, Worst, etc.) is marked with an attribute:

[CommentReport(Category = "", Name = " ", CategoryOrder = 0)]

Through Reflection we collect all combinations of such methods by category, fetch the data from the database, and generate the navigation. So to add one more “report” (for example, “for three days”), it is enough to add a method with the attribute. Glory to LINQ and Entity Framework.
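One possible shape of this mechanism is sketched below. Only the attribute name and its three properties come from the article; the `Comment` fields, the `Filters` and `ReportCatalog` classes, the category names, and the filter bodies are assumptions. The sketch uses an in-memory `IQueryable<Comment>` where the service would pass an Entity Framework query.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

[AttributeUsage(AttributeTargets.Method)]
public sealed class CommentReportAttribute : Attribute
{
    public string Category { get; set; }
    public string Name { get; set; }
    public int CategoryOrder { get; set; }
}

// Hypothetical comment model.
public class Comment
{
    public int Score { get; set; }
    public DateTime Posted { get; set; }
}

// Hypothetical filters: one method per navigation entry.
public static class Filters
{
    [CommentReport(Category = "Period", Name = "Day", CategoryOrder = 0)]
    public static IQueryable<Comment> Day(IQueryable<Comment> q)
    {
        DateTime from = DateTime.UtcNow.AddDays(-1);
        return q.Where(c => c.Posted >= from);
    }

    [CommentReport(Category = "Period", Name = "Week", CategoryOrder = 0)]
    public static IQueryable<Comment> Week(IQueryable<Comment> q)
    {
        DateTime from = DateTime.UtcNow.AddDays(-7);
        return q.Where(c => c.Posted >= from);
    }

    [CommentReport(Category = "Rating", Name = "Best", CategoryOrder = 1)]
    public static IQueryable<Comment> Best(IQueryable<Comment> q)
    {
        return q.Where(c => c.Score > 0).OrderByDescending(c => c.Score);
    }

    [CommentReport(Category = "Rating", Name = "Worst", CategoryOrder = 1)]
    public static IQueryable<Comment> Worst(IQueryable<Comment> q)
    {
        return q.Where(c => c.Score < 0).OrderBy(c => c.Score);
    }
}

public static class ReportCatalog
{
    // Returns every combination that takes exactly one method per category,
    // with categories ordered by CategoryOrder.
    public static List<List<MethodInfo>> Combinations(Type filters)
    {
        List<List<MethodInfo>> groups = filters
            .GetMethods(BindingFlags.Public | BindingFlags.Static)
            .Select(m => new
            {
                Method = m,
                Attr = (CommentReportAttribute)Attribute.GetCustomAttribute(
                    m, typeof(CommentReportAttribute))
            })
            .Where(x => x.Attr != null)
            .GroupBy(x => new { x.Attr.Category, x.Attr.CategoryOrder })
            .OrderBy(g => g.Key.CategoryOrder)
            .Select(g => g.Select(x => x.Method).ToList())
            .ToList();

        // Cartesian product of the per-category method lists.
        IEnumerable<List<MethodInfo>> combos = new[] { new List<MethodInfo>() };
        foreach (List<MethodInfo> categoryMethods in groups)
        {
            combos = combos
                .SelectMany(c => categoryMethods.Select(m => new List<MethodInfo>(c) { m }))
                .ToList();
        }
        return combos.ToList();
    }

    // Chains the filters of one combination over the source query.
    public static IQueryable<Comment> Apply(IEnumerable<MethodInfo> combo,
                                            IQueryable<Comment> source)
    {
        return combo.Aggregate(source,
            (q, m) => (IQueryable<Comment>)m.Invoke(null, new object[] { q }));
    }
}
```

In this sketch, adding a hypothetical `ThreeDays` method with `Category = "Period"` to `Filters` would produce two more combinations without touching `ReportCatalog`, which is the property the paragraph above describes.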

A few problems and solutions

Initially I planned to do without a database and keep everything as simple as possible: store raw HTML on disk, load it into memory, and process it there. But I underestimated the scale of the disaster: 150 thousand posts in HTML took up more than 10 gigabytes. Even on an SSD, the loading and parsing times were unacceptable.

Then I tried SQL Compact Edition (an in-process database that supports Entity Framework) and ran into its 4 GB limit on the database file size. At that point there was a single Comments table with duplicated (denormalized) data. After moving to SQL Express, I removed part of the duplication by adding a Posts table and deleted comments without votes (about 30% of the total). As a result, the database is now about 2 GB.

While working on the parser I learned that careless use of RegexOptions.IgnoreCase slows matching down severalfold.
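To check this effect on a particular machine and runtime, a small timing harness like the one below may help. The `RegexTiming` class and the `<span class="score">` markup are made up for illustration (the real Habr HTML is not shown in the article), and the measured ratio depends on the pattern, the input, and the .NET version, so the sketch prints timings rather than promising any specific slowdown.

```csharp
using System;
using System.Diagnostics;
using System.Text.RegularExpressions;

public static class RegexTiming
{
    // Hypothetical pattern for a comment score in made-up markup.
    public const string ScorePattern = "<span class=\"score\">([+-]?\\d+)</span>";

    // Runs the regex over the input `iterations` times and returns the elapsed time.
    // `matches` receives the number of matches found in a single pass.
    public static TimeSpan Measure(Regex regex, string input, int iterations, out int matches)
    {
        matches = regex.Matches(input).Count; // warm-up pass, also yields the count
        Stopwatch watch = Stopwatch.StartNew();
        for (int i = 0; i < iterations; i++)
        {
            int count = regex.Matches(input).Count; // Count forces full evaluation
            if (count != matches) throw new InvalidOperationException("unstable match count");
        }
        watch.Stop();
        return watch.Elapsed;
    }

    // Prints timings for the same pattern with and without IgnoreCase.
    public static void Compare(string html, int iterations)
    {
        var exact = new Regex(ScorePattern, RegexOptions.Compiled);
        var relaxed = new Regex(ScorePattern, RegexOptions.Compiled | RegexOptions.IgnoreCase);
        int n;
        Console.WriteLine("case-sensitive:   {0}", Measure(exact, html, iterations, out n));
        Console.WriteLine("case-insensitive: {0}", Measure(relaxed, html, iterations, out n));
    }
}
```

If the markup being parsed has consistent casing, dropping the option entirely is the simplest fix; otherwise it can be limited to the part of the pattern that needs it with an inline group such as `(?i:...)`.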

Some statistics

At the time of writing, the database contains:

- 90619 posts

- 18 comments per post on average (comments without votes are not stored in the database)

- 15 of them with a positive rating

- 1676593 comments in total

- 721 comments per day

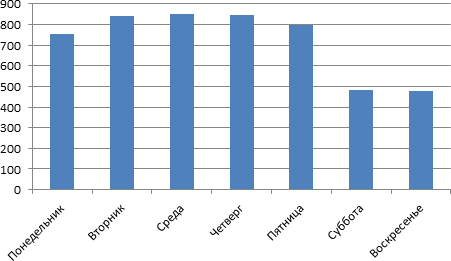

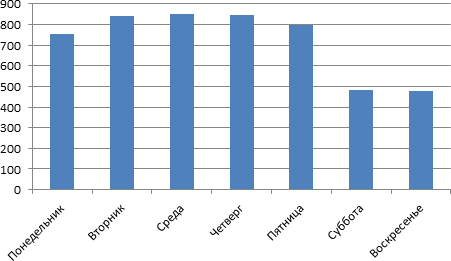

Average number of comments by day of the week

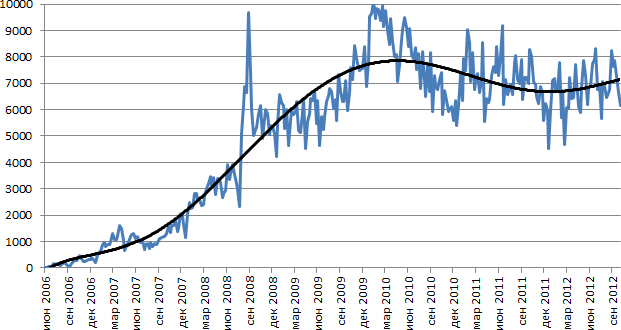

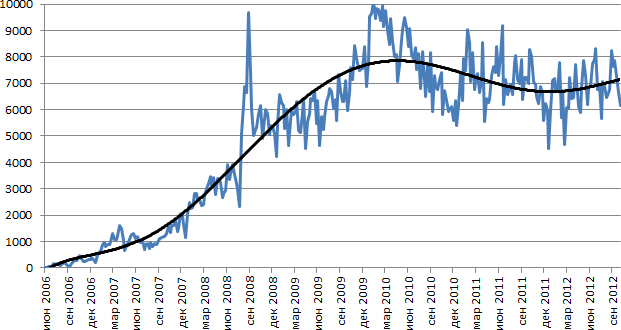

Comments per week: time dynamics

Finally, links!

Site:

http://habrastats.comyr.com/

Code again: code.google.com/p/habra-stats

In the plans

An RSS feed with the best comments of the previous day

P.S. Suggest more interesting queries.

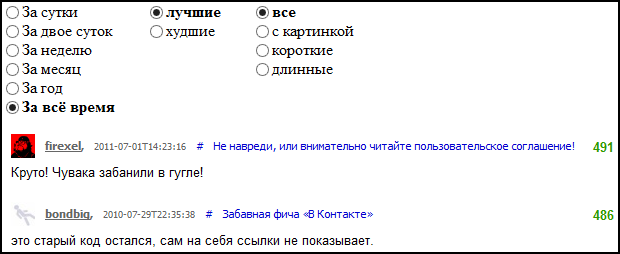

P.P.S. The famous comment on the famous post about pornolab is not displayed because the author of the post is blocked.

It was posted by nForce; you can see the comment here: habrahabr.ru/users/nforce/comments/page2


Source: https://habr.com/ru/post/155541/
