Dynamic interpretation of metamodels

Continuing the series of articles on metaprogramming, I have prepared a digest of my rather extensive work on raising the level of abstraction in information systems. Habr readers certainly like practical solutions, and I do have them, but there is a lot of material and I have to split it into several articles. To illustrate the effectiveness of the approach: its implementation in many live projects has increased development efficiency tenfold, for example, making it possible to create database applications with a structure of several hundred tables within a week and to port solutions between platforms in a matter of hours. This article is theoretical and full of specific terminology, without which, unfortunately, it would be much longer.

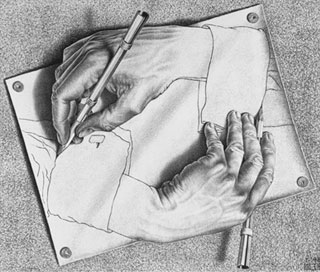

"This is what I mean by producing work, or, as I called it last time, 'opera operans'. In philosophy there is a distinction between 'natura naturata' and 'natura naturans', the generated nature and the generating nature. By analogy, one could form 'cultura culturata' and 'cultura culturans'. For example, the novel 'In Search of Lost Time' is built not as a work but as a 'cultura culturans' or 'opera operans'. This is what the Greeks called Logos."

// Merab Mamardashvili "Lectures on ancient philosophy"

Formulation of the problem

The classical approach to developing information systems is to build a domain model out of data structures and program code. Data structures live in memory, in transfer protocols, in files on disk and in databases, while the program code is the active (imperative, event-driven or functional) model of the problem being solved on the modeled data.

Many approaches and technologies treat the model as static, i.e. fixed for a long time (the lifetime of one software version). Such static technologies use various means to synchronize the data structures of persistent storage with the structure of objects in the program code. But there is a class of tasks, and it is becoming increasingly relevant, for which such technologies are not acceptable, because any change in the domain model requires developer intervention and the release of a new version of the system, and ultimately the rebuilding of all components of the information system. After such changes the components must be redeployed to user workstations and servers; for web applications this process is milder, since deployment affects only the servers. In any case, however, a change in the model entails the following actions:

- interruption of the normal operation of the system for a certain time during deployment (interruption of user sessions, temporary unavailability of the service),

- conversion of data in permanent storage from old formats to new ones,

- making changes to the program code (which includes the allocation of time for development, debugging, testing),

- revision of all links with other subsystems (both at the data level and at the level of program calls).

Let us outline the class of tasks that require a fundamentally different approach:

- tasks with a dynamic domain, where changing the structure and parameters of the model is the normal mode of operation,

- processing of loosely related data, or of data whose structure, parameters or processing logic are not fixed,

- tasks in which the number of classes of processed objects is comparable to the number of their instances, or the number of instances exceeds it by only one or two orders of magnitude,

- tasks in which the success of the business model depends on the speed of integration of the updated model with other subsystems (ideally, integration in real time or close to real time is required),

- tasks of inter-corporate data exchange, intersystem integration (including integration of heterogeneous distributed applications),

- applied tasks not aimed at the mass user.

I should especially note that this class does not include typical communication or information tasks for which long-lived software can be built, such as email, workflow, CRM systems, instant messaging, social networks, news or informational web resources, etc. The scope of such technologies narrows rather to the following areas:

- corporate application information systems,

- database applications,

- analytical systems, reporting systems, decision-making systems,

- integration systems between partner companies.

Such tasks are traditionally considered poorly or only partially automatable. But if we look for a way to solve them, it is natural to assume that raising the level of abstraction of a software solution will cover a wider range of tasks, including related tasks with modified parameters and structure. Let us call such a model, which is broader than the individual task, a metamodel, and propose a method of dynamically mapping (projecting) it onto the domain, in which several domain models can be obtained dynamically from one metamodel depending on the metadata supplied to the system. Such metadata should complement and concretize the metamodel (complete its structure and parameters).
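The projection of one metamodel onto several domains can be sketched in Python as follows. This is a minimal illustration, assuming metadata arrives as a dict that maps entity names to field lists; the function `project` and this metadata format are hypothetical, not the author's implementation. The generic code only knows the abstract notions "entity" and "field", and concrete classes are created at run time with the built-in `type()`.

```python
from typing import Any


def project(metadata: dict[str, list[str]]) -> dict[str, type]:
    """Project a generic metamodel onto the domain described by `metadata`.

    The code knows only the abstract notions "entity" and "field";
    concrete class and attribute names come exclusively from metadata.
    """
    model: dict[str, type] = {}
    for entity_name, field_names in metadata.items():
        fields = tuple(field_names)

        def __init__(self, _fields: tuple = fields, **values: Any) -> None:
            # Accept only the fields that metadata declares for this entity.
            unknown = set(values) - set(_fields)
            if unknown:
                raise TypeError(f"unknown fields: {sorted(unknown)}")
            for name in _fields:
                setattr(self, name, values.get(name))

        # type(name, bases, namespace) creates a new class at run time.
        model[entity_name] = type(entity_name, (), {"__init__": __init__, "fields": fields})
    return model


# Two different domain models obtained from the same metamodel code.
warehouse = project({"Product": ["sku", "title", "stock"]})
clinic = project({"Patient": ["name", "birth_date"], "Visit": ["patient", "date"]})

item = warehouse["Product"](sku="A-1", title="Bolt", stock=120)
print(type(item).__name__, item.stock)  # Product 120
print(sorted(clinic))                   # ['Patient', 'Visit']
```

The default argument `_fields` binds each entity's field tuple at definition time, so every generated class keeps its own field list even though `__init__` is defined inside a loop.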

What makes it difficult to raise the level of abstraction of software components and data structures:

1. Binding by identifiers hardwired into the program code. Data structures and program interfaces are accessed through logical identifiers, which are ultimately converted into call addresses, relative in-memory addresses and network calls. With both static (early) and dynamic (late) binding, the identifiers are hardwired into the program code of the system components and, moreover, scattered throughout it. To raise the level of abstraction, the code should contain no identifiers pointing to the domain model, only identifiers pointing to the metamodel.

2. Static typing of variables. Problems with a dynamic domain are characterized not only by not knowing the type of a variable (object, class, expression, constant, function) in advance: the type may not even exist at compile time. At a minimum, a programming language suitable for such problems should support introspection and the dynamic creation of types, classes and variables of newly created types.

3. Mixing the domain model and system concerns in one class. For example, the class "car" should not know which visual controls, libraries or tags display it on the screen, how it is serialized and with what syntax, how it is stored on disk or in a database, over which network protocols and in which data formats it is transmitted, or in what form it is laid out in RAM.

I do not claim that these programming techniques have no place: they are quite applicable and indispensable for problems with a static domain model. Static and dynamic binding are suitable for developing the system aspects of software with a dynamic domain, but these techniques should not be tied to processing the model or metamodel of the subject domain.
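The three difficulties above can be illustrated with a small Python sketch, assuming metadata arrives as a list of (field, type) pairs. The class is created at run time with `dataclasses.make_dataclass`, the generic code mentions no domain identifiers, and serialization lives in separate functions that discover the structure through introspection (`dataclasses.fields`), so the domain class knows nothing about formats. The names `build_entity`, `to_json` and `to_csv_row` are hypothetical.

```python
import csv
import dataclasses
import io
import json
from typing import Any


def build_entity(name: str, fields: list[tuple[str, type]]) -> type:
    # The class structure is defined only by metadata, not by source code.
    return dataclasses.make_dataclass(name, fields)


def to_json(obj: Any) -> str:
    # Generic serializer: discovers fields by introspection, knows no domain names.
    return json.dumps({f.name: getattr(obj, f.name) for f in dataclasses.fields(obj)})


def to_csv_row(obj: Any) -> str:
    # A second, independent serializer; the domain class is unaware of either format.
    buf = io.StringIO()
    csv.writer(buf).writerow([getattr(obj, f.name) for f in dataclasses.fields(obj)])
    return buf.getvalue().rstrip("\r\n")


# In a real system this metadata would be read from storage, not written in code.
Car = build_entity("Car", [("model", str), ("year", int)])
car = Car(model="Volga", year=1970)
print(to_json(car))     # {"model": "Volga", "year": 1970}
print(to_csv_row(car))  # Volga,1970
```

Adding a new output format here means adding one more generic function; no domain class has to change.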


Dynamic interpretation of the metamodel

Before describing the principle of dynamic interpretation, let us clarify what we mean by the terms used.

A metamodel is an information model at a higher level of abstraction than a specific domain model. A metamodel describes and covers not a single task with its functionality, but a wide range of tasks, singling out the general abstractions, data processing rules and business process management in these tasks. The metamodel is adapted to the required specifics only at run time, by converting metadata into dynamic code and dynamically linking it to the launch environment (virtual machine, operating system or cloud infrastructure).

Metadata is data describing the structure and parameters of the data entering the information system. By interpreting metadata, the metamodel dynamically builds a domain model, which in turn interprets the incoming data. Metadata can contain declarative and imperative structures, for example types, processing rules, scripts, regular expressions, etc. Without metadata the incoming data is incomplete, and the metamodel cannot assemble a dynamic model of the problem being solved; that is, metadata both completes the description of the data and supplies the metamodel with the means of interpreting the received data.
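To make the definition concrete, here is a hedged Python sketch in which metadata carries a declarative part (field types) and an imperative part (a regular-expression rule), and a generic metamodel routine uses both to interpret raw incoming data. The metadata layout, the field names and the function `interpret` are assumptions for illustration only.

```python
import re
from typing import Any

# Hypothetical metadata: a declarative part (types) and an imperative part (a regex rule).
METADATA: dict[str, dict[str, Any]] = {
    "code":  {"type": "str", "pattern": r"[A-Z]{3}-\d{4}"},
    "name":  {"type": "str"},
    "staff": {"type": "int"},
}

# Conversions the metamodel knows how to apply; metadata only names them.
CASTS = {"str": str, "int": int, "float": float}


def interpret(raw: dict[str, str], metadata: dict[str, dict[str, Any]]) -> dict[str, Any]:
    """Turn raw strings into typed, validated values as the metadata prescribes."""
    result: dict[str, Any] = {}
    for field, spec in metadata.items():
        value = CASTS[spec["type"]](raw[field])
        pattern = spec.get("pattern")
        if pattern is not None and not re.fullmatch(pattern, str(value)):
            raise ValueError(f"{field!r} does not match {pattern!r}")
        result[field] = value
    return result


record = interpret({"code": "ABC-0042", "name": "Acme", "staff": "42"}, METADATA)
print(record)  # {'code': 'ABC-0042', 'name': 'Acme', 'staff': 42}
```

Without `METADATA` the same raw dict is just a bag of strings; the metadata is what tells the generic code that `staff` is a number and `code` must follow a pattern.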

An application virtual machine is a dynamic interpretation environment that runs on top of an operating system, virtual machine or cloud infrastructure. Unlike a system virtual machine, an application virtual machine serves applied rather than system tasks: it builds the model from the metamodel, metadata and data using dynamic linking, and caches the expanded model fragments in RAM for quick access.

Diagram of an information system (IS) component with dynamic interpretation. Abbreviations in the diagram: MS - state machine, K - component configuration, HRP - persistent storage, L - logic. The component issues calls to the component below it and receives requests from the component above (responses travel in the opposite direction).

Thus, the metamodel resides in every component of the IS (see diagram) and contains abstract functionality for a wide class of tasks. When a component is launched, the metamodel is deployed in the application virtual machine, but to interpret it dynamically, i.e. to turn it into a model of the domain or of the problem being solved, the component must obtain data and metadata from persistent storage, the state machine or another component of the information system. Dynamic interpretation of a metamodel is the process of creating a domain model from the metamodel, metadata and data. It takes place at run time on both the client and the server. The domain model is not built in memory all at once, but is interpreted fragment by fragment as incoming data is processed, and the fragments can be cached in the application virtual machine to avoid spending computational resources on repeated interpretation.
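Fragment-by-fragment interpretation with caching might look like the following Python sketch. An application virtual machine builds the class for an entity only when data of that entity first arrives and keeps it in RAM, so entities that never appear are never interpreted. `AppVM`, `get_metadata` and the metadata format are hypothetical names chosen for this example, not an API described in the article.

```python
from typing import Any, Callable


class AppVM:
    """Interprets the domain model fragment by fragment and caches the fragments."""

    def __init__(self, get_metadata: Callable[[str], list[str]]) -> None:
        self._get_metadata = get_metadata      # storage, state machine or another component
        self._fragments: dict[str, type] = {}  # entity name -> class built from metadata

    def fragment(self, entity: str) -> type:
        # Build the class only when data for this entity first arrives.
        if entity not in self._fragments:
            fields = tuple(self._get_metadata(entity))
            self._fragments[entity] = type(entity, (), {"fields": fields})
        return self._fragments[entity]

    def process(self, entity: str, data: dict[str, Any]) -> Any:
        cls = self.fragment(entity)
        obj = cls()
        for name in cls.fields:
            setattr(obj, name, data.get(name))
        return obj

    def built(self) -> list[str]:
        return sorted(self._fragments)


calls: list[str] = []


def get_metadata(entity: str) -> list[str]:
    calls.append(entity)  # record each metadata request
    return {"Invoice": ["number", "total"], "Customer": ["name"]}[entity]


vm = AppVM(get_metadata)
vm.process("Invoice", {"number": 1, "total": 10.0})
inv = vm.process("Invoice", {"number": 2, "total": 25.5})
print(inv.total, vm.built(), calls)  # 25.5 ['Invoice'] ['Invoice']
```

Metadata for `Invoice` is requested once and the `Customer` fragment is never built, because no customer data has been processed yet.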

The metamodel contains a specification of the metadata required for dynamic interpretation. If this is the component's first session and no metadata is available in persistent storage or in the state machine, the metadata can be obtained using an identifier (address) or a query entered by the user or passed from another component of the system (but not hardwired into the component). This process is controlled by the session component of the application virtual machine. After that, metadata can be cached, just as data is cached, to minimize requests, and updated only when its version or checksum changes at the source (storage system or remote component) or when an event arrives from the source. Receiving changed metadata causes the domain model in RAM to be restructured. In most cases it is better to restructure not completely but lazily (as needed).
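The metadata caching rule can be sketched as follows, assuming the source can report a checksum of its current metadata. The cached copy is replaced only when the checksum differs, and only the affected model fragment is dropped, so it is rebuilt lazily on next use instead of restructuring the whole model at once. `MetadataCache`, `checksum` and the dict-based source are illustrative assumptions; for brevity the sketch computes the checksum from the full source, whereas a real component would request only the checksum or version.

```python
import copy
import hashlib
import json
from typing import Any


def checksum(metadata: Any) -> str:
    # Stable hash of a metadata document (sorted keys, so key order is irrelevant).
    return hashlib.sha256(json.dumps(metadata, sort_keys=True).encode()).hexdigest()


class MetadataCache:
    def __init__(self, source: dict[str, Any]) -> None:
        self.source = source                          # stands in for storage or a remote component
        self.cached: dict[str, tuple[str, Any]] = {}  # entity -> (checksum, metadata copy)
        self.model: dict[str, type] = {}              # lazily built model fragments
        self.downloads = 0                            # how often metadata was actually fetched

    def refresh(self, entity: str) -> None:
        # In a real system only the checksum (or version) would be requested here.
        remote_sum = checksum(self.source[entity])
        local = self.cached.get(entity)
        if local is None or local[0] != remote_sum:
            self.downloads += 1
            self.cached[entity] = (remote_sum, copy.deepcopy(self.source[entity]))
            self.model.pop(entity, None)  # invalidate only this fragment; rebuild lazily

    def get_class(self, entity: str) -> type:
        self.refresh(entity)
        if entity not in self.model:
            fields = tuple(self.cached[entity][1]["fields"])
            self.model[entity] = type(entity, (), {"fields": fields})
        return self.model[entity]


source = {"Order": {"fields": ["id", "amount"]}}
cache = MetadataCache(source)
first = cache.get_class("Order")
again = cache.get_class("Order")    # unchanged checksum: cached class reused
source["Order"] = {"fields": ["id", "amount", "discount"]}
changed = cache.get_class("Order")  # new checksum: metadata refetched, class rebuilt
print(first is again, changed.fields, cache.downloads)
# True ('id', 'amount', 'discount') 2
```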

The metadata received by a system component in this way supplements the metamodel with declarative parameters and imperative scripts, so that domain classes can be constructed dynamically, filled with the obtained data and processed by dynamically constructed business logic. As a result, both the functionality of a component and its interfaces with other components can change across a wide class of tasks without recompiling the system, and many changes require only new metadata parameters (without even modifying scripts).
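A hedged illustration of business logic defined in metadata rather than in compiled code: rules are stored as data (field, operator, value, message) and applied by a generic engine, so changing a threshold is a metadata edit, not a rebuild. The rule format and the function `check` are hypothetical; the sketch deliberately uses the standard `operator` module instead of `eval`, so metadata cannot execute arbitrary code.

```python
import operator
from typing import Any

# Operators the metamodel supports; metadata can only refer to them by name.
OPS = {"<": operator.lt, "<=": operator.le, ">": operator.gt,
       ">=": operator.ge, "==": operator.eq, "!=": operator.ne}


def check(record: dict[str, Any], rules: list[dict[str, Any]]) -> list[str]:
    """Return the messages of all rules the record violates."""
    errors = []
    for rule in rules:
        if not OPS[rule["op"]](record[rule["field"]], rule["value"]):
            errors.append(rule["message"])
    return errors


rules = [
    {"field": "amount", "op": ">", "value": 0, "message": "amount must be positive"},
    {"field": "discount", "op": "<=", "value": 0.3, "message": "discount over 30%"},
]
order = {"amount": 100, "discount": 0.4}
print(check(order, rules))  # ['discount over 30%']

rules[1]["value"] = 0.5     # new metadata: the policy changed, no code was touched
print(check(order, rules))  # []
```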

Dynamically constructed application data structures and application scripts (events, data processing methods) are based on API calls of the application virtual machine in which the dynamic model runs. That API, in turn, is built on top of the API of the launch environment (for example, a desktop or mobile operating system, a virtual machine, cloud infrastructure, etc.). The dynamic model is built in both the server and the client (user) components of the IS, i.e. the user interface is also built dynamically, and here the role of the API is played by the visualization library in use (GUI components) or by a rendering environment (for example, HTML5, Flash, Silverlight, Adobe AIR). Network interfaces can also be built dynamically; however, transmission protocols and data exchange formats must, of course, be fixed (compiled into the component) or selected from the set available on the platform (for example, JSON, XML, YAML, CLEAR, CSV, HTML, etc.).
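For the client side, here is a small Python sketch of a user interface generated from metadata, with HTML5 as the rendering environment. The form code knows only abstract field kinds, while labels, field names and input types come from metadata. The metadata keys, the type-to-input mapping and `render_form` are assumptions made for this example.

```python
from html import escape
from typing import Any

# Maps metamodel field types to HTML5 input types (the "API" of the renderer).
INPUT_TYPES = {"str": "text", "int": "number", "date": "date", "bool": "checkbox"}


def render_form(entity: str, fields: list[dict[str, Any]]) -> str:
    """Build an HTML form for an entity purely from its field metadata."""
    rows = []
    for f in fields:
        name = escape(f["name"], quote=True)
        label = escape(f.get("label", f["name"]))  # fall back to the field name
        kind = INPUT_TYPES[f["type"]]
        rows.append(f'<label>{label} <input type="{kind}" name="{name}"></label>')
    return f'<form data-entity="{escape(entity, quote=True)}">\n' + "\n".join(rows) + "\n</form>"


print(render_form("Employee", [
    {"name": "full_name", "type": "str", "label": "Full name"},
    {"name": "hired", "type": "date"},
]))
```

Adding a field to the metadata adds an input to the form on the next render; values taken from metadata are escaped because metadata may be edited by users.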

The user initiates events in the GUI that lead to local data processing or network calls. In network interaction, dynamic interpretation means that the identifiers of the called remote procedures, their parameters and the structure of the returned data are not hardwired into the calling component in advance but are obtained from metadata during the dynamic interpretation of the metamodel. The remote side does not have a fixed interface either (methods compiled into the component and exposed to network calls); instead, it offers only a metaprotocol (data transfer formats and the supported parameter types) and an introspection mechanism that lets the caller dynamically read the structure of the remote network interface. For example, such a metaprotocol can be created from declarative structures by combining them with HTTP, JSONP, SSE, WebSockets and introspection interfaces.
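The idea of a remote side that exposes a metaprotocol and introspection instead of a fixed interface can be sketched in Python as below. The transport is simulated with JSON strings inside one process; in a real system these messages would travel over HTTP, WebSockets or another channel. `Server`, `Client`, the `describe` operation and the message format are hypothetical.

```python
import inspect
import json
from typing import Any, Callable


class Server:
    """Publishes its operations through introspection, not through a hardwired stub."""

    def __init__(self) -> None:
        self._ops: dict[str, Callable[..., Any]] = {}

    def register(self, fn: Callable[..., Any]) -> Callable[..., Any]:
        self._ops[fn.__name__] = fn
        return fn

    def handle(self, message: str) -> str:
        req = json.loads(message)
        if req["method"] == "describe":
            # Introspection: report operation names and their parameter names.
            spec = {name: list(inspect.signature(fn).parameters) for name, fn in self._ops.items()}
            return json.dumps({"result": spec})
        fn = self._ops[req["method"]]
        return json.dumps({"result": fn(**req["params"])})


server = Server()


@server.register
def add_item(order_id: int, sku: str, qty: int) -> dict:
    return {"order_id": order_id, "sku": sku, "qty": qty}


class Client:
    """Discovers the remote interface at run time; method names would come from metadata."""

    def __init__(self, transport: Callable[[str], str]) -> None:
        self._send = transport
        self.interface = json.loads(self._send(json.dumps({"method": "describe"})))["result"]

    def call(self, method: str, **params: Any) -> Any:
        expected = self.interface[method]
        if sorted(params) != sorted(expected):
            raise TypeError(f"{method} expects {expected}")
        return json.loads(self._send(json.dumps({"method": method, "params": params})))["result"]


client = Client(server.handle)
print(client.interface)  # {'add_item': ['order_id', 'sku', 'qty']}
print(client.call("add_item", order_id=7, sku="A-1", qty=3))
```

Only the metaprotocol (JSON messages with `method` and `params`, plus `describe`) is fixed in both components; the operations themselves can be added or changed on the server without rebuilding the client.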

The role of the state machine in dynamic interpretation is to preserve the session with the necessary data set between network calls, on both the client and the server. This is needed for sequential or interactive human-computer data processing, in which user actions bring the model into a certain state and each subsequent network call depends on the previous ones within a transaction. In addition, the state machine can significantly reduce traffic and computing costs by caching metadata, data and even the dynamically built model in RAM. The state machine can be implemented with in-memory structures (in programming languages), local storage ("Local Storage" for HTML5 applications) or a memcached server (mainly for server components).
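A minimal in-memory state machine for sessions can be sketched in Python, assuming sessions are identified by a random token and expire after a sliding time-to-live. Each call reads the state left by the previous call, so a multi-step transaction can continue between network requests. `SessionStore`, the TTL policy and the step names are illustrative; a server deployment could keep the same mapping in memcached instead of a local dict.

```python
import secrets
import time
from typing import Any


class SessionStore:
    def __init__(self, ttl_seconds: float = 600.0) -> None:
        self.ttl = ttl_seconds
        self._data: dict[str, tuple[float, dict[str, Any]]] = {}  # token -> (expires_at, state)

    def open(self) -> str:
        token = secrets.token_hex(16)
        self._data[token] = (time.monotonic() + self.ttl, {"step": "start"})
        return token

    def get(self, token: str) -> dict[str, Any]:
        expires_at, state = self._data[token]
        if time.monotonic() > expires_at:
            del self._data[token]
            raise KeyError("session expired")
        self._data[token] = (time.monotonic() + self.ttl, state)  # sliding expiration
        return state


def handle_call(store: SessionStore, token: str, action: str, payload: Any = None) -> str:
    state = store.get(token)
    # Each call depends on the state left by the previous one (a simple transaction).
    if action == "choose" and state["step"] == "start":
        state.update(step="chosen", item=payload)
    elif action == "confirm" and state["step"] == "chosen":
        state["step"] = "confirmed"
    else:
        raise ValueError(f"{action!r} not allowed in step {state['step']!r}")
    return state["step"]


store = SessionStore()
token = store.open()
print(handle_call(store, token, "choose", "A-1"))  # chosen
print(handle_call(store, token, "confirm"))        # confirmed
```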

In information systems with dynamic interpretation, data can be persisted in a DBMS: relational databases (with a parametric approach to data schemas or with introspection of the storage schema metadata), key-value databases or schemaless data stores. Schemaless stores (for example, MongoDB) are more natural for systems with dynamic interpretation, since they provide dynamic typing and flexible management of structures (close to programming languages with weak typing). For relational DBMSs, the following approach is used: in addition to the main tables that store the domain model, the database holds additional tables for metadata, which contain extra information about the structure of the main tables (extended data types, processing parameters, an extended classification of relations, and imperative scripts).
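The relational variant can be sketched with Python's built-in `sqlite3`: a main table holds domain data, an additional table holds metadata about its columns (extended types and labels that SQL itself does not express), and the program combines that with schema introspection through SQLite's `pragma_table_info` table-valued function (available since SQLite 3.16). The table names `product` and `meta_column` and their layout are assumptions for illustration.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.executescript("""
    CREATE TABLE product (id INTEGER PRIMARY KEY, title TEXT, price INTEGER);
    -- Additional table: metadata about the columns of the main tables.
    CREATE TABLE meta_column (
        table_name TEXT, column_name TEXT, ext_type TEXT, label TEXT,
        PRIMARY KEY (table_name, column_name)
    );
    INSERT INTO meta_column VALUES
        ('product', 'title', 'string', 'Title'),
        ('product', 'price', 'money_cents', 'Price');
    INSERT INTO product (title, price) VALUES ('Bolt', 1250);
""")


def describe(table: str) -> list[dict[str, str]]:
    """Combine schema introspection with the extended metadata from meta_column."""
    meta = {
        name: (ext_type, label)
        for name, ext_type, label in con.execute(
            "SELECT column_name, ext_type, label FROM meta_column WHERE table_name = ?",
            (table,),
        )
    }
    columns = []
    # pragma_table_info is SQLite's table-valued form of PRAGMA table_info.
    for name, sql_type in con.execute("SELECT name, type FROM pragma_table_info(?)", (table,)):
        ext_type, label = meta.get(name, (sql_type, name))  # fall back to the SQL schema
        columns.append({"name": name, "sql_type": sql_type, "ext_type": ext_type, "label": label})
    return columns


for col in describe("product"):
    print(col)
```

Columns without an entry in `meta_column` (here `id`) fall back to what the SQL schema itself reports, so the metadata table only needs to describe what SQL cannot.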

Conclusions

Thus, the multilayer structure of IS components with dynamic interpretation of metamodels is closed at the bottom by self-describing storage (limited by the metamodel's level of abstraction) and at the top by the user, who can modify not only the data but also the metadata, reconfiguring the IS to the constantly changing requirements of the problem being solved. This is where the flexibility of the approach comes from: system modification becomes simpler, and the level of user skill required to change structure and functions drops. The role of software architects and programmers shifts to creating software with a high level of abstraction, i.e. implementing the metamodel (which is, naturally, a harder engineering task). Ultimately, however, the approach pays for itself through greater reuse of highly abstract code, automation of many component-linking tasks, a reduced influence of the human factor when modifying and integrating systems, and simpler integration between components of software systems.

Additional materials

1. Metaprogramming

2. Using a metamodel to design databases with several abstraction layers:

Part 1 and Part 2

3. Integration of information systems

Source: https://habr.com/ru/post/154891/
