Seamless migration of MySQL 5.0 -> Percona Server 5.5 with repartitioning storage

Hello.

I want to share the experience of migrating a combat database from MySQL 5.0 to Percona Server 5.5 under load almost on the job.

Our base is old, has experienced several MySQL upgrades. We started with MySQL 3.x. With the increase in load, already on MySQL 5.0 , we configured replication and connected another server for reading. Then we did it using standard MySQL tools, without involving xtrabackup - completely blocking the server at the time of the creation of the master dump and hung out on the sites stubs.

')

Then the next problem arose - a place with data began to run out on the data volume. Plus InnoDB storage has historically been located in a single file. It was considered a lot of solutions. Starting from placing a base on an iSCSI volume and ending with popping up more capacious disks into the raid, expanding the volume group / logical volume with the subsequent expansion of the file system.

As a temporary option, we decided to connect with an iSCSI virtual machine for VMWare vCloud ( no ads, honestly! ). vCloud stands by our side.

We started with an experiment on the slave . The experiment was successful, and for some time the second read-only MySQL kept the storage on the iSCSI volume.

As an experiment, we connected the server to vCloud with the second read slave. Transferred to it the entire load from the first slave. By performance, the virtual server won by a good margin. Affected by more powerful hardware from vCloud hosts. Switched the load back. Began to think.

At this moment a strange mystical event happened. A few hours after the experiment was completed with the load on the virtual server, a tricky accident occurred in the data center, as a result of which several servers were de-energized, including a physical slave. The relay log , located on the iSCSI volume of the physical slave, has garbage. Replication stopped.

The most logical action in this situation was switching to the cloud slave, which we did.

Thus, we were already one read-only foot in vCloud . You had to drag master .

They decided to move to Percona 5.5 , also on two servers, but with a private partitioning of the InnoDB storage.

The main restriction when moving was the inability to stop the base for any long time.

Since we are talking about splitting InnoDB storages into tables, it’s impossible to do without copying data. It is necessary to inject a new base from the dump.

To get a master dump, you need to lock a 125 gig base for more than an hour. This is unacceptable on a combat base. We decided to backup the master with xtrabackup , raise the server on the resulting cast, and remove the master dump from it.

To partition the InnoDB storage in tables on new servers, you must set the option " innodb_file_per_table = 1 ".

Since this is a cloud, we playfully raise three missing servers in five minutes.

As part of the program to reduce downtime to a minimum, we decided not to reconfigure applications to work with the new master, but simply to raise the ip of the old master on the new server. For this, it is necessary that the ip of the old server is visible on the new wizard for all clients. In our case, all five servers are on the same subnet, so there are no problems with this.

In total, we get four servers: two old, but so far combat MySQL 5.0 , and two new Percona 5.5 already with actual data.

The fun begins. Switch master . Here is the point of no return. Starting from this moment, we note the downtime of the master database.

In our case, the downtime was about one and a half minutes. Most of this time was 5.0-master . In principle, idle time could be reduced even more by dropping InnoDB caches before stopping the bases.

vCloud showed a good side. Performance when moving significantly increased due to a more powerful hardware and much more nimble disk subsystem, since the virtual disk is “smeared” by a large number of physical media.

I want to share the experience of migrating a combat database from MySQL 5.0 to Percona Server 5.5 under load almost on the job.

I will describe briefly the evolution of our base to the current state

Our base is old, has experienced several MySQL upgrades. We started with MySQL 3.x. With the increase in load, already on MySQL 5.0 , we configured replication and connected another server for reading. Then we did it using standard MySQL tools, without involving xtrabackup - completely blocking the server at the time of the creation of the master dump and hung out on the sites stubs.

')

Then the next problem arose - a place with data began to run out on the data volume. Plus InnoDB storage has historically been located in a single file. It was considered a lot of solutions. Starting from placing a base on an iSCSI volume and ending with popping up more capacious disks into the raid, expanding the volume group / logical volume with the subsequent expansion of the file system.

As a temporary option, we decided to connect with an iSCSI virtual machine for VMWare vCloud ( no ads, honestly! ). vCloud stands by our side.

We started with an experiment on the slave . The experiment was successful, and for some time the second read-only MySQL kept the storage on the iSCSI volume.

Began to think seriously about moving the base completely to the cloud

As an experiment, we connected the server to vCloud with the second read slave. Transferred to it the entire load from the first slave. By performance, the virtual server won by a good margin. Affected by more powerful hardware from vCloud hosts. Switched the load back. Began to think.

At this moment a strange mystical event happened. A few hours after the experiment was completed with the load on the virtual server, a tricky accident occurred in the data center, as a result of which several servers were de-energized, including a physical slave. The relay log , located on the iSCSI volume of the physical slave, has garbage. Replication stopped.

The most logical action in this situation was switching to the cloud slave, which we did.

Thus, we were already one read-only foot in vCloud . You had to drag master .

They decided to move to Percona 5.5 , also on two servers, but with a private partitioning of the InnoDB storage.

The main restriction when moving was the inability to stop the base for any long time.

Since we are talking about splitting InnoDB storages into tables, it’s impossible to do without copying data. It is necessary to inject a new base from the dump.

To get a master dump, you need to lock a 125 gig base for more than an hour. This is unacceptable on a combat base. We decided to backup the master with xtrabackup , raise the server on the resulting cast, and remove the master dump from it.

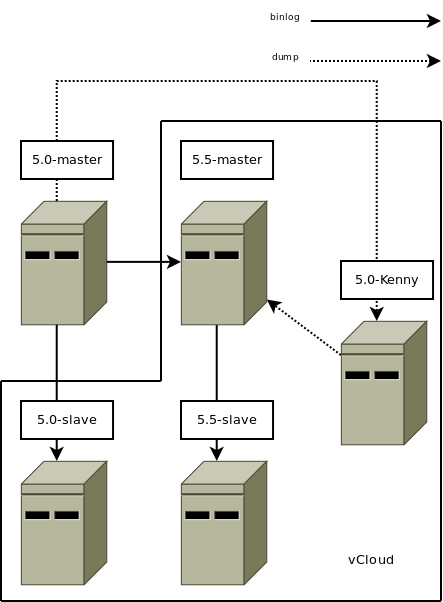

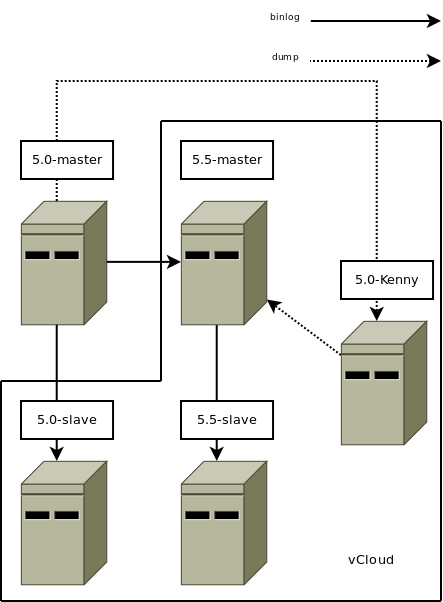

So the ingredients

- Two old combat MySQL-5.0 servers under load. Let's call them 5.0-master and 5.0-slave .

- Two new combat Percona-5.5 servers yet without load, data and replication. Let's call them 5.5-master and 5.5-slave .

- One intermediate MySQL 5.0 server for dumping. Let's call it 5.0-Kenny .

To partition the InnoDB storage in tables on new servers, you must set the option " innodb_file_per_table = 1 ".

Since this is a cloud, we playfully raise three missing servers in five minutes.

As part of the program to reduce downtime to a minimum, we decided not to reconfigure applications to work with the new master, but simply to raise the ip of the old master on the new server. For this, it is necessary that the ip of the old server is visible on the new wizard for all clients. In our case, all five servers are on the same subnet, so there are no problems with this.

And now - a sequence of actions.

- From the master database ( 5.0-master ) we remove the dump using xtrabackup :

innobackupex --user=root --password=Yoo0edae ___

It is important to remember that the more data your database is in MyISAM tables, the longer the base will be locked when xtrabackup is finalized, since MyISAM tables are copied completely during the final database lock. In our case, there is practically no MyISAM data, so the database is in a locked state for only a few seconds. - In the received data directory there is a file " xtrabackup_binlog_info " - we remember from it a position in the binlog.

- The copied copy is copied to 5.0-Kenny ( we mounted the Kenny disk on NFS on 5.0-Master , and pointed it to the destination directory when removing xtrabackup data, so that data on 5.0-Kenny appeared during the xtrabackup operation ). There we prepare it :

innobackupex --apply-log ____ - Stop MySQL . We clean the MySQL data directory, copy our image into it:

innobackupex --copy-back ____

Can be copied manually. Then in the MySQL storage there will be some garbage from the backup process. If necessary, put the correct owner on the data directory. - We start MySQL . We are convinced that there are no errors.

- From 5.0-Kenny we take a dump:

mysqldump --all-databases > mysql.dump - The resulting dump is copied to 5.5-master . Pour in:

mysql < mysql.dump - Restart MySQL . We admire the mountain of errors in the log. Upgrade the scheme to version 5.5 :

mysql_upgrade --force - Restart MySQL . The logs should be clean.

- Turn on replication, connect 5.5-master to the 5.0-master binlog. To do this, we first add to the 5.0-master user for replication (we remember everything about the server-id , right?):

mysql> GRANT REPLICATION SLAVE ON *.* TO 'repl_master_55' IDENTIFIED BY 'slavepass'; mysql> FLUSH PRIVILEGES;

then on the 5.5-master execute the query:mysql> CHANGE MASTER TO MASTER_HOST='master_host_name', MASTER_USER='repl_master_55', MASTER_PASSWORD='slavepass', MASTER_LOG_FILE='recorded_log_file_name', MASTER_LOG_POS=recorded_log_position; mysql> START SLAVE;

Values MASTER_LOG_FILE and MASTER_LOG_POS are taken from the file " xtrabackup_binlog_info ". - Make sure replication goes:

mysql> SHOW SLAVE STATUS\G

Our 5.5-master will be a slave for 5.0-master for a while. In order to get requests from 5.0-master to the 5.5-master binlog, the " log-slave-updates = 1 " option should be set to 5.5-master . By default, it is zero, that is, only local changes fall into the binlog. - Remove from 5.5-master dump using xtrabackup . Copy the dump to 5.5-slave .

- We lift 5.5- slave , we cling it to 5.5-master binlog.

In total, we get four servers: two old, but so far combat MySQL 5.0 , and two new Percona 5.5 already with actual data.

- Switching the entire read-only load from the old 5.0-slave to the new 5.5-slave . Since 5.5-slave replicates with 5.5-master , which in turn replicates with 5.0-master , no one notices anything. Half the battle is done - part of the load is already on the new bundle.

The fun begins. Switch master . Here is the point of no return. Starting from this moment, we note the downtime of the master database.

- Stop MySQL on 5.0-master .

- We are convinced that all the data from binlog got on 5.5-master

- We expel 5.5-master from binlog:

mysql> RESET SLAVE ALL; - We stop MySQL on 5.5-master

- On 5.0-master we put the network interface on which the applications communicated with the base, we raise this ip on the server where we have 5.5-master .

- We are convinced that the arp table has been updated.

- We lift 5.5-master . Make sure MySQL listens on the new ip .

- Restart applications that use persistent-connect to the database.

Everything

In our case, the downtime was about one and a half minutes. Most of this time was 5.0-master . In principle, idle time could be reduced even more by dropping InnoDB caches before stopping the bases.

vCloud showed a good side. Performance when moving significantly increased due to a more powerful hardware and much more nimble disk subsystem, since the virtual disk is “smeared” by a large number of physical media.

Source: https://habr.com/ru/post/154451/

All Articles