HP Dynamic VPN technology. Part 1

Introduction

Greetings to all readers of our blog.

This series of articles will be devoted to our great solution called HP Dynamic VPN (or HP DVPN).

This is the first article in the series. In it, I will explain what the HP DVPN solution is and describe its capabilities and application scenarios. The following articles will cover the practical use of DVPN capabilities and the configuration of the equipment itself.


What is DVPN?

In short, DVPN is an architecture that allows many branches or regional offices (spoke) to dynamically create secure IPsec VPN tunnels over any IP transport to connect to the central office or data center (hub).

Who can benefit from this, and how?

Suppose an organization has many branches or remote offices scattered across a city or even across the country (a bank or a retail chain, for example; there are many such organizations). The task is to connect all these branches to each other over a single transport network and to give them access to the central office or data center that hosts the enterprise's main IT resources.

The task may seem quite trivial, but there are some nuances:

- Some branches may have only one way to connect to the outside world: an Internet link from a provider (simply because there are no alternatives, or the alternatives are not cost-effective). In that case, renting a VPN service from a provider to connect all branches to a single WAN “cloud” is simply not an option.

- The transmitted data must be protected in the transport network. Sending confidential information over the Internet unprotected is not safe at all, and not everyone is willing to fully trust the telecom operator in the rented-VPN option.

- How long will it take to deploy such a network? Days? Weeks? And what if there are thousands of branches? Does the equipment at every branch have to be configured? Do existing devices on the network have to be reconfigured each time a branch is added? And what if some branches need to exchange data with each other directly, bypassing the center?

Because of these nuances and pitfalls, the obvious solutions, such as renting a dedicated VPN from a telecom operator (already mentioned) or connecting the branches to the center directly over the Internet, do not suit us.

What are acceptable ways to solve this problem? The first thing that comes to mind is to use secure IPsec VPN tunnels between the center and the branches over the Internet in a star topology: each branch builds an IPsec tunnel to the center, the center acts as the concentrator for the VPN tunnels coming from the branches, and traffic between branches passes through the center.

Many organizations have used this approach for a long time, and it is supported by the equipment of many vendors (IPsec is a widely accepted standard). For our task, however, it has some very significant drawbacks:

- Each new branch requires its own IPsec tunnel to be configured in the center, which hurts the scalability of the solution and increases the time needed to connect new nodes to the transport network. How long does it take to set up a new IPsec tunnel in the center and then repeat the same tunnel settings at the branch? And if a mistake creeps into the settings, where do you look for the problem, and for how long?

- If branches must be able to communicate directly, bypassing the center (to reduce latency and optimize the load on communication channels), then N nodes in the transport network require N * (N-1) / 2 tunnels (the so-called “Full Mesh” topology). Imagine the amount of work for a network of even a few dozen nodes.

- And what if some nodes (for example, those in regional offices) later need to move to more reliable transport and interconnect over dedicated channels from a telecom operator with guaranteed quality of service, while the other nodes remain connected via the Internet? Will the configuration on these nodes have to be completely redone?
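To put the tunnel-count formula in numbers, here is a small Python sketch comparing the number of statically configured tunnels in a star (one hub plus N-1 spokes) with a full mesh of N nodes. It simply evaluates N - 1 and N * (N-1) / 2; the function names are illustrative only.

```python
def full_mesh_tunnels(n: int) -> int:
    """Tunnels needed so that every pair of n nodes has a direct tunnel."""
    return n * (n - 1) // 2


def hub_and_spoke_tunnels(n: int) -> int:
    """Tunnels needed when one of n nodes is the hub and the rest are spokes."""
    return max(n - 1, 0)


if __name__ == "__main__":
    for n in (10, 50, 100, 1000):
        print(f"{n:>5} nodes: star = {hub_and_spoke_tunnels(n):>4}, "
              f"full mesh = {full_mesh_tunnels(n):>6}")
```

For 100 nodes this gives 99 tunnels in the star but 4,950 in the full mesh, which is exactly the manual configuration burden described above.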

What does the DVPN architecture make possible?

To address these problems and shortcomings, we developed the HP Dynamic VPN (HP DVPN) architecture, which lets you connect up to 3,000 nodes to a single secure transport network (a DVPN domain).

The main features of this architecture:

- DVPN dynamically establishes IPsec VPN tunnels between the nodes of a DVPN domain on top of any IP transport (Internet or WAN).

- To connect a new node, you can take the device configuration from a node that is already connected, change only the IP addresses of the network interfaces that are unique to each branch, and load it onto the new device. The IPsec VPN tunnel to the center then comes up automatically, and connectivity between the new branch and the center is established.

- DVPN is optimized for star (“Hub-and-Spoke”) topologies, which is exactly our case.

- In addition, DVPN can be configured to work in Full Mesh mode. In this mode, IPsec VPN tunnels are created dynamically between branch offices as soon as they begin to exchange data over the network.

- DVPN uses the standard IPsec protocol to create tunnels, with all its advantages (an open standard, a wide choice of encryption and authentication options, dynamic rekeying, etc.).

- The configuration at the central site (Hub) is dynamically updated when a new Spoke node is added to the network. At the same time, other Spoke nodes automatically receive information about the new node and get the opportunity to exchange data with it.

- No manual changes are needed to the configuration of the equipment in the center or at other branches.

- In short, DVPN provides an automated, secure transport architecture that works as an “overlay” on top of an existing IP network, including the Internet.
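The “copy an existing configuration and change only the unique addresses” workflow can be pictured as filling one shared template with per-branch values. The sketch below uses Python's `string.Template`; the parameter names, the key = value format and the VAM server address are hypothetical placeholders chosen for illustration, not HP CLI syntax.

```python
from string import Template

# Hypothetical pseudo-configuration: the keys and format are placeholders,
# NOT real HP CLI commands.
SPOKE_TEMPLATE = Template(
    "vam_server        = $vam_server\n"
    "overlay_address   = $overlay_ip\n"
    "wan_address       = $wan_ip\n"
    "lan_subnet        = $lan_subnet\n"
)

# Values shared by every Spoke (assumed example address from a documentation range).
SHARED = {"vam_server": "198.51.100.10"}


def render_spoke(overlay_ip: str, wan_ip: str, lan_subnet: str) -> str:
    """Fill in the per-branch values; everything else comes from SHARED."""
    return SPOKE_TEMPLATE.substitute(
        SHARED, overlay_ip=overlay_ip, wan_ip=wan_ip, lan_subnet=lan_subnet
    )


if __name__ == "__main__":
    print(render_spoke("10.0.0.18", "203.0.113.18", "192.168.18.0/24"))
```

The point of the design is that only three values differ between branches; everything else is identical, which is what makes mass rollout practical.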

Components of DVPN architecture

DVPN consists of five main components:

- VAM server (up to two per domain, for fault tolerance)

  - VAM stands for VPN Address Management.

  - It is installed in the center; it registers VAM clients and receives address information from all nodes of the DVPN domain.

  - It maps the public IP address (from the underlying transport network) of each node to its private IP address (from the overlay network) and provides this mapping to nodes on request. In effect, it is an address resolution server that works much like DNS.

  - It can run on the same device as the Hub.

- VAM client

  - Registers its public IP address, private IP address and VAM node identifier on the VAM server.

  - Resolves the addresses of other nodes by querying the VAM server.

  - Hub and Spoke devices are VAM clients.

- Hub (up to two per domain, for resiliency)

  - A device (router) located in the center that acts as the concentrator for IPsec VPN tunnels from the Spokes.

  - Allows Spoke connections to be balanced between two Hubs in Active/Active mode.

- Spoke

  - A device (router) installed in a branch office that builds an IPsec tunnel to the Hub and tunnels traffic towards the center.

  - In Full Mesh mode, it can also dynamically build IPsec tunnels to other Spokes.

- Authentication server (RADIUS/TACACS)

  - Allows VAM clients to be authenticated centrally on the VAM server.

  - Optional: the VAM server can also authenticate VAM clients locally.
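To make the VAM server's role concrete, here is a heavily simplified, hypothetical model of its address table: clients register a node identifier, a private (overlay) address and a public (transport) address, and other nodes resolve an overlay address to a public one, much as DNS resolves names. The class names, the allow-list standing in for local authentication, and all addresses are assumptions for illustration; the real registration protocol is not shown.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Set


@dataclass(frozen=True)
class Registration:
    node_id: str     # VAM node identifier
    private_ip: str  # address in the overlay network
    public_ip: str   # address in the underlying transport network


class ToyVamServer:
    """Hypothetical, heavily simplified model of a VAM server's address table."""

    def __init__(self, allowed_clients: Set[str]) -> None:
        # Stand-in for local authentication of VAM clients; a real deployment
        # may delegate this to a RADIUS/TACACS server instead.
        self._allowed = allowed_clients
        self._by_private: Dict[str, Registration] = {}

    def register(self, reg: Registration) -> bool:
        """Record a client's private -> public mapping if it is allowed."""
        if reg.node_id not in self._allowed:
            return False
        self._by_private[reg.private_ip] = reg
        return True

    def resolve(self, private_ip: str) -> Optional[str]:
        """Return the public address for an overlay address, if registered."""
        reg = self._by_private.get(private_ip)
        return reg.public_ip if reg is not None else None


if __name__ == "__main__":
    vam = ToyVamServer(allowed_clients={"hub-1", "spoke-17"})
    vam.register(Registration("hub-1", "10.0.0.1", "198.51.100.1"))
    vam.register(Registration("spoke-17", "10.0.0.17", "203.0.113.17"))
    print(vam.resolve("10.0.0.17"))  # 203.0.113.17
```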

What does this look like?

The following is a simplified diagram of a typical DVPN network in the Hub-and-Spoke and Full Mesh topologies, respectively.

The following features are characteristic of the Hub-and-Spoke topology:

- DVPN is an “Overlay” Non-Broadcast Multiple-Access (NBMA) infrastructure that runs on top of an existing IP transport network and assigns DVPN nodes private IP addresses within a single overlay network segment.

- Spoke nodes have no direct connections with each other; all traffic between them passes through the central Hub node.

- DVPN is optimized for “star” (Hub-and-Spoke) transport topologies because, by default, the overlay network it builds has the same topology.

- In the Hub-and-Spoke topology, one DVPN domain can contain up to 3000 Spoke nodes per Hub with dynamic BGP routing between nodes.

Full Mesh topology has its own characteristics:

- Just like the Hub-and-Spoke topology, Full Mesh DVPN is an NBMA overlay infrastructure that can run on top of any IP transport.

- In the Full Mesh or Partial Mesh topology (where only some of the nodes have direct connections to each other), traffic can travel directly between Spoke nodes over dynamically created IPsec tunnels, offloading the links of the central Hub node.

- The Full Mesh topology scales less well than the Hub-and-Spoke topology. The exact limits depend on many factors, including the performance of the DVPN routers at the nodes.

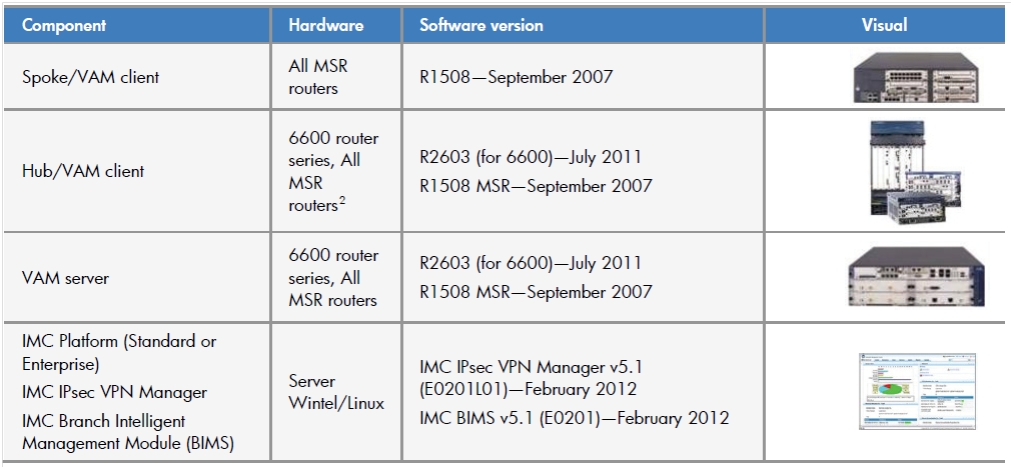
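The on-demand behaviour of Full Mesh mode can be sketched as follows: when a Spoke needs to reach another Spoke, it asks the VAM server for the peer's public address, keeps a direct tunnel once one exists, and falls back to the Hub when the peer is unknown. This is a hypothetical toy model; the class name, the dictionary standing in for VAM queries and the addresses are assumptions, and IPsec negotiation itself is reduced to a single dictionary entry.

```python
from typing import Callable, Dict, Optional


class ToySpoke:
    """Hypothetical Spoke that opens direct tunnels to other Spokes on demand."""

    def __init__(self, resolve: Callable[[str], Optional[str]],
                 hub_public_ip: str) -> None:
        self._resolve = resolve            # stands in for a VAM server query
        self._hub = hub_public_ip
        self.tunnels: Dict[str, str] = {}  # overlay address -> peer public address

    def next_hop(self, dst_overlay_ip: str) -> str:
        """Return the transport address to send traffic for dst_overlay_ip to."""
        if dst_overlay_ip in self.tunnels:
            return self.tunnels[dst_overlay_ip]  # tunnel already up, reuse it
        peer = self._resolve(dst_overlay_ip)
        if peer is None:
            return self._hub                      # unknown peer: go via the Hub
        self.tunnels[dst_overlay_ip] = peer       # "bring up" a direct tunnel
        return peer


if __name__ == "__main__":
    vam_table = {"10.0.0.42": "203.0.113.42"}   # toy VAM data
    spoke = ToySpoke(vam_table.get, hub_public_ip="198.51.100.1")
    print(spoke.next_hop("10.0.0.42"))  # 203.0.113.42
    print(spoke.next_hop("10.0.0.99"))  # 198.51.100.1
    print(len(spoke.tunnels))           # 1
```

Because tunnels exist only for Spoke pairs that actually exchange traffic, the number of live tunnels stays well below the N * (N-1) / 2 worst case in most real networks.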

HP hardware with DVPN support

The table below lists the equipment that supports DVPN and recommends which DVPN component role each device is best suited for.

The table shows that DVPN is supported on almost all HP router lines (HP MSR and HP 6600), the exception being the HP 8800 series.

More information on HP's DVPN-enabled product portfolio is available here.

DVPN and dynamic routing

Without dynamic routing between nodes, all the advantages of the DVPN architecture in terms of simple configuration and scaling would be lost. With a large number of nodes, static routing is clearly impractical, so DVPN supports the following dynamic routing protocols:

- BGP, for large-scale networks (more than 50-100 nodes, up to a maximum of 3,000 per domain)

- OSPF in NBMA mode

- OSPF in broadcast mode, for the Full Mesh topology

DVPN scaling and resiliency

As already mentioned, DVPN scales to 3,000 Spoke nodes per domain. If necessary, the number of domains can be increased to 10 (on an HP 6600 router acting as the Hub), which makes it possible to build networks of up to 30,000 nodes!
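The scaling arithmetic (3,000 Spokes per domain, up to 10 domains on one HP 6600 Hub) can be turned into a quick capacity check. This sketch uses only the two figures quoted in the article and deliberately ignores redundancy (where each Spoke also joins a backup domain) and real-world performance limits; the function name is illustrative.

```python
SPOKES_PER_DOMAIN = 3000  # per-domain limit quoted in the article
MAX_DOMAINS = 10          # quoted for an HP 6600 acting as the Hub


def domains_needed(spokes: int) -> int:
    """Smallest number of DVPN domains that can hold the given Spoke count."""
    if spokes < 0:
        raise ValueError("spokes must be non-negative")
    needed = -(-spokes // SPOKES_PER_DOMAIN)  # integer ceiling division
    if needed > MAX_DOMAINS:
        raise ValueError(
            f"{spokes} spokes exceed {SPOKES_PER_DOMAIN * MAX_DOMAINS} in total"
        )
    return needed


if __name__ == "__main__":
    print(SPOKES_PER_DOMAIN * MAX_DOMAINS)  # 30000
    print(domains_needed(7500))             # 3
```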

For fault tolerance, DVPN networks duplicate the devices that act as the VAM server, the Hub router and the authentication server (if used).

In this setup, the VAM client on each Spoke node registers with both VAM servers, and two independent DVPN domains are created on the Spoke router; within them, IPsec VPN tunnels are built simultaneously to the main and the backup Hub router.

With this approach, dynamic routing protocols can be used to balance traffic between the IPsec VPN tunnels of both DVPN domains (main and backup).

Below is a more detailed diagram of a DVPN network built with redundancy.

Management tools

HP Intelligent Management Center (IMC) makes managing and monitoring a network built on the DVPN architecture even easier and more efficient.

For example, its dedicated Branch Intelligent Management System (BIMS) module makes it possible to abandon manual configuration of Spoke routers in branches entirely, by using the TR-069 protocol for automatic configuration of customer-premises devices.

You can read more about IMC and the BIMS module in upcoming series of HP Networking articles on our company blog.

Conclusion

The DVPN architecture makes it possible to quickly deploy highly scalable and secure corporate data networks of virtually any size and topology for enterprises with an extensive infrastructure of geographically distributed units (branches), on top of any existing L3 transport (the Internet, dedicated WAN channels, etc.).

What's next?

The next article in the DVPN series will describe in detail how to set up a typical DVPN network.

Follow our blog updates!

Source: https://habr.com/ru/post/154141/
