How to build your own photo editor for iOS: a report from the VKontakte contest

Hello to everyone interested!

Yesterday the submission deadline (suddenly) arrived for the first stage of VKontakte's latest iOS photo editor contest. In this article I want to share what I learned and talk about the pitfalls and problems I ran into while developing my entry.

"Engine"

The contest's requirement for shooting, “All filters should work in real time and not slow down the application”, raised the question of which engine to use. The answer can be googled in five seconds, and it is called GPUImage. A friend of mine (we will not name him) said to forget it: it has 140 open issues, and it would be easier to write everything myself. But I did not want to spend the time, and I assess my abilities realistically, so I took the library. I suspect almost all participants used it as well :)

Of course, there were many problems with GPUImage, but all of them turned out to be solvable.

For example, there is a big problem with memory consumption: memory leaks away somewhere, and the system kills the application. It is not always clear whether the cause is your own code or the library. The library ships with plenty of examples, but some subtle points can still be a headache.

For instance, I had a bug in my own code where I called something like

    [stillCamera addTarget:filter];

or

    [filter prepareForImageCapture];

twice. As a result, the application consumed an astronomical amount of memory and crashed when taking a picture (especially with blur).

A related problem is that all the filters create high-resolution textures internally, so I decided to force a smaller processing size:

    CGSize forceSize = CGSizeMake(1024, 1024 * 1.5);
    if (!frontCameraSelected)
        [processFilter forceProcessingAtSize:forceSize];

    @autoreleasepool {
        [stillCamera capturePhotoAsJPEGProcessedUpToFilter:processFilter ...
        ...

However, I have to admit that during these two weeks the library's developer, Brad Larson, fixed several problems, and he generally stays in touch. In a word, well done!

Filters

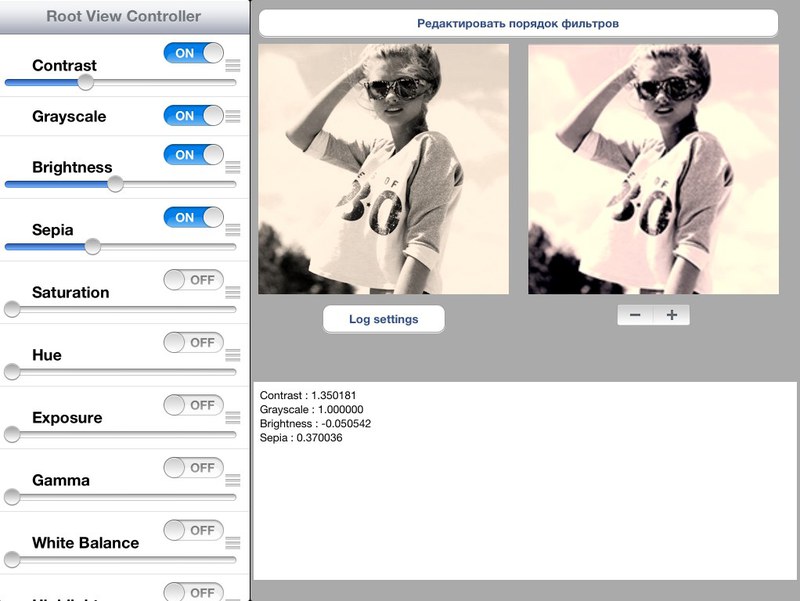

From the programmer's point of view this is perhaps the most difficult part, because every sample filter had to be matched by hand. To do that, I wrote a helper application for the iPad in which I tuned the filters and got the parameters in a form I could use directly with GPUImage.


Approximate filter selection process:

I spent about three days (1-2 hours a day) matching the sample filters, and then started having fun with extra ones. For example, the eight-bit filter is my favorite:

In the end I wrote a class in which each filter is defined as a group. Omitting initialization and internal calls, it looks like this:

    - (void)getFilters {
        /*
         Contrast   : 1.032491
         Gamma      : 1.196751
         Sepia      : 0.442238
         Saturation : 1.198556
         */
        GPUImageContrastFilter *contrast = [[GPUImageContrastFilter alloc] init];
        contrast.contrast = 1.032491f;
        [self prepare:contrast];

        GPUImageGammaFilter *gamma = [[GPUImageGammaFilter alloc] init];
        gamma.gamma = 1.196751;
        [self prepare:gamma];

        GPUImageSepiaFilter *sepia = [[GPUImageSepiaFilter alloc] init];
        sepia.intensity = 0.442238;
        [self prepare:sepia];

        GPUImageSaturationFilter *saturation = [[GPUImageSaturationFilter alloc] init];
        saturation.saturation = 1.198556;
        [self prepare:saturation];
    }

Then a new problem arises with the filters: lag in real time :) Unfortunately, for many filters I never fully beat it. I had the idea of merging the filters into a single shader instead of chaining them in a group. I tried it with one filter set but did not gain much performance, so I decided to save time and not use this approach.
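To illustrate the single-shader idea, here is a minimal CPU-side sketch in plain C that applies contrast and saturation to one pixel in a single pass instead of two. It is not code from the project or from GPUImage: the `Pixel` type and the `apply_combined` name are hypothetical, the formulas are the common textbook ones (assumed, not copied from the library), and gamma and sepia are left out for brevity.

```c
/* One RGB pixel with channels in the range [0, 1]. Hypothetical type. */
typedef struct { float r, g, b; } Pixel;

/* Keep a channel inside [0, 1], as an 8-bit texture would between passes. */
static float clamp01(float v)
{
    return v < 0.0f ? 0.0f : (v > 1.0f ? 1.0f : v);
}

/* Apply contrast and then saturation in one pass over the pixel.
 * Assumed formulas:
 *   contrast:   (c - 0.5) * contrast + 0.5
 *   saturation: luma + (c - luma) * saturation
 */
static Pixel apply_combined(Pixel p, float contrast, float saturation)
{
    float c[3] = { p.r, p.g, p.b };
    int i;

    /* Step 1: contrast around mid-gray. */
    for (i = 0; i < 3; i++) {
        c[i] = clamp01((c[i] - 0.5f) * contrast + 0.5f);
    }

    /* Step 2: saturation relative to the pixel's luminance. */
    float luma = 0.2125f * c[0] + 0.7154f * c[1] + 0.0721f * c[2];
    for (i = 0; i < 3; i++) {
        c[i] = clamp01(luma + (c[i] - luma) * saturation);
    }

    Pixel out = { c[0], c[1], c[2] };
    return out;
}
```

In a real shader the same arithmetic would run per fragment, which saves the intermediate texture between the two filters. Whether that saving is noticeable depends on the filters involved.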

Scaling

One of the conditions of the contest was scaling and fitting the image. UIScrollView handles zooming very well, but we need to capture the result in a GPUImageView. I resorted to a trick and applied a GPUImageTransformFilter to the image. The transform is computed from the zoom and drag state of a UIScrollView that sits on the top layer.

The code for the transformation is:

    - (void)scrollViewDidScroll:(UIScrollView *)scrollView {
        CGPoint offset = scrollView.contentOffset;
        CGSize size = scrollView.contentSize;
        CGFloat scrollViewWidth = scrollView.frame.size.width,
                scrollViewHeight = scrollView.frame.size.height;

        translationX = 0;
        // How far the content extends beyond the visible area on each axis.
        // Note: the width is used for both axes here, which equals the
        // height only when the scroll view is square.
        float a = size.width - scrollViewWidth,
              b = size.height - scrollViewWidth;
        if ((int)a != 0) {
            translationX = (a / scrollViewWidth) * (0.5f - offset.x / a) * 2;
        }
        translationY = 0;
        if ((int)b != 0) {
            translationY = (b / scrollViewWidth) * (0.5f - offset.y / b) * 2;
        }
        // Landscape images need an extra aspect-ratio correction.
        if (size.height < size.width) {
            translationX *= aspectRatio;
            translationY *= aspectRatio;
        }

        // Scale relative to the minimum zoom, then set the translation.
        CGAffineTransform resizeTransform =
            CGAffineTransformMakeScale(scrollView.zoomScale / scrollView.minimumZoomScale,
                                       scrollView.zoomScale / scrollView.minimumZoomScale);
        resizeTransform.tx = translationX;
        resizeTransform.ty = translationY;
        transformFilter.affineTransform = resizeTransform;
        [self fastRedraw];
    }

For me, perhaps the strangest thing here was that when the image is horizontal, the result has to be multiplied by the aspect ratio. Honestly, I arrived at this by trial and error rather than by understanding it.
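The per-axis arithmetic from `scrollViewDidScroll:` can be isolated into a plain C function, which makes it easier to check by hand. This is only a sketch: the name `axis_translation` and its parameters are hypothetical, and it reproduces the formula from the method above without the aspect-ratio correction.

```c
/* Translation for one axis, using the same formula as the method above.
 *
 * content_len: content size along the axis (contentSize)
 * view_len:    visible size used as the divisor (the method uses the width)
 * offset:      current scroll offset along the axis (contentOffset)
 *
 * Returns 0 when the content does not overflow the view.
 */
static float axis_translation(float content_len, float view_len, float offset)
{
    float overflow = content_len - view_len;
    if ((int)overflow == 0) {
        return 0.0f;
    }
    return (overflow / view_len) * (0.5f - offset / overflow) * 2.0f;
}
```

For content twice as wide as the view (640 against 320), an offset of 0 gives 1, the centered offset of 160 gives 0, and the maximum offset of 320 gives -1. Algebraically the expression reduces to `(overflow - 2 * offset) / view_len`, so the translation changes linearly with the offset.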

In addition, zooming and dragging with a filter applied is not a good idea, because it lags badly. So I turn the filter off for the duration of these gestures and turn it back on afterwards. It works just fine.

iPhone 5 support

This is not a very difficult topic, but you need to keep it in mind. The application should not just stretch; it should also behave a little differently. Fortunately, autoresizing solves 80% of the problems, and the remaining 20% are solved with one already well-known method:

    - (BOOL)hasFourInchDisplay {
        return ([[UIDevice currentDevice] userInterfaceIdiom] == UIUserInterfaceIdiomPhone
                && [UIScreen mainScreen].bounds.size.height == 568.0);
    }

Use this check in the important places and adjust animations and frames to the new dimensions, and everything will be fine. You just need to remember that the new iPhone exists and test on it, at least in the simulator.

Recent photos

On the day when everything was practically ready, “recent photos” became a new headache. The problem is that they have to be refreshed promptly: took a photo, refresh; deleted one from the gallery, refresh.

I do not know about the other participants, but I fetched recent photos using AssetsLibrary and the method

    enumerateAssetsAtIndexes ...

This method crashed when you loaded your assets, left the application, deleted something from the gallery, and then returned to the application, because by then [NSIndexSet indexSetWithIndexesInRange:assetsRange] held an invalid set. This problem tormented me until the very last hours before submission, but now it is fixed.
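The article does not say how the crash was fixed. One possible guard, shown here as a plain C sketch, is to clamp the cached range against the current number of assets before building the index set. The `AssetRange` struct (modelled on the location/length layout of NSRange) and the `clamp_asset_range` name are hypothetical.

```c
#include <stddef.h>

/* A location/length pair, laid out like NSRange. Hypothetical type. */
typedef struct {
    size_t location;
    size_t length;
} AssetRange;

/* Shrink a cached range so it never reaches past current_count items.
 * If the range starts beyond the end, an empty range is returned. */
static AssetRange clamp_asset_range(AssetRange cached, size_t current_count)
{
    AssetRange r = cached;
    if (r.location >= current_count) {
        r.location = current_count;
        r.length = 0;
        return r;
    }
    if (r.length > current_count - r.location) {
        r.length = current_count - r.location;
    }
    return r;
}
```

The count would have to be re-read from the gallery each time the application returns to the foreground, since that is exactly when the cached range can go stale.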

Instead of an epilogue

During these two weeks I gained a lot of experience and figured out many interesting things about development, programming, image processing, and working with libraries like this in general.

I would like to wish all the participants good luck and prizes! And first place for me ;)

P.S. I will publish the source code after the contest results are announced, to avoid any incidents.

P.P.S. Link to the source code for the third round: github.com/Dreddik/Phostock

The sources are pretty dirty; they were written very quickly and often thoughtlessly. I apologize :)

Requires Xcode 4.5 or later

Source: https://habr.com/ru/post/153967/
