Security Overview of Windows Azure, Part 1

Good afternoon, dear colleagues.

In this overview I have tried to explain, as simply as possible, how the various aspects of security are implemented on the Windows Azure platform. The overview consists of two parts. The first part covers the fundamentals: confidentiality, identity management, isolation, encryption, integrity, and availability on the Windows Azure platform itself. The second part covers SQL Databases, physical security, client-side security tools, platform certification, and security recommendations.

Security is one of the most important topics when discussing moving applications to the cloud. The growing popularity of cloud computing draws close attention to security issues, especially given resource sharing and multi-tenancy. Multi-tenancy and virtualization call for some security measures specific to cloud platforms, especially considering attacks such as side-channel attacks (attacks that rely on information leaked by the physical implementation of a system).


Since June 7, 2012, the Windows Azure platform can no longer be described simply as SaaS, PaaS, or any other single service model; it is now an umbrella term that unites many types of services. Microsoft provides a secure runtime environment with protection at the operating system and infrastructure levels. Some security measures implemented by the cloud provider are actually stronger than those available in a typical on-premises infrastructure. For example, the physical security of the data centers that host Windows Azure is considerably stronger than that of the vast majority of enterprises and organizations. Windows Azure network security, runtime isolation, and operating system hardening also go well beyond what traditional hosting offers. Thus, moving applications to the cloud can improve their security. In November 2011, the Windows Azure platform and its information security management system were certified by the British Standards Institution as compliant with ISO/IEC 27001. The certified scope includes compute services, storage, virtual network, and virtual machines. The next step will be certification of the remaining Windows Azure functionality: SQL Databases, Service Bus, CDN, and so on.

In general, any cloud platform must provide three basic aspects of customer data security: confidentiality, integrity, and availability, and the Microsoft cloud platform is no exception. In this overview I will try to describe, in as much detail as possible, the technologies and methods used to provide these three aspects on the Windows Azure platform.

Confidentiality

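Confidentiality assures customers that their data is available only to entities that have the right to access it. On the Windows Azure platform, confidentiality is maintained using the following tools and methods:

- Identity management: determining whether an authenticated principal is an entity that is allowed to access a resource.

- Isolation: keeping data separated by means of "containers" at both the physical and logical levels.

- Encryption: additional data protection by means of encryption mechanisms. On the Windows Azure platform, encryption protects communication channels and provides stronger protection for customer data.

Let's look at all three of these mechanisms in more detail.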

Identity management

To begin with, access to your subscription goes through Windows Live ID, one of the oldest and most proven Internet authentication systems. Access to services that are already deployed is controlled through the subscription.

Applications can be deployed to Windows Azure in two ways: from the Windows Azure portal or through the Service Management API (SMAPI). SMAPI exposes REST-based (Representational State Transfer) web services and is intended for developers. The protocol runs over SSL.

SMAPI authentication is based on a public/private key pair and a self-signed certificate that the user creates and registers on the Windows Azure portal. Thus, all critical application management operations are protected by your own certificates. The certificate is not chained to a trusted root certification authority (CA); it is self-signed, which gives reasonable assurance that only specific representatives of the customer have access to the services and data protected in this way.

Windows Azure storage uses its own authentication mechanism based on two storage account keys (SAK) that are associated with each account and can be regenerated by the user.
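To illustrate why each account has two keys, here is a minimal Python sketch of signing a request with a storage account key and of verifying it against whichever keys are currently valid. Shared Key authentication is based on HMAC-SHA256 over a string built from the request; the key values and the simplified string-to-sign below are hypothetical placeholders, not the real wire format.

```python
import base64
import hashlib
import hmac


def sign(message: str, account_key_b64: str) -> str:
    """Return Base64(HMAC-SHA256(message)) using a Base64-encoded account key."""
    key = base64.b64decode(account_key_b64)
    digest = hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def is_authentic(message: str, signature: str, valid_keys_b64: list[str]) -> bool:
    """Accept the request if the signature matches under any currently valid key."""
    return any(
        hmac.compare_digest(sign(message, key), signature) for key in valid_keys_b64
    )


# Hypothetical keys; real keys are generated by the platform.
primary_key = base64.b64encode(b"primary-key-material").decode("ascii")
secondary_key = base64.b64encode(b"secondary-key-material").decode("ascii")

# Simplified, hypothetical string-to-sign (the real one has more fields).
request = "GET\n/myaccount/mycontainer"
signature = sign(request, secondary_key)

# The client has switched to the secondary key, so the primary key can be
# regenerated without interrupting access.
new_primary_key = base64.b64encode(b"regenerated-primary").decode("ascii")
still_accepted = is_authentic(request, signature, [new_primary_key, secondary_key])
```

Because a signature is accepted under either key, an application can move to the second key first and only then regenerate the first one.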

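Thus, Windows Azure implements comprehensive protection and authentication, summarized in the following table.

| Subjects | Protected objects | Authentication mechanism |
|---|---|---|
| Customers | Subscription | Windows Live ID |
| Developers | Windows Azure portal / SMAPI | Windows Live ID (portal), self-signed certificate (SMAPI) |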

Note that storage services can also use Shared Access Signatures to define user rights (for all storage services starting with version 1.7; previously only for blobs). Originally, Shared Access Signatures allowed storage account owners to issue specially signed URLs that granted access to blobs. Now Shared Access Signatures are available for tables and queues in addition to blobs and containers. Before this feature was introduced, performing any CRUD operation on a table or a queue required being the account owner. Now you can give another person a link signed with a Shared Access Signature that specifies exactly which rights are granted. This is the purpose of a Shared Access Signature: fine-grained control over access to resources, defining which operations the holder of the signature can perform on a resource. The operations that can be granted depend on the type of resource; for blobs and containers, for example, they include read, write, delete, and list.

The Shared Access Signature parameters carry all the information needed to grant access to storage resources: the query parameters in the URL define the time interval after which the Shared Access Signature expires, the permissions it grants, the resources to which access is granted, and the signature itself, which is used for authentication. In addition, the Shared Access Signature URL can reference a stored access policy, which provides another layer of control.

Naturally, a Shared Access Signature should be distributed over HTTPS and should grant access for the shortest period needed to perform the required operations.
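The idea of a signed URL that carries its own validity interval and permissions can be sketched in Python as follows. This is a simplified illustration, not the real service algorithm: the query parameter names `st`, `se`, `sp`, and `sig` follow the Shared Access Signature convention, but the string-to-sign layout, the account key, and the host name are assumptions made for the example.

```python
import base64
import hashlib
import hmac
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlencode, urlparse

ACCOUNT_KEY = base64.b64encode(b"hypothetical-account-key")  # placeholder, not a real key
TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def _signature(permissions: str, start: str, expiry: str, resource: str) -> str:
    # Simplified string-to-sign; the real layout depends on the service version.
    string_to_sign = "\n".join([permissions, start, expiry, resource])
    key = base64.b64decode(ACCOUNT_KEY)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def make_sas_url(base_url, resource, permissions, start, lifetime):
    """Build a URL whose query string carries the interval, permissions and signature."""
    st = start.strftime(TIME_FORMAT)
    se = (start + lifetime).strftime(TIME_FORMAT)
    query = urlencode({"st": st, "se": se, "sp": permissions,
                       "sig": _signature(permissions, st, se, resource)})
    return f"{base_url}{resource}?{query}"


def is_allowed(url, resource, operation, now):
    """Server-side check: valid signature, inside the interval, operation granted."""
    params = {k: v[0] for k, v in parse_qs(urlparse(url).query).items()}
    expected = _signature(params["sp"], params["st"], params["se"], resource)
    if not hmac.compare_digest(expected, params["sig"]):
        return False
    start = datetime.strptime(params["st"], TIME_FORMAT).replace(tzinfo=timezone.utc)
    expiry = datetime.strptime(params["se"], TIME_FORMAT).replace(tzinfo=timezone.utc)
    return start <= now <= expiry and operation in params["sp"]


issued = datetime(2012, 6, 7, 12, 0, 0, tzinfo=timezone.utc)
url = make_sas_url("https://myaccount.blob.core.windows.net", "/photos/cat.jpg",
                   "r", issued, timedelta(minutes=15))
```

The holder of `url` can read the blob for fifteen minutes; a write attempt, a request after expiry, or a request for a different resource fails the check.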

A typical use case for Shared Access Signatures is an address book service that must scale to a large number of users. The service lets users store their own address book in the cloud and access it from any device or application. A user subscribes to the service and receives an address book. You can implement this scenario with the Windows Azure role model, in which case the service works as a layer between the client application and the platform's storage services. After the client application is authenticated, it accesses the address book through the service's web interface, and the service forwards the client's requests to the table storage service. In this particular scenario, however, a Shared Access Signature for the table service fits very well and is quite simple to implement. A SAS for the table service can give the client application direct access to the address book. This approach makes the system more scalable and lowers the cost of the solution by removing the request-processing layer. The service's role is then reduced to handling customer subscriptions and generating Shared Access Signature tokens for the client application.

You can read more about Shared Access Signatures in an article specifically dedicated to this topic.

An additional security measure is the principle of least privilege, which is a generally accepted best practice. Following this principle, customers are denied administrative access to their virtual machines (recall that all services in Windows Azure run on top of virtualization), and the software they deploy runs under a special restricted account. Thus, anyone who wants to gain access to the system must first escalate their privileges.

Everything transmitted over the network to Windows Azure and inside the platform is protected with SSL, and in most cases the SSL certificates are self-signed. The exception is connections that can be reached from outside the internal Windows Azure network, for example, to storage services or the fabric controller, which use certificates issued by a Microsoft certification authority.

Windows Azure Access Control Service

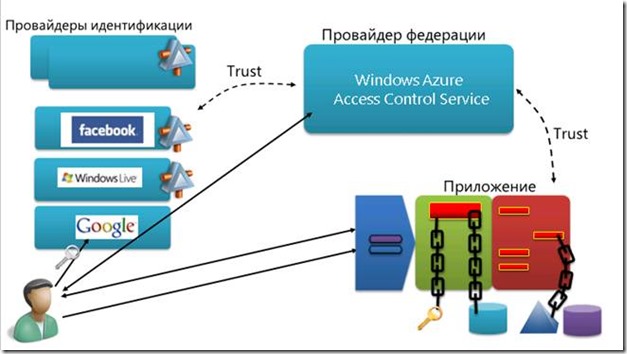

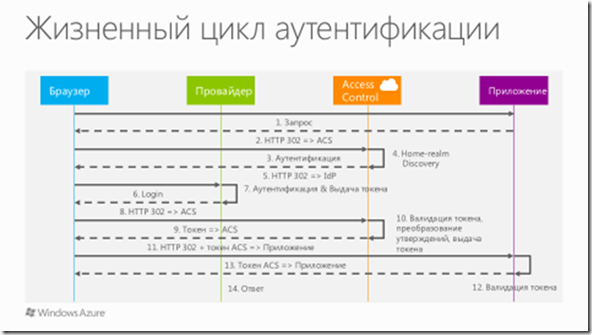

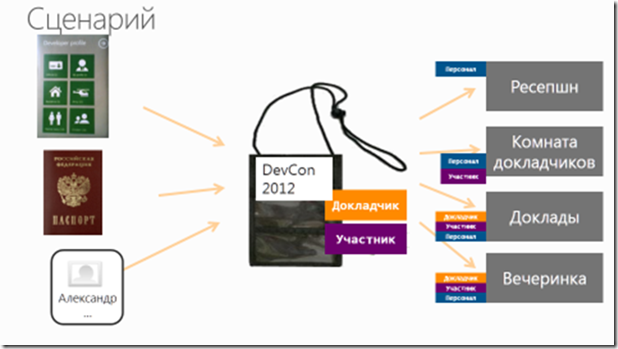

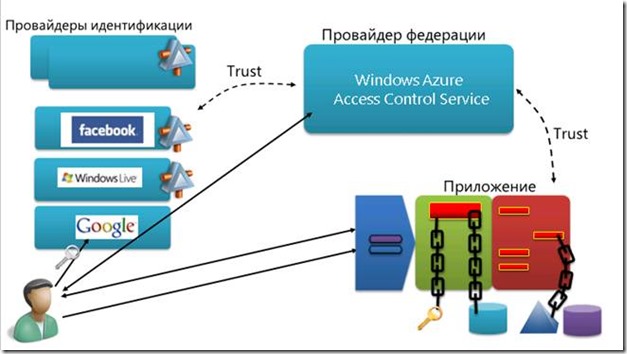

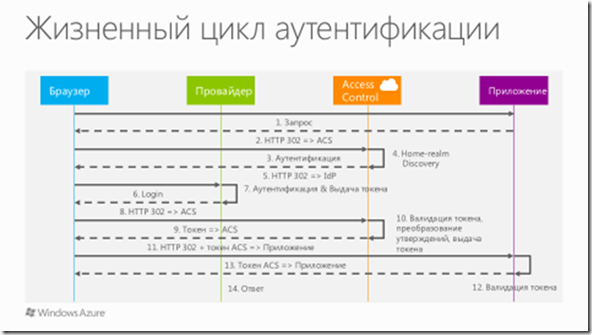

For more complex identity management scenarios, for example, not just a Live ID login but integration of the authentication mechanisms of Windows Azure with cloud (or on-premises) applications, Microsoft offers the Windows Azure Access Control Service (ACS). ACS provides federated security and access control for your cloud or on-premises applications. It has built-in support for AD FS 2.0 and any provider that supports WS-Federation, and the public providers Live ID, Facebook, and Google come preconfigured on the portal. In addition, ACS supports OAuth, OpenID, and REST services.

Windows Azure and the Access Control Service (as well as Windows Azure Active Directory) use claims-based authentication. Claims can include any information about a subject that the identity provider is permitted to release. Claims-based authentication is one of the most effective ways to handle complex authentication scenarios; many web projects, including Google, Yahoo, and Facebook, use claims. After authenticating with the selected identity provider, the client obtains claims via WS-Federation or Security Assertion Markup Language (SAML), and the claims are then passed wherever they are needed inside a security token (a container for claims). Claims make it possible to implement single sign-on (SSO) effectively: users who log in once, for example with their organizational credentials, automatically gain access to all resources.
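The notion of a security token as a signed container of claims can be shown with a toy Python sketch. It is not the SAML or WS-Federation format: the token layout, the claim names, and the shared signing key are all assumptions chosen to keep the example short. The point is only that the relying application trusts the issuer's signature and reads the claims without ever seeing the user's credentials.

```python
import hashlib
import hmac
from urllib.parse import parse_qsl, urlencode

SIGNATURE_MARKER = "&HMACSHA256="


def issue_token(claims: dict, signing_key: bytes) -> str:
    """Issuer side: serialize the claims and append a keyed signature."""
    body = urlencode(sorted(claims.items()))
    signature = hmac.new(signing_key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{body}{SIGNATURE_MARKER}{signature}"


def read_claims(token: str, signing_key: bytes) -> dict:
    """Relying party side: verify the issuer's signature, then return the claims."""
    body, marker, signature = token.rpartition(SIGNATURE_MARKER)
    if not marker:
        raise ValueError("token has no signature")
    expected = hmac.new(signing_key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, signature):
        raise ValueError("token signature is invalid")
    return dict(parse_qsl(body))


# Hypothetical key shared between the issuer and the relying application.
key = b"hypothetical-issuer-key"
claims = {"name": "alice", "role": "user", "identityprovider": "ExampleIdP"}
token = issue_token(claims, key)
```

Calling `read_claims(token, key)` returns the original claims, while a token whose claims were modified after issuing is rejected because its signature no longer matches.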

For example, suppose a customer has an application that authenticates users against a user store located in an on-premises data center. For this it uses a dedicated authentication module that implements a particular standard. At some point it becomes necessary not only to make authentication with a single provider fault-tolerant, but also to let users authenticate through public identity providers such as Windows Live ID, Facebook, and so on. The logic for working with these identity providers is added to the authentication module. But whenever any change, even the most minor, occurs in the authentication logic, standard, or syntax of an identity provider, the developer has to code that change by hand, which is inefficient. The problem becomes even more serious if the application is migrated to the cloud. The Windows Azure Access Control Service addresses exactly this scenario with a cleanly designed infrastructure: from the web application's login page the user is first sent to the Windows Azure Access Control Service, where they select the desired identity provider, then authenticate with it and log in. The developer can abstract away from the internals of authentication, tokens, claims, and so on; Microsoft and the Windows Azure Access Control Service do all that work. This covers the common scenario of providing identity management for an existing application migrated to the cloud.

Another frequent question is: "What if there is an existing application that authenticates users with their domain credentials?" The answer is to add Active Directory Federation Services 2.0 to the combination of Active Directory and the Windows Azure application, which gives a working scenario for integrating the cloud application with the on-premises Active Directory infrastructure. For the user, authentication remains a transparent process. In addition, no credentials are sent to the cloud: AD FS 2.0 acts as an identity provider that receives claim requests along with credentials, builds a security token from the resulting claims, and sends it to the Access Control Service over a secure channel. The application continues to trust only the Windows Azure Access Control Service and to receive claims only from it, while those claims can originate from entirely different sources. A developer can also create a custom claims provider that implements whatever logic is needed for managing user lists and passwords, accepting claim requests, creating tokens, and so on, or use the ASP.NET Membership Provider as a custom claims provider.

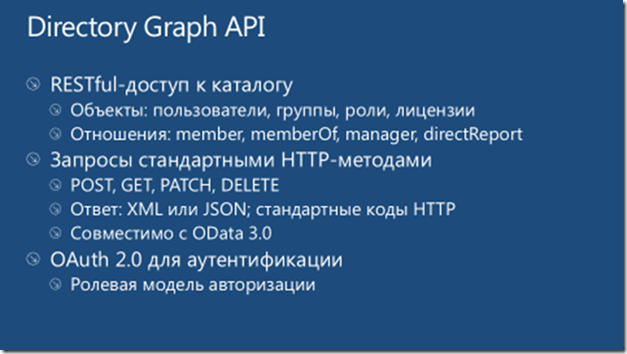

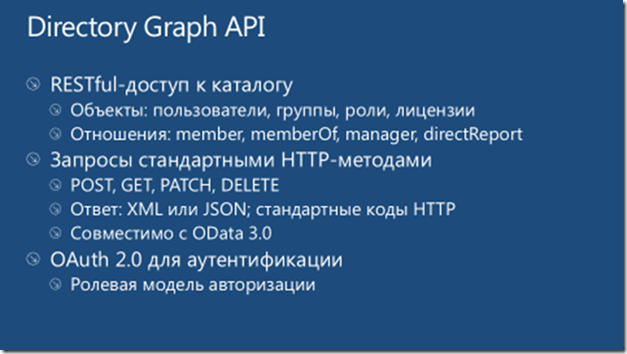

Windows Azure Active Directory

The newest service for implementing authentication scenarios in Windows Azure is Windows Azure Active Directory. One caveat up front: this service is not a full equivalent of the on-premises Active Directory; rather, it extends the on-premises directory into the cloud as its "mirror".

Windows Azure Active Directory consists of three main components: a REST service for creating, reading, updating, and deleting directory information and for using SSO (for example, when integrating with Office 365, Dynamics, or Windows Intune); integration with various identity providers such as Facebook and Google; and a library that simplifies access to Windows Azure Active Directory functionality. Initially, Windows Azure Active Directory was used for Office 365. Now it provides convenient access to the following information:

- Users: passwords, security policies, roles.

- Groups: security and distribution groups.

- Other basic information (for example, about services).

All of this is exposed through Windows Azure AD Graph, an enterprise social graph with a REST interface and an explorer view for easy discovery of information and relationships.

As with the Access Control Service, integrating with an on-premises infrastructure that runs AD requires installing and configuring Active Directory Federation Services 2.0.

Thus, with Windows Azure Active Directory you can create both internal and public applications that use, for example, Office 365, and implement federated authentication and synchronization scenarios between the on-premises Active Directory infrastructure and Windows Azure.

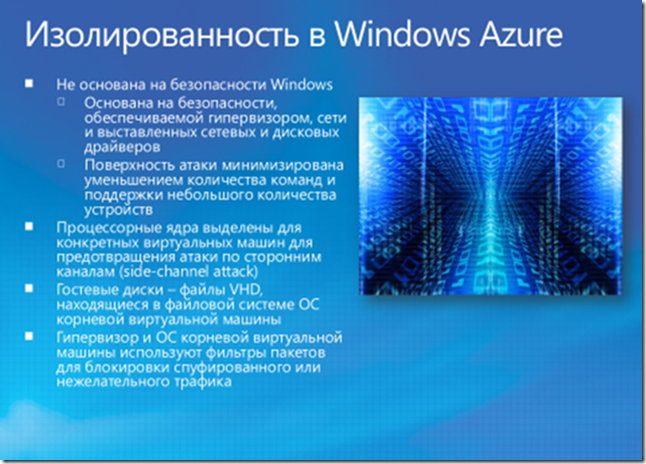

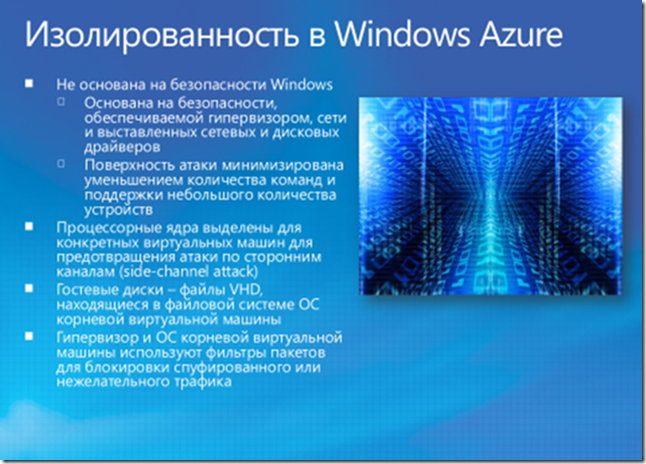

Isolation

Depending on the number of role instances specified by the customer, Windows Azure creates the same number of virtual machines, called role instances (for Cloud Services), and then runs the deployed application on them. These virtual machines, in turn, run on a hypervisor designed specifically for the cloud (the Windows Azure hypervisor).

Of course, an effective security mechanism requires that service instances serving different customers, as well as the data kept in storage services, be isolated from one another.


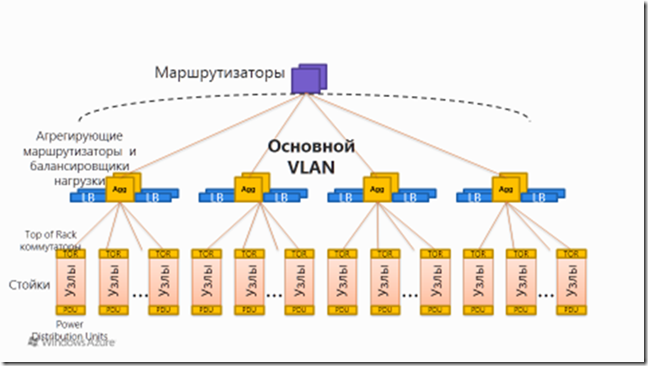

Given that virtually everything on the platform is virtualized, it is crucial to isolate the so-called Root VM (a secured system in which the Fabric Controller hosts its Fabric Agent, which in turn manages the Guest Agents hosted on customer virtual machines) from the guest virtual machines, and the guest virtual machines from one another. Windows Azure uses its own hypervisor, a virtualization layer derived from Hyper-V. It runs directly on the hardware and divides each node into a number of virtual machines. Each node has a Root VM that runs the host operating system. As this operating system, Windows Azure uses a heavily stripped-down version of Windows Server with only the services needed to host virtual machines, which both improves performance and reduces the attack surface. In addition, virtualization in the cloud has given rise to new types of threats, for example:

• Privilege escalation through an attack from a virtual machine on the physical host or on another virtual machine

• Breaking out of a virtual machine and executing code in the context of the physical host OS, taking over control of that OS (jailbreaking, hyperjacking)

All network access and disk operations are mediated by the operating system on the Root VM. Filters on the hypervisor's virtual network control traffic to and from the virtual machines and also prevent various sniffing-based attacks. Other filters block broadcasts and multicasts (except, of course, those needed for DHCP leases). The connection rules are cumulative: for example, if role instances A and B belong to different applications, A can open a connection to B only if A is allowed to open connections to the Internet (which must be configured) and B is allowed to accept connections from the Internet.

As for packet filters, the controller takes the list of roles, translates it into a list of instances of those roles, and then translates that into a list of IP addresses, which the agent uses to configure packet filters that allow intra-application connections to those IP addresses.
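The translation chain described here (roles, then role instances, then IP addresses, then filter rules) can be sketched in a few lines of Python. The data structures, role names, addresses, and the shape of a rule are hypothetical; the actual format used by the controller and agent is not described in this overview.

```python
def build_packet_filters(allowed_roles, role_instances, instance_ips):
    """Translate role names -> role instances -> IP addresses -> allow rules."""
    instances = [inst for role in allowed_roles for inst in role_instances[role]]
    addresses = [instance_ips[inst] for inst in instances]
    return [{"action": "allow", "remote_ip": ip} for ip in addresses]


# Hypothetical deployment: two roles of one application.
role_instances = {
    "WebRole": ["WebRole_IN_0", "WebRole_IN_1"],
    "WorkerRole": ["WorkerRole_IN_0"],
}
instance_ips = {
    "WebRole_IN_0": "10.0.0.4",
    "WebRole_IN_1": "10.0.0.5",
    "WorkerRole_IN_0": "10.0.0.6",
}

rules = build_packet_filters(["WebRole", "WorkerRole"], role_instances, instance_ips)
```

Any address that does not appear in the resulting list gets no allow rule, which is how instances of other applications stay unreachable.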

Note that the Fabric Controller itself is protected as far as possible from potentially compromised Fabric Agents on the hosts. Communication between the controller and the agents is unidirectional: the agent implements an SSL-protected service that the controller calls, so the agent can only respond to requests and cannot initiate a connection to the controller. In addition, if there is a controller or device that cannot use SSL, it is placed in a separate VLAN.

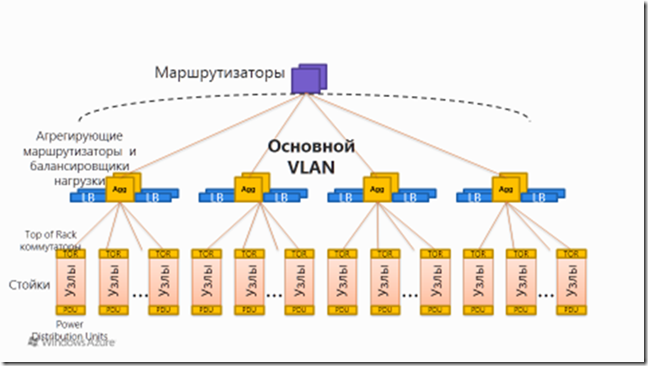

VLANs are used quite heavily in Windows Azure, first of all to isolate controllers and other devices. VLANs partition the network so that two VLANs cannot communicate except through a router, which prevents a compromised node from misbehaving, for example by tampering with traffic or eavesdropping on it. There are three VLANs in each cluster:

1) Main VLAN: connects untrusted customer nodes.

2) Controller VLAN: trusted controllers and supporting systems.

3) Device VLAN: trusted infrastructure devices (for example, network devices).

Note: communication between the controller VLAN and the main VLAN is possible, but only the controller VLAN can initiate a connection to the main VLAN, not the other way around. Likewise, communication from the main VLAN to the device VLAN is blocked.
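The direction-sensitive rules from the note can be written down as a small lookup table. The Python sketch below encodes only the three rules stated in the text; the treatment of traffic inside one VLAN and of any pair the text does not mention is an assumption, marked as such in the comments.

```python
# Cross-VLAN rules stated in the text: (source VLAN, destination VLAN) -> allowed?
STATED_RULES = {
    ("controller", "main"): True,    # the controller may initiate toward the main VLAN
    ("main", "controller"): False,   # but not the other way around
    ("main", "device"): False,       # customer nodes cannot reach infrastructure devices
}


def may_initiate(source_vlan: str, destination_vlan: str) -> bool:
    """Return True if a node in source_vlan may open a connection to destination_vlan."""
    if source_vlan == destination_vlan:
        return True  # assumption: nodes inside one VLAN can reach each other
    # Assumption: any pair not mentioned in the text is denied by default.
    return STATED_RULES.get((source_vlan, destination_vlan), False)
```

For example, `may_initiate("controller", "main")` is allowed, while `may_initiate("main", "controller")` is not.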

Encryption

An effective means of ensuring security is, of course, data encryption. As mentioned above, everything that can be protected with SSL is protected with it. A customer can use the Windows Azure SDK, which extends the core .NET libraries with support for .NET Cryptographic Service Providers (CSPs) in Windows Azure, for example:

1) A full set of cryptographic hash functions, such as MD5 and SHA-2.

2) RNGCryptoServiceProvider, a class for generating random numbers with enough entropy for cryptographic use.

3) Encryption algorithms (for example, AES) proven by years of real-world use.

4) And so on.
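For readers who want to try the first two building blocks from the list, here is a short sketch using the Python standard library rather than the .NET classes named above (the substitution is ours). `hashlib.sha256` is a member of the SHA-2 family, and `secrets.token_bytes` draws from the operating system's cryptographically secure random number generator, which plays the same role as RNGCryptoServiceProvider. MD5 is omitted on purpose, since it should not be used where collision resistance matters.

```python
import hashlib
import secrets

# SHA-256 (a SHA-2 family hash) of some sample data, as a hex string.
digest = hashlib.sha256(b"customer record").hexdigest()

# 32 random bytes (256 bits) from the OS CSPRNG, suitable as key material.
key_material = secrets.token_bytes(32)
another_key = secrets.token_bytes(32)
```

Symmetric encryption such as AES is not part of the Python standard library, so it is not shown here.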

All control messages sent over communication channels within the platform are protected by TLS with cryptographic keys of at least 128 bits.

All calls to Windows Azure operations use standard protocols based on SOAP, XML, and REST. The communication channel may or may not be encrypted, depending on the settings.

One more thing to keep in mind when working with data in Windows Azure: at the storage services level, customer data is not encrypted by default; it is stored in blob or table storage exactly as it was submitted. If you need to encrypt data, you can either do it on the client side or use Trust Services ( http://www.microsoft.com/en-us/sqlazurelabs/labs/trust-services.aspx ) for server-side encryption. With Trust Services, data can be decrypted only by authorized users.

Microsoft Codename Trust Services is an application-level encryption framework for protecting sensitive data that your cloud applications store in Windows Azure. Data encrypted by the framework can be decrypted only by authorized clients, which makes it possible to distribute the encrypted data. The framework also supports searching over encrypted data, stream encryption, and separation of the data administration and publication roles.

For especially critical data, you can use a hybrid solution in which sensitive data is stored on-premises and non-critical data is stored in Windows Azure storage or SQL Databases.

Integrity

When customers work with data in electronic form, they naturally expect it to be protected from both intentional and accidental modification. On Windows Azure, integrity is ensured, first, by the fact that customers have no administrative privileges on the virtual machines on compute nodes and, second, by running code under a Windows account with minimal privileges. There is no durable storage on the VM. Each VM is attached to three local virtual disks (VHDs):

* Drive D: contains one of several versions of Windows. Windows Azure provides various images and updates them in a timely manner. The customer chooses the most suitable version and can switch to a new version of Windows as soon as it becomes available.

* Drive E: contains an image built by the controller with content provided by the customer, for example, an application.

* Drive C: contains configuration information, paging files, and other service data.

Drives D: and E: are, of course, virtual disks and are read-only (their access control lists, or ACLs, deny access from customer processes). However, a "loophole" is left for the operating system: these virtual disks are implemented as a VHD plus a delta file. For example, when the platform updates the VHD for drive D:, which contains the operating system, the delta file of that disk is cleared and filled with the new image. The same applies to the other drives. All drives return to their initial state if the role instance is moved to another physical machine.
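The "VHD plus delta file" arrangement is a form of copy-on-write overlay, which the following Python sketch models conceptually. It is not the VHD format: blocks are dictionary entries, and the class and method names are hypothetical. It shows why a reset, or a move to another machine, returns the disk to its initial state: only the delta is ever written.

```python
class DifferencingDisk:
    """Conceptual model of a read-only base image with a writable delta on top."""

    def __init__(self, base_image: dict):
        self._base = dict(base_image)  # the parent image; never modified by writes
        self._delta = {}               # blocks changed since the last reset

    def read(self, block: int):
        # A block in the delta shadows the same block in the base image.
        return self._delta.get(block, self._base.get(block))

    def write(self, block: int, data: bytes) -> None:
        self._delta[block] = data      # writes never touch the base image

    def reset(self, new_base: dict = None) -> None:
        """Drop all changes; optionally switch to an updated base image."""
        if new_base is not None:
            self._base = dict(new_base)
        self._delta.clear()


disk = DifferencingDisk({0: b"boot", 1: b"os-v1"})
disk.write(1, b"patched")
before_reset = disk.read(1)            # the delta shadows the base block
disk.reset(new_base={0: b"boot", 1: b"os-v2"})
after_reset = disk.read(1)             # the change is gone, the new image is visible
```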

Availability

For any business customer or individual who places a service in the cloud, it is critically important that the service be as available as possible, both to its consumers and to the customer. The Microsoft cloud platform provides a layer of functionality that implements redundancy and thereby the highest possible availability of customer data.

The most important concept behind the basic availability mechanism in Windows Azure is replication. Let's look at the new mechanisms in more detail (and they really are new: the official announcement was made on June 7, 2012).

Locally Redundant Storage (LRS) provides highly durable and available storage within a single geographic location (region). The platform keeps three replicas of each data item in one primary geographic location, which ensures that the data can be recovered after a common failure (for example, of a disk, node, or rack) without downtime for the storage account and therefore without affecting the availability and durability of the storage. All writes to storage are performed synchronously on three replicas in three different fault domains, and a success code is returned only after all three operations have completed successfully. With locally redundant storage, if the data center holding the replicas is put completely out of action for any reason, Microsoft will contact the customer, using the contact details in the customer's subscription, and report the possible loss of information and data.
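The rule "success is reported only after all three replicas have been written" can be expressed as a short Python sketch. The `Replica` class and its `healthy` flag are hypothetical stand-ins for storage nodes in different fault domains; a real system would also repair or retry a failed replica, which this sketch leaves out.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    fault_domain: int
    healthy: bool = True
    data: dict = field(default_factory=dict)

    def write(self, key, value) -> bool:
        """Store the value and acknowledge, or refuse if the node is down."""
        if not self.healthy:
            return False
        self.data[key] = value
        return True


def replicated_write(replicas, key, value) -> bool:
    """Write synchronously to every replica; succeed only if all three acknowledge."""
    acknowledgements = [replica.write(key, value) for replica in replicas]
    return len(replicas) == 3 and all(acknowledgements)


replicas = [Replica(fault_domain=i) for i in range(3)]
ok = replicated_write(replicas, "blob-1", b"payload")

replicas[2].healthy = False            # simulate a failed node
failed = replicated_write(replicas, "blob-2", b"payload")
```

The first write is acknowledged by all three replicas and succeeds; the second is not reported as successful because one replica did not acknowledge it.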

Geo Redundant Storage (GRS) provides a much higher degree of durability and safety by placing replicas not only in the primary geographic location but also in a secondary location in the same region, hundreds of kilometers away. All data in the blob and table storage services is geo-replicated (queues are not). With geo-redundant storage, the platform again keeps three replicas, but in each of two locations. Thus, if a data center stops working, the data remains available from the second location. As with the first redundancy option, write operations in the primary geographic location must be confirmed before the system returns a success code. Once the operation is confirmed, it is replicated asynchronously to the other geographic location. Let's take a closer look at how geographic replication happens.

When you create, delete, update, or otherwise modify data in storage, the transaction is fully replicated to three different storage nodes in three different fault and update domains in the primary geographic location, after which a success code is returned to the client. The confirmed transaction is then replicated asynchronously to the secondary location, where it is again fully replicated to three different storage nodes in different fault and update domains. Overall performance does not suffer, because all of this happens asynchronously.
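The order of events in geo-replication (commit in the primary location, return success, replicate to the secondary location later) can be sketched in Python with a simple queue. The class and method names are hypothetical, and each location is reduced to a single dictionary standing in for its three replicas.

```python
from collections import deque


class GeoReplicatedStore:
    def __init__(self):
        self.primary = {}        # stands for the three synchronous replicas
        self.secondary = {}      # stands for the three replicas in the second location
        self._pending = deque()  # confirmed transactions not yet geo-replicated

    def write(self, key, value) -> str:
        self.primary[key] = value            # synchronous commit in the primary location
        self._pending.append((key, value))   # queued for later; the client does not wait
        return "success"

    def replicate_pending(self) -> None:
        """Background step: apply queued transactions to the secondary location in order."""
        while self._pending:
            key, value = self._pending.popleft()
            self.secondary[key] = value


store = GeoReplicatedStore()
status = store.write("table-row-1", {"name": "alice"})
visible_in_secondary_before = "table-row-1" in store.secondary
store.replicate_pending()
visible_in_secondary_after = "table-row-1" in store.secondary
```

The client gets its success code before the secondary location has the data, which is why write latency is not affected, and also why the most recent writes may be missing from the secondary location if the primary fails.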

Now a few words about geographic fault tolerance and how everything is restored after a serious failure. If a serious failure occurs in the primary geographic location, Microsoft naturally tries to minimize the consequences. However, if things are really bad and the data is lost, geographic failover may have to be applied: the customer is notified of the disaster in the primary location, after which the corresponding DNS records (account.service.core.windows.net) are repointed from the primary location to the secondary one. Of course, while the DNS records are being switched, the service is unlikely to be available, but once the switch is complete, existing blobs and tables become available again at their URLs. After the failover is complete, the secondary geographic location is promoted to primary (until the next data center failure). Immediately after the promotion, the platform also starts creating a new secondary geographic location in the same region and replicating data to it.

All of this is managed by the Fabric Controller. If the guest agents (GA) installed on virtual machines stop responding, the controller moves the affected workloads to another node and reprograms the network configuration to restore full availability.

The Windows Azure platform also has update domains and fault domains, mechanisms that keep a deployed service available even during an operating system update or a hardware failure.

Fault domains are a physical unit, a deployment container, usually bounded by a rack. Why a rack? Because if the domains are located in different racks, the instances end up placed so that simultaneous failure of all of them is very unlikely. In addition, a failure in one fault domain must not cause failures in other domains. Thus, if something breaks in a fault domain, the entire domain is marked as failed and the deployment is moved to another fault domain. At the moment the number of fault domains cannot be controlled by the user; this is the responsibility of the Fabric Controller.

Update domains are a more controllable entity. The user has a degree of control over update domains and can perform incremental, or rolling, updates of one group of service instances at a time. Update domains differ from fault domains in that they are logical entities, whereas fault domains are physical. Because an update domain groups role instances logically, one application can span several update domains while being located in only two fault domains. In that case, updates can be applied first in update domain 1, then in update domain 2, and so on.
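A rolling update over update domains can be sketched in Python as follows. The round-robin assignment of instances to domains and the instance names are assumptions made for the example; the point is that at every step only one domain is being updated while the instances in the other domains keep serving requests.

```python
def assign_update_domains(instances, domain_count):
    """Distribute instances across update domains (round-robin is an assumption)."""
    domains = [[] for _ in range(domain_count)]
    for index, instance in enumerate(instances):
        domains[index % domain_count].append(instance)
    return domains


def rolling_update(instances, domain_count):
    """Yield (instances being updated, instances still serving) for each step."""
    for domain in assign_update_domains(instances, domain_count):
        still_serving = [inst for inst in instances if inst not in domain]
        yield domain, still_serving


instances = ["web_0", "web_1", "web_2", "web_3"]   # hypothetical role instances
steps = list(rolling_update(instances, domain_count=2))
```

With four instances in two update domains, each step takes two instances offline and leaves the other two serving, so the service as a whole never goes down during the update.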

Each data center has at least two power sources, including an autonomous power supply. Environmental controls are self-contained and keep operating as long as the systems remain online.

Let's look at all three of these technologies in more detail.

Identity management

To begin with, your subscription is accessed through the secure Windows Live ID system, one of the oldest and most proven Internet authentication systems. Access to already deployed services is controlled through the subscription.

Applications can be deployed to Windows Azure in two ways: from the Windows Azure portal or through the Service Management API (SMAPI). SMAPI exposes web services in the Representational State Transfer (REST) style and is intended for developers. It works over SSL.

SMAPI authentication is based on the user creating a public/private key pair and a self-signed certificate, which is registered on the Windows Azure portal. Thus, all critical application management actions are protected by your own certificates. The certificate is not chained to a trusted root certificate authority (CA); it is self-signed, which gives reasonable assurance that only specific representatives of the client have access to the services and data protected in this way.

Windows Azure storage uses its own authentication mechanism based on two Storage Account Keys (SAK) that are associated with each account and can be regenerated by the user.

Thus, Windows Azure implements comprehensive protection and authentication; the table below summarizes it.

| Subjects | Objects of protection | Authentication mechanism |
| --- | --- | --- |
| Customers | Subscription | Windows Live ID |
| Developers | Windows Azure portal / SMAPI | Windows Live ID (portal), self-signed certificate (SMAPI) |
| Role instances | Storage | Key |
| External applications | Storage | Key |
| External applications | Applications | User defined |
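To illustrate the key-based rows of the table: with key authentication, the caller signs each storage request with an HMAC-SHA256 computed from the account key, and the service recomputes the signature to check it. The Python sketch below shows only that idea. The string being signed is a simplified placeholder rather than the real canonical request format, and `sign_request` and `verify_with_either_key` are hypothetical helper names. Accepting either key hints at why two keys per account are useful: one can be regenerated while the other stays valid.

```python
import base64
import hashlib
import hmac


def sign_request(string_to_sign: str, account_key_b64: str) -> str:
    """Return the Base64-encoded HMAC-SHA256 signature of string_to_sign."""
    key = base64.b64decode(account_key_b64)  # account keys are Base64 text
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def verify_with_either_key(string_to_sign: str, signature: str,
                           primary_b64: str, secondary_b64: str) -> bool:
    """Accept a signature produced with either of the two account keys."""
    return any(
        hmac.compare_digest(sign_request(string_to_sign, key), signature)
        for key in (primary_b64, secondary_b64)
    )


# Demo keys, invented for this example only.
PRIMARY = base64.b64encode(b"primary-demo-key").decode("ascii")
SECONDARY = base64.b64encode(b"secondary-demo-key").decode("ascii")

# Simplified placeholder for the request description that gets signed.
REQUEST = "GET\n/demoaccount/container"
SIGNATURE = sign_request(REQUEST, SECONDARY)
```

A request whose method or path is altered after signing no longer matches the signature, so it would be rejected.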

Note that the storage services also support Shared Access Signatures for defining user rights. Before version 1.7, Shared Access Signatures were available only for blobs, allowing storage account owners to issue specially signed URLs that grant access to blobs. Starting with version 1.7, Shared Access Signatures are available for tables and queues in addition to blobs and containers. Before this feature was introduced, performing any CRUD operation on a table or a queue required being the owner of the account. Now you can give another person a link signed with a Shared Access Signature and specify which rights it grants. This is the purpose of a Shared Access Signature: fine-grained control of access to resources, defining which operations the holder of the signature can perform on a resource. The operations that a Shared Access Signature can grant include:

- Reading and writing content; for blobs this also covers their properties, metadata and block lists.

- Deleting and leasing blobs and creating blob snapshots.

- Retrieving lists of content items.

- Adding, deleting and updating messages in queues.

- Retrieving queue metadata, including the number of messages in the queue.

- Querying, adding, updating and deleting entities in a table.

The Shared Access Signature parameters carry all the information needed to grant access to storage resources: the query parameters in the URL specify the time interval after which the Shared Access Signature expires, the permissions it grants, the resources to which access is granted and, finally, the signature used for authentication. In addition, the Shared Access Signature URL can reference a stored access policy, which provides another layer of control.

Naturally, a Shared Access Signature should be distributed over HTTPS and should grant access for the shortest period needed to perform the operations.
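The interplay of validity interval, permissions, resource and signature can be sketched in Python as follows. This is an illustration of the concept only: the parameter names (`start`, `expiry`, `resource`, `permissions`, `sig`), the layout of the signed string and the helper functions are assumptions made for this example and do not reproduce the real Shared Access Signature wire format.

```python
import base64
import hashlib
import hmac
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlencode

TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"
SIGNED_FIELDS = ("start", "expiry", "resource", "permissions")


def _signature(key_b64: str, fields: dict) -> str:
    """HMAC-SHA256 over the signed fields, joined in a fixed order."""
    string_to_sign = "\n".join(fields[name] for name in SIGNED_FIELDS)
    key = base64.b64decode(key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def make_sas_query(key_b64, resource, permissions, start, expiry) -> str:
    """Build a query string that grants `permissions` on `resource`."""
    fields = {
        "start": start.strftime(TIME_FORMAT),
        "expiry": expiry.strftime(TIME_FORMAT),
        "resource": resource,
        "permissions": permissions,
    }
    fields["sig"] = _signature(key_b64, fields)
    return urlencode(fields)


def is_valid(key_b64, query, now, needed_permission) -> bool:
    """Check signature, time window and permission, as a service would."""
    fields = {name: values[0] for name, values in parse_qs(query).items()}
    if not hmac.compare_digest(_signature(key_b64, fields), fields["sig"]):
        return False  # some parameter was altered after signing
    start = datetime.strptime(fields["start"], TIME_FORMAT).replace(tzinfo=timezone.utc)
    expiry = datetime.strptime(fields["expiry"], TIME_FORMAT).replace(tzinfo=timezone.utc)
    return start <= now <= expiry and needed_permission in fields["permissions"]


# Demo values, invented for this example: read-only access for 15 minutes.
DEMO_KEY = base64.b64encode(b"demo-account-key").decode("ascii")
T0 = datetime(2012, 10, 1, 12, 0, tzinfo=timezone.utc)
QUERY = make_sas_query(DEMO_KEY, "/demoaccount/addressbook", "r",
                       T0, T0 + timedelta(minutes=15))
```

Because the permissions and the expiry time are part of the signed string, a holder of the link cannot widen the rights or extend the lifetime without invalidating the signature.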

A typical use case for Shared Access Signatures is an address book service that must scale to a large number of users. The service lets a user store their address book in the cloud and access it from any device or application. The user subscribes to the service and receives an address book. You can implement this scenario with the Windows Azure role model, in which case the service acts as a layer between the client application and the platform's storage services. After the client application is authenticated, it accesses the address book through the service's web interface, and the service forwards the client's requests to the table storage service. In this scenario, however, a Shared Access Signature for the table service is a very good fit and is quite simple to implement. A table SAS can give the client application direct access to the address book. This approach improves scalability and reduces the cost of the solution by removing the service layer that processes requests. The role of the service is then reduced to handling customer subscriptions and generating Shared Access Signature tokens for the client application.

You can read more about Shared Access Signatures in an article specifically dedicated to this topic.

An additional security measure is the principle of least privilege, which is also a generally accepted recommended practice. Under this principle, clients are denied administrative access to their virtual machines (recall that all services in Windows Azure run on virtualization), and the software they launch runs under a special restricted account. Thus, anyone who wants to gain access to the system by any means must first escalate privileges.

Everything transmitted over the network to Windows Azure and inside the platform is protected with SSL, and in most cases the SSL certificates are self-signed. The exception is data transfer outside the internal Windows Azure network, for example for the storage services or the fabric controller, which use certificates issued by a Microsoft certificate authority.

Windows Azure Access Control Service

For more complex identity management scenarios, for example when you need not just a Live ID login but integration between Windows Azure authentication mechanisms and cloud (or on-premises) applications, Microsoft offers the Windows Azure Access Control Service (ACS). ACS provides federated security and access control for your cloud or on-premises applications. ACS has built-in support for AD FS 2.0 and for any provider that supports WS-Federation; in addition, the public providers Live ID, Facebook and Google are preconfigured on the portal. ACS also supports the OAuth and OpenID protocols and REST services.


Windows Azure and the Access Control Service (including Windows Azure Active Directory) use claims-based authentication. Claims may include any information about the subject that the identity provider is willing to release. Claims-based authentication is one of the most effective ways to handle complex authentication scenarios; many web projects use claims, including Google, Yahoo and Facebook. After authenticating with the selected identity provider, the client obtains claims via WS-Federation or the Security Assertion Markup Language (SAML); the claims are then passed wherever they are needed inside a security token (a container that holds the claims). Claims make it possible to implement single sign-on (SSO) effectively: users who log in with, for example, their organization's credentials automatically gain access to all resources.
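The idea of a security token as a signed container of claims can be shown with a small Python sketch. It is deliberately simplified: a JSON payload signed with HMAC-SHA256 stands in for a real SAML token with its XML signature, and `issue_token`, `read_claims` and the demo key are hypothetical names invented for this example.

```python
import base64
import hashlib
import hmac
import json


def issue_token(claims: dict, signing_key: bytes) -> str:
    """Identity provider side: pack the claims and sign the package."""
    payload = base64.urlsafe_b64encode(
        json.dumps(claims, sort_keys=True).encode("utf-8"))
    signature = base64.urlsafe_b64encode(
        hmac.new(signing_key, payload, hashlib.sha256).digest())
    return (payload + b"." + signature).decode("ascii")


def read_claims(token: str, signing_key: bytes) -> dict:
    """Application side: trust the claims only if the signature matches."""
    payload, _, signature = token.encode("ascii").partition(b".")
    expected = base64.urlsafe_b64encode(
        hmac.new(signing_key, payload, hashlib.sha256).digest())
    if not hmac.compare_digest(expected, signature):
        raise ValueError("token signature is not valid")
    return json.loads(base64.urlsafe_b64decode(payload))


# Demo issuer key and claims, invented for this example.
ISSUER_KEY = b"demo-issuer-key"
TOKEN = issue_token({"name": "alice", "role": "editor"}, ISSUER_KEY)
```

The application never sees a password; it makes its authorization decision from the claims it finds in a token whose issuer it trusts.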

For example, a client has an application that authenticates users against a user information store located in an on-premises data center. It does this through a dedicated authentication module that implements a specific standard. At some point it becomes necessary not only to have a fault-tolerant authentication mechanism with a single provider, but also to let users authenticate through public identity providers such as Windows Live ID, Facebook, and so on. The logic for working with these identity providers is added to the authentication module. But whenever any change, even a trivial one, occurs in the authentication logic, standard or syntax of an identity provider, the developer has to code that change by hand, which is inefficient. The problem becomes even more serious if the application is migrated to the cloud. The Windows Azure Access Control Service solves this scenario with an elegantly designed infrastructure: the user is first redirected from the web application's login page to the Windows Azure Access Control Service, selects the required identity provider there, authenticates with it, and is logged in to the application. The developer can abstract away entirely from the internal authentication mechanisms, tokens, claims, and so on; Microsoft and the Windows Azure Access Control Service do all that work. In this way you can implement the common scenario of providing identity management for an existing application that has been migrated to the cloud.

Another frequent question is "what should I do with an existing application that authenticates users with their domain credentials?" The answer is to add Active Directory Federation Services 2.0 to the combination of AD and the Windows Azure application, which gives a working scenario for integrating the cloud application with the on-premises Active Directory infrastructure. For the user, authentication remains a transparent process. In addition, no credentials are sent to the cloud: AD FS 2.0 acts as an identity provider that receives claim requests accompanied by credentials, forms a security token from the claims and sends it over a secure channel to the Access Control Service. The application continues to trust only the Windows Azure Access Control Service and to receive claims only from it, although those claims can come from completely different sources. A developer can also create a custom claims provider that implements any logic needed for managing user lists and passwords, accepts claim requests, creates tokens, and so on, or use the ASP.NET Membership Provider as a custom claims provider.

Windows Azure Active Directory

The newest service for implementing authentication scenarios in Windows Azure is Windows Azure Active Directory. One caveat up front: this service is not a complete analog of on-premises Active Directory; rather, it extends the on-premises directory to the cloud as its "mirror".

Windows Azure Active Directory consists of three main components: a REST service for creating, retrieving, updating and deleting directory information and for SSO (for example, when integrating with Office 365, Dynamics or Windows Intune); integration with various identity providers such as Facebook and Google; and a library that simplifies access to Windows Azure Active Directory functionality. Initially, Windows Azure Active Directory was used for Office 365. It now provides convenient access to the following information:

- Users: passwords, security policies, roles.

- Groups: security and distribution groups.

- Other basic information (for example, about services).

All of this is provided through Windows Azure AD Graph, an enterprise social graph with a REST interface and an explorer view for easy discovery of information and relationships.

As with the Access Control Service, integrating with an on-premises infrastructure that runs AD requires installing and configuring Active Directory Federation Services 2.0.

Thus, with Windows Azure Active Directory you can create both internal and public applications that use, for example, Office 365, and implement federated authentication and synchronization scenarios between the on-premises Active Directory infrastructure and Windows Azure.

Isolation

Depending on the number of role instances defined by the client, Windows Azure creates an equal number of virtual machines, called role instances (for Cloud Services), and then launches the deployed application on them. These virtual machines, in turn, run on a hypervisor specially designed for the cloud (the Windows Azure hypervisor).

Of course, an effective security mechanism must isolate from one another both the service instances that serve different clients and the data held in the storage services.


Because everything on the platform is virtualized, it is crucial to isolate the so-called Root VM (a hardened system where the Fabric Controller hosts its Fabric Agent, which in turn manages the Guest Agents hosted on client virtual machines) from guest virtual machines, and guest virtual machines from each other. Windows Azure uses its own hypervisor, a virtualization layer derived from Hyper-V. It runs directly on the hardware and divides each node into a number of virtual machines. Each node has a Root VM running the host operating system. For this operating system Windows Azure uses a heavily stripped-down version of Windows Server with only the services needed to support the hosted virtual machines, which both improves performance and reduces the attack surface. In addition, virtualization in the cloud has given rise to new types of threats, for example:

• Privilege escalation through an attack from a virtual machine on the physical host or on another virtual machine

• Escaping from a virtual machine and executing code in the context of the physical host's OS, taking over control of that OS (jailbreaking, hyperjacking)

All network access and disk operations are controlled by the operating system on the Root VM. Filters on the hypervisor's virtual network control traffic to and from the virtual machines and also prevent various sniffing-based attacks. Other filters block broadcasts and multicasts (except, of course, those needed for DHCP leases). The connection rules are cumulative: for example, if role instances A and B belong to different applications, A can open a connection to B only if A is allowed to open connections to the Internet (which must be configured) and B is allowed to accept connections from the Internet.

As for packet filters, the controller takes the list of roles, translates it into a list of instances of those roles, and then translates that into a list of IP addresses, which the agent uses to configure packet filters that allow intra-application connections to these IP addresses.
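The translation chain just described (roles to instances to IP addresses to a filter) can be sketched in Python. The data structures, function names and addresses are assumptions made for this illustration; the actual Fabric Controller and agent code is not public in this article.

```python
from typing import Callable, Dict, Iterable, List, Set


def allowed_ips(roles: Iterable[str],
                instances_by_role: Dict[str, List[str]],
                ip_by_instance: Dict[str, str]) -> Set[str]:
    """Translate roles -> role instances -> IP addresses."""
    ips: Set[str] = set()
    for role in roles:
        for instance in instances_by_role.get(role, []):
            ips.add(ip_by_instance[instance])
    return ips


def build_filter(allowed: Set[str]) -> Callable[[str, str], bool]:
    """Return a packet filter that permits only intra-application traffic."""
    def permits(source_ip: str, target_ip: str) -> bool:
        return source_ip in allowed and target_ip in allowed
    return permits


# Hypothetical application with two roles and three instances.
INSTANCES = {"web": ["web_0", "web_1"], "worker": ["worker_0"]}
ADDRESSES = {"web_0": "10.0.0.4", "web_1": "10.0.0.5", "worker_0": "10.0.0.6"}

APP_IPS = allowed_ips(["web", "worker"], INSTANCES, ADDRESSES)
PERMITS = build_filter(APP_IPS)
```

With this filter, `web_0` may talk to `worker_0`, while a packet to an address outside the application's list is dropped.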

Note that the Fabric Controller itself is protected as much as possible from potentially compromised Fabric Agents on the hosts. Communication between the controller and the agents is unidirectional: the agent implements an SSL-protected service that the controller calls, and it can only reply to requests; it cannot initiate a connection to the controller. In addition, any controller or device that cannot use SSL is placed in a separate VLAN.

VLANs are used quite actively in Windows Azure, above all to isolate controllers and other devices. VLANs partition the network so that two VLANs can communicate only through a router, which prevents a compromised node from doing harm such as forging traffic or eavesdropping on it. Each cluster has three VLANs:

1) Main VLAN - connects untrusted client nodes.

2) Controller VLAN - trusted controllers and supporting systems.

3) Device VLAN - trusted infrastructure devices (for example, network devices).

Note: communication between the controller VLAN and the main VLAN is possible, but only the controller VLAN can initiate a connection to the main VLAN, not the other way around. Likewise, communication from the main VLAN to the device VLAN is blocked.
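The direction-dependent rules from the note can be written down as a small lookup table. The Python sketch below encodes only the three rules stated above; the names are hypothetical, and any pair of VLANs that the text does not mention deliberately returns `None` rather than a guess.

```python
from typing import Optional

# (initiating VLAN, target VLAN) -> is the connection allowed?
# Only the rules stated in the text are listed here.
STATED_RULES = {
    ("controller", "main"): True,    # controller may open connections to main
    ("main", "controller"): False,   # the reverse direction is blocked
    ("main", "device"): False,       # untrusted nodes cannot reach devices
}


def may_initiate(source_vlan: str, target_vlan: str) -> Optional[bool]:
    """Return True/False for stated rules, None when the text is silent."""
    return STATED_RULES.get((source_vlan, target_vlan))
```

Modelling the rule as an ordered pair makes the asymmetry explicit: the same two VLANs give different answers depending on which side starts the connection.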

Encryption

An effective means of ensuring security is, of course, data encryption. As mentioned above, everything that can be protected with SSL is. A client can use the Windows Azure SDK, which extends the base .NET libraries with integration of the .NET Cryptographic Service Providers (CSP) into Windows Azure, for example:

1) A full set of cryptographic hash functions, such as MD5 and SHA-2.

2) RNGCryptoServiceProvider - a class for generating random numbers with enough entropy for cryptographic use.

3) Encryption algorithms (for example, AES) proven over years of real-world use.

4) And so on.
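For readers who want to try the first two kinds of primitives without a .NET environment, here is a minimal sketch using Python standard library analogues. These are not the .NET CSP classes named above: `hashlib.sha256` is a SHA-2 hash and `secrets.token_bytes` is a cryptographically strong random generator, and the helper names are invented for this example.

```python
import hashlib
import secrets


def sha256_hex(data: bytes) -> str:
    """SHA-256 (a member of the SHA-2 family) as a hex string."""
    return hashlib.sha256(data).hexdigest()


def new_key(num_bytes: int = 32) -> bytes:
    """Random bytes from the OS cryptographic generator, e.g. for a key."""
    return secrets.token_bytes(num_bytes)


DIGEST_OF_EMPTY = sha256_hex(b"")
KEY = new_key()
```

MD5 is listed in the text, but for new designs SHA-2 is the safer choice because MD5 is no longer collision resistant.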

All control messages transmitted over communication channels within the platform are protected by the TLS protocol with cryptographic keys at least 128 bits long.

All calls to Windows Azure operations use standard protocols: SOAP, XML and REST. The communication channel may or may not be encrypted, depending on the settings.

Another thing to consider when working with data in Windows Azure is that client data is not encrypted by default at the storage service level: it is stored in blobs or tables exactly as it was written. If you need to encrypt data, you can do it on the client side or use Trust Services ( http://www.microsoft.com/en-us/sqlazurelabs/labs/trust-services.aspx ) for server-side encryption. With Trust Services, data can be decrypted only by authorized users.

Microsoft Codename Trust Services is an application-level encryption framework for protecting sensitive data that your cloud applications store in Windows Azure. Data encrypted by the framework can be decrypted only by authorized clients, which makes it possible to distribute the encrypted data. The framework also supports searching over encrypted data, stream encryption, and separation of the data administration and publishing roles.

For especially critical data, you can use a hybrid solution in which sensitive data is stored on-premises and non-critical data is stored in Windows Azure storage or SQL Databases.

Integrity

When a client works with data in electronic form, they naturally expect it to be protected from changes, both intentional and accidental. On Windows Azure, integrity is ensured, first, by the fact that clients have no administrative privileges on the virtual machines on compute nodes and, second, by running code under a Windows account with minimal privileges. There is no durable storage on the VM. Each VM is attached to three local virtual disks (VHD):

* Drive D: contains one of several versions of Windows. Windows Azure provides various images and updates them in a timely manner. The client chooses the most appropriate version and can switch to a new version of Windows as soon as it becomes available.

* Drive E: contains the image created by the controller, with the content provided by the client, for example an application.

* Drive C: contains configuration information, paging files and other service data.

The D: and E: drives are, of course, virtual disks and are read-only (their access control lists, ACLs, deny access from client processes). However, a "loophole" is left for the operating system: these virtual disks are implemented as a VHD plus a delta file. For example, when the platform updates the D: VHD containing the operating system, the delta file of this disk is cleared and filled with the new image. The same applies to the other drives. All drives return to their original state if the role instance is moved to another physical machine.

Availability

For any business client or individual who places a service in the cloud, it is critically important that the service be as available as possible, both to consumers and to the client. The Microsoft cloud platform provides a layer of functionality that implements redundancy and thus the highest possible availability of customer data.

The most important concept behind availability on Windows Azure is replication. Let's look at the new mechanisms in more detail (and they really are new: the official announcement took place on June 7, 2012).

Locally Redundant Storage (LRS) provides highly durable and available storage within a single geographic location (region). The platform keeps three replicas of each data item in one primary geographic location, which ensures that the data can be recovered after a common failure (for example, of a disk, node or rack) without downtime for the storage account and therefore without affecting the availability and durability of the storage. Every write to the storage is performed synchronously on three replicas in three different fault domains, and only after all three operations have succeeded is a success code returned. With locally redundant storage, if the data center holding the replicas is completely disabled for any reason, Microsoft will contact the customer, using the contacts provided in the customer's subscription, and report the possible loss of data.

Geo Redundant Storage (GRS) provides a much higher degree of durability by placing replicas of the data not only in the primary geographic location but also in a secondary location in the same region, hundreds of kilometers away. All data in the blob and table storage services is geo-replicated (queues are not). With geo-redundant storage, the platform again keeps three replicas, but in each of two locations. Thus, if one data center stops working, the data remains available from the second location. As with the first redundancy option, a write in the primary geographic location must be confirmed before the system returns a success code. Once the operation is confirmed, it is replicated asynchronously to the other geographic location. Let's take a closer look at how geo-replication works.

When you create, delete or update data (and so on) in the storage, the transaction is fully replicated to three different storage nodes in three different fault and update domains in the primary geographic location, after which a success code is returned to the client. The confirmed transaction is then replicated asynchronously to the secondary location, where it is again fully replicated to three different storage nodes in different fault and update domains. Overall performance does not suffer, because geo-replication is asynchronous.
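The write path described above (three synchronous replicas in the primary location, then asynchronous replication to the secondary) can be modelled with a toy Python sketch. In-memory dictionaries stand in for storage nodes, and the class and method names are assumptions made for this illustration, not part of any Windows Azure API.

```python
from collections import deque


class Location:
    """A geographic location holding three replicas of every item."""

    def __init__(self, name: str, replica_count: int = 3):
        self.name = name
        self.replicas = [dict() for _ in range(replica_count)]

    def write(self, key, value) -> bool:
        # Synchronous: the write succeeds only after every replica has it.
        for replica in self.replicas:
            replica[key] = value
        return True


class GeoRedundantStore:
    def __init__(self):
        self.primary = Location("primary")
        self.secondary = Location("secondary")
        self.pending = deque()  # confirmed writes awaiting geo-replication

    def put(self, key, value) -> bool:
        ok = self.primary.write(key, value)  # the client waits only for this
        self.pending.append((key, value))    # shipped to the secondary later
        return ok

    def replicate_pending(self) -> None:
        # Stands in for the background (asynchronous) geo-replication step.
        while self.pending:
            key, value = self.pending.popleft()
            self.secondary.write(key, value)


STORE = GeoRedundantStore()
CONFIRMED = STORE.put("blob1", b"data")
```

Right after `put` returns, the item exists on all three primary replicas but not yet in the secondary location; it appears there once `replicate_pending` has run, which is why the client's latency does not depend on the distance between locations.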

As for geographic failover and how recovery works after serious failures: if a serious failure occurs in the primary geographic location, Microsoft naturally tries to minimize the consequences. However, if things are really bad and the data is lost, geographic failover may have to be applied: the client is notified of the disaster in the primary location, after which the corresponding DNS records (account.service.core.windows.net) are repointed from the primary location to the secondary one. While the DNS records are being switched, the service is unlikely to work, but once the switch is complete, existing blobs and tables become available at their URLs. After the failover, the secondary geographic location is promoted to primary (until the next data center failure). Immediately after the promotion, the process of creating a new secondary geographic location in the same region and replicating data to it begins.

All this is controlled by the Fabric Controller. If the guest agents (GA) installed on virtual machines stop responding, the controller moves everything to another node and reprograms the network configuration to ensure full availability.

The Windows Azure platform also has mechanisms such as update domains and fault domains, which keep a deployed service available even during operating system updates or hardware failures.

A fault domain is a physical unit, a deployment container, and is usually limited to a rack. Why a rack? Because if the domains are located in different racks, the instances end up placed so that the probability of all of them failing at once is low. In addition, a failure in one fault domain should not lead to failures in other domains. Thus, if something breaks in a fault domain, the entire domain is marked as failed and the deployment is moved to another fault domain. At the moment it is impossible to control the number of fault domains; this is the responsibility of the Fabric Controller.

Update domains are a more controllable entity. The user has a certain level of control over update domains and can perform incremental or rolling updates of one group of service instances at a time. Update domains differ from fault domains in that they are a logical entity, whereas fault domains are physical. Since an update domain groups roles logically, one application can be spread over several update domains while residing in only two fault domains. In this case, updates can be applied first in update domain No. 1, then in update domain No. 2, and so on.
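A rolling update over update domains can be sketched in a few lines of Python. The round-robin assignment of instances to domains is an assumption made for this example (the text does not say how the platform distributes instances), and the function and instance names are hypothetical.

```python
from typing import Callable, List


def assign_update_domains(instances: List[str], domain_count: int) -> List[List[str]]:
    """Spread instances over update domains (round-robin, an assumption)."""
    domains: List[List[str]] = [[] for _ in range(domain_count)]
    for index, instance in enumerate(instances):
        domains[index % domain_count].append(instance)
    return domains


def rolling_update(domains: List[List[str]],
                   update: Callable[[int, str], None]) -> None:
    """Update one domain at a time so the other domains keep serving."""
    for number, domain in enumerate(domains, start=1):
        for instance in domain:
            update(number, instance)


DOMAINS = assign_update_domains(["web_0", "web_1", "web_2", "web_3", "web_4"], 2)

LOG = []
rolling_update(DOMAINS, lambda number, instance: LOG.append((number, instance)))
```

While update domain No. 1 is being updated, the instances in update domain No. 2 are untouched, so the service keeps running with part of its capacity.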

Each data center has at least two sources of electricity, including an autonomous power supply. The environment controls are autonomous and will function as long as the systems are connected to the Internet.

Source: https://habr.com/ru/post/153205/
