WebServer as a test task

How it all began

Although my current work involves desktop applications, I recently became interested in "server technologies". Lately, all I had done was browse the Internet, read man pages and try to write something server-like for myself, since I had no clear goal. Setting yourself an interesting task is a good way to raise your skill level.

At some point, when I had finally grown bored with the routine at work, I ticked a box on one of the well-known job search sites saying that I would not mind looking at the market, in case something interesting turned up... As a result, I received a number of job offers along the lines of "Perhaps this will interest you." One of them came with a test task: write a WebServer in C++ for Linux that implements the HTTP protocol; simple...

I typed a phrase from the test task into Google and found more discussions of this not-so-short test task on the RSDN forum; the task there was word for word the same as the one in my mailbox. I did not do it as a test task. My principle is simple: if a test task is worth doing, it should take no more than 4 hours of working time. But trying out everything I had read about, and partly tested, was interesting. This became the stimulus, i.e. an interesting problem had been set. I cannot say which company the task came from, since it arrived through a recruitment agency, but that is not very important.

This article looks at the approaches and the corresponding APIs I came across on this topic. I present several WebServer implementations built with different approaches and tools, along with a comparative test of the resulting "handicrafts". The article is not aimed at "bearded" server writers, but as a review it may well be useful to people who have faced similar tasks (not only in tests). I will be glad to receive constructive comments from everyone, especially from the "bearded" server writers, since writing an article is not only a way to share my experience but, quite possibly, also a way to add to it...


API and Library Overview

After reviewing the available tools for writing servers, I settled on the *nix system APIs, the Windows API (why not look at it, even though this platform is not used for this task), and libraries such as boost.asio and libevent.

Berkeley sockets, although a universal mechanism, are not entirely portable. On some platforms a socket is closed with close, on others with closesocket; some platforms require the library to be initialized (Windows: WSAStartup / WSACleanup), others do not; in one place the socket descriptor is an int, in another it is a SOCKET; and there are other minor differences. Unless you use some cross-platform programming technique such as pImpl, the same code will often not work on different platforms. Libraries such as boost.asio, libevent and the like hide all these little things. In addition, such libraries use the more specific APIs of each platform to work with sockets as efficiently as possible, while giving the user a convenient interface that does not expose the platform.
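To make these differences concrete, here is a minimal sketch of the kind of thin wrapper such libraries provide internally, assuming only the two platforms mentioned (Windows and POSIX). The names SocketHandle, InvalidSocket, CloseSocket and SocketLibraryGuard are hypothetical, invented for this example; they are not taken from boost.asio or libevent.

```cpp
#include <cassert>
#include <stdexcept>

#ifdef _WIN32
#include <winsock2.h>
typedef SOCKET SocketHandle;                    // Windows: opaque SOCKET type
const SocketHandle InvalidSocket = INVALID_SOCKET;
inline int CloseSocket(SocketHandle s) { return closesocket(s); }
#else
#include <sys/socket.h>
#include <unistd.h>
typedef int SocketHandle;                       // POSIX: plain int descriptor
const SocketHandle InvalidSocket = -1;
inline int CloseSocket(SocketHandle s) { return close(s); }
#endif

// RAII guard: initializes Winsock on Windows, does nothing elsewhere.
// Create one instance before using any socket function.
class SocketLibraryGuard
{
public:
  SocketLibraryGuard()
  {
#ifdef _WIN32
    WSADATA data;
    if (WSAStartup(MAKEWORD(2, 2), &data) != 0)
      throw std::runtime_error("WSAStartup failed");
#endif
  }
  ~SocketLibraryGuard()
  {
#ifdef _WIN32
    WSACleanup();
#endif
  }

private:
  SocketLibraryGuard(SocketLibraryGuard const &);             // non-copyable
  SocketLibraryGuard& operator=(SocketLibraryGuard const &);
};
```

Code written against SocketHandle and CloseSocket then compiles unchanged on both platforms; boost.asio and libevent do the same kind of thing on a much larger scale.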

In a very generalized form, a server performs the following sequence of actions:

- Create socket

- Bind a socket to a network interface

- Listen on the socket bound to that network interface

- Accept incoming connections

- Respond to events occurring on sockets

All steps except the fifth are much the same everywhere and of little interest. The mechanisms for reacting to socket events, however, are numerous, and most of them are platform-specific.
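As an illustration of steps 1-4 with plain POSIX sockets, here is a minimal sketch (IPv4 only, blocking accept, error handling reduced to exceptions). The function names CreateListeningSocket and AcceptClient are made up for this example; step 5 is the subject of the rest of this section.

```cpp
#include <arpa/inet.h>
#include <cassert>
#include <netinet/in.h>
#include <stdexcept>
#include <string>
#include <sys/socket.h>
#include <unistd.h>

// Steps 1-3: create a TCP socket, bind it to a local address, listen.
// Returns the listening descriptor; throws on failure.
int CreateListeningSocket(char const *ip, unsigned short port, int backlog)
{
  int fd = socket(AF_INET, SOCK_STREAM, 0);                 // 1. create
  if (fd == -1)
    throw std::runtime_error("socket failed");

  int reuse = 1;  // allow quick restarts while old connections are in TIME_WAIT
  setsockopt(fd, SOL_SOCKET, SO_REUSEADDR, &reuse, sizeof(reuse));

  sockaddr_in addr = {};
  addr.sin_family = AF_INET;
  addr.sin_port = htons(port);
  if (inet_pton(AF_INET, ip, &addr.sin_addr) != 1 ||
      bind(fd, reinterpret_cast<sockaddr *>(&addr), sizeof(addr)) == -1 ||  // 2. bind
      listen(fd, backlog) == -1)                                            // 3. listen
  {
    close(fd);
    throw std::runtime_error(std::string("failed to bind/listen on ") + ip);
  }
  return fd;
}

// Step 4: accept one incoming connection (blocks until a client connects).
int AcceptClient(int listenFd)
{
  int client = accept(listenFd, 0, 0);
  if (client == -1)
    throw std::runtime_error("accept failed");
  return client;
}
```

Passing port 0 lets the system choose a free port, which can then be read back with getsockname.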

On Windows, the following mechanisms are available:

- select. Used mainly for compatibility with code for other platforms; it has no other advantages here.

- WSAAsyncSelect: intended for windowed applications; it delivers socket events to a window's message queue. It is not fast and is unlikely to be of interest as a mechanism for server code.

- WSAEventSelect: associates network events with an "event" object. This is a more attractive tool: if you are planning a server for no more than a few hundred simultaneously served connections, it is the best choice in terms of performance versus development speed.

- Overlapped I/O: faster than WSAEventSelect, but also more laborious to develop with.

- I/O completion ports: for high-load server applications.

There is an excellent book on network software development for Windows: "Network Programming for Microsoft Windows".

On *nix systems there is also quite a set of event selectors:

- The same select. Again, its role is compatibility with other platforms. It is also slow, because it returns when an event occurs on any of the sockets it monitors, after which you have to go through all of them to find out on which sockets the event occurred. In short, every wakeup costs a pass over the entire pool of monitored sockets.

- poll: a faster mechanism, but still not designed for monitoring a large number of sockets.

- epoll (Linux) and kqueue (FreeBSD): roughly equivalent mechanisms, although ardent FreeBSD fans on some forums insist that kqueue is much more powerful. We will not kindle a holy war... These mechanisms can be considered essential for writing high-load server applications on *nix systems. Briefly, their principle of operation and their advantage: they return information only about the sockets on which something actually happened, so there is no need to go through every socket to find out what happened where. They are also designed for a much larger number of simultaneously served connections.
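The difference between select and epoll described above shows up in a minimal Linux-only sketch that asks both mechanisms which of several descriptors are readable. The helper names ReadyWithSelect, ReadyWithEpoll and AddToEpoll are hypothetical; the sketch uses level-triggered epoll and watches only for readability.

```cpp
#include <cassert>
#include <cstddef>
#include <sys/epoll.h>
#include <sys/select.h>
#include <unistd.h>
#include <vector>

// select: the kernel only reports that something happened; we must scan every
// monitored descriptor with FD_ISSET to find the ready ones (fds < FD_SETSIZE).
std::vector<int> ReadyWithSelect(std::vector<int> const &fds, int timeoutMs)
{
  fd_set readSet;
  FD_ZERO(&readSet);
  int maxFd = -1;
  for (std::size_t i = 0; i < fds.size(); ++i)
  {
    FD_SET(fds[i], &readSet);
    if (fds[i] > maxFd)
      maxFd = fds[i];
  }
  timeval tv;
  tv.tv_sec = timeoutMs / 1000;
  tv.tv_usec = (timeoutMs % 1000) * 1000;

  std::vector<int> ready;
  if (select(maxFd + 1, &readSet, 0, 0, &tv) <= 0)
    return ready;
  for (std::size_t i = 0; i < fds.size(); ++i)   // a full pass over the pool
    if (FD_ISSET(fds[i], &readSet))
      ready.push_back(fds[i]);
  return ready;
}

// epoll: descriptors are registered once with epoll_ctl...
bool AddToEpoll(int epollFd, int fd)
{
  epoll_event ev = {};
  ev.events = EPOLLIN;
  ev.data.fd = fd;
  return epoll_ctl(epollFd, EPOLL_CTL_ADD, fd, &ev) == 0;
}

// ...and epoll_wait fills the array only with descriptors that have events,
// so no scan over the whole pool is needed. maxEvents must be > 0.
std::vector<int> ReadyWithEpoll(int epollFd, int maxEvents, int timeoutMs)
{
  std::vector<epoll_event> events(maxEvents);
  int n = epoll_wait(epollFd, &events[0], maxEvents, timeoutMs);
  std::vector<int> ready;
  for (int i = 0; i < n; ++i)                    // n = number of ready descriptors
    ready.push_back(events[i].data.fd);
  return ready;
}
```

With two pipes, only one of which has data, both functions report only that pipe's read end; the difference lies in how much work it takes to find out.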

Besides the event-waiting functions, there are some small but very useful facilities for working with descriptors:

- sendfile (Linux) and TransmitFile (Windows) take a pair of descriptors: where to read the data from and where to send it. This is very useful in HTTP servers that need to transfer files, since it eliminates buffer allocation and read/write calls, which improves performance.

- aio lets you hand off part of the work to the operating system by performing asynchronous operations on a file descriptor. For example, you tell the system: here is a buffer, write it to this file descriptor and signal me when you are done (reading works similarly).

- Disabling Nagle's algorithm (the TCP_NODELAY option) is useful for applications that need to send data in small portions without buffering delays, but that is not always what you want. In an application such as an HTTP server it is better, on the contrary, to tell the system to buffer outgoing data and send full TCP segments of useful data (on Linux, the TCP_CORK socket option serves this purpose).

- And, of course, non-blocking sockets. No comment needed...

- There are also functions such as writev (*nix) (and similar WSA functions on Windows) that send several buffers at once. This is useful when you need to send an HTTP header together with the data attached to it while saving on the number of system calls.
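A minimal Linux-only sketch of several of these facilities follows: switching a descriptor to non-blocking mode with fcntl, toggling TCP_CORK, sending a header and a body with a single writev call, and handing a file range to sendfile. The helper names are hypothetical, and SendHeaderAndBody only reports partial writes rather than retrying them.

```cpp
#include <cassert>
#include <cerrno>
#include <cstddef>
#include <fcntl.h>
#include <netinet/in.h>
#include <netinet/tcp.h>
#include <string>
#include <sys/sendfile.h>
#include <sys/socket.h>
#include <sys/uio.h>
#include <unistd.h>

// Non-blocking mode: read/write return -1 with errno == EAGAIN instead of
// blocking when no data (or no buffer space) is available.
bool SetNonBlocking(int fd)
{
  int flags = fcntl(fd, F_GETFL, 0);
  return flags != -1 && fcntl(fd, F_SETFL, flags | O_NONBLOCK) != -1;
}

// While TCP_CORK is on, the kernel holds back partial segments; turning it
// off flushes whatever is queued. Valid only on TCP sockets.
bool SetCork(int tcpFd, bool on)
{
  int value = on ? 1 : 0;
  return setsockopt(tcpFd, IPPROTO_TCP, TCP_CORK, &value, sizeof(value)) == 0;
}

// Header and body go out in one system call. The result may be smaller than
// header.size() + bodySize; a real server has to send the rest later.
ssize_t SendHeaderAndBody(int fd, std::string const &header,
                          void const *body, std::size_t bodySize)
{
  iovec parts[2];
  parts[0].iov_base = const_cast<char *>(header.data());
  parts[0].iov_len = header.size();
  parts[1].iov_base = const_cast<void *>(body);
  parts[1].iov_len = bodySize;
  return writev(fd, parts, 2);
}

// The kernel copies [offset, offset + count) of fileFd straight to the
// socket, with no user-space buffer and no read/write calls.
bool SendFileRange(int sockFd, int fileFd, off_t offset, std::size_t count)
{
  while (count > 0)
  {
    ssize_t sent = sendfile(sockFd, fileFd, &offset, count);  // advances offset
    if (sent == -1 && errno == EINTR)
      continue;
    if (sent <= 0)
      return false;  // error, end of file, or EAGAIN on a non-blocking socket
    count -= static_cast<std::size_t>(sent);
  }
  return true;
}
```

In an HTTP server these combine naturally: cork the socket, send the header (with write or writev), send the file with sendfile, then uncork.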

As for the libraries, it is better to let the code speak for itself, which is done below with boost.asio and libevent examples. boost.asio greatly simplifies the development of network applications, and libevent is a server classic.

Implementation on epoll

Whichever mechanism you choose for reacting to network events (epoll, poll or select), there are still many other nuances.

One of the very first questions in implementing a multi-threaded server is how many threads to use. Most people who once had to quickly put together their own "socket server" for training or semi-production purposes chose the "one connection, one thread" strategy. This approach has both pluses and minuses. The biggest plus is ease of development. The minuses are many: a large amount of system resources is consumed, and a lot of synchronization is needed (code that synchronizes something with something). For an HTTP server, though, this approach is not bad in terms of synchronization, since sessions hardly overlap. Nevertheless, despite its simplicity, I did not consider this strategy for my implementation. There are various recommendations for the optimal number of threads: the number of processors / cores in the system, or that number multiplied by some coefficient. In the proposed implementation, the number of worker threads is an optional parameter that the user sets when starting the server. For myself, I decided that the number of worker threads equals the number of processors / cores multiplied by two.
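The thread-count rule just described (the user's value if given, otherwise the number of cores multiplied by two) could be sketched in C++11 as follows. ChooseWorkerCount is a hypothetical name, and treating 0 as "not set" is an assumption of this example. Note that std::thread::hardware_concurrency may itself return 0 when the core count is unknown.

```cpp
#include <cassert>
#include <thread>

// Number of worker threads: the value given by the user at startup if any
// (0 means "not set"), otherwise the number of cores multiplied by two.
unsigned ChooseWorkerCount(unsigned requested)
{
  if (requested > 0)
    return requested;
  unsigned cores = std::thread::hardware_concurrency();  // 0 if unknown
  if (cores == 0)
    cores = 1;
  return cores * 2;
}
```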

In this context, a worker thread is a thread that processes user requests. In addition to the worker threads, two more are involved: the listening thread and the main thread. The listening thread listens on the server socket, accepts incoming connections and places them in a queue for the worker threads. The main thread starts the server and waits for some action from the user to stop it.

The second question that interested me in this example was in which threads, and how, to handle network events when using epoll. My first idea was to react to all events monitored by epoll in a single thread and to process them in other (worker) threads, passing them along through a queue. That is, one thread tracked events on the listening socket, data-arrival events on accepted connections, and connection-closing events. It received an event, put it into the queue and signaled the worker threads; the workers called accept to accept new connections, added the sockets to the epoll set, and performed read, write and close on the connections. This design was wrong: while one event is being processed, say, data is being read from a socket, a close event may already have arrived for that socket. The read will of course fail, but the cleanup of all resources related to the connection does not start right away, only when the close event is taken from the queue. In my implementation, many socket-close events were simply lost. The implementation became more complicated, the number of synchronization points grew, and under strange conditions there were crashes. The crashes had a different cause. Each socket in the epoll_event structure carried, as user data, a pointer to the session object responsible for all work with the client until the connection was closed. Because the order of event processing had become complicated, the object attached as user data could already have been deleted (for example, when the session was closed not by an external event but by the session's own logic) while an event for it was still in the queue, and that event was then handled with a dangling pointer.
Having gained some experience by "stepping on rakes" with the first idea that came to mind, I adopted a different strategy. The main listening thread uses epoll to react only to events on the listening socket: it accepts incoming connections and, if there are more of them than the waiting queue allows, closes them; otherwise it places them into a queue for processing. The worker threads read this queue and add each socket to the epoll set that they themselves monitor. So each worker thread works with its own epoll descriptor, and everything happens within one thread: adding to epoll, reacting to data-arrival events, read/write, and close (removal from epoll happens automatically at the system level when the descriptor is closed). As a result, there is only one synchronization primitive, which protects the queue of incoming connections: on one side, only the listening thread writes to this queue; on the other, the worker threads take accepted connections from it. One problem less. What remains is to stop attaching the session object pointer as user data in the epoll structure. The solution is an associative array whose key is the socket descriptor and whose value is the session object. This makes it possible to work with sessions not only when an event arrives and the user data can be taken from the epoll event, but also whenever some logic requires it, for example, to close connections on timeout (the whole pool of connections is available).
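The two data structures this design relies on can be sketched as follows, assuming C++11 and plain int descriptors. The first is a bounded queue guarded by a single mutex, the only synchronization point between the listening thread and the workers. The second is a per-worker map from descriptor to session data, which allows connections to be closed on timeout. AcceptedQueue, SessionInfo and WorkerSessions are hypothetical names, not the classes from the author's code.

```cpp
#include <cassert>
#include <cstddef>
#include <ctime>
#include <map>
#include <mutex>
#include <queue>
#include <unistd.h>

// Shared by the listening thread (producer) and the worker threads
// (consumers); the mutex is the only synchronization primitive.
class AcceptedQueue
{
public:
  explicit AcceptedQueue(std::size_t capacity) : Capacity(capacity) {}

  // Listening thread: returns false when the queue is full, in which case
  // the caller should close the connection.
  bool Push(int fd)
  {
    std::lock_guard<std::mutex> lock(Mutex);
    if (Items.size() >= Capacity)
      return false;
    Items.push(fd);
    return true;
  }

  // Worker thread (e.g. from its idle handler): non-blocking take.
  bool TryPop(int *fd)
  {
    std::lock_guard<std::mutex> lock(Mutex);
    if (Items.empty())
      return false;
    *fd = Items.front();
    Items.pop();
    return true;
  }

private:
  std::size_t Capacity;
  std::mutex Mutex;
  std::queue<int> Items;
};

struct SessionInfo
{
  std::time_t LastAction;  // a real server would also keep the session object here
};

// Owned by exactly one worker thread, so it needs no locking.
class WorkerSessions
{
public:
  void Add(int fd, std::time_t now) { Sessions[fd].LastAction = now; }

  // Close every session idle for more than maxIdle seconds. Closing the
  // descriptor also drops it from the worker's epoll set, provided no
  // duplicate of the descriptor is still open.
  std::size_t CloseExpired(std::time_t now, std::time_t maxIdle)
  {
    std::size_t closed = 0;
    for (std::map<int, SessionInfo>::iterator it = Sessions.begin(); it != Sessions.end(); )
    {
      if (now - it->second.LastAction > maxIdle)
      {
        close(it->first);
        it = Sessions.erase(it);
        ++closed;
      }
      else
      {
        ++it;
      }
    }
    return closed;
  }

  std::size_t Size() const { return Sessions.size(); }

private:
  std::map<int, SessionInfo> Sessions;
};
```

Because the map is keyed by descriptor and never stores raw pointers in epoll user data, a late event for an already closed descriptor simply finds nothing in the map instead of dereferencing a deleted object.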

The first version, written entirely in a single file in the style of a C#/Java developer (without separating declarations and definitions), came to more than 1800 lines of code. That is too much for a test task, even though the HTTP implementation was minimal: the bare minimum for handling GET/HEAD, with nothing else and only minimal processing of HTTP header parameters. But that is not the point. Let me stress once again that the test task was just a "kick" to try something out. What interested me most in this solution was not the HTTP protocol but the implementation of a multi-threaded server and of connection and session management (a session can be understood as a logical data structure, together with its processing algorithm, associated with a connection).

After splitting up this monstrous file and tidying up the implementation in places, I ended up with the following:

```cpp
class TCPServer
  : private Common::NonCopyable
{
public:
  TCPServer(InetAddress const &locAddr, int backlog, int maxThreadsCount,
            int maxConnectionsCount, UserSessionCreator sessionCreator);

private:
  typedef std::tr1::shared_ptr<Common::IDisposable> IDisposablePtr;
  typedef std::vector<IDisposablePtr> IDisposablePool;

  Private::ClientItemQueuePtr AcceptedItems;
  IDisposablePool Threads;
};
```

This is perhaps the shortest server class I have ever written. It only creates a few threads (the listener and several workers) and holds them.

The implementation is not large either:

```cpp
TCPServer::TCPServer(InetAddress const &locAddr, int backlog, int maxThreadsCount,
                     int maxConnectionsCount, UserSessionCreator sessionCreator)
  : AcceptedItems(new Private::ClientItemQueue(backlog))
{
  // Split the connection limit evenly between the workers;
  // the last worker also takes the remainder.
  int EventsCount = maxConnectionsCount / maxThreadsCount;
  for (int i = 0 ; i < maxThreadsCount ; ++i)
  {
    Threads.push_back(IDisposablePtr(new Private::WorkerThread(
        EventsCount + (i < maxThreadsCount - 1 ? 0 : maxConnectionsCount % maxThreadsCount),
        AcceptedItems
      )));
  }
  Threads.push_back(IDisposablePtr(new Private::ListenThread(locAddr, backlog,
                                                             AcceptedItems, sessionCreator)));
}
```

Both the listening thread class

```cpp
class ListenThread
  : private TCPServerSocket
  , public Common::IDisposable
{
public:
  ListenThread(InetAddress const &locAddr, int backlog,
               ClientItemQueuePtr acceptedClients, UserSessionCreator sessionCreator)
    : TCPServerSocket(locAddr, backlog)
    , AcceptedClients(acceptedClients)
    , SessionCreator(sessionCreator)
    , Selector(1, WaitTimeout, std::tr1::bind(&ListenThread::OnSelect, this,
                                              std::tr1::placeholders::_1,
                                              std::tr1::placeholders::_2))
  {
    Selector.AddSocket(GetHandle(), Network::ISelector::stRead);
  }

private:
  enum { WaitTimeout = 100 };

  ClientItemQueuePtr AcceptedClients;
  UserSessionCreator SessionCreator;
  SelectorThread Selector;

  void OnSelect(SocketHandle handle, Network::ISelector::SelectType selectType)
  {
    // Accept the incoming connection; close it if the queue of waiting
    // connections is full, otherwise put it into the queue (body omitted)
  }
};
```

and the worker thread class

```cpp
class WorkerThread
  : private Common::NonCopyable
  , public Common::IDisposable
{
public:
  WorkerThread(int maxEventsCount, ClientItemQueuePtr acceptedClients)
    : MaxConnections(maxEventsCount)
    , AcceptedClients(acceptedClients)
    , Selector(maxEventsCount, WaitTimeout,
               std::tr1::bind(&WorkerThread::OnSelect, this,
                              std::tr1::placeholders::_1, std::tr1::placeholders::_2),
               SelectorThread::ThreadFunctionPtr(new SelectorThread::ThreadFunction(
                   std::tr1::bind(&WorkerThread::OnIdle, this))))
  {
  }

private:
  enum { WaitTimeout = 100 };

  typedef std::map<SocketHandle, ClientItemPtr> ClientPool;

  unsigned MaxConnections;
  ClientItemQueuePtr AcceptedClients;
  ClientPool Clients;
  SelectorThread Selector;

  void OnSelect(SocketHandle handle, Network::ISelector::SelectType selectType)
  {
    // Handle an event on a client socket: read data, send data
    // or close the connection (body omitted)
  }
  void OnIdle()
  {
    // Take new connections from the queue and add them to this
    // thread's epoll set (body omitted)
  }
};
```

use the event thread class SelectorThread:

```cpp
class SelectorThread
  : private EPollSelector
  , private System::ThreadLoop
{
public:
  using EPollSelector::AddSocket;

  typedef System::Thread::ThreadFunction ThreadFunction;
  typedef std::tr1::shared_ptr<ThreadFunction> ThreadFunctionPtr;

  SelectorThread(int maxEventsCount, unsigned waitTimeout,
                 ISelector::SelectFunction onSelectFunc,
                 ThreadFunctionPtr idleFunc = ThreadFunctionPtr());
  virtual ~SelectorThread();

private:
  void SelectItems(ISelector::SelectFunction &func, unsigned waitTimeout,
                   ThreadFunctionPtr idleFunc);
};
```

This thread uses EPollSelector to react to events occurring on the descriptors of accepted connections:

```cpp
class EPollSelector
  : private Common::NonCopyable
  , public ISelector
{
public:
  EPollSelector(int maxSocketCount);
  ~EPollSelector();

  virtual void AddSocket(SocketHandle handle, int selectType);
  virtual void Select(SelectFunction *function, unsigned timeout);

private:
  typedef std::vector<epoll_event> EventPool;

  EventPool Events;
  int EPoll;

  static int GetSelectFlags(int selectType);
};
```

If you look at the server class above, you can see that its last parameter is a functor that creates user sessions. A user session is an implementation of the following interface:

```cpp
struct IUserSession
{
  virtual ~IUserSession() {}
  virtual void Init(IConnectionCtrl *ctrl) = 0;
  virtual void Done() = 0;
  virtual unsigned GetMaxBufSizeForRead() const = 0;
  virtual bool IsExpiredSession(std::time_t lastActionTime) const = 0;
  virtual void OnRecvData(void const *buf, unsigned bytes) = 0;
  virtual void OnIdle() = 0;
};
```

Different protocols can be implemented through different implementations of this interface. Init and Done are called at the start and at the end of a session, respectively. GetMaxBufSizeForRead must return the maximum size of the buffer allocated for read operations. The data that has been read arrives in OnRecvData. For a session to report that it has timed out, IsExpiredSession must be implemented accordingly. OnIdle is called between all other actions; here the session implementation can do some background work and mark itself for closing through the interface

```cpp
struct IConnectionCtrl
{
  virtual ~IConnectionCtrl() {}
  virtual void MarkMeForClose() = 0;
  virtual void UpdateSessionTime() = 0;
  virtual bool SendData(void const *buf, unsigned *bytes) = 0;
  virtual bool SendFile(int fileHandle, unsigned offset, unsigned *bytes) = 0;
  virtual InetAddress const& GetAddress() const = 0;
  virtual SocketTuner GetSocketTuner() const = 0;
};
```

The IConnectionCtrl interface is passed to the session so that it can send data to the network (SendData and SendFile), mark itself for closing (MarkMeForClose), report that it is still alive (UpdateSessionTime, which updates the time passed to IsExpiredSession), get the address of the incoming connection (GetAddress), and obtain a SocketTuner object for tuning the socket of the current connection (GetSocketTuner).
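As an example of implementing a protocol through this interface, here is a minimal sketch of a hypothetical echo session. The two interfaces are copied from the article; InetAddress and SocketTuner are empty placeholders because their definitions are not shown. The assumption that SendData takes the byte count as an in/out parameter (requested, then actually sent) is mine, not the author's.

```cpp
#include <cassert>
#include <ctime>

// Placeholders: the article does not show these types.
struct InetAddress {};
struct SocketTuner {};

// The two interfaces, as given in the article.
struct IConnectionCtrl
{
  virtual ~IConnectionCtrl() {}
  virtual void MarkMeForClose() = 0;
  virtual void UpdateSessionTime() = 0;
  virtual bool SendData(void const *buf, unsigned *bytes) = 0;
  virtual bool SendFile(int fileHandle, unsigned offset, unsigned *bytes) = 0;
  virtual InetAddress const& GetAddress() const = 0;
  virtual SocketTuner GetSocketTuner() const = 0;
};

struct IUserSession
{
  virtual ~IUserSession() {}
  virtual void Init(IConnectionCtrl *ctrl) = 0;
  virtual void Done() = 0;
  virtual unsigned GetMaxBufSizeForRead() const = 0;
  virtual bool IsExpiredSession(std::time_t lastActionTime) const = 0;
  virtual void OnRecvData(void const *buf, unsigned bytes) = 0;
  virtual void OnIdle() = 0;
};

// Hypothetical echo protocol: send every received chunk back and expire
// after MaxIdleSeconds without activity.
class EchoUserSession : public IUserSession
{
public:
  EchoUserSession() : Ctrl(0) {}

  void Init(IConnectionCtrl *ctrl) override { Ctrl = ctrl; }
  void Done() override { Ctrl = 0; }
  unsigned GetMaxBufSizeForRead() const override { return 4096; }

  bool IsExpiredSession(std::time_t lastActionTime) const override
  {
    return std::time(0) - lastActionTime > MaxIdleSeconds;
  }

  void OnRecvData(void const *buf, unsigned bytes) override
  {
    Ctrl->UpdateSessionTime();   // the client is alive
    unsigned sent = bytes;       // assumed in/out: requested, then actually sent
    if (!Ctrl->SendData(buf, &sent) || sent != bytes)
      Ctrl->MarkMeForClose();    // this sketch keeps no buffer for unsent data
  }

  void OnIdle() override {}      // no background work needed

private:
  enum { MaxIdleSeconds = 30 };
  IConnectionCtrl *Ctrl;
};
```

The server side stays protocol-agnostic: swapping EchoUserSession for an HTTP session only changes the functor passed as the last TCPServer parameter.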

The HTTP protocol is implemented in the HttpUserSession class. As I said above, the HTTP implementation was neither the most interesting part nor a priority for me, so I did not think much about it; I simply wrote it as it came out :)

Implementation on libevent

The libevent implementation is my favorite. This library lets you organize asynchronous I/O and hides many subtleties of network programming from the developer. It lets you work with raw data by attaching callback functions for receiving and sending data and for other events, and it sends data asynchronously. In addition to low-level data handling, it offers higher-level protocols: libevent has a built-in HTTP server, which frees you from parsing request headers and building response headers yourself. RPC can also be implemented with the library's facilities, and there are other features.

If you build an HTTP server on top of the built-in one, the sequence of steps is roughly as follows:

1. Create a base object by calling event_base_new (for simpler cases there is also a simplified event_init). The matching function that frees the object is event_base_free.

2. Create an HTTP engine object by calling evhttp_new. The matching function that frees it is evhttp_free.

3. Specify the methods the server will support by passing a combination of flags to evhttp_set_allowed_methods. For example, to support only the GET method: evhttp_set_allowed_methods(Http, EVHTTP_REQ_GET), where Http is the object created in step 2.

4. Set a callback function to handle incoming requests by calling evhttp_set_gencb.

5. Associate a listening socket with the HTTP server object by calling evhttp_accept_socket. The listening socket can be created and configured with the usual socket/bind/listen.

6. Start the event loop by calling event_base_loop. There is also a simplified version, event_base_dispatch. event_base_loop (here with the EVLOOP_NONBLOCK flag) has to be called in a loop. It either does something useful inside the library, which is where the calls to the installed callback functions come from, or, when there is nothing to do, returns control so that something else useful can be done at that moment; it also makes it easier to control the lifetime of the message-processing loop.

7. In the request handler, you can send text data by calling evbuffer_add_printf, or hand a file descriptor to the library with evbuffer_add_file and let it send the file. These functions work with a buffer object that you can create yourself (remembering to free it in time) or take from the request field evhttp_request::output_buffer. The nice part is that these functions are asynchronous: in the file example, you give evbuffer_add_file the file descriptor and it returns control at once; once the file has been sent, the library closes the descriptor itself.

Everything works very nicely in a single thread, but, as it turned out, making a multi-threaded server is not difficult either. If you use boost::thread, your own cross-platform class that wraps a thread, or something similar, you get a fully cross-platform solution, since libevent itself is cross-platform. In my implementation I use a thin wrapper over Linux threads, but that is not very important.

For each worker thread, the main thread must create a separate set of objects by performing steps 1-5. The worker threads only need to spin the message-processing loop (step 6). Step 7 is performed in each worker thread. To summarize: we create one listening socket and hand its processing over to several worker threads.

So, given that I already had ready-made primitives for threads, files and command-line parsing, my implementation came out as an HTTP server supporting only the GET method in about 200 lines of C#/Java-style code. Writing that much less code while keeping full control over what is happening cannot but please. Subjectively, the resulting server also runs a little faster, but let's look at the tests at the end...

HTTP server implementation on libevent

```cpp
#include <event.h>
#include <evhttp.h>
#include <unistd.h>
#include <string.h>
#include <signal.h>

#include <vector>
#include <iostream>
#include <tr1/functional>
#include <tr1/memory>

#include "tcp_server_socket.h"
#include "inet_address_v4.h"
#include "thread.h"
#include "command_line.h"
#include "logger.h"
#include "file_holder.h"

namespace Network
{
  namespace Private
  {
    DECLARE_RUNTIME_EXCEPTION(EventBaseHolder)

    // Owns the event_base (step 1).
    class EventBaseHolder
      : private Common::NonCopyable
    {
    public:
      EventBaseHolder()
        : EventBase(event_base_new())
      {
        if (!EventBase)
          throw EventBaseHolderException("Failed to create new event_base");
      }
      ~EventBaseHolder()
      {
        event_base_free(EventBase);
      }
      event_base* GetBase() const
      {
        return EventBase;
      }

    private:
      event_base *EventBase;
    };

    DECLARE_RUNTIME_EXCEPTION(HttpEventHolder)

    // Owns the evhttp object attached to the shared listening socket (steps 2-5).
    class HttpEventHolder
      : public EventBaseHolder
    {
    public:
      typedef std::tr1::function<void (char const *, evbuffer *)> RequestHandler;

      HttpEventHolder(SocketHandle sock, RequestHandler const &handler)
        : Handler(handler)
        , Http(evhttp_new(GetBase()))
      {
        evhttp_set_allowed_methods(Http, EVHTTP_REQ_GET);
        evhttp_set_gencb(Http, &HttpEventHolder::RawHttpRequestHandler, this);
        if (evhttp_accept_socket(Http, sock) == -1)
          throw HttpEventHolderException("Failed to accept socket for http");
      }
      ~HttpEventHolder()
      {
        evhttp_free(Http);
      }

    private:
      RequestHandler Handler;
      evhttp *Http;

      static void RawHttpRequestHandler(evhttp_request *request, void *prm)
      {
        reinterpret_cast<HttpEventHolder *>(prm)->ProcessRequest(request);
      }

      void ProcessRequest(evhttp_request *request)
      {
        try
        {
          Handler(request->uri, request->output_buffer);
          evhttp_send_reply(request, HTTP_OK, "OK", request->output_buffer);
        }
        catch (std::exception const &e)
        {
          evhttp_send_reply(request, HTTP_INTERNAL,
                            e.what() ? e.what() : "Internal server error.",
                            request->output_buffer);
        }
      }
    };

    // One worker: its own event_base/evhttp pair plus a thread
    // that spins the event loop (step 6).
    class ServerThread
      : private HttpEventHolder
      , private System::Thread
    {
    public:
      ServerThread(SocketHandle sock, std::string const &rootDir, std::string const &defaultPage)
        : HttpEventHolder(sock, std::tr1::bind(&ServerThread::OnRequest, this,
                                               std::tr1::placeholders::_1,
                                               std::tr1::placeholders::_2))
        , Thread(std::tr1::bind(&ServerThread::DispatchProc, this))
        , RootDir(rootDir)
        , DefaultPage(defaultPage)
      {
      }
      ~ServerThread()
      {
        IsRun = false;
      }

    private:
      enum { WaitTimeout = 10000 };  // microseconds between loop iterations

      bool volatile IsRun;
      std::string RootDir;
      std::string DefaultPage;

      void DispatchProc()
      {
        IsRun = true;
        while (IsRun)
        {
          if (event_base_loop(GetBase(), EVLOOP_NONBLOCK))
          {
            Common::Log::GetLogInst() << "Failed to run dispatch events";
            break;
          }
          usleep(WaitTimeout);
        }
      }

      // Step 7: hand the requested file over to libevent, which sends it.
      void OnRequest(char const *resource, evbuffer *outBuffer)
      {
        std::string FileName;
        GetFullFileName(resource, &FileName);
        try
        {
          System::FileHolder File(FileName);
          if (!File.GetSize())
          {
            evbuffer_add_printf(outBuffer, "Empty file");
            return;
          }
          evbuffer_add_file(outBuffer, File.GetHandle(), 0, File.GetSize());
          File.Detach();  // libevent now owns and will close the descriptor
        }
        catch (System::FileHolderException const &)
        {
          evbuffer_add_printf(outBuffer, "File not found");
        }
      }

      void GetFullFileName(char const *resource, std::string *fileName) const
      {
        fileName->append(RootDir);
        if (!resource || !strcmp(resource, "/"))
        {
          fileName->append("/");
          fileName->append(DefaultPage);
        }
        else
        {
          fileName->append(resource);
        }
      }
    };
  }

  class HTTPServer
    : private TCPServerSocket
  {
  public:
    HTTPServer(InetAddress const &locAddr, int backlog, int maxThreadsCount,
               std::string const &rootDir, std::string const &defaultPage)
      : TCPServerSocket(locAddr, backlog)
    {
      // One listening socket shared by all worker threads.
      for (int i = 0 ; i < maxThreadsCount ; ++i)
      {
        ServerThreads.push_back(ServerThreadPtr(
            new Private::ServerThread(GetHandle(), rootDir, defaultPage)));
      }
    }

  private:
    typedef std::tr1::shared_ptr<Private::ServerThread> ServerThreadPtr;
    typedef std::vector<ServerThreadPtr> ServerThreadPool;

    ServerThreadPool ServerThreads;
  };
}

int main(int argc, char const **argv)
{
  if (signal(SIGPIPE, SIG_IGN) == SIG_ERR)
  {
    std::cerr << "Failed to call signal(SIGPIPE, SIG_IGN)" << std::endl;
    return 0;
  }
  try
  {
    char const ServerAddr[] = "Server";
    char const ServerPort[] = "Port";
    char const MaxBacklog[] = "Backlog";
    char const ThreadsCount[] = "Threads";
    char const RootDir[] = "Root";
    char const DefaultPage[] = "DefaultPage";

    // Example command line:
    // Server:127.0.0.1 Port:5555 Backlog:10 Threads:4 Root:./ DefaultPage:index.html
    Common::CommandLine CmdLine(argc, argv);

    Network::HTTPServer Srv(
        Network::InetAddressV4::CreateFromString(
            CmdLine.GetStrParameter(ServerAddr),
            CmdLine.GetParameter<unsigned short>(ServerPort)),
        CmdLine.GetParameter<unsigned>(MaxBacklog),
        CmdLine.GetParameter<unsigned>(ThreadsCount),
        CmdLine.GetStrParameter(RootDir),
        CmdLine.GetStrParameter(DefaultPage)
      );

    std::cin.get();  // run until Enter is pressed
  }
  catch (std::exception const &e)
  {
    Common::Log::GetLogInst() << e.what();
  }
  return 0;
}
```

Implementation on boost.asio

boost.asio is part of boost; it can greatly reduce the effort of developing network applications, including cross-platform ones. The library hides a lot of routine from the developer.

I did not write an HTTP server on boost myself; I took a ready-made one from the boost.asio examples, the multi-threaded HTTP server "HTTP Server 3". That example is quite suitable for testing alongside the implementations above.

So there is an HTTP server implementation for testing, but it would not hurt to look at the general principles... Unfortunately, unlike libevent, boost.asio has no support for higher-level protocols such as HTTP. In this case the library hides the TCP networking, but the HTTP implementation is left to the developer, who has to assemble and parse the protocol headers.

Below is a small example of a multi-threaded echo server with a description, since parsing and assembling HTTP headers was of less interest to me in the context of this topic. The sequence of steps for creating a multi-threaded server with boost.asio is as follows:

- Create objects of the classes boost::asio::io_service and boost::asio::ip::tcp::acceptor.

- Using boost::asio::ip::tcp::resolver and boost::asio::ip::tcp::endpoint, convert the local address to which the listening socket will be bound into the structure used by the library.

- Call bind and listen on the boost::asio::ip::tcp::acceptor object.

- Create a "Connection" class (also known as a "Session"), whose instances will be used for accepted incoming user connections.

- Set up the callback functions for accepting incoming connections and receiving data.

- Start the message loop by calling boost::asio::io_service::run.

As with the libevent example, turning a single-threaded server built with these steps into a multi-threaded one is quite simple. The only difference between the single-threaded and the multi-threaded server is that, in the multi-threaded implementation, boost::asio::io_service::run must be called in each thread.

Echo server implementation on boost.asio

```cpp
#include <boost/noncopyable.hpp>
#include <boost/asio.hpp>
#include <boost/shared_ptr.hpp>
#include <boost/thread.hpp>
#include <boost/make_shared.hpp>
#include <boost/bind.hpp>
#include <boost/enable_shared_from_this.hpp>
#include <boost/array.hpp>

#include <cstddef>
#include <iostream>
#include <string>
#include <vector>

namespace Network
{
  namespace Private
  {
    class Connection
      : private boost::noncopyable
      , public boost::enable_shared_from_this<Connection>
    {
    public:
      Connection(boost::asio::io_service &ioService)
        : Strand(ioService)
        , Socket(ioService)
      {
      }

      boost::asio::ip::tcp::socket& GetSocket()
      {
        return Socket;
      }

      void Start()
      {
        Socket.async_read_some(boost::asio::buffer(Buffer),
          Strand.wrap(boost::bind(&Connection::HandleRead, shared_from_this(),
                                  boost::asio::placeholders::error,
                                  boost::asio::placeholders::bytes_transferred)));
      }

      // Echo the received bytes back to the client.
      void HandleRead(boost::system::error_code const &error, std::size_t bytes)
      {
        if (error)
          return;
        std::vector<boost::asio::const_buffer> Buffers;
        Buffers.push_back(boost::asio::const_buffer(Buffer.data(), bytes));
        boost::asio::async_write(Socket, Buffers,
          Strand.wrap(boost::bind(&Connection::HandleWrite, shared_from_this(),
                                  boost::asio::placeholders::error)));
      }

      // After one echo, shut the connection down gracefully.
      void HandleWrite(boost::system::error_code const &error)
      {
        if (error)
          return;
        boost::system::error_code Code;
        Socket.shutdown(boost::asio::ip::tcp::socket::shutdown_both, Code);
      }

    private:
      boost::array<char, 4096> Buffer;
      boost::asio::io_service::strand Strand;
      boost::asio::ip::tcp::socket Socket;
    };
  }

  class EchoServer
    : private boost::noncopyable
  {
  public:
    EchoServer(std::string const& locAddr, std::string const& port, unsigned threadsCount)
      : Acceptor(IoService)
    {
      boost::asio::ip::tcp::resolver Resolver(IoService);
      boost::asio::ip::tcp::resolver::query Query(locAddr, port);
      boost::asio::ip::tcp::endpoint Endpoint = *Resolver.resolve(Query);
      Acceptor.open(Endpoint.protocol());
      Acceptor.set_option(boost::asio::ip::tcp::acceptor::reuse_address(true));
      Acceptor.bind(Endpoint);
      Acceptor.listen();

      StartAccept();

      // Multi-threading: io_service::run is called from every thread.
      for (unsigned i = 0 ; i < threadsCount ; ++i)
      {
        Threads.push_back(boost::make_shared<boost::thread>(
            boost::bind(&boost::asio::io_service::run, &IoService)));
      }
    }

    ~EchoServer()
    {
      IoService.stop();
      for (ThreadPool::iterator i = Threads.begin() ; i != Threads.end() ; ++i)
        (*i)->join();
    }

  private:
    boost::asio::io_service IoService;
    boost::asio::ip::tcp::acceptor Acceptor;

    typedef boost::shared_ptr<Private::Connection> ConnectionPtr;
    ConnectionPtr NewConnection;

    typedef boost::shared_ptr<boost::thread> ThreadPtr;
    typedef std::vector<ThreadPtr> ThreadPool;
    ThreadPool Threads;

    void StartAccept()
    {
      NewConnection = boost::make_shared<Private::Connection, boost::asio::io_service &>(IoService);
      Acceptor.async_accept(NewConnection->GetSocket(),
                            boost::bind(&EchoServer::HandleAccept, this,
                                        boost::asio::placeholders::error));
    }

    void HandleAccept(boost::system::error_code const &error)
    {
      if (!error)
        NewConnection->Start();
      StartAccept();
    }
  };
}

int main()
{
  try
  {
    Network::EchoServer Srv("127.0.0.1", "5555", 4);
    std::cin.get();  // run until Enter is pressed
  }
  catch (std::exception const &e)
  {
    std::cerr << e.what() << std::endl;
  }
  return 0;
}
```

Testing

It's time to compare the resulting “handicrafts”...

Everything was developed and tested on an ordinary laptop with 4 GB of RAM and a dual-core processor, running Ubuntu 12.04 Desktop.

First of all, I installed the benchmarking utility:

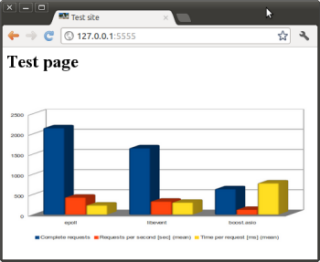

```
sudo apt-get install apache2-utils
```

and ran it like this:

```
ab -c 100 -k -r -t 5 "http://127.0.0.1:5555/test.jpg"
```

For all servers, 4 worker threads were configured; each test used 100 parallel connections, a 2496629-byte file, and a 5-second time limit. Results:

Implementation on epoll

```
Benchmarking 127.0.0.1 (be patient)
Finished 2150 requests

Server Software:        MyTestHttpServer
Server Hostname:        127.0.0.1
Server Port:            5555

Document Path:          /test.jpg
Document Length:        2496629 bytes

Concurrency Level:      100
Time taken for tests:   5.017 seconds
Complete requests:      2150
Failed requests:        0
Write errors:           0
Keep-Alive requests:    0
Total transferred:      5389312814 bytes
HTML transferred:       5388981758 bytes
Requests per second:    428.54 [#/sec] (mean)
Time per request:       233.348 [ms] (mean)
Time per request:       2.333 [ms] (mean, across all concurrent requests)
Transfer rate:          1049037.42 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0    0   0.5      0       3
Processing:    74  226  58.2    229     364
Waiting:        2  133  64.8    141     264
Total:         77  226  58.1    229     364
```


Implementation on libevent

```
Benchmarking 127.0.0.1 (be patient)
Finished 1653 requests

Server Software:
Server Hostname:        127.0.0.1
Server Port:            5555

Document Path:          /test.jpg
Document Length:        2496629 bytes

Concurrency Level:      100
Time taken for tests:   5.008 seconds
Complete requests:      1653
Failed requests:        0
Write errors:           0
Keep-Alive requests:    1653
Total transferred:      4263404830 bytes
HTML transferred:       4263207306 bytes
Requests per second:    330.05 [#/sec] (mean)
Time per request:       302.987 [ms] (mean)
Time per request:       3.030 [ms] (mean, across all concurrent requests)
Transfer rate:          831304.15 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0   53 223.3      0    1000
Processing:     3  228 275.5     62     904
Waiting:        0   11  42.5      5     639
Total:          3  280 417.9     62    1864
```


Implementation on boost.asio

```
Benchmarking 127.0.0.1 (be patient)
Finished 639 requests

Server Software:
Server Hostname:        127.0.0.1
Server Port:            5555

Document Path:          /test.jpg
Document Length:        2496629 bytes

Concurrency Level:      100
Time taken for tests:   5.001 seconds
Complete requests:      639
Failed requests:        0
Write errors:           0
Keep-Alive requests:    0
Total transferred:      1655047414 bytes
HTML transferred:       1654999464 bytes
Requests per second:    127.78 [#/sec] (mean)
Time per request:       782.584 [ms] (mean)
Time per request:       7.826 [ms] (mean, across all concurrent requests)
Transfer rate:          323205.36 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0    0   1.1      0       4
Processing:   286  724 120.0    689    1106
Waiting:       12  364 101.0    394     532
Total:        286  724 120.0    689    1106
```


The results are summarized in the table.

| | epoll | libevent | boost.asio |
|---|---|---|---|
| Complete requests | 2150 | 1653 | 639 |
| Total transferred (bytes) | 5389312814 | 4263404830 | 1655047414 |
| HTML transferred (bytes) | 5388981758 | 4263207306 | 1654999464 |
| Requests per second [#/sec] (mean) | 428.54 | 330.05 | 127.78 |
| Time per request [ms] (mean) | 233.348 | 302.987 | 782.584 |
| Transfer rate [Kbytes/sec] received | 1049037.42 | 831304.15 | 323205.36 |

There are three kinds of lies: lies, damned lies, and statistics. Still, I must admit, the results could not fail to please me. I don't think they deserve too much weight; rather, treat them as supporting information that may help when choosing a tool for developing your own server software. For more reliable results, it would be advisable to make multiple runs on server hardware, with the clients running on other machines on the network, and so on.

100 parallel requests may seem few, but they are quite enough for testing under such modest conditions. Of course, I would have liked to check the results with thousands of parallel requests, but then other factors come into play. One of them is the number of file descriptors a process may have open at the same time. Process limits can be queried and set with the getrlimit and setrlimit functions. To find out how many file descriptors a process is allowed, call getrlimit with the RLIMIT_NOFILE resource; the limits are returned in an rlimit structure (the soft limit in rlim_cur, the hard limit in rlim_max). On my operating system, the default is 1024 file descriptors per process, and 4096 is the maximum that can be set per process. …

WebServer «» , , . . , - , - , - - . , epoll . , , . « », , , C++, C, stl, .

, libevent, , , , . .

, boost . . boost.asio . «» , , .

Linux (aio) , «» .

SVN . , . But! , . « » , , — , — , — . :)

«» API .


Thanks for your attention!

Source: https://habr.com/ru/post/152345/
