Terabytes of web project files: storing and distributing them

Hello!

Recently, there has been an interesting trend - the rapid "swelling" of web projects to infinity. The data volume of many popular sites is growing faster and faster, they need to be put somewhere, while effectively backing up (it will be fun if the files on the 500T are lost :-)), and of course distribute to customers quickly, so that everyone can download, download, swing ... at high speed.

For a system administrator, the task of even a rare, daily backup of such a volume of files evokes thoughts of suicide, and the web project manager wakes up in a cold sweat at the thought of impending data center prevention for 6 hours (to transfer files from one data center to another you need to load the trunk a couple of times car winchesters :-)).

')

Colleagues with a smart look advise you to purchase one of the solutions from NetApp , but it’s a pity that the project’s budget is 1,000 times smaller, it’s a start-up in general ... what are we going to do?

In the article I want to analyze frequent cases of cheap and expensive solutions to this problem - from simple to complex. At the end of the article I will tell you how the problem is solved in our flagship product - it is always useful to compare opensource solutions with commercial ones, brains need gymnastics.

If you have ever attended a HighLoad conference, then you probably know that at the entrance it is necessary to answer the question - why put nginx in front of apache? Otherwise, the wardrobe does not go ;-)

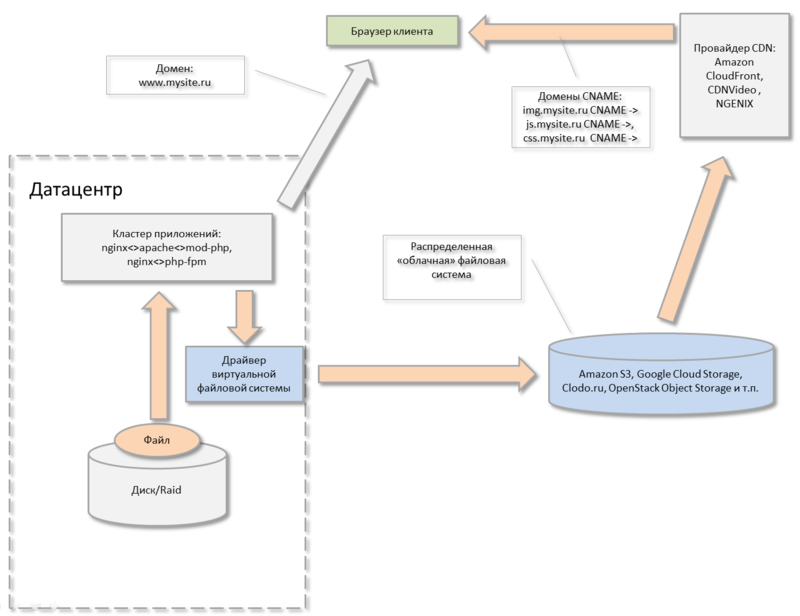

That's right, nginx or a similar reverse proxy allows you to efficiently distribute files, especially over slow channels, seriously reducing server load and improving overall web application performance: nginx distributes a bunch of files, apache or php-fpm processes requests to the application server.

Such web applications live well until the file size increases to, say, dozens of gigabytes — when several hundred clients start downloading files from the server at the same time, and there is not enough memory for caching files in RAM — the disk and then the RAID — will simply die.

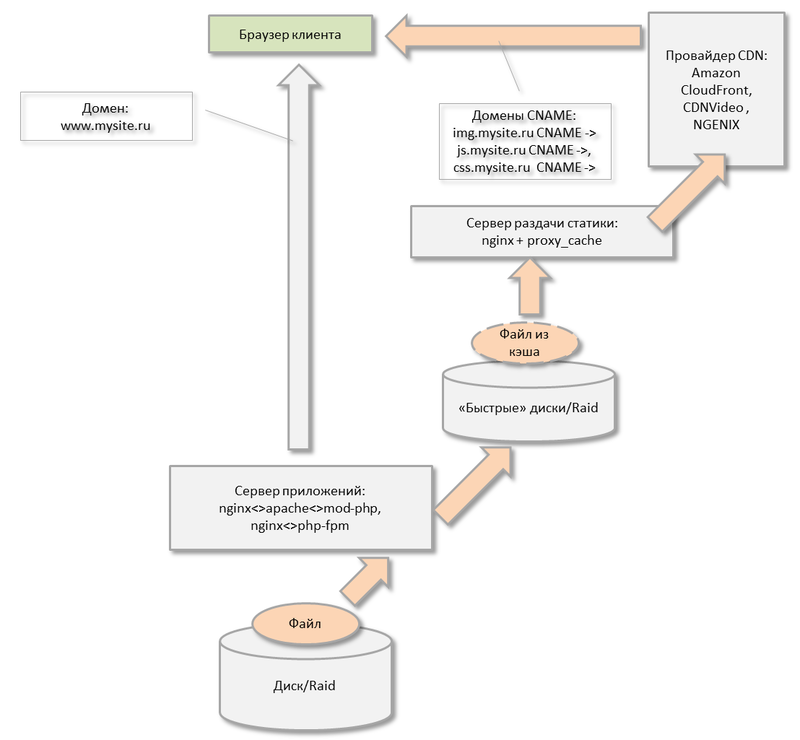

Approximately at this stage, they often start fictitiously at first, then physically distribute static files from another, preferably not giving up a lot of unnecessary cookies, domain. And this is correct - we all know that the client browser has a limit on the number of connections in one domain of the site and when the site suddenly begins to surrender itself from two or more domains - the speed of its loading into the client browser increases significantly.

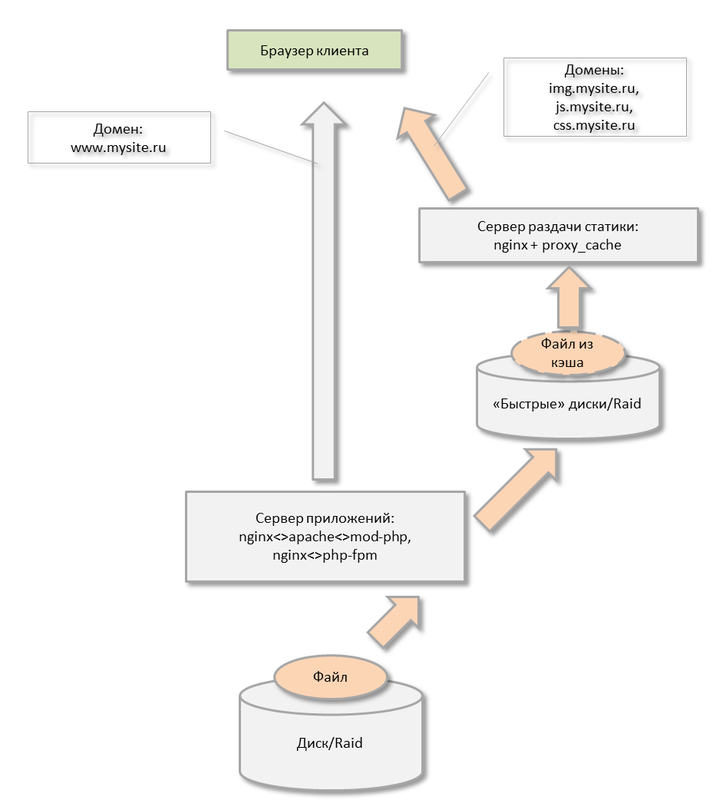

Do something like:

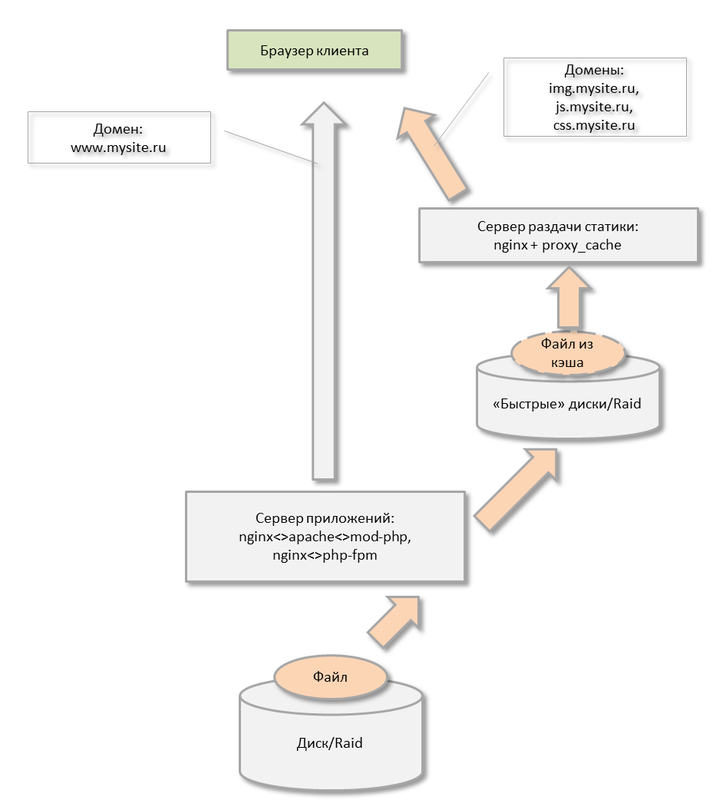

In order to more efficiently distribute static from different domains, it is submitted to a separate server (s) of statics:

It is useful to use nginx caching mode and “fast” RAID on this server (so that more clients are required for setting the disk “on its knees”).

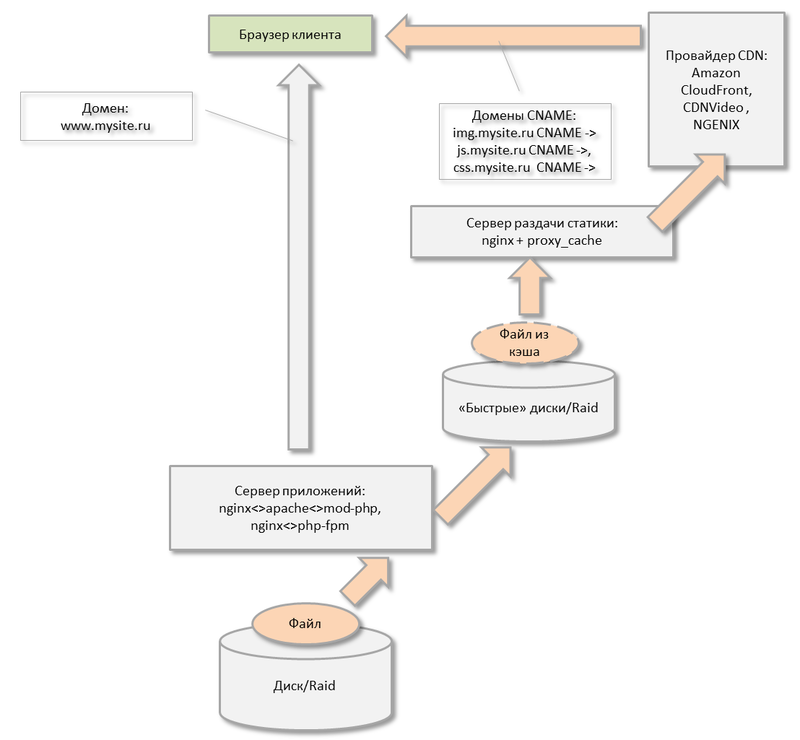

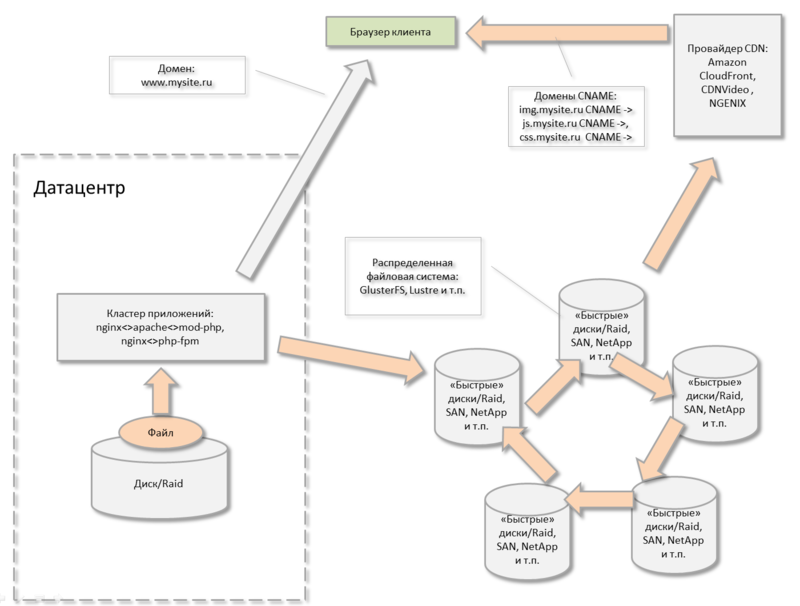

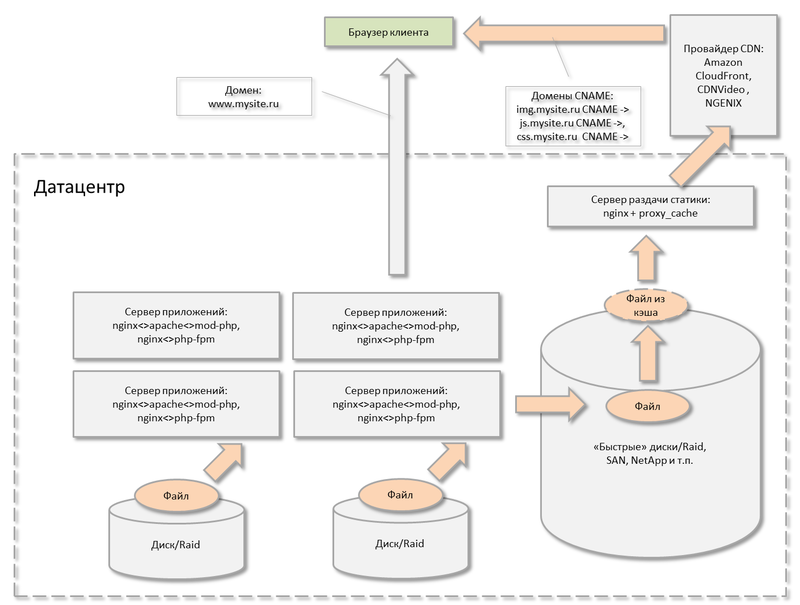

At this stage, the organizers of the web project begin to understand that it is not always effective to distribute even from very fast disks or even SAN from your data center:

In general, everyone understands that you need to be as close as possible to the client and give the client as much static as he wants, at the fastest possible speed, and not how much we can give from your server (s).

These tasks have recently begun to effectively address the CDN technology.

Your files really become available to the client at arm's length, from wherever he began to download them: from Red Square or from Sakhalin - the files will be sent from the nearest CDN provider servers.

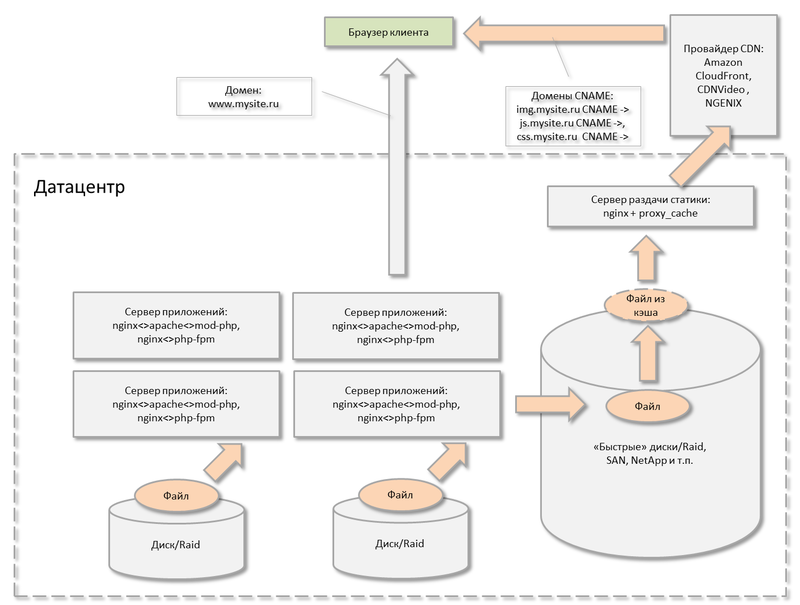

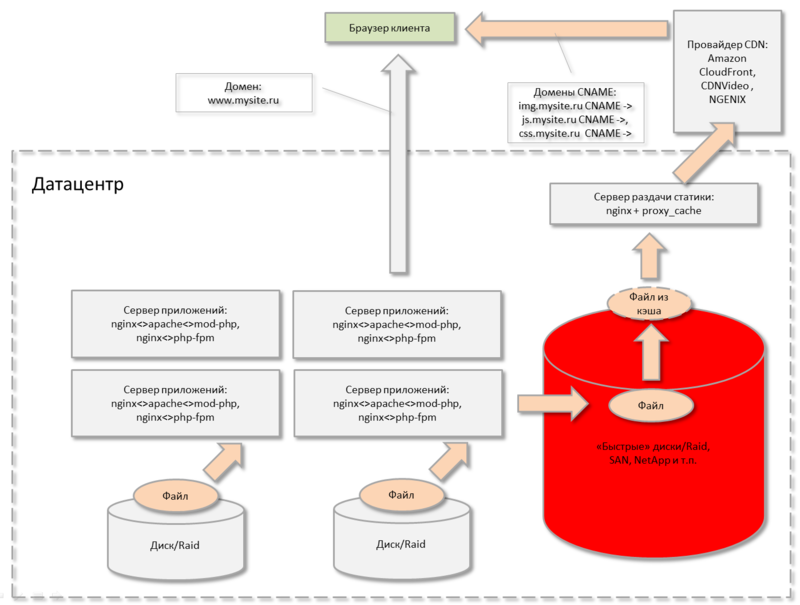

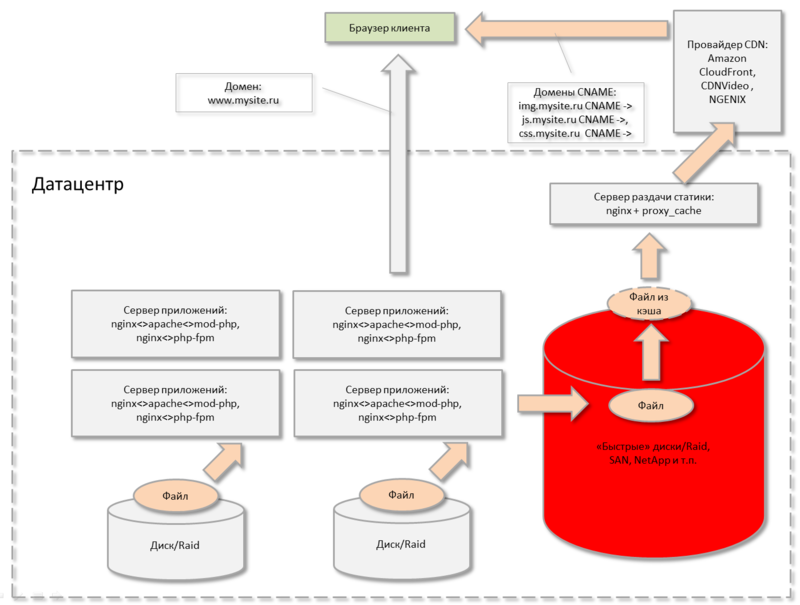

At this stage, the web project is gradually swelling. You accumulate more and more files for distribution, storing them, it can be said, centrally in one DC on one or a group of servers. Backing up such a number of files is becoming more expensive, longer and more difficult. Full backup is done for a week, you have to do snapshots, increments and other things that increase the risk of suicide.

You are afraid to think about the following:

And in general, you have invested so much money in the infrastructure, and the risks, and considerable ones, remain. Somehow it turns out dishonest.

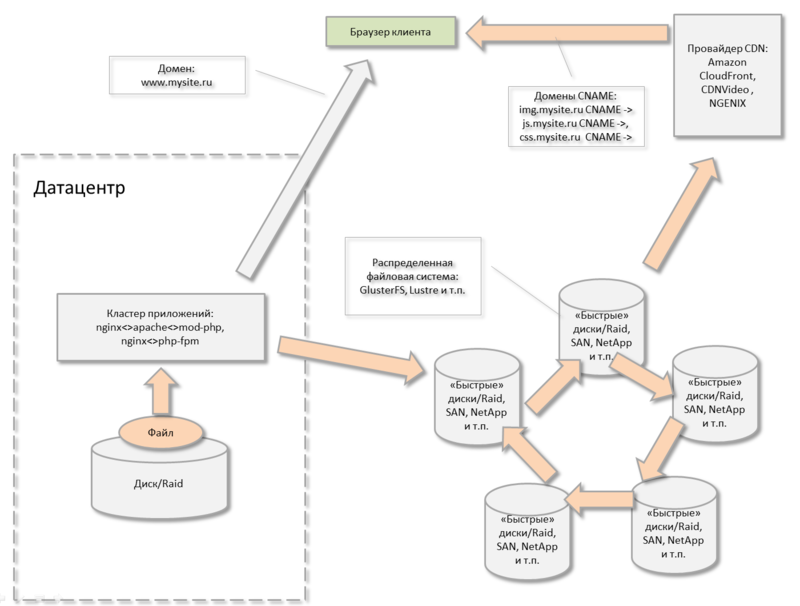

At this moment, the left, responsible for logic, hemisphere is disconnected from many, and they are drawn to mysterious monstrous solutions - to deploy their distributed file system in several data centers. Perhaps for a project with a large budget it will be the exit ...

Then it will be necessary to administer all this economy independently, which will probably require the presence of a whole exploitation department :-)

One of the most effective and, most importantly, cheap solutions is to use the capabilities of well-known cloud providers that rent for very tasty, especially with a large amount of data, prices, a way to store unlimited amount of your files in the cloud with very high reliability (giant DropBox) . The providers organized at their facilities the above-described distributed file systems, highly reliable and located in several data centers, separated geographically. Moreover, these services are, as a rule, closely integrated into the CDN network of this provider. Those. You will not only conveniently and cheaply store your files, but also distribute them as conveniently as possible for the client.

Things are easy - you need to configure a layer or a virtual file system (or an analogue of a cloud disk) on your web project. Among the free solutions, you can highlight the FUSE-tools (for linux) such as s3fs .

However, to translate the current web application to this cheap and very efficient technology from a business point of view, you need to program and make a number of changes to the existing code and application logic.

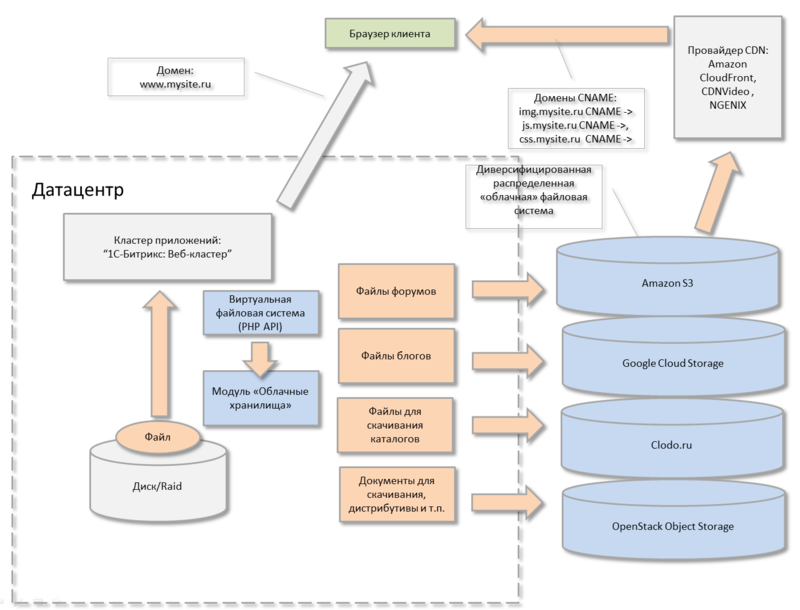

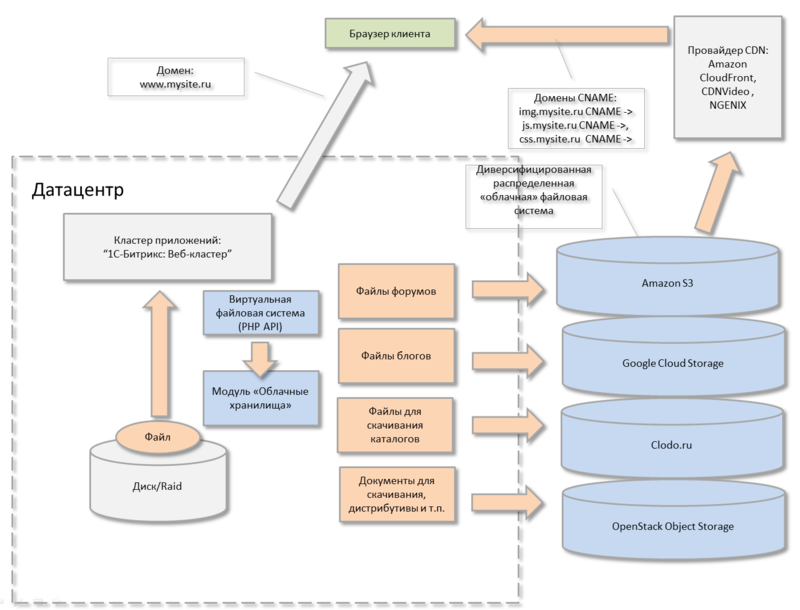

In our product, we carefully analyzed the cases of web projects described above, which need to quickly deliver files to clients, and sometimes files — terabytes and more, and implemented these capabilities out of the box in the Cloud Storage module .

I honestly like that you can store data from individual modules of the system ... in different cloud storages :-). It really diversifies your file storage. You can scatter them depending on the address or type of client. Either on the type of information - someone effectively gives a light static, someone heavy content.

It does not matter what you are writing a web project - sooner or later you will definitely encounter the chilling problem of an avalanche-like increase in the volume of files for storage and distribution and you will have to make the right architectural decision.

In the article, we reviewed the main stages and principles of organizing file distribution on web solutions of any size, weighed the pros and cons, looked at how it was implemented in the 1C-Bitrix platform. Given the fact that the volume of information distributed by web projects is growing very rapidly, this knowledge is relevant and must be useful to both web application architects and project managers.

And of course I invite everyone to our cloud service Bitrix24 , in which we actively use the technologies described above. Good luck to all!

Recently an interesting trend has emerged: web projects are "swelling" without limit. The data volume of many popular sites grows faster and faster, and all of it has to be stored somewhere, backed up efficiently (it will be fun if 500 TB of files are lost :-)), and of course distributed to clients quickly, so that everyone can download at high speed.

For a system administrator, backing up such a volume of files even rarely, let alone daily, evokes thoughts of suicide, and the web project manager wakes up in a cold sweat at the thought of upcoming 6-hour data center maintenance (to move the files from one data center to another, you would need to load a car trunk with hard drives a couple of times :-)).


Colleagues, looking wise, advise buying one of NetApp's solutions, but unfortunately the project's budget is 1,000 times smaller; it is a start-up, after all ... so what are we going to do?

In this article I want to go through common cases of cheap and expensive solutions to this problem, from simple to complex. At the end I will describe how the problem is solved in our flagship product: it is always useful to compare open-source solutions with commercial ones, since brains need gymnastics.

Admission to the HighLoad cloakroom

If you have ever attended a HighLoad conference, you probably know that at the entrance you have to answer the question: why put nginx in front of apache? Otherwise they will not let you into the cloakroom ;-)

That's right: nginx or a similar reverse proxy serves files efficiently, especially over slow channels, which seriously reduces server load and improves overall web application performance. nginx serves the bulk of the files, while apache or php-fpm handles requests on the application server.

Such web applications live well until the total size of the files grows to, say, dozens of gigabytes. When several hundred clients start downloading files from the server at the same time and there is not enough RAM to cache the files, the disk, and then the RAID array, will simply die.

Static server

Around this stage, static files often start being served from a different domain, at first nominally and then physically, preferably a domain that does not carry a lot of unnecessary cookies. And this is correct: we all know that the client browser limits the number of connections per domain, so when the site suddenly starts serving itself from two or more domains, it loads noticeably faster in the client browser.

The result looks something like:

- www.mysite.ru

- img.mysite.ru

- js.mysite.ru

- css.mysite.ru

- download.mysite.ru

- etc.

To serve static files from the different domains more efficiently, they are moved to one or more dedicated static servers.

It is useful to enable nginx caching on such a server and give it a “fast” RAID array (so that it takes more clients to bring the disks “to their knees”).
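As a rough illustration of the domain split described above, here is a minimal Python sketch that picks a static domain by file extension. The extension table, the `static_url` helper and the `http://` scheme are assumptions made for the example; only the domain names come from the list above.

```python
from pathlib import PurePosixPath

# Hypothetical mapping of file extensions to the static subdomains listed above.
STATIC_HOSTS = {
    ".png": "img.mysite.ru",
    ".jpg": "img.mysite.ru",
    ".gif": "img.mysite.ru",
    ".js": "js.mysite.ru",
    ".css": "css.mysite.ru",
    ".zip": "download.mysite.ru",
}
DEFAULT_HOST = "www.mysite.ru"


def static_url(path: str) -> str:
    """Return an absolute URL that serves `path` from the matching domain."""
    suffix = PurePosixPath(path).suffix.lower()
    host = STATIC_HOSTS.get(suffix, DEFAULT_HOST)  # unknown types stay on www
    return f"http://{host}/{path.lstrip('/')}"
```

Templates would then call `static_url("/upload/logo.png")` instead of hard-coding a path, so moving the files to a physically separate server later only changes the table.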

CDN distribution

At this stage, the people running the web project begin to understand that serving files from your own data center is not always effective, even from very fast disks or a SAN:

- A valuable client in Vladivostok started downloading a file from a Moscow server, and not even from the city but from aboard his yacht. The channel, of course, turned out to be narrow.

- Disaster struck: the cleaning lady accidentally pulled the power cord out of the static server, cutting off 10,000 clients who were downloading a new distribution package. Or maintenance has begun in the data center and nobody knows when it will end, or rather, when they will find the cause of the simultaneous failure of three diesel generators out of two.

- Or, on the contrary, there is such an influx of clients that the server no longer has the capacity to serve that much static content at once, or the channels are already loaded to the limit.

In general, everyone understands that you need to be as close to the client as possible and give the client as much static content as he wants at the highest possible speed, rather than as much as your server(s) can deliver.

CDN technology has recently begun to address these tasks effectively.

Your files really become available at arm's length from the client, wherever he downloads them from: whether from Red Square or from Sakhalin, the files will be sent from the nearest servers of the CDN provider.
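The idea of "the nearest server" can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the two edge locations and their approximate coordinates are made up for the example, and distance is used as a stand-in for "nearest". Real CDN providers typically route clients with DNS or anycast and also take load and network topology into account.

```python
import math

# Hypothetical edge locations as (latitude, longitude) in degrees, approximate.
EDGES = {
    "moscow": (55.75, 37.62),
    "vladivostok": (43.12, 131.89),
}


def distance_km(a, b):
    """Great-circle distance between two (lat, lon) points (haversine formula)."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))  # 6371 km: mean Earth radius


def nearest_edge(client, edges=EDGES):
    """Name of the edge location closest to the client's coordinates."""
    return min(edges, key=lambda name: distance_km(client, edges[name]))
```

A client on Sakhalin (roughly 46.96, 142.73) would be sent to the Vladivostok edge, while a client on Red Square stays on the Moscow one.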

"Vertical" scaling of static file distribution

At this stage the web project keeps swelling. You accumulate more and more files for distribution and store them, so to speak, centrally: in one data center, on one server or a group of servers. Backing up that many files becomes more expensive, slower and harder. A full backup takes a week, so you have to resort to snapshots, incremental backups and other things that increase the risk of suicide.
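To see where "a week" comes from, it helps to do the arithmetic. The sketch below is a back-of-the-envelope estimate, not a measurement: the function name and the sample figures (50 TB, a sustained 100 MB/s) are assumptions chosen for illustration, with decimal units throughout.

```python
def transfer_days(size_tb: float, throughput_mb_s: float) -> float:
    """Days needed to copy `size_tb` terabytes at a sustained rate
    of `throughput_mb_s` megabytes per second (1 TB = 1,000,000 MB)."""
    seconds = size_tb * 1_000_000 / throughput_mb_s
    return seconds / 86_400  # seconds in a day


if __name__ == "__main__":
    # Roughly 5.8 days for 50 TB at 100 MB/s; ten times the data, ten times longer.
    print(round(transfer_days(50, 100), 1))
    print(round(transfer_days(500, 100), 1))
```

The estimate ignores small-file overhead and contention with production traffic, both of which usually make real backups slower.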

You are afraid to think about the following:

- The disks in the file storage fail (a RAID 10 array can die by losing both disks of a mirrored pair at once, and this is not that rare), and you will spend ... two or three weeks restoring files from backup.

- The data center has finally learned to count its diesel generators, but in the morning you are greeted with the news that the disks have failed and that your backups were irreversibly corrupted by a software error (this once happened to us at Amazon).

- An advertising campaign starts, an influx of clients from the Far East or from Europe begins, and you cannot cope with serving that much static content effectively.

And in general, you have invested so much money in the infrastructure, yet considerable risks remain. Somehow that does not seem fair.

Circles and wheels - distributed file system

At this point the left hemisphere, the one responsible for logic, switches off for many people, and they are drawn to mysterious, monstrous solutions: deploying their own distributed file system across several data centers. Perhaps for a project with a large budget this will be the way out ...

But then you will have to administer this whole setup yourself, which will probably require an entire operations department :-)

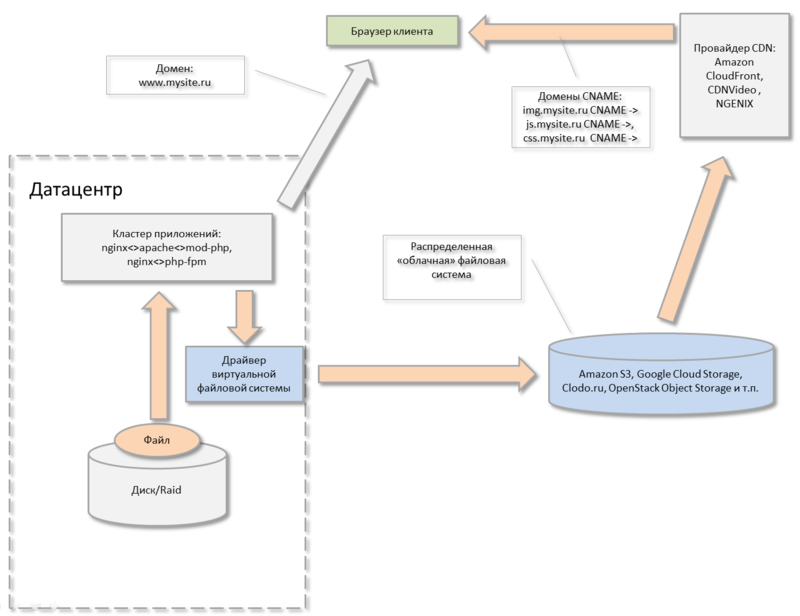

Virtual file system

One of the most effective and, most importantly, cheapest solutions is to use well-known cloud providers, which offer, at very attractive prices (especially for large amounts of data), a way to store an unlimited number of your files in the cloud with very high reliability (a giant Dropbox). The providers have built the distributed file systems described above on their own facilities: highly reliable and spread across several geographically separated data centers. Moreover, these services are, as a rule, tightly integrated with the provider's CDN. That is, you will not only store your files conveniently and cheaply, but also distribute them in the way most convenient for the client.

The rest is simple: you need to set up a layer or a virtual file system (or an equivalent of a cloud disk) in your web project. Among free solutions, FUSE-based tools (for Linux) such as s3fs stand out.

However, to move an existing web application to this technology, which is cheap and very efficient from a business point of view, you will need to do some programming and make a number of changes to the existing code and application logic.
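The kind of code change meant here is usually a thin storage layer: the application stops touching the disk directly and talks to an interface instead. The Python sketch below shows one possible shape of such a layer. All names (`FileStorage`, `LocalStorage`, `InMemoryCloudStorage`, `store_upload`) are hypothetical, and the "cloud" backend is an in-memory stand-in; a real one would call the provider's API.

```python
from pathlib import Path
from typing import Protocol


class FileStorage(Protocol):
    """What the application needs from any storage backend."""
    def save(self, key: str, data: bytes) -> None: ...
    def load(self, key: str) -> bytes: ...
    def url(self, key: str) -> str: ...


class LocalStorage:
    """Keeps files on the web server's own disk."""

    def __init__(self, root: str, base_url: str) -> None:
        self.root = Path(root)
        self.base_url = base_url.rstrip("/")

    def save(self, key: str, data: bytes) -> None:
        target = self.root / key
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_bytes(data)

    def load(self, key: str) -> bytes:
        return (self.root / key).read_bytes()

    def url(self, key: str) -> str:
        return f"{self.base_url}/{key}"


class InMemoryCloudStorage:
    """Stand-in for a cloud bucket; objects live in a dict for the example."""

    def __init__(self, cdn_host: str) -> None:
        self.cdn_host = cdn_host
        self.objects: dict[str, bytes] = {}

    def save(self, key: str, data: bytes) -> None:
        self.objects[key] = data

    def load(self, key: str) -> bytes:
        return self.objects[key]

    def url(self, key: str) -> str:
        return f"https://{self.cdn_host}/{key}"


def store_upload(storage: FileStorage, key: str, data: bytes) -> str:
    """Application code: save a file and return the URL to show to the client."""
    storage.save(key, data)
    return storage.url(key)
```

Because `store_upload` depends only on the interface, switching from local disk to a cloud backend becomes a configuration decision rather than a rewrite of every place that handles files.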

The "Cloud Storage" module of the "1C-Bitrix" platform

In our product, we carefully analyzed the cases described above, in which web projects need to deliver files to clients quickly, sometimes terabytes of files and more, and implemented these capabilities out of the box in the Cloud Storage module.

I honestly like that you can store the data of individual modules of the system ... in different cloud storages :-). This really diversifies your file storage. You can spread files out depending on the client's address or type, or on the type of information: one provider is good at serving light static files, another at heavy content.
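To make the idea of spreading files across storages concrete, here is a small rule-based sketch in Python. It is not the Cloud Storage module's actual API: the `Rule` class, the bucket names, the thresholds and the choice of criteria (module, extension, size) are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    bucket: str                    # hypothetical storage name
    module: Optional[str] = None   # None matches any module
    extensions: tuple = ()         # empty tuple matches any file type
    min_size: int = 0              # minimum file size in bytes

    def matches(self, module: str, filename: str, size: int) -> bool:
        if self.module is not None and module != self.module:
            return False
        if self.extensions and not filename.lower().endswith(self.extensions):
            return False
        return size >= self.min_size


# First matching rule wins, so the most specific rules go first.
RULES = [
    Rule(bucket="heavy-content", min_size=100 * 1024 * 1024),
    Rule(bucket="light-static", extensions=(".css", ".js", ".png")),
    Rule(bucket="forum-files", module="forum"),
]


def pick_bucket(module: str, filename: str, size: int,
                rules=RULES, default: str = "local") -> str:
    """Return the storage for a file, or `default` if no rule applies."""
    for rule in rules:
        if rule.matches(module, filename, size):
            return rule.bucket
    return default
```

With these rules a 200 MB video goes to the "heavy" storage, stylesheets and scripts go to the one tuned for light static files, and anything unmatched stays on local disk.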

Results

It does not matter what you write your web project in: sooner or later you will definitely run into the chilling problem of an avalanche-like growth in the volume of files to store and distribute, and you will have to make the right architectural decision.

In this article we reviewed the main stages and principles of organizing file distribution for web solutions of any size, weighed the pros and cons, and looked at how it is implemented in the 1C-Bitrix platform. Given that the volume of information distributed by web projects is growing very rapidly, this knowledge is relevant and should be useful to both web application architects and project managers.

And of course I invite everyone to our cloud service Bitrix24, in which we actively use the technologies described above. Good luck to all!

Source: https://habr.com/ru/post/151865/
