Amazon EC2 Network Speed Test

When working with Amazon, a lot stays behind the scenes. For the average user this is even a good thing: they need a working service, and how it is implemented does not matter. But for those who design systems for Amazon or other cloud providers, it can be a problem. Some internal details can be clarified with technical support, but in most cases a better understanding requires running various tests and experiments.

Take network performance, for example. Does Amazon guarantee a certain network bandwidth for every machine, and how does network speed depend on server resources, region or time of day? Amazon support strongly recommends using large instances if network speed is an important criterion, and says that the maximum speed is 1 Gbit/s. But it is always better to check everything in practice.

1. Test results

All tests were repeated several times. To avoid random distortions, individual measurements that differed sharply from the rest were discarded, and the remaining data were averaged.
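The article does not say how outliers were identified or how the iperf reports were parsed. The following is a minimal sketch of that post-processing. It assumes iperf 2's usual client summary line (for example `[  3]  0.0-10.0 sec  1.10 GBytes   941 Mbits/sec`) and an arbitrary rule that drops runs deviating from the median by more than 20%. `bandwidth_mbits`, `robust_mean` and the threshold are hypothetical, not the author's procedure:

```ruby
# Multipliers from iperf's bandwidth units to Mbit/s
UNIT_TO_MBITS = { "K" => 0.001, "M" => 1.0, "G" => 1000.0 }.freeze

# Last "<value> <K|M|G>bits/sec" figure in an iperf client report, in Mbit/s
def bandwidth_mbits(report)
  value, unit = report.scan(%r{([\d.]+)\s+([KMG])bits/sec}).last
  return nil unless value

  value.to_f * UNIT_TO_MBITS.fetch(unit)
end

# Median of a non-empty list
def median(values)
  sorted = values.sort
  mid = sorted.size / 2
  sorted.size.odd? ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2.0
end

# Hypothetical rule: drop runs more than `tolerance` (a fraction) away from
# the median, average the rest; fall back to the median if nothing is left.
def robust_mean(values, tolerance: 0.2)
  raise ArgumentError, "no measurements" if values.empty?

  center = median(values)
  kept = values.select { |v| (v - center).abs <= tolerance * center }
  return center if kept.empty?

  kept.sum / kept.size.to_f
end

puts bandwidth_mbits("[  3]  0.0-10.0 sec  1.10 GBytes   941 Mbits/sec")  # prints 941.0
# Illustrative numbers, not measurements from the article
puts robust_mean([940.0, 935.0, 610.0, 945.0])                           # prints 940.0
```

The median serves as the reference so that a single bad run cannot shift the threshold it is judged against.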

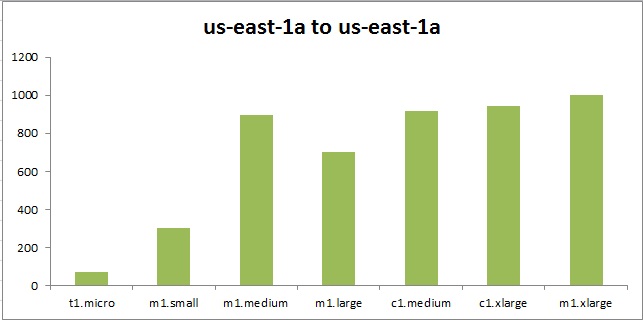

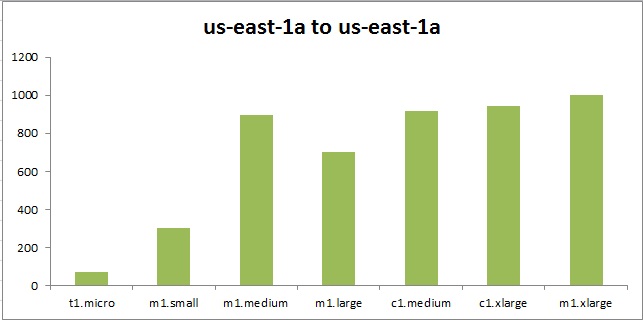

Within one availability zone, Mbit/s:

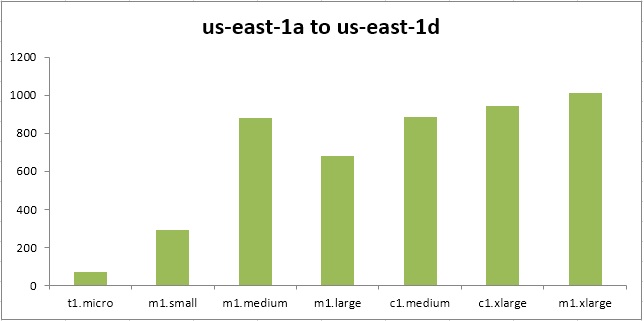

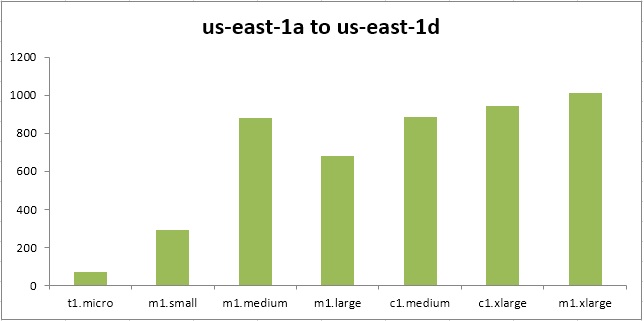

Different availability zones within the same region, Mbit/s:

m1.medium shows better results than m1.large. One possible explanation is that instances from t1.micro to m1.medium run on weaker physical servers, where an m1.medium can get almost the entire network channel of its host. In contrast, m1.large runs on more powerful but more heavily loaded servers, so its network speed is lower.

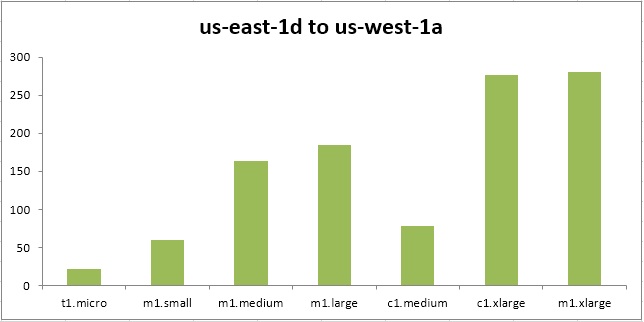

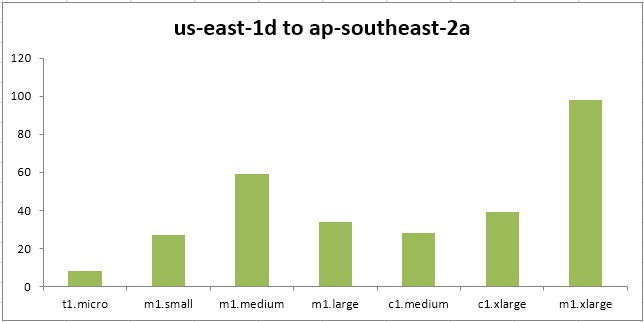

Different regions on the same continent, Mbit/s:

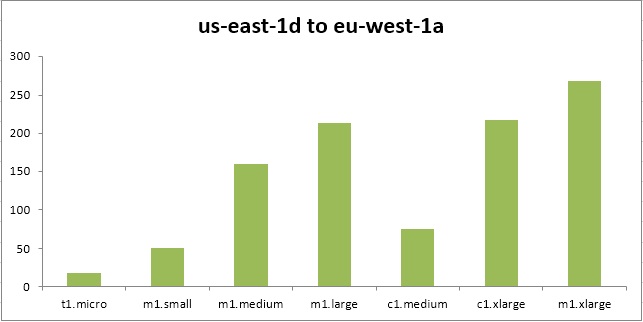

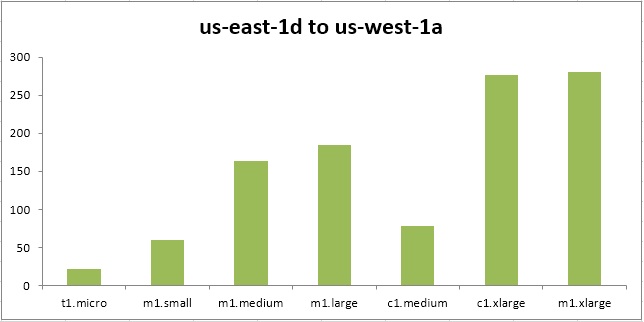

Between the US-EAST-1 and EU-WEST-1 regions, Mbit/s:

Even between regions, network speed differs across instance sizes, and for general-purpose (m1) instances it grows in direct proportion to instance size. Presumably, in this case Amazon deliberately limits the speed on its network equipment.

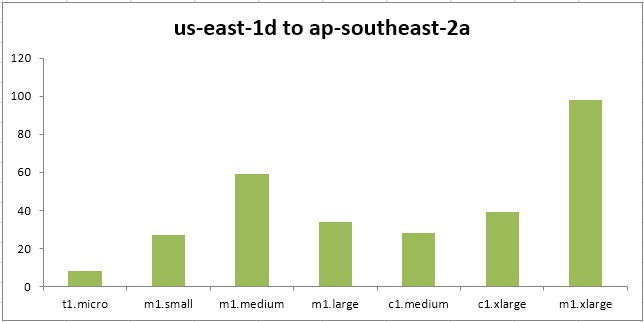

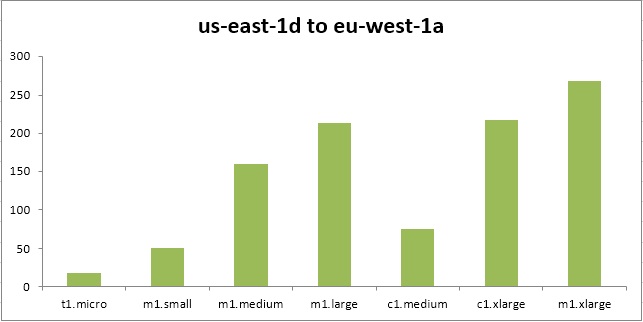

Between the US-EAST and AP-SOUTHEAST-2 regions, Mbit/s:

This test showed a very large variation in speed from run to run, most likely because of the strong influence of intermediate nodes and links.

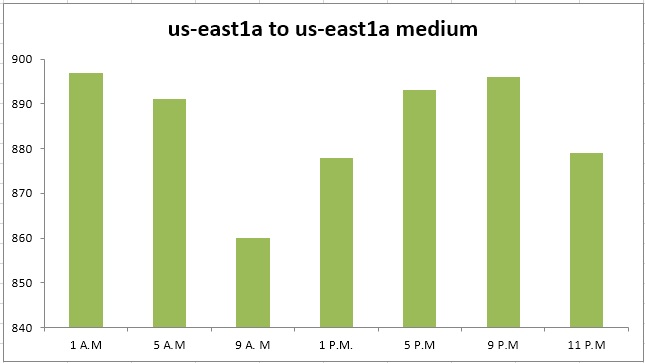

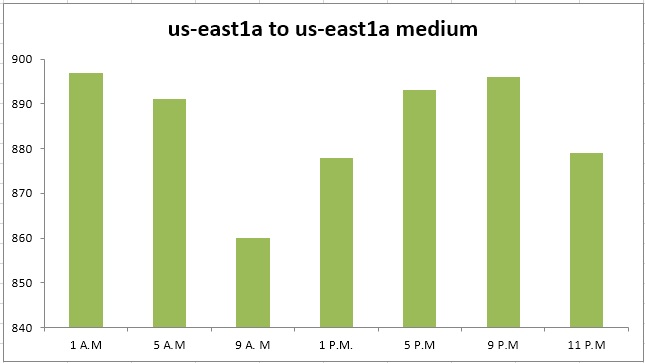

Dependence on the time of day for m1.medium, Mbit/s, UTC:

To check how network speed depends on the time of day, we chose m1.medium, which shows fairly good network speed for a mid-sized instance. The same pair of machines of any size could deviate by 5-10 percent across several back-to-back tests. The spread over the day is also 5-10 percent, so we can conclude that the time of day does not have a strong effect on network load.
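The time-of-day argument compares two spreads: the run-to-run variation of the same pair and the variation across the day. The following is a minimal sketch of that comparison. It assumes spread is measured as (max - min) / mean, and that the hourly spread must exceed the run-to-run spread by an arbitrary factor of 1.5 to count as an effect. `relative_spread` and `time_of_day_effect?` are hypothetical, and the numbers in the usage lines are illustrative, not the article's data:

```ruby
# Relative spread of a series of measurements: (max - min) / mean
def relative_spread(values)
  raise ArgumentError, "need at least one value" if values.empty?

  mean = values.sum / values.size.to_f
  (values.max - values.min) / mean
end

# Hypothetical criterion: the time of day matters only if hourly averages
# vary noticeably more (by `margin`) than back-to-back runs of the same pair.
def time_of_day_effect?(repeat_runs, hourly_means, margin: 1.5)
  relative_spread(hourly_means) > margin * relative_spread(repeat_runs)
end

# Illustrative numbers only
puts relative_spread([900.0, 950.0, 1000.0]).round(3)                    # prints 0.105
puts time_of_day_effect?([900.0, 950.0, 1000.0], [910.0, 960.0, 990.0])  # prints false
```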

2. Test conditions and test-bed setup

We needed to measure the raw maximum network bandwidth, as independent of the operating system and software as possible, so we chose iperf as the tool and Ubuntu 12.04 as the platform.

Launching all the machines, installing the necessary software on them and running the tests by hand is, of course, “not our way”.

Therefore, almost all operations were automated, using:

- a startup script built into the AMI that receives all the necessary data from user-data, described here
- Chef, which runs the recipe that installs, configures and runs iperf
- Cloud Formation, which launches stacks of virtual machines on a schedule

What is not automated is creating the Cloud Formation templates, collecting statistics and drawing graphs. All of this could easily be automated as well if such tests need to be run regularly.

Machines are launched in pairs (server and client), one pair for each instance type, availability zone and region.
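The test matrix is the cross product of availability-zone pairs and instance types, and each pair gets a unique tag such as `us1a-to-us1a-t1micro`. The following is a minimal sketch that enumerates it. It assumes the tag scheme is the region prefix plus the zone suffix, followed by the instance type without the dot, inferred from that single example. `short_az`, `pair_tag` and `test_matrix` are hypothetical helpers, not part of the original setup:

```ruby
# Hypothetical abbreviation scheme: "us-east-1a" -> "us1a"
def short_az(az)
  prefix = az.split("-").first   # e.g. "us"
  zone = az[/\d+[a-z]\z/]        # e.g. "1a"
  "#{prefix}#{zone}"
end

# Tag shared by the server and the client of one pair
def pair_tag(server_az, client_az, type)
  "#{short_az(server_az)}-to-#{short_az(client_az)}-#{type.delete('.')}"
end

# One tag per (AZ pair, instance type) combination
def test_matrix(az_pairs, types)
  az_pairs.product(types).map do |(server_az, client_az), type|
    pair_tag(server_az, client_az, type)
  end
end

puts test_matrix([%w[us-east-1a us-east-1a], %w[us-east-1a us-east-1b]],
                 %w[t1.micro m1.medium])
```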

The user-data passes the address of the Chef server, the role for the Chef client, the recipe attributes and a tag that is unique for each pair of machines:

```
chefserver=\"chefserver:4000\";chefrole=\"iperf\";chefattributes=\"iperf.role=client\";tag=\"us1a-to-us1a-t1micro\"
```

The double quotes are escaped so that the Cloud Formation template passes validation; without Cloud Formation this is not necessary.
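The startup script that reads user-data is not shown. The following is a minimal sketch of parsing such a string into a hash. It assumes the instance sees the string after Cloud Formation has removed the JSON escaping (plain `"` quotes), and that values never contain `;` or `"`. `parse_user_data` is a hypothetical name, not the author's helper:

```ruby
# Hypothetical parser for user-data of the form key="value";key="value"
# Assumes values never contain ';' or '"'.
def parse_user_data(raw)
  raw.strip.split(";").each_with_object({}) do |pair, result|
    key, value = pair.split("=", 2)   # split on the first '=' only
    next if key.nil? || value.nil?

    # Drop the surrounding double quotes around each value
    result[key.strip] = value.strip.delete_prefix('"').delete_suffix('"')
  end
end

data = parse_user_data('chefserver="chefserver:4000";chefrole="iperf";' \
                       'chefattributes="iperf.role=client";tag="us1a-to-us1a-t1micro"')
puts data["tag"]            # prints us1a-to-us1a-t1micro
puts data["chefattributes"] # prints iperf.role=client
```

Splitting on the first `=` only keeps values such as `iperf.role=client` intact.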

The iperf.role attribute holds the machine's role: iperf in server mode or iperf in client mode. A unique identifier for each machine is built from the tag and the role:

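```ruby
# GetValue is the author's helper (not shown); presumably it extracts a key from the user-data
tag = GetValue("#{node[:userdata]}", "#{node[:userdata]}", "tag")
# Unique per machine: "<tag>_<role>", e.g. us1a-to-us1a-t1micro_client
node.override['iperf']['hostid'] = "#{tag}_#{node['iperf']['role']}"
```

The server simply runs iperf: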

```ruby
# Start iperf in server mode in the background so the Chef run can finish
execute "iperf-server-run" do
  command "/usr/bin/iperf -s &"
  action :run
end
```

The client searches for the host with the same tag and the server role, gets its public_hostname, runs the test and mails the results. All parameters are passed through attributes:

```ruby
# Find the server of this pair: same tag, "server" role
server_node = search(:node, "hostid:#{node['iperf']['tag']}_server").first

if server_node
  server = server_node[:ec2][:public_hostname]
  Chef::Log.info("Server: #{server}")
  # Run the test and mail the report; -s (subject) goes before the recipient
  execute "iperf-client-run" do
    command "/usr/bin/iperf -t #{node['iperf']['time']} -c #{server} | " \
            "mail -s #{node['iperf']['region']}#{node['iperf']['shape']}_#{node['iperf']['role']} " \
            "#{node['iperf']['email']}"
    action :run
  end
else
  # Nothing to test yet; the next Chef client run repeats the search
  Chef::Log.info("iperf server not found, waiting.")
end
```

If no server with the required tag is found, the search is repeated at the interval configured for the Chef client.
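The pairing logic can be tried out without a Chef server. The following is a minimal sketch. It assumes an in-memory list of hashes stands in for the Chef node index, and a bounded retry loop stands in for the Chef client's periodic runs. `host_id`, `find_server`, `wait_for_server` and the `:hostid`/`:public_hostname` keys are hypothetical:

```ruby
# Each machine registers as "<tag>_<role>", so both members of a pair
# share the tag and differ only in the role suffix.
def host_id(tag, role)
  "#{tag}_#{role}"
end

# Stand-in for the Chef search: public hostname of the server registered
# under this tag, or nil if it has not registered yet.
def find_server(nodes, tag)
  match = nodes.find { |n| n[:hostid] == host_id(tag, "server") }
  match && match[:public_hostname]
end

# Stand-in for repeated Chef client runs: retry a bounded number of times,
# sleeping `interval` seconds between attempts.
def wait_for_server(fetch_nodes, tag, attempts:, interval:)
  attempts.times do
    hostname = find_server(fetch_nodes.call, tag)
    return hostname if hostname

    sleep(interval)
  end
  nil
end

# Usage: the server "registers" only on the second lookup.
lookups = 0
fetch = lambda do
  lookups += 1
  next [] if lookups < 2

  [{ hostid: host_id("us1a-to-us1a-t1micro", "server"), public_hostname: "server.example.com" }]
end
puts wait_for_server(fetch, "us1a-to-us1a-t1micro", attempts: 3, interval: 0)  # prints server.example.com
```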

Sample template for Cloud Formation:

```json
{
  "AWSTemplateFormatVersion" : "2010-09-09",
  "Parameters" : {
    "InstanceSecurityGroup" : {
      "Description" : "Name of an existing security group",
      "Default" : "iperf",
      "Type" : "String"
    }
  },
  "Resources" : {
    "US1atoUS1aT1MicroServer" : {
      "Type" : "AWS::EC2::Instance",
      "Properties" : {
        "AvailabilityZone" : "us-east-1a",
        "KeyName" : "test",
        "SecurityGroups" : [{ "Ref" : "InstanceSecurityGroup" }],
        "ImageId" : "ami-31308c58",
        "InstanceType" : "t1.micro",
        "UserData" : { "Fn::Base64" : { "Fn::Join" : ["", [
          "chefserver=\"chefserver:4000\";chefrole=\"iperf\";chefattributes=\"iperf.role=server\";tag=\"us1a-to-us1a-t1micro\""
        ]]}}
      }
    },
    "US1atoUS1aT1MicroClient" : {
      "Type" : "AWS::EC2::Instance",
      "Properties" : {
        "AvailabilityZone" : "us-east-1a",
        "KeyName" : "test",
        "SecurityGroups" : [{ "Ref" : "InstanceSecurityGroup" }],
        "ImageId" : "ami-31308c58",
        "InstanceType" : "t1.micro",
        "UserData" : { "Fn::Base64" : { "Fn::Join" : ["", [
          "chefserver=\"chefserver:4000\";chefrole=\"iperf\";chefattributes=\"iperf.role=client\";tag=\"us1a-to-us1a-t1micro\""
        ]]}}
      }
    }
  }
}
```

Every region used must have the required AMI images and key pairs. Security groups can also be created directly in the template.
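Creating the templates was not automated. However, the two instances of a pair differ only in role, and pairs differ only in availability zone, instance type and tag, so generating the templates is straightforward. The following is a minimal sketch using Ruby's standard `json` library. It assumes the AMI ID, the key name `test` and the `InstanceSecurityGroup` parameter from the sample template. `user_data`, `instance` and `pair_template` are hypothetical helpers. A stack is created in a single region, so both availability zones must belong to that region, and a cross-region pair would need one stack per region:

```ruby
require "json"

# User-data for one machine; quotes are plain here because JSON
# serialization escapes them in the generated template.
def user_data(role, tag)
  %(chefserver="chefserver:4000";chefrole="iperf";) +
    %(chefattributes="iperf.role=#{role}";tag="#{tag}")
end

# One EC2 instance resource for the given role
def instance(az, type, ami, role, tag)
  {
    "Type" => "AWS::EC2::Instance",
    "Properties" => {
      "AvailabilityZone" => az,
      "KeyName" => "test",
      "SecurityGroups" => [{ "Ref" => "InstanceSecurityGroup" }],
      "ImageId" => ami,
      "InstanceType" => type,
      "UserData" => { "Fn::Base64" => { "Fn::Join" => ["", [user_data(role, tag)]] } }
    }
  }
end

# Template with a server/client pair; logical IDs must be alphanumeric
def pair_template(server_az:, client_az:, type:, ami:, tag:)
  prefix = tag.delete("-")
  {
    "AWSTemplateFormatVersion" => "2010-09-09",
    "Parameters" => {
      "InstanceSecurityGroup" => {
        "Description" => "Name of an existing security group",
        "Default" => "iperf",
        "Type" => "String"
      }
    },
    "Resources" => {
      "#{prefix}Server" => instance(server_az, type, ami, "server", tag),
      "#{prefix}Client" => instance(client_az, type, ami, "client", tag)
    }
  }
end

puts JSON.pretty_generate(
  pair_template(server_az: "us-east-1a", client_az: "us-east-1a",
                type: "t1.micro", ami: "ami-31308c58", tag: "us1a-to-us1a-t1micro")
)
```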

An example of launching a Cloud Formation stack from cron:

```
05 00 * * * cfn-create-stack --template-file=iperf_us-east-1a-to-us-east-1a.template --stack-name iperf-us-east-1a-to-us-east-1a --region us-east-1
50 00 * * * cfn-delete-stack iperf-us-east-1a-to-us-east-1a --region us-east-1 --force
```

To save money, no stack stays up for more than an hour.
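The cron entries follow a fixed pattern per stack, so they can be generated as well. The following is a minimal sketch that also rejects schedules where a stack would live an hour or longer. It assumes one template file per stack, named after the test pair as in the cron example. `cron_lines` is a hypothetical helper, and the `cfn-create-stack`/`cfn-delete-stack` arguments are copied from that example rather than taken from the CLI reference:

```ruby
# Build the create/delete crontab entries for one stack and check that the
# stack lives for 1-59 minutes (both times fall on the same day).
def cron_lines(pair, region, create_at:, delete_at:)
  stack = "iperf-#{pair}"
  template = "iperf_#{pair}.template"
  create_h, create_m = create_at
  delete_h, delete_m = delete_at
  lifetime = (delete_h * 60 + delete_m) - (create_h * 60 + create_m)
  unless lifetime.positive? && lifetime < 60
    raise ArgumentError, "stack lifetime must be 1-59 minutes, got #{lifetime}"
  end

  [
    format("%02d %02d * * * cfn-create-stack --template-file=%s --stack-name %s --region %s",
           create_m, create_h, template, stack, region),
    format("%02d %02d * * * cfn-delete-stack %s --region %s --force",
           delete_m, delete_h, stack, region)
  ]
end

puts cron_lines("us-east-1a-to-us-east-1a", "us-east-1", create_at: [0, 5], delete_at: [0, 50])
```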

Interesting facts revealed during the tests:

- in about 5% of cases, when the stack started, at least one of the machines failed its health check and did not start correctly
- in about 5% of cases, the whole stack did not start but hung in the "creation in progress" state; it had to be deleted manually and restarted
- CPU-optimized (c1) instances took twice as long to start as the others, although when launched without Cloud Formation they start as fast as the rest

Hope this information was helpful to you.

Source: https://habr.com/ru/post/151329/
