Kinect for Windows SDK. Part 3. Functionality

- Kinect for Windows SDK. Part 1. Sensor

- Kinect for Windows SDK. Part 2. Data Flows

- Kinect for Windows SDK. Part 3. Functionality

- Playing cubes with Kinect

- Program, aport!

Tracking the human figure

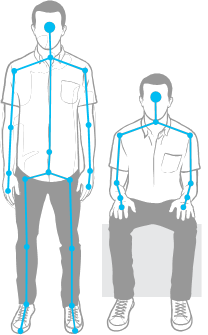

Thanks to this remarkable feature, Kinect can recognize a person's figure and movements. And not just one person, but as many as six! More precisely, the sensor can detect up to six people in its field of view, but it collects detailed information for only two of them. Take a look at the picture above.

Personally, my first question was: "Why did Kinect build a full 20-point skeleton for these two guys and show only navels for the rest?" The answer is the limit described above: a detailed skeleton is built for two of the recognized figures, and the rest have to be content with having been noticed at all. MSDN even has an example of how to change this behavior, for instance to build the detailed skeleton for the people closest to the sensor.
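The "closest people first" idea mentioned above can be sketched as a small helper. This is a minimal illustration, not the MSDN sample: the hypothetical `PickTwoClosest` method takes (tracking ID, distance) pairs, which in a real handler would come from `Skeleton.TrackingId` and `Skeleton.Position.Z`, and returns at most two IDs, nearest first. Treating ID 0 as an empty slot is an assumption. Applying the result through the SDK's `SkeletonStream.AppChoosesSkeletons` property and `ChooseSkeletons` method is shown only in comments, so the snippet compiles without the Kinect assemblies.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: picks the tracking IDs of the (at most) two figures
// closest to the sensor. Z is the distance from the sensor in meters.
public static class ClosestSkeletons
{
    public static int[] PickTwoClosest(IEnumerable<(int TrackingId, float Z)> candidates)
    {
        return candidates
            .Where(c => c.TrackingId != 0)   // assumption: 0 marks an empty slot
            .OrderBy(c => c.Z)               // nearest figures first
            .Take(2)                         // only two figures get a full skeleton
            .Select(c => c.TrackingId)
            .ToArray();
    }

    // With the real SDK (not compiled here), the IDs could be applied roughly as:
    //   sensor.SkeletonStream.AppChoosesSkeletons = true;
    //   if (ids.Length == 2) sensor.SkeletonStream.ChooseSkeletons(ids[0], ids[1]);
    //   else if (ids.Length == 1) sensor.SkeletonStream.ChooseSkeletons(ids[0]);
}
```

For example, candidates (7, 2.5 m), (3, 1.2 m) and (9, 3.1 m) yield the IDs 3 and 7.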

The points of the skeleton are called joints (Joint), which can be translated as "joint", "articulation" or "node". "Node" seems the more fitting translation to me: calling the head a joint doesn't sound quite right.


So, the first thing an application must do to receive information about the figures in the frame is enable the skeleton stream:

```csharp
sensor.SkeletonFrameReady += SkeletonsReady;
sensor.SkeletonStream.Enable();
```

The second step is to handle the SkeletonFrameReady event. All that remains is to extract information about the figures of interest from the frame. Each figure is one object of the Skeleton class. The object stores the tracking status in the TrackingState property (whether the complete skeleton has been built or only the figure's location is known) and the node data in the Joints property. In essence, Joints is a dictionary whose keys are values of the JointType enumeration. For example, suppose you want the location of the left knee. Nothing could be easier:

```csharp
Joint kneeLeft = skeleton.Joints[JointType.KneeLeft];
// Position is a SkeletonPoint: its coordinates are floats (meters), not ints
float x = kneeLeft.Position.X;
float y = kneeLeft.Position.Y;
float z = kneeLeft.Position.Z;
```

The values of the JointType enumeration are illustrated, rather originally, on a figure of the Vitruvian Man.
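Putting these fragments together, a complete SkeletonFrameReady handler might look like the sketch below. It assumes Kinect for Windows SDK 1.x (the Microsoft.Kinect assembly) and an already connected sensor; the `SkeletonReader` class name and the console output are illustrative only. It shows both tracking states discussed above: full skeletons with joints, and figures for which only the position ("the navel") is known.

```csharp
using System;
using Microsoft.Kinect;

// Sketch: enable the skeleton stream and read joint positions
// in the SkeletonFrameReady handler (Kinect for Windows SDK 1.x).
public class SkeletonReader
{
    private Skeleton[] skeletons = new Skeleton[0];

    public void Start(KinectSensor sensor)
    {
        sensor.SkeletonFrameReady += SkeletonsReady;
        sensor.SkeletonStream.Enable();
        sensor.Start();
    }

    private void SkeletonsReady(object sender, SkeletonFrameReadyEventArgs e)
    {
        using (SkeletonFrame frame = e.OpenSkeletonFrame())
        {
            // The frame can be null if it was skipped or is no longer available.
            if (frame == null) return;
            if (skeletons.Length != frame.SkeletonArrayLength)
                skeletons = new Skeleton[frame.SkeletonArrayLength];
            frame.CopySkeletonDataTo(skeletons);
        }

        foreach (Skeleton skeleton in skeletons)
        {
            if (skeleton.TrackingState == SkeletonTrackingState.Tracked)
            {
                // Full skeleton: individual joints are available.
                Joint kneeLeft = skeleton.Joints[JointType.KneeLeft];
                if (kneeLeft.TrackingState != JointTrackingState.NotTracked)
                    Console.WriteLine("Figure {0}: left knee at ({1:F2}, {2:F2}, {3:F2}) m",
                        skeleton.TrackingId, kneeLeft.Position.X,
                        kneeLeft.Position.Y, kneeLeft.Position.Z);
            }
            else if (skeleton.TrackingState == SkeletonTrackingState.PositionOnly)
            {
                // Only the overall position of the figure is known.
                Console.WriteLine("Figure {0}: position only, {1:F2} m away",
                    skeleton.TrackingId, skeleton.Position.Z);
            }
        }
    }
}
```

Reusing the `skeletons` array between frames avoids allocating a new array on every event.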

So far I have talked about the 20-node skeleton, which cannot always be built (for example, when the person's lower body is hidden behind a desk). That is why a mode called seated skeletal tracking appeared. In this mode, the sensor builds a partial 10-node skeleton.

For Kinect to recognize figures in this mode, it is enough to set the TrackingMode property of the SkeletonStream object when initializing the stream:

```csharp
sensor.SkeletonStream.TrackingMode = SkeletonTrackingMode.Seated;
```

In seated mode, the sensor can also detect up to six figures and track two of them. But there are some differences. For example, for the sensor to "notice" you, you need to move or wave your hands, whereas in full-skeleton mode it is enough to stand in front of the sensor. Seated tracking is also more resource-intensive, so be prepared for a lower frame rate (FPS).

Another article in the series, "Playing cubes with Kinect", is devoted entirely to tracking the human figure.

Speech recognition

Strictly speaking, speech recognition is not a built-in Kinect feature: it relies on a separate SDK, and the sensor only acts as an audio source. Therefore, developing speech recognition applications requires installing the Microsoft Speech Platform. Optionally, you can install additional language packs, and for client machines there is a separate package (the Speech Platform Runtime).

In general, working with the Speech Platform looks like this:

- choose one of the recognition engines installed in the system for the required language;

- create a dictionary (grammar) and pass it to the selected engine. In plain terms, decide which words your application should be able to recognize and pass them to the engine as strings (there is no need to record audio files with each word);

- set the engine's audio source: Kinect, a microphone or an audio file;

- tell the engine to start recognition.

After that, all that remains is to handle the event raised whenever the engine recognizes a word. An example of working with this platform can be found in the article "Program, aport!".
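The four steps and the recognition event might be sketched as follows. This is a minimal illustration, not the code from "Program, aport!": it assumes the Microsoft Speech Platform SDK (the Microsoft.Speech assembly) with an en-US language pack installed, uses a hypothetical three-word vocabulary, and feeds the engine the 16 kHz, 16-bit mono PCM stream returned by `KinectSensor.AudioSource.Start()`, which is the format used in the Kinect SDK samples.

```csharp
using System;
using System.IO;
using System.Linq;
using Microsoft.Kinect;
using Microsoft.Speech.AudioFormat;
using Microsoft.Speech.Recognition;

public static class SpeechSketch
{
    // Hypothetical vocabulary: plain strings, no audio samples needed.
    public static readonly string[] Words = { "red", "green", "blue" };

    public static SpeechRecognitionEngine StartRecognition(KinectSensor sensor)
    {
        // 1. Choose an installed recognizer for the required language.
        RecognizerInfo info = SpeechRecognitionEngine.InstalledRecognizers()
            .FirstOrDefault(r => r.Culture.Name == "en-US");
        if (info == null)
            throw new InvalidOperationException("No en-US recognizer is installed.");

        var engine = new SpeechRecognitionEngine(info.Id);

        // 2. Build the dictionary (grammar) from strings and load it.
        var builder = new GrammarBuilder { Culture = info.Culture };
        builder.Append(new Choices(Words));
        engine.LoadGrammar(new Grammar(builder));

        // The event raised each time a word from the grammar is recognized.
        engine.SpeechRecognized += (s, e) =>
            Console.WriteLine("{0} (confidence {1:F2})", e.Result.Text, e.Result.Confidence);

        // 3. Use the Kinect microphone array as the audio source.
        //    The sensor has to be running before its audio source is started.
        if (!sensor.IsRunning) sensor.Start();
        Stream audio = sensor.AudioSource.Start();
        engine.SetInputToAudioStream(audio,
            new SpeechAudioFormatInfo(EncodingFormat.Pcm, 16000, 16, 1, 32000, 2, null));

        // 4. Start continuous recognition (keeps listening after each result).
        engine.RecognizeAsync(RecognizeMode.Multiple);
        return engine;
    }
}
```

`RecognizeMode.Multiple` keeps the engine listening after each recognized word; with `RecognizeMode.Single` it would stop after the first result.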

Face tracking

Unlike figure tracking, face tracking is implemented entirely in software, based on data from the video stream (color stream) and the depth stream. Therefore, how fast tracking works depends on the resources of the client computer.

It is worth noting that face tracking is not the same as face recognition. Funnily enough, I have come across articles claiming that Kinect implements face recognition. So what is face tracking, and where can it be useful?

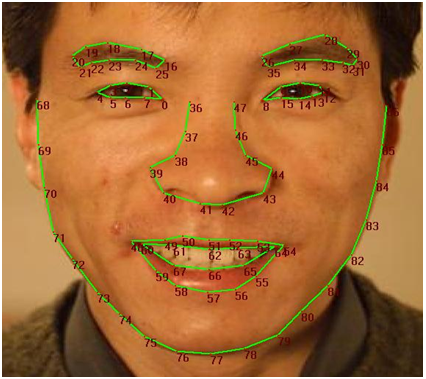

Face tracking means following a person's face in the frame and building an 87-node face model. MSDN says that several faces can be tracked but does not state an upper limit; it is probably two (the number of people for whom the sensor can build a full skeleton). This functionality can be useful in games, so that your character (avatar) can convey the whole palette of emotions shown on your face; in applications that adapt to your mood (whiny or playful); and, finally, in applications that recognize faces or even emotions (Dr. Lightman?).

So, the face model diagram. Here it is (the diagram of my dreams):

In addition to these 87 nodes, you can get coordinates for another 13: the centers of the eyes, the nose, the corners of the lips, and the borders of the head. The SDK can even build a 3D face mask, as shown in the following image:

Now, armed with a general understanding of the face tracking implemented by the Face Tracking SDK, it's time to get to know it better. The Face Tracking SDK is a COM library (FaceTrackLib.dll) included with the Developer Toolkit. There is also a managed wrapper, the Microsoft.Kinect.Toolkit.FaceTracking project, which can safely be used in managed projects. Unfortunately, I could not find any description of the wrapper apart from the link provided (I think that while active development is underway and the Face Tracking SDK is not part of the Kinect SDK, all we can do is wait for MSDN help to appear).

I will focus on just a few classes. The central one, oddly enough, is FaceTracker. Its job is to initialize the tracking engine and follow the movement of faces in the frame. The overloaded Track method searches for a face using data from the video camera and the depth sensor. One of its overloads also takes a human figure (a Skeleton), which improves both the speed and the quality of the search. The FaceModel class helps build 3D models and also transforms models into the camera's coordinate system. In the Microsoft.Kinect.Toolkit.FaceTracking project, besides the wrapper classes, you can find simpler but equally useful types. For example, the FeaturePoint enumeration describes all nodes of the face model (see the figure with 87 points above).

In general, using face tracking may look like this:

- select a sensor and enable the video stream (color stream), the depth stream and the figure tracking stream (skeleton stream);

- add a handler for the sensor's AllFramesReady event, which is raised when frames from all enabled streams are ready for use;

- in the event handler, initialize FaceTracker (if this has not been done yet), iterate over the figures found in the frame and collect the face models built for them;

- process the face models (for example, display the 3D mask or determine the emotions of the people in the frame).

I should note that I deliberately do not provide code examples here: I don't want to give a two-line snippet, and I don't want to overload the article with huge listings either.
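Still, for orientation, here is a condensed sketch of the four steps listed above. It assumes Kinect SDK 1.x with the Developer Toolkit's Microsoft.Kinect.Toolkit.FaceTracking wrapper referenced (and its native FaceTrackLib.dll available next to the executable); the stream formats and the `FaceTrackingSketch` class are illustrative choices, and step 4 only prints the head rotation instead of drawing a mask.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Kinect;
using Microsoft.Kinect.Toolkit.FaceTracking;

// Sketch of the four face-tracking steps (Kinect SDK 1.x + Developer Toolkit).
public class FaceTrackingSketch
{
    private readonly KinectSensor sensor;
    // One FaceTracker per tracked figure, keyed by Skeleton.TrackingId.
    private readonly Dictionary<int, FaceTracker> trackers = new Dictionary<int, FaceTracker>();
    private byte[] colorPixels;
    private short[] depthPixels;
    private Skeleton[] skeletons;

    public FaceTrackingSketch(KinectSensor sensor)
    {
        this.sensor = sensor;
        // Step 1: color, depth and skeleton streams.
        sensor.ColorStream.Enable(ColorImageFormat.RgbResolution640x480Fps30);
        sensor.DepthStream.Enable(DepthImageFormat.Resolution320x240Fps30);
        sensor.SkeletonStream.Enable();
        // Step 2: one event raised when frames of all enabled streams are ready.
        sensor.AllFramesReady += OnAllFramesReady;
        sensor.Start();
    }

    private void OnAllFramesReady(object sender, AllFramesReadyEventArgs e)
    {
        using (ColorImageFrame colorFrame = e.OpenColorImageFrame())
        using (DepthImageFrame depthFrame = e.OpenDepthImageFrame())
        using (SkeletonFrame skeletonFrame = e.OpenSkeletonFrame())
        {
            // Any of the frames may be missing; skip this round if so.
            if (colorFrame == null || depthFrame == null || skeletonFrame == null)
                return;

            if (colorPixels == null) colorPixels = new byte[colorFrame.PixelDataLength];
            if (depthPixels == null) depthPixels = new short[depthFrame.PixelDataLength];
            if (skeletons == null) skeletons = new Skeleton[skeletonFrame.SkeletonArrayLength];
            colorFrame.CopyPixelDataTo(colorPixels);
            depthFrame.CopyPixelDataTo(depthPixels);
            skeletonFrame.CopySkeletonDataTo(skeletons);

            foreach (Skeleton skeleton in skeletons)
            {
                if (skeleton.TrackingState != SkeletonTrackingState.Tracked)
                    continue;

                // Step 3: lazily create a tracker for this figure. The constructor
                // may throw InvalidOperationException if initialization fails.
                FaceTracker tracker;
                if (!trackers.TryGetValue(skeleton.TrackingId, out tracker))
                {
                    tracker = new FaceTracker(sensor);
                    trackers[skeleton.TrackingId] = tracker;
                }

                // Passing the Skeleton helps the tracker locate the head.
                FaceTrackFrame face = tracker.Track(colorFrame.Format, colorPixels,
                    depthFrame.Format, depthPixels, skeleton);
                if (!face.TrackSuccessful)
                    continue;

                // Step 4: process the result; here just the head rotation in degrees.
                Console.WriteLine("Figure {0}: pitch {1:F0}, yaw {2:F0}, roll {3:F0}",
                    skeleton.TrackingId, face.Rotation.X, face.Rotation.Y, face.Rotation.Z);
            }
        }
    }
}
```

A separate `FaceTracker` is kept for each figure because a tracker carries its tracking state from frame to frame; a real application would also dispose the trackers of figures that leave the frame.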

Keep in mind that how well a face is found in the frame depends both on the distance to the head and on its orientation (tilt). Acceptable head angles for the sensor are ±20° up-down, ±45° left-right and ±45° sideways. The optimal ranges are ±10°, ±30° and ±45° for up-down, left-right and sideways tilt, respectively (see 3D Head Pose).
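These limits can be turned into a simple check. The sketch below uses exactly the numbers quoted in this section; `HeadPose.Classify` and `HeadPoseQuality` are hypothetical names. Feeding it from `FaceTrackFrame.Rotation` (X as pitch, Y as yaw, Z as roll, in degrees) is an assumption about how the toolkit reports the pose.

```csharp
using System;

public enum HeadPoseQuality { Optimal, Acceptable, OutOfRange }

public static class HeadPose
{
    // Limits from the text, in degrees: up-down (pitch), left-right (yaw), sideways (roll).
    private const float OptimalPitch = 10f, OptimalYaw = 30f, OptimalRoll = 45f;
    private const float AcceptablePitch = 20f, AcceptableYaw = 45f, AcceptableRoll = 45f;

    public static HeadPoseQuality Classify(float pitch, float yaw, float roll)
    {
        if (Within(pitch, OptimalPitch) && Within(yaw, OptimalYaw) && Within(roll, OptimalRoll))
            return HeadPoseQuality.Optimal;
        if (Within(pitch, AcceptablePitch) && Within(yaw, AcceptableYaw) && Within(roll, AcceptableRoll))
            return HeadPoseQuality.Acceptable;
        return HeadPoseQuality.OutOfRange;
    }

    // Angles are symmetric: -40 and +40 degrees are treated the same way.
    private static bool Within(float angle, float limit)
    {
        return Math.Abs(angle) <= limit;
    }
}
```

For example, a head turned 40° to the side (yaw -40) with no other tilt is rated Acceptable but not Optimal, while a 25° nod is OutOfRange.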

Kinect Studio

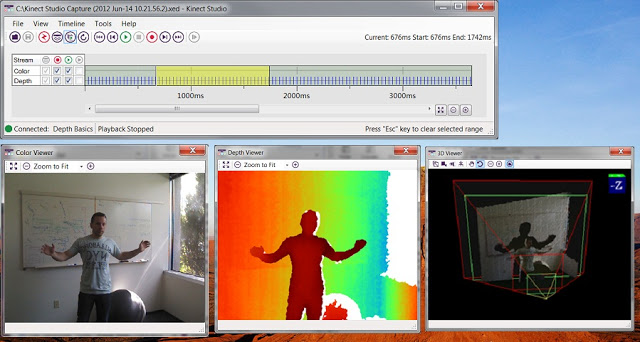

When you first try to write something for Kinect, you can't shake the feeling that something is missing. And when for the hundredth time you set breakpoints so that they fire on one particular gesture, and for the hundredth time perform that gesture in front of the camera, you realize what is really missing: a simple emulator, so that you can record the gestures you need once and then sit back and debug. Surprisingly, the good vibes sent by developers around the world who have been through Kinect development reached their target: the Developer Toolkit includes a tool called Kinect Studio.

Kinect Studio can be viewed as a debugger or an emulator. Its role is extremely simple: record the data received from the sensor and feed it to your application. You can replay the recorded data again and again and, if you wish, save it to a file and return to debugging later.

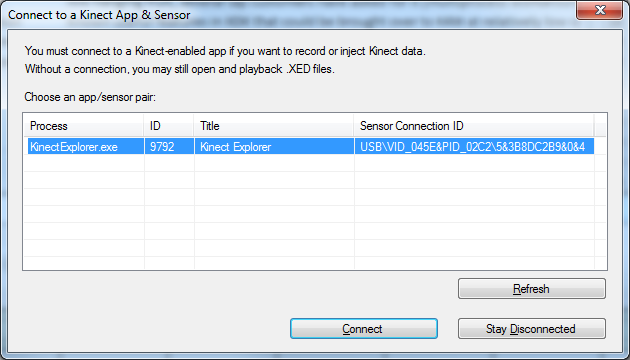

To get started with Kinect Studio, connect it to your application and select a sensor. Everything is then ready for recording sensor data or injecting previously saved data.

Afterword

This review article, which was never even planned, changed more than once while I was writing it and ended up split into three parts. In them I tried to collect material I found across the vast World Wide Web, but the main source of knowledge was and remains MSDN. Right now Kinect is more like a kitten just learning to crawl than a product that can be taken as more than just fun. I myself treat it with some skepticism. But who knows what tomorrow will bring. Kinect already shows good results in the gaming industry, and Kinect for Windows opens up room for creativity for developers around the world.

Source: https://habr.com/ru/post/151296/
