Flashcache: first experience

The disk subsystem is often a bottleneck in server performance, forcing companies to invest heavily in fast disks and specialized solutions. SSDs are becoming increasingly popular, but they are still too expensive compared to traditional hard drives. Nevertheless, there are technologies designed to combine the speed of an SSD with the capacity of an HDD. These are caching technologies in which the SSD serves as a disk cache measured in gigabytes, rather than the megabytes of cache found on an HDD or a controller.

One such technology is flashcache, developed by Facebook for use with its databases, and which is now distributed open source. I have long been eyeing her. Finally, I had the opportunity to test it when I decided to put an SSD-drive in my home computer as a system disk.

And before putting the SSD in the home computer, I connected it to the server, which just turned out to be free for testing. Next, I will describe the process of installing flashcache on CentOS 6.3 and give the results of some tests.

There is a server with 4 Western Digital Caviar Black WD1002FAEX SATA drives combined in a software RAID10, 2 Xeon E5-2620 processors and 8 GB of RAM. The choice of solid state drive fell on the OCZ Vertex-3 with a capacity of 90 GB. SSD is connected to a 6-gigabit SATA port, hard drives - to 3-gigabit ports. The SSD is defined as

')

For the experiments, I left unallocated a large area of disk space on a RAID device

On this volume I tested flashcache.

Recently, the installation of flashcache has become elementary: there are now binary packages in the elrepo repository. Before that, it was necessary to compile utilities and kernel module from source.

Let's connect the elrepo repository:

Flashcache consists of a kernel module and control utilities. The entire installation is reduced to one codeman:

Flashcache can work in one of three modes:

There are three utilities for managing the bundle:

Create a cache.

This command creates a caching device with the name

As a result, the

Now you can mount the section and start testing. It is necessary to pay attention to the moment that it is necessary to mount not the partition itself, but the caching device:

That's all: now disk operations in the

I will describe one nuance that can be useful when working not with a separate LVM volume, but with a group of volumes. The cache can be created on a block device containing the LVM group, in my case it is

But in order for it to work, you need to change the LVM configuration settings that are responsible for finding volumes. To do this, install the filter in the

or restrict LVM search only to the

After saving changes, you need to report this LVM subsystem:

I conducted three types of tests:

The utility is in the rpmforge repository.

The test was run as follows:

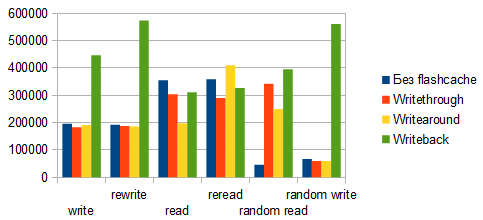

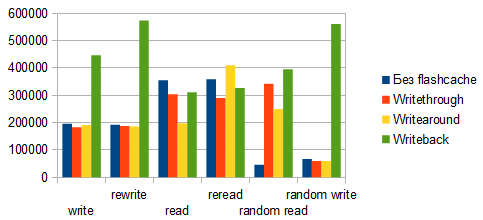

The diagram shows a slight loss of performance when the cache is turned on during sequential write and read operations, but in random read operations it gives a multiple increase in performance. The

The sequential read test was run by the following commands.

In one thread:

In 4 threads:

Before each launch, the following command was executed to clear the RAM cache:

Cached multi-threaded reading shows a speed close to the maximum speed of the SSD-drive, however, when you first start, the speed is lower than without a cache. This is most likely due to the fact that, along with the return of the data stream, the system has to write information to the solid-state drive. However, when you restart, all information is already recorded on the SSD and is given at the maximum speed.

Finally, a small test of the time of reading a large number of files of various sizes. I took dozens of real sites from backups, unpacked them into the

The last command searches all the files in the current directory and reads them, noting the total execution time.

In the diagram, we see the expected performance loss "in the first reading", which was about 25%, but when restarted, the command was executed more than 3 times, faster than the operation without a cache.

Before removing the caching device, you must unmount the partition:

Or, if you worked with a volume group, then disable LVM:

Then delete the cache:

I have not touched the flashcache tweaks that can be adjusted using sysctl. Among them, the choice of the algorithm for writing to the FIFO or LRU cache, the caching threshold for sequential access, etc. I think that with these settings you can squeeze out a little more performance gain if you adapt them to the necessary working conditions.

The technology has shown itself in the best way mainly in random reading operations, demonstrating a multiple increase in performance. But in real conditions on servers, random input / output operations are most often used. Therefore, by minimal investment, it became possible to combine the speed of solid-state drives with the volume of traditional hard drives, and the presence of packages in the repositories makes installation simple and fast.

In preparing this article, I used the official documentation from the developers, which can be found here .

That's all. I hope this information will be useful to someone. I would welcome comments, comments and recommendations. It would be particularly interesting to learn the experience of using flashcache on combat servers, and, in particular, the nuances of using writeback mode.

One such technology is flashcache, developed by Facebook for use with its databases and now distributed as open source. I had been eyeing it for a long time, and finally got the chance to test it when I decided to put an SSD in my home computer as a system disk.

Before putting the SSD into the home computer, I connected it to a server that happened to be free for testing. Below I describe the process of installing flashcache on CentOS 6.3 and give the results of some tests.

The server has four Western Digital Caviar Black WD1002FAEX SATA drives combined in a software RAID10, two Xeon E5-2620 processors, and 8 GB of RAM. For the solid-state drive I chose a 90 GB OCZ Vertex-3. The SSD is connected to a 6 Gbit/s SATA port, the hard drives to 3 Gbit/s ports. The SSD is detected as /dev/sda.

For the experiments, I left a large area of disk space unallocated on the RAID device /dev/md3, created an LVM volume group named vg1 in it, and created a logical volume named lv1 in that group:

```
# lvcreate -L 100G -n lv1 vg1
# mkfs -t ext4 /dev/vg1/lv1
```

On this volume I tested flashcache.

Flashcache installation

Installing flashcache has recently become trivial: binary packages are now available in the elrepo repository. Previously, the utilities and the kernel module had to be compiled from source.

Let's connect the elrepo repository:

```
# rpm -Uvh http://elrepo.reloumirrors.net/elrepo/el6/x86_64/RPMS/elrepo-release-6-4.el6.elrepo.noarch.rpm
```

Flashcache consists of a kernel module and control utilities. The entire installation comes down to one command:

```
# yum -y install kmod-flashcache flashcache-utils
```

Flashcache can work in one of three modes:

- writethrough: reads and writes are stored in the cache, and writes go to the disk immediately. Data integrity is guaranteed in this mode.
- writearound: the same as the previous mode, except that only reads are stored in the cache.
- writeback: the fastest mode. Reads and writes are stored in the cache, and data is flushed to the disk in the background after some time. This mode is less safe for data integrity, since data may never reach the disk if the server fails suddenly or loses power.

There are three utilities for managing all of this: flashcache_create, flashcache_load and flashcache_destroy. The first creates a caching device; the other two are needed only in writeback mode, to load an existing caching device and to delete it, respectively.

flashcache_create has the following basic parameters:

- -p: caching mode. Required. It takes the values thru, around and back to enable the writethrough, writearound and writeback modes, respectively.
- -s: cache size. If this parameter is not specified, the entire SSD is used for the cache.
- -b: block size. The default is 4 KB, which is optimal for most uses.
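The mapping between mode names and the -p values is easy to get wrong when typing by hand. Below is a minimal sketch of a wrapper that only assembles the command line and prints it without running anything. The function name build_flashcache_cmd and its argument order are my own invention for illustration, not part of flashcache; the optional fifth argument is handed to -s unchanged, so its format is whatever flashcache_create itself accepts.

```shell
#!/bin/sh
# build_flashcache_cmd: hypothetical helper, not shipped with flashcache.
# Prints a flashcache_create command line; it does not execute it.
#   $1 mode (writethrough | writearound | writeback)
#   $2 cache device name, $3 SSD device, $4 backing disk device
#   $5 optional cache size, passed to -s as-is
build_flashcache_cmd() {
    mode=$1 name=$2 ssd=$3 disk=$4 size=${5:-}
    case "$mode" in
        writethrough) p=thru ;;
        writearound)  p=around ;;
        writeback)    p=back ;;
        *) echo "unknown mode: $mode" >&2; return 1 ;;
    esac
    cmd="flashcache_create -p $p"
    # -s is optional; without it the whole SSD is used for the cache.
    if [ -n "$size" ]; then
        cmd="$cmd -s $size"
    fi
    echo "$cmd $name $ssd $disk"
}
```

For example, `build_flashcache_cmd writethrough cachedev /dev/sda /dev/vg1/lv1` prints `flashcache_create -p thru cachedev /dev/sda /dev/vg1/lv1`, which can be reviewed before being run as root.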

Create a cache.

```
# flashcache_create -p thru cachedev /dev/sda /dev/vg1/lv1
cachedev cachedev, ssd_devname /dev/sda, disk_devname /dev/vg1/lv1 cache mode WRITE_THROUGH
block_size 8, cache_size 0
Flashcache metadata will use 335MB of your 7842MB main memory
```

This command creates a caching device named cachedev, operating in writethrough mode on the SSD /dev/sda for the block device /dev/vg1/lv1.

As a result, the /dev/mapper/cachedev device should appear, and the dmsetup status command should display statistics for various cache operations:

```
# dmsetup status
vg1-lv1: 0 209715200 linear
cachedev: 0 3463845888 flashcache stats:
        reads(142), writes(0)
        read hits(50), read hit percent(35)
        write hits(0) write hit percent(0)
        replacement(0), write replacement(0)
        write invalidates(0), read invalidates(0)
        pending enqueues(0), pending inval(0)
        no room(0)
        disk reads(92), disk writes(0) ssd reads(50) ssd writes(92)
        uncached reads(0), uncached writes(0), uncached IO requeue(0)
        uncached sequential reads(0), uncached sequential writes(0)
        pid_adds(0), pid_dels(0), pid_drops(0) pid_expiry(0)
```

Now you can mount the partition and start testing. Note that you must mount the caching device, not the partition itself:

```
# mount /dev/mapper/cachedev /lv1/
```

That's all: disk operations in the /lv1/ directory will now be cached on the SSD.

One nuance can be useful when working with a whole volume group rather than a single LVM volume. The cache can be created on the block device that contains the LVM group, in my case /dev/md3:

```
# flashcache_create -p thru cachedev /dev/sda /dev/md3
```

For this to work, you need to change the LVM configuration settings responsible for finding volumes. To do so, set a filter in the /etc/lvm/lvm.conf file:

```
filter = [ "r/md3/" ]
```

or restrict the LVM search to the /dev/mapper directory only:

```
scan = [ "/dev/mapper" ]
```

After saving the changes, tell the LVM subsystem about them:

```
# vgchange -ay
```

Testing
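While testing, the counters printed by dmsetup status are a quick way to confirm that reads are really being served from the SSD. The sketch below recomputes the read hit percentage from the reads(...) and read hits(...) counters. The helper read_hit_percent is hypothetical (it is not part of flashcache), and it assumes the output has one counter group per line, labelled exactly as in the sample output earlier in this article, and describes a single flashcache device; other flashcache versions may format this differently.

```shell
#!/bin/sh
# read_hit_percent: hypothetical helper, not part of flashcache.
# Reads `dmsetup status` text on stdin and prints the integer read hit percentage.
read_hit_percent() {
    awk '
        # Return N from the first "label(N)" found on the line.
        function counter(line, label,    rest) {
            rest = substr(line, index(line, label "(") + length(label) + 1)
            return substr(rest, 1, index(rest, ")") - 1) + 0
        }
        # The "read hits(" line is handled first and skipped.
        index($0, "read hits(") { hits = counter($0, "read hits"); next }
        # Only the first "reads(" line counts; later lines such as
        # "disk reads(" or "ssd reads(" also contain that substring.
        index($0, "reads(") && !seen_reads { reads = counter($0, "reads"); seen_reads = 1 }
        END {
            if (reads > 0) printf "%d\n", hits * 100 / reads
            else           print "0"
        }
    '
}
```

A possible use is `dmsetup status cachedev | read_hit_percent`. For the sample output above (142 reads, 50 read hits) it prints 35, matching the read hit percent reported by flashcache itself.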

I conducted three types of tests:

- iozone utility testing in various caching modes;
- sequential read with dd in 1 and 4 threads;
- reading a set of files of various sizes taken from backups of real sites.

Iozone testing

The utility is in the rpmforge repository.

```
# rpm -Uvh http://apt.sw.be/redhat/el6/en/x86_64/rpmforge/RPMS/rpmforge-release-0.5.2-2.el6.rf.x86_64.rpm
# yum -y install iozone
```

The test was run as follows:

```
# cd /lv1/
# iozone -a -i0 -i1 -i2 -s8G -r64k
```

The diagram shows a slight loss of performance when the cache is turned on during sequential write and read operations, but in random read operations it gives a multiple increase in performance. The

writeback mode goes into the lead as well on write operations.Testing dd

The sequential read test was run with the following commands.

In one thread:

```
# dd if=/dev/vg1/lv1 of=/dev/null bs=1M count=1024 iflag=direct
```

In 4 threads:

```
# dd if=/dev/vg1/lv1 of=/dev/null bs=1M count=1024 iflag=direct skip=1024 &
  dd if=/dev/vg1/lv1 of=/dev/null bs=1M count=1024 iflag=direct skip=2048 &
  dd if=/dev/vg1/lv1 of=/dev/null bs=1M count=1024 iflag=direct skip=3072 &
  dd if=/dev/vg1/lv1 of=/dev/null bs=1M count=1024 iflag=direct skip=4096
```

Before each run, the following command was executed to clear the RAM cache:

```
# echo 3 > /proc/sys/vm/drop_caches
```
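The multi-threaded variant follows a simple pattern: each reader gets its own non-overlapping range, selected with skip. A minimal sketch that generates those command lines for any number of readers is shown below. The helper parallel_dd_cmds is hypothetical; it only prints the commands, and the device path is whatever you pass in.

```shell
#!/bin/sh
# parallel_dd_cmds: hypothetical helper; prints one dd command per reader
# and does not run anything itself.
#   $1 device to read, $2 number of readers, $3 MiB read by each reader
parallel_dd_cmds() {
    dev=$1 readers=$2 chunk=$3
    i=1
    while [ "$i" -le "$readers" ]; do
        # Reader i starts i*chunk MiB into the device, so ranges never overlap.
        echo "dd if=$dev of=/dev/null bs=1M count=$chunk iflag=direct skip=$((i * chunk))"
        i=$((i + 1))
    done
}

# One way to actually run the readers in parallel and wait for all of them:
#   parallel_dd_cmds /dev/vg1/lv1 4 1024 | { while read -r cmd; do sh -c "$cmd" & done; wait; }
```

Called as `parallel_dd_cmds /dev/vg1/lv1 4 1024`, it prints the same four commands used in the 4-thread test, with skip values 1024, 2048, 3072 and 4096.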

Cached multi-threaded reading reaches a speed close to the maximum of the SSD. On the first run, however, the speed is lower than without a cache, most likely because the system has to write the data to the SSD while also returning it to the reader. On repeated runs all the data is already on the SSD and is served at maximum speed.

Test reading various files

Finally, a small test measuring the time needed to read a large number of files of various sizes. I took several dozen real sites from backups and unpacked them into the /lv1/sites/ directory. The files totaled about 20 GB and numbered about 760 thousand.

```
# cd /lv1/sites/
# echo 3 > /proc/sys/vm/drop_caches
# time find . -type f -print0 | xargs -0 cat >/dev/null
```

The last command finds all files in the current directory and reads them, reporting the total execution time.
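When repeating a test like this, it helps to confirm that the data set is the size you expect before comparing timings. The sketch below uses the same find and xargs pipeline but reports how many files were found and how many bytes were read. The helper read_tree is hypothetical, and the file count assumes no file names contain newlines.

```shell
#!/bin/sh
# read_tree: hypothetical helper. Reads every regular file under $1
# and prints "<file count> <total bytes>".
read_tree() {
    dir=$1
    # One line per file; names with embedded newlines would inflate the count.
    files=$(find "$dir" -type f | wc -l | tr -d ' ')
    # Same null-delimited pipeline as in the timing test, but counting bytes.
    bytes=$(find "$dir" -type f -print0 | xargs -0 cat | wc -c | tr -d ' ')
    echo "$files $bytes"
}
```

Note that calling `read_tree /lv1/sites/` reads all the data, so it warms both the RAM cache and the SSD cache; drop the RAM cache again before any timed run.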

The diagram shows the expected performance loss on the first read, about 25%, but on a repeated run the command completed more than 3 times faster than without a cache.

Cache off

Before removing the caching device, you must unmount the partition:

```
# umount /lv1/
```

Or, if you worked with a volume group, deactivate LVM:

```
# vgchange -an
```

Then delete the cache:

```
# dmsetup remove cachedev
```

Conclusion

I have not touched the flashcache tunables that can be adjusted through sysctl. Among them are the choice of cache replacement algorithm (FIFO or LRU), the caching threshold for sequential access, and so on. I think these settings can squeeze out a little more performance if adapted to the actual workload.
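A reasonable first step before changing any of these settings is simply to list them with their current values. The sketch below filters sysctl output down to the flashcache keys. It assumes the keys live under the dev.flashcache. prefix, which is how I recall the flashcache administration guide describing them (with names such as reclaim_policy and skip_seq_thresh_kb); check this against the documentation for your version. The helper name flashcache_tunables is hypothetical.

```shell
#!/bin/sh
# flashcache_tunables: hypothetical helper. Filters `sysctl -a` style text
# on stdin down to flashcache keys, sorted by name.
# Assumption: flashcache keys start with "dev.flashcache.".
flashcache_tunables() {
    grep '^dev\.flashcache\.' | sort
}

# Typical use on a machine with the module loaded:
#   sysctl -a 2>/dev/null | flashcache_tunables
```

If the output is empty, either the module is not loaded or the prefix assumption does not hold for the installed version.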

The technology performed best in random read operations, where it delivered a severalfold increase in performance. And in real server workloads, random I/O is what occurs most often. So, with minimal investment, it is possible to combine the speed of solid-state drives with the capacity of traditional hard drives, and the availability of packages in the repositories makes installation quick and simple.

In preparing this article, I used the official documentation from the developers, which can be found here.

That's all. I hope this information will be useful to someone. I would welcome remarks, comments and recommendations. It would be particularly interesting to hear about experience with flashcache on production servers and, in particular, the nuances of using writeback mode.

Source: https://habr.com/ru/post/151268/
