Kinect for Windows SDK. Part 2. Data Streams

- Kinect for Windows SDK. Part 1. Sensor

- [Kinect for Windows SDK. Part 2. Data Streams]

- Kinect for Windows SDK. Part 3. Functionalities

- Playing cubes with Kinect

- Program, fetch!

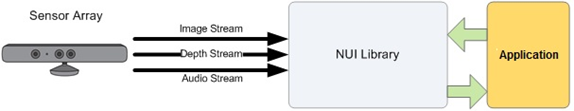

Let's continue getting acquainted with what Kinect can do. Last time I outlined several of its features, each of which certainly deserves an article of its own, but I said nothing at all about the workhorses that make both speech recognition and human figure tracking possible. Have you ever wondered in what form the sensor delivers its data? What exactly is a data stream, and how many of them are there? And if one moonless night, in a dark alley, a maniac sneaks up on you and asks: “How many data streams does Kinect output?”, answer without hesitation: “Three!” The video stream (Color Stream), the audio stream (Audio Stream) and the rangefinder data (Depth Stream). Everything in the SDK is built on these streams, so let's start with them.

Once you set out to use Kinect's features, the first thing to do is select the sensor and initialize the data streams you need. Remember that up to four sensors can be connected to one computer at the same time? The KinectSensor class makes it easy enough to go through them all. The documentation suggests the following way to select and start the first connected sensor:

```csharp
using System.Linq;
using Microsoft.Kinect;

KinectSensor kinect = KinectSensor.KinectSensors
    .FirstOrDefault(s => s.Status == KinectStatus.Connected);
if (kinect != null) kinect.Start(); // null when no sensor is connected
```

For the selected sensor you can then configure and enable the streams you need. Let's take a closer look at the three data streams the sensor delivers.


Sensor video stream

A careful reading of MSDN turns up some useful information about this stream. For example, when initializing the video stream, the developer can choose the image quality level and format. The quality level directly determines the amount and rate of data transmitted from the sensor, which in turn is limited by USB 2.0 bandwidth. So a picture with a resolution of 1280x960 arrives at 12 frames per second, and a picture with a resolution of 640x480 at 30. The image format is determined by the color model and can be either RGB or YUV.
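To put this trade-off in numbers, here is a small self-contained C# calculation (no Kinect SDK needed) of the frame interval and the pixels per second for the two RGB modes quoted above. It only does arithmetic on the figures from the text; it is not a model of what actually travels over USB, since the sensor's wire format is not covered here.

```csharp
using System;

// The two resolution / frame-rate pairs quoted above for the color stream.
var modes = new (string Name, int Width, int Height, int Fps)[]
{
    ("1280x960 @ 12 fps", 1280, 960, 12),
    ("640x480 @ 30 fps", 640, 480, 30),
};

foreach (var m in modes)
{
    Console.WriteLine($"{m.Name}: one frame every {FrameIntervalMs(m.Fps):F1} ms, " +
                      $"{PixelsPerSecond(m.Width, m.Height, m.Fps):N0} pixels/s");
}

// Time between two consecutive frames, in milliseconds.
static double FrameIntervalMs(int fps) => 1000.0 / fps;

// Number of pixels the stream delivers every second.
static long PixelsPerSecond(int width, int height, int fps) => (long)width * height * fps;
```

At 1280x960 a new frame arrives only about every 83 ms instead of every 33 ms, yet the stream still carries 1.6 times as many pixels per second, which is consistent with the bandwidth limit forcing the frame rate down.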

The combinations of quality level and image format are represented by the ColorImageFormat enumeration. Three of its values use a 32-bit encoding for each pixel: RgbResolution1280x960Fps12, RgbResolution640x480Fps30 and YuvResolution640x480Fps15; the fourth, RawYuvResolution640x480Fps15, uses 16 bits. YuvResolution640x480Fps15 causes a lot of confusion. MSDN states plainly, in two places, that this is YUV converted to RGB32... and yet it is still called YUV...
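In the 32-bit RGB formats each pixel occupies four bytes. The SDK samples I know of display these frames as Bgr32, i.e. blue, green, red and one unused byte per pixel; treat that byte order as an assumption and check it against your SDK version. Under that assumption, reading a single pixel from the byte array that ColorImageFrame.CopyPixelDataTo fills could look like the sketch below. `GetPixel` is a hypothetical helper, and a synthetic buffer stands in for a real frame.

```csharp
using System;

const int BytesPerPixel = 4; // B, G, R and one unused byte (assumed order)

// Returns the red, green and blue components of pixel (x, y) in a 32-bit frame.
(byte R, byte G, byte B) GetPixel(byte[] pixels, int width, int x, int y)
{
    int offset = (y * width + x) * BytesPerPixel; // rows are stored one after another
    return (pixels[offset + 2], pixels[offset + 1], pixels[offset]);
}

// A fake 640x480 frame, all black except one pure-red pixel at (10, 20).
int frameWidth = 640, frameHeight = 480;
byte[] frame = new byte[frameWidth * frameHeight * BytesPerPixel];
frame[(20 * frameWidth + 10) * BytesPerPixel + 2] = 255; // red byte of pixel (10, 20)

var (r, g, b) = GetPixel(frame, frameWidth, 10, 20);
Console.WriteLine($"R={r} G={g} B={b}"); // R=255 G=0 B=0
```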

To start receiving the video stream from the sensor, you first have to enable it:

```csharp
// Enable the RGB stream at 640x480, 30 fps
kinect.ColorStream.Enable(ColorImageFormat.RgbResolution640x480Fps30);
// Subscribe to the event raised when a new color frame is ready
kinect.ColorFrameReady += SensorColorFrameReady;
```

A slightly more detailed example can be found in MSDN.

Sensor audio stream

You already know that Kinect has a built-in array of four microphones with a 24-bit analog-to-digital converter, and that its built-in audio signal processing includes echo cancellation and noise suppression. Each microphone is slightly directional. Whether echo cancellation and noise suppression are used is up to the developer: these options are set when the audio stream is initialized. The optimal distance between the speaker and the sensor is 1 to 3 meters.

Kinect's audio capabilities can be used in many ways, for example for high-quality audio capture, locating the source of a sound, or speech recognition. We will come back to speech recognition later, but for now I would like to dwell on one Kinect peculiarity that I did not find described in the documentation (perhaps I didn't look hard enough). Initializing the audio stream takes a little under four seconds. This has to be taken into account: for example, after calling the sensor's Start() method, wait four seconds before adjusting the audio stream parameters through KinectSensor.AudioSource. An example of determining the direction of a sound can be found in MSDN.
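Whatever the application, the audio stream ultimately arrives as a sequence of bytes. The sketch below turns such bytes into samples and measures the peak level. It assumes the Stream returned by KinectAudioSource.Start() carries 16-bit little-endian mono PCM at 16 kHz, which is what I believe SDK 1.x delivers; please verify this for your version. `ToSamples` and `PeakLevel` are hypothetical helpers, not SDK functions, and the buffer is synthetic.

```csharp
using System;

const int SampleRate = 16000;   // assumed: 16 kHz mono
const int BytesPerSample = 2;   // assumed: 16-bit little-endian PCM

// Converts the first `count` raw bytes into signed 16-bit samples.
short[] ToSamples(byte[] buffer, int count)
{
    var samples = new short[count / BytesPerSample];
    for (int i = 0; i < samples.Length; i++)
        samples[i] = (short)(buffer[2 * i] | (buffer[2 * i + 1] << 8)); // low byte first
    return samples;
}

// Peak level as a fraction of full scale (0.0 = silence, 1.0 = maximum).
double PeakLevel(short[] samples)
{
    int peak = 0;
    foreach (short s in samples)
        peak = Math.Max(peak, Math.Abs((int)s));
    return peak / 32768.0;
}

// 100 ms of audio in the assumed format is 16000 * 2 / 10 = 3200 bytes.
byte[] chunk = new byte[SampleRate * BytesPerSample / 10];
chunk[0] = 0x00; chunk[1] = 0x40; // first sample = 0x4000 = 16384, half of full scale

short[] pcm = ToSamples(chunk, chunk.Length);
Console.WriteLine($"{pcm.Length} samples, peak = {PeakLevel(pcm):F2}");
```

`ToSamples` takes an explicit byte count because Stream.Read may fill only part of the buffer; passing its return value keeps stale bytes out of the result.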

Sensor depth (rangefinder) stream

This is probably the most interesting stream, above all for those who want to know more about tracking the human figure (skeletal tracking).

This stream consists of frames in which each pixel holds the distance, in millimeters, from the sensor plane to the nearest object at the corresponding point of the camera's field of view. As with the video stream, the frame resolution of the depth stream can be chosen; the options are defined by the DepthImageFormat enumeration. At 30 frames per second, the developer can choose 80x60 (Resolution80x60Fps30), 320x240 (Resolution320x240Fps30) or 640x480 (Resolution640x480Fps30). And, as mentioned in the previous part, there are two ranges of “working” distances, Default Range and Near Range, defined by the DepthRange enumeration.
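The two ranges differ in where reliable readings begin and end. As a sketch, the check below uses the limits that, as far as I know, the SDK 1.x documentation gives for them: about 800 to 4000 mm for Default and 400 to 3000 mm for Near. In real code it is safer to read them from DepthStream.MinDepth and DepthStream.MaxDepth than to hard-code them. The string-keyed table and `InRange` are hypothetical stand-ins for the DepthRange enumeration, so the example compiles without the SDK.

```csharp
using System;
using System.Collections.Generic;

// Assumed limits in millimeters for SDK 1.x; a stand-in for the DepthRange enumeration.
var ranges = new Dictionary<string, (int Min, int Max)>
{
    ["Default"] = (800, 4000),
    ["Near"] = (400, 3000),
};

// True if the distance lies within the reliable range of the given mode.
bool InRange(string mode, int distanceMm)
{
    var (min, max) = ranges[mode];
    return distanceMm >= min && distanceMm <= max;
}

foreach (int d in new[] { 500, 1500, 3500 })
    Console.WriteLine($"{d} mm: Default={InRange("Default", d)}, Near={InRange("Near", d)}");
```

With these limits, an object 50 cm away is only measurable in Near mode and one 3.5 m away only in Default mode, while 1.5 m works in both.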

```csharp
// Optional: switch to Near Range (the default is DepthRange.Default)
kinect.DepthStream.Range = DepthRange.Near;
// Enable the depth stream at 640x480, 30 fps
kinect.DepthStream.Enable(DepthImageFormat.Resolution640x480Fps30);
```

But that's not all. Each depth pixel is a 16-bit value in which the distance occupies only 13 bits, while the remaining 3 bits identify a person. If the object is outside the operating range (remember Default and Near?), the 13 distance bits contain zero or a special constant. If human figure tracking is enabled when the sensor is initialized, the 3 bits hold the index (1 to 6) of the person detected at that point, or 0 if no person is found there:

```csharp
kinect.SkeletonStream.Enable();
```

So we have come close to the first Kinect capability we are going to look at: tracking the human figure (skeletal tracking).
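To make the 13 + 3 bit layout concrete: the upper 13 bits of a raw depth pixel hold the distance and the lower 3 bits the player index, so splitting them is a shift and a mask. The SDK exposes the matching constants as DepthImageFrame.PlayerIndexBitmaskWidth (3) and DepthImageFrame.PlayerIndexBitmask (7); they are redeclared locally below so the sketch runs without the SDK. `DistanceMm` and `PlayerIndex` are hypothetical helper names, and the pixel values are made up.

```csharp
using System;

const int PlayerIndexBitmaskWidth = 3; // mirrors DepthImageFrame.PlayerIndexBitmaskWidth
const int PlayerIndexBitmask = 7;      // mirrors DepthImageFrame.PlayerIndexBitmask (binary 111)

// Upper 13 bits: distance in millimeters (cast to ushort so large values stay positive).
int DistanceMm(short raw) => (ushort)raw >> PlayerIndexBitmaskWidth;

// Lower 3 bits: player index, 0 when no tracked person covers this pixel.
int PlayerIndex(short raw) => raw & PlayerIndexBitmask;

// Two made-up pixels: a wall 2 m away and player 1 standing 1.5 m away.
short wall = (short)(2000 << PlayerIndexBitmaskWidth);
short person = (short)((1500 << PlayerIndexBitmaskWidth) | 1);

foreach (short pixel in new[] { wall, person })
    Console.WriteLine($"distance = {DistanceMm(pixel)} mm, player = {PlayerIndex(pixel)}");
```

The ushort cast matters: 13 bits can hold values up to 8191, and any distance of 4096 mm or more sets the sign bit of a short, so shifting the signed value directly would produce a negative distance.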

Source: https://habr.com/ru/post/151131/
