Building a Distributed Data Center (DC Interconnect, DCI)

When a company grows to the point where one data center is no longer enough, a number of questions arise about how to develop the network infrastructure further. How do you extend the existing data center so that it transparently provides existing services at remote sites? Do you build one large L2 domain so that virtualization works without problems, or do you join the sites at the third level (L3)? If the infrastructure is made hierarchical, how do you get around the limitations of existing standards (802.1Q), and what happens to security in that case? How, at the same time, do you ensure reliable transmission of converged traffic (e.g. FCoE) between sites? And all of this still has to be managed conveniently...

The steady recent trend toward virtualization and cloud infrastructures shows that joining data center sites at the second level (L2) is preferable to the other options for many reasons. The question then is which technology to use. Building a distributed L2 domain on STP today is, at the very least, not rational. Among the existing alternatives (TRILL, PBB / SPB, FabricPath (proprietary!), MPLS / VPLS, dark fiber), VPLS is on the one hand the most mature and proven in practice for DCI, and on the other hand flexible and feature-rich. The rest of this article covers it in detail.

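To keep the example simple, let's take two geographically separated sites. We will build the network on Hewlett-Packard equipment; HP has recently been actively developing its data center networking line. So, the task is to merge two sites into one data center, transparently for the virtual environment (read: VMs), so as to:

- pass level 2 (L2) traffic transparently across the entire data center network;

- avoid blocked channels and inefficient use of bandwidth;

- simplify network management as much as possible;

- provide the required fault tolerance and protection against all sorts of failures;

- make it simple to increase port capacity and, in general, to scale the network;

- allow port capacity to grow gradually by adding switches to the stack as needed.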

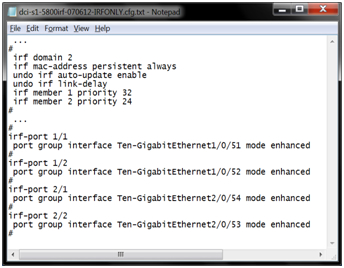

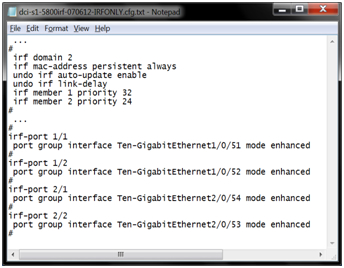

To solve this, we install two HP 5800 series switches at each site and combine them into one virtual switch (this is for reliability; if that is not critical, you can skip this step). This is simple: the switches are connected through standard 10G ports, and then the IRF stack is configured like this:

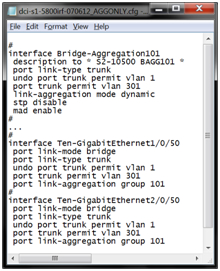

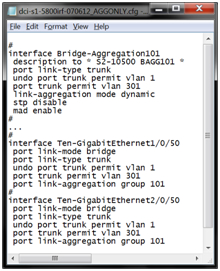

Then the sites are physically connected over 10G interfaces (we assume there is fiber between the data center sites), and the interfaces are aggregated into an LACP group, like this:

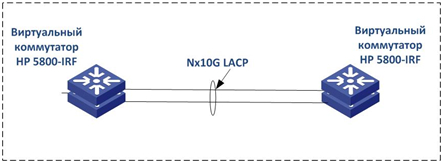

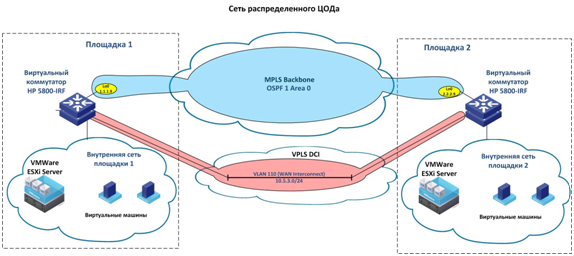

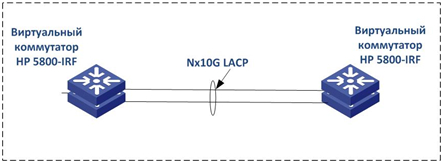

The virtual switch at the second site is configured in the same way. Note that the fiber links connecting the sites terminate on physically different switches. This way, if one of the switches in the stack fails, traffic automatically moves to the second switch and link. That gives us the following picture:

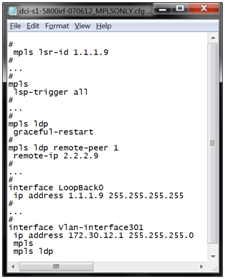

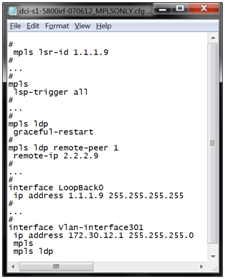
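The placement rule described above (inter-site links landing on different physical members of each stack) can be checked mechanically. The following is a minimal Python sketch, not HP tooling: the link names and member numbers are hypothetical, and it only models which links remain when a single stack member fails.

```python
def links_after_failure(links, site, member):
    """Return names of inter-site links still up after one stack member fails.

    `site` is "A" or "B"; a link goes down if it terminates on the failed member.
    """
    key = "a_member" if site == "A" else "b_member"
    return [link["name"] for link in links if link[key] != member]


def survives_single_failure(links):
    """True if at least one link remains after the loss of any single stack member."""
    if not links:
        return False
    for site, key in (("A", "a_member"), ("B", "b_member")):
        for member in {link[key] for link in links}:
            if not links_after_failure(links, site, member):
                return False
    return True


# Hypothetical layout 1: each link uses a different member at both sites.
good = [
    {"name": "link-1", "a_member": 1, "b_member": 1},
    {"name": "link-2", "a_member": 2, "b_member": 2},
]

# Hypothetical layout 2: both links terminate on member 1 at site A.
bad = [
    {"name": "link-1", "a_member": 1, "b_member": 1},
    {"name": "link-2", "a_member": 1, "b_member": 2},
]

print(survives_single_failure(good))
print(survives_single_failure(bad))
```

In the second layout, losing member 1 at site A takes down both links at once, which is exactly the situation the cabling rule is meant to prevent.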

Then, to forward VLANs (second-level traffic) transparently between the sites, we bring up a VPLS service on these switches. To do this, we first configure MPLS and LDP so that LSPs (Label Switched Paths) are signaled automatically, like this:

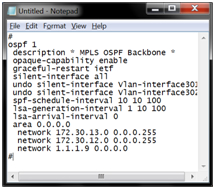

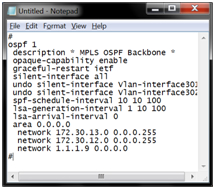
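For orientation, the labels distributed by LDP end up in 32-bit label stack entries placed in front of each forwarded frame. The sketch below packs and unpacks such an entry using the field layout from RFC 3032 (20-bit label, 3-bit traffic class, 1-bit bottom-of-stack flag, 8-bit TTL). The label values are made up for illustration, and the two-entry stack (outer tunnel label, inner pseudowire label) is the usual VPLS arrangement rather than something read from the switches in this example.

```python
import struct


def pack_label(label, tc=0, bottom=False, ttl=255):
    """Encode one MPLS label stack entry as 4 bytes in network byte order."""
    if not 0 <= label < 2**20:
        raise ValueError("label must fit in 20 bits")
    if not 0 <= tc < 8:
        raise ValueError("traffic class must fit in 3 bits")
    if not 0 <= ttl < 256:
        raise ValueError("TTL must fit in 8 bits")
    word = (label << 12) | (tc << 9) | (int(bottom) << 8) | ttl
    return struct.pack("!I", word)


def unpack_label(data):
    """Decode 4 bytes back into the fields of a label stack entry."""
    (word,) = struct.unpack("!I", data)
    return {
        "label": word >> 12,
        "tc": (word >> 9) & 0x7,
        "bottom": bool((word >> 8) & 0x1),
        "ttl": word & 0xFF,
    }


# Hypothetical values: outer tunnel label 1025, inner pseudowire label 2048.
# Only the innermost entry carries the bottom-of-stack flag.
stack = pack_label(1025) + pack_label(2048, bottom=True)

print(stack.hex())
print(unpack_label(stack[:4]))
print(unpack_label(stack[4:]))
```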

We bring up OSPF in the MPLS core so that we do not have to bother with static routes and so that the switches at the sites can reach each other by IP (the loopback interfaces are also advertised in OSPF).

After that, we set up VPLS signaling at both sites. Whether to use the Kompella (BGP) or the Martini (LDP) variant is more a matter of religion; HP equipment supports both. With LDP (Martini), an L2 MPLS VPN is configured, then a VPLS instance (VSI) is created and attached to the interface.

With BGP it is a little more complicated: in addition to everything above, you need to bring up BGP peering between the sites, enable the VPLS address family in it, and set the parameters of the VPLS VSI (vpn-target and route-distinguisher), like this:

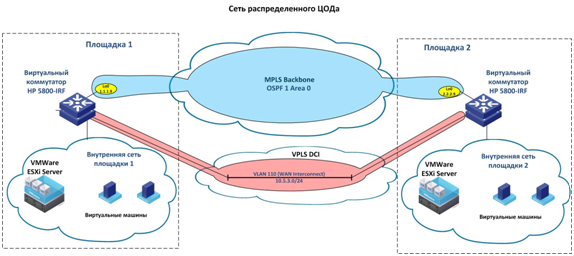
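With BGP signaling, the vpn-target values decide which VSIs discover each other: a site accepts an advertisement only if one of the targets attached to it matches one of its own import targets, while the route-distinguisher only keeps advertisements from different sites distinct. Below is a minimal Python sketch of that matching rule. The site names, RD values, and target values are hypothetical, and the code models the logic only; it is not switch configuration.

```python
def accepts(vsi, advertisement):
    """True if the advertisement carries at least one of the VSI's import targets."""
    return not set(vsi["import"]).isdisjoint(advertisement["targets"])


def discovered_peers(vsis):
    """For every VSI, list the other VSIs whose advertisements it would import."""
    peers = {}
    for name, vsi in vsis.items():
        peers[name] = sorted(
            other_name
            for other_name, other in vsis.items()
            if other_name != name
            and accepts(vsi, {"rd": other["rd"], "targets": other["export"]})
        )
    return peers


# Hypothetical VSIs: site-a and site-b share a target, site-c uses another one.
vsis = {
    "site-a": {"rd": "65000:1", "import": ["65000:100"], "export": ["65000:100"]},
    "site-b": {"rd": "65000:2", "import": ["65000:100"], "export": ["65000:100"]},
    "site-c": {"rd": "65000:3", "import": ["65000:200"], "export": ["65000:200"]},
}

print(discovered_peers(vsis))
```

A mismatch in vpn-target between the two sites therefore shows up as a VSI with no discovered peers, even though the BGP session itself is up.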

As a result, second-level traffic is forwarded transparently in VLANs between the sites, and virtual resources and applications can migrate freely from one site to another, as if they were located in one place.

In this simplified example it is not entirely obvious why VPLS is needed between just two sites. It seems you could simply run a trunk between them, and the VLANs would be available at both sites... However, in addition to the traffic management, network security, and quality of service that MPLS / VPLS provides, as soon as another site (or another redundant connection) appears in the L2 domain, you will need to control access to the medium and protect against loops. And doing that with STP is, these days, bad form at the very least.
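In standard VPLS, the loop protection mentioned above comes from combining a full mesh of pseudowires with split horizon: a frame received from a pseudowire is delivered to local ports only and is never forwarded to another pseudowire. The sketch below simulates flooding one broadcast frame across three hypothetical sites and counts how many copies each site delivers locally. It illustrates the rule only and is not a model of the HP implementation; `max_hops` is an artificial cap so that the loop case terminates.

```python
from collections import deque


def flood(sites, origin, split_horizon=True, max_hops=4):
    """Count copies of one broadcast frame delivered locally at each site.

    Sites are assumed to be connected by a full mesh of pseudowires.
    """
    delivered = {site: 0 for site in sites}
    # Each queue item: (site holding the frame, site it came from, hops so far).
    queue = deque([(origin, None, 0)])
    while queue:
        site, came_from, hops = queue.popleft()
        if came_from is not None:
            delivered[site] += 1
            if split_horizon:
                # Arrived over a pseudowire: local delivery only.
                continue
        if hops >= max_hops:
            continue
        for peer in sites:
            if peer != site and peer != came_from:
                queue.append((peer, site, hops + 1))
    return delivered


sites = ["A", "B", "C"]
with_rule = flood(sites, "A", split_horizon=True)
without_rule = flood(sites, "A", split_horizon=False)

print(with_rule)
print(without_rule)
```

With split horizon each remote site sees the frame exactly once; without it, copies keep circulating around the triangle until the artificial hop cap stops them.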

Source: https://habr.com/ru/post/150725/
