The correct calculation for VDI (part 1)

This is the first of two posts about developing a fairly typical VDI solution for a medium-sized enterprise. Part one covers preparation and planning; part two covers real practical examples.


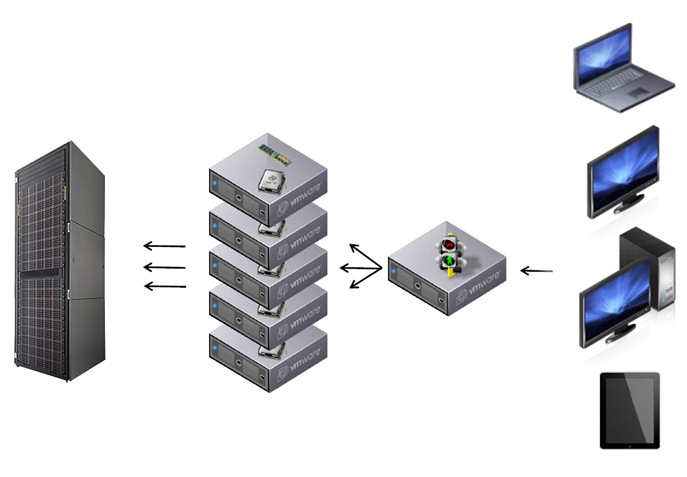

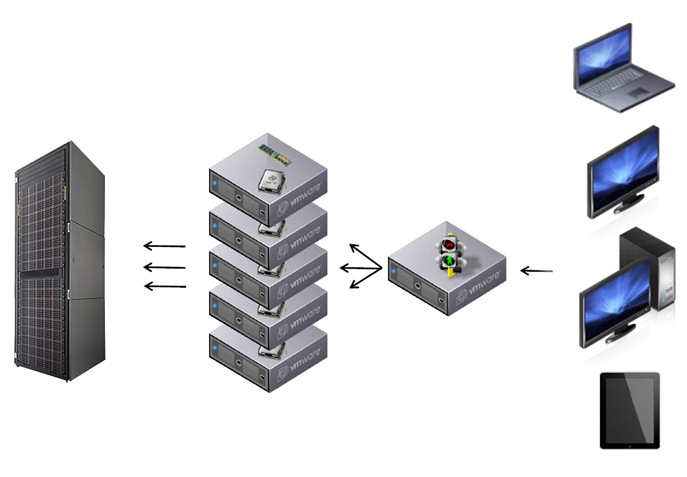


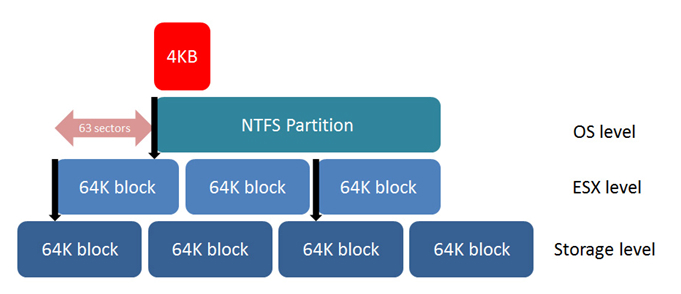


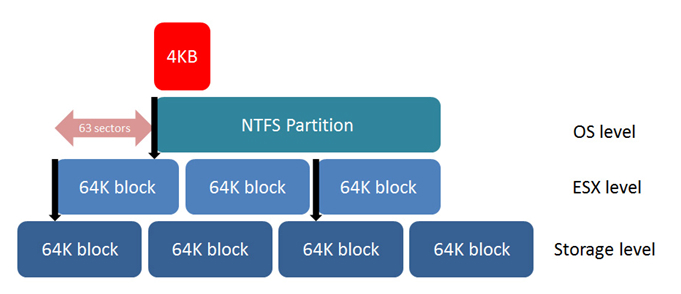


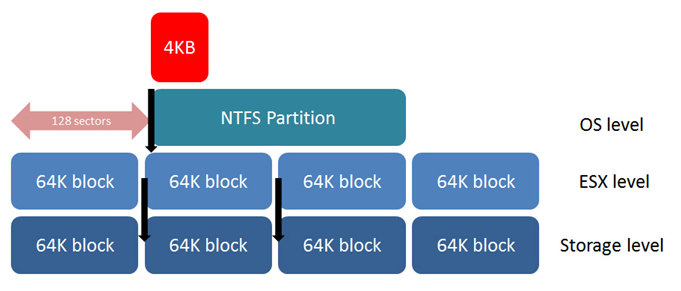

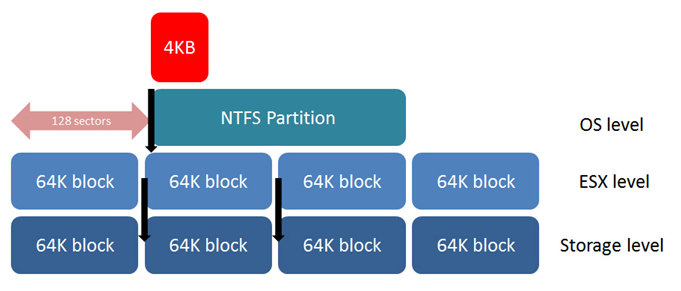


A potential customer's infrastructure is often already in place, and major equipment changes are off the table. Many new projects therefore come down to getting the most out of the existing hardware.

For example, one customer, a large domestic software company, has a fairly large fleet of servers and storage systems. It includes several 6th and 7th generation HP ProLiant servers and HP EVA storage systems that were held in reserve. The solution had to be built on them.

The stated requirements for the VDI solution were:

- Floating Desktops Pool (with changes after the session);

- The initial configuration is 700 users, expandable to 1,000.

I had to figure out how many servers and storage systems would eventually be transferred from the reserve to the solution.

VMware was chosen as the virtualization platform. The resulting architecture looks like this:

One of the servers acts as the connection broker that clients connect to. The broker picks a physical server from the pool on which to start the virtual machine that will serve the session.

The remaining servers are ESX hypervisors that run virtual machines.

ESX hypervisors connect to a storage system that stores virtual machine images.
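The broker's role described above can be sketched in a few lines. The text does not say how the broker picks a server, so the "most free slots" rule, the `Host` class, and the `capacity` field are all illustrative assumptions, not how any particular VMware product works.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    capacity: int      # hypothetical: maximum desktops this host may run
    running: int = 0   # desktops currently running on the host


def pick_host(pool):
    """Return the host with the most free slots, or None if the pool is full."""
    candidates = [h for h in pool if h.running < h.capacity]
    if not candidates:
        return None
    return max(candidates, key=lambda h: h.capacity - h.running)


def start_session(pool, user):
    """Place a new desktop session on a host and record the extra load."""
    host = pick_host(pool)
    if host is None:
        raise RuntimeError("no free capacity in the pool")
    host.running += 1
    return f"{user} -> {host.name}"
```

A real broker would also track session state and reconnects; this only illustrates the placement decision.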

Fairly powerful servers with 6-core Intel Xeon processors were set aside for the ESX hypervisors. At first glance the weak link is the storage system, because the hidden killer in VDI is IOPS. There are, of course, many other points to consider when developing a VDI solution. Here are some of them:

- First, remember that software licenses make up a significant part of the solution's cost. It often pays to look at offers from hardware vendors, since OEM licenses for virtualization software are cheaper.

- Second, consider installing graphics accelerator cards if a large number of users will work with multimedia or graphics editors.

- An interesting option from HP is the HP ProLiant WS460c Gen8 Workstation Blade Server. Its distinctive feature is that graphics cards can be installed directly in the blade without giving up space for 2 hard drives, 2 processors and 16 memory modules. The graphics accelerators offer up to 240 CUDA cores and 2.0 GB of GDDR5 memory.

- Third, calculate the total cost of ownership (TCO) in advance. Buying equipment is certainly a major expense, but you can and should show the savings from implementing the solution, along with the costs of upgrades, repairs and software license renewals.

Finally, let's move on to I/O and the main issues around it.

Windows running on a local PC with a hard disk has roughly 40-50 IOPS at its disposal. A set of services is loaded on such a PC along with the base OS: prefetching, indexing, hardware services and so on. Much of this is functionality the user does not need, but on a local PC it does not cost much performance.

On a VDI client, however, almost all of these additional services are counterproductive: they generate a large number of I/O requests in an attempt to improve speed and load time, but the effect is the opposite.

Windows also tries to lay out data blocks so that access to them is mostly sequential, because on a local hard disk sequential reads and writes need fewer head movements. For VDI this deserves special attention; see the end of the post.

The number of IOPS a client needs depends mostly on the services it runs. On average the figure is 10-20 IOPS (the value for a specific case can be measured with tools such as those from Liquidware Labs). Most of these IOPS are writes: in a virtual infrastructure the read/write ratio can reach 20/80.

What this means in detail:
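As a rough illustration, the per-client figures can be scaled to the user counts from the requirements (700 and 1,000). This is a minimal sketch that assumes every user is active at once and that the 20/80 split applies uniformly; the function name is made up for the example.

```python
def steady_state_iops(users, iops_per_user, read_share=0.2):
    """Return (total, reads, writes) IOPS for a population of active users."""
    total = users * iops_per_user
    reads = total * read_share
    writes = total - reads
    return total, reads, writes


for users in (700, 1000):
    for per_user in (10, 20):
        total, reads, writes = steady_state_iops(users, per_user)
        print(f"{users} users x {per_user} IOPS: "
              f"total={total:.0f}, reads={reads:.0f}, writes={writes:.0f}")
```

Under these assumptions even the low end (700 users at 10 IOPS) means about 5,600 write IOPS, which is why the write performance of the storage system dominates the sizing.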

1. Boot and logon storms - cache and policies, policies and cache

When a user connects to their virtual machine to log in, the disk subsystem comes under heavy load. Our task is to make this load predictable: reduce most of it to read operations, then serve typical read data from a dedicated cache.

To achieve this, you need to optimize not only the client virtual machine image but also the user profiles. When everything is configured correctly, the IOPS load becomes quite predictable. In a well-tuned virtual infrastructure, the read/write ratio during boot will be 90/10 or even 95/5.

But if a large number of users start work at the same time, the storage system has to be sized generously; otherwise logging in may take some users several hours. The only way out is to size the system correctly, knowing the maximum number of simultaneous connections.

For example, if an image takes 30 seconds to boot and 10% of all users connect simultaneously at peak time, those sessions generate twice the normal write load and ten times the normal read load, which adds about 36% to the normal storage load. With 3% concurrent connections, the storage load rises by only 11% over normal. Our advice to the customer: encourage late arrivals! (Just kidding.)

Keep in mind that after the boot phase the read/write ratio flips: reads drop to about 5 IOPS per session, but write IOPS do not decrease. Forget this and you are in for serious problems.
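The text does not show how the 36% and 11% figures are obtained. One reading that reproduces both is sketched below. It assumes a 20/80 steady-state read/write split, and that each booting session issues ten times its normal reads and twice its normal writes. The function name is illustrative.

```python
def logon_storm_extra_load(concurrent_fraction, read_share=0.2,
                           read_multiplier=10, write_multiplier=2):
    """Extra storage load from booting sessions, as a fraction of normal load."""
    # Load of one booting session relative to one normal session:
    # 0.2 * 10 + 0.8 * 2 = 3.6 with the default assumptions.
    per_session = (read_share * read_multiplier
                   + (1 - read_share) * write_multiplier)
    return concurrent_fraction * per_session


print(f"{logon_storm_extra_load(0.10):.0%}")  # 10% of users booting -> 36%
print(f"{logon_storm_extra_load(0.03):.1%}")  # 3% of users booting -> 10.8%
```

Under these assumptions a booting session costs 3.6 times a normal one, so 10% of users booting adds 36% and 3% adds 10.8%, which rounds to the 11% quoted.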

2. Storage system IOPS - choose the right RAID

When user requests reach shared storage (SAN, iSCSI, SAS), all I/O is 100% random from the storage system's point of view. A disk spinning at 15,000 RPM delivers 150-180 IOPS. In a SAS/SATA storage system, disks in a RAID group (this relates to ATA: all disks in the RAID wait for synchronization) deliver about 30% fewer IOPS than a single SAS/SATA drive. The per-disk figures are as follows:

- RAID5: 30-45 write IOPS per disk, 160 read IOPS;

- RAID1: 70-80 write IOPS per disk, 160 read IOPS;

- RAID0: 140-150 write IOPS per disk, 160 read IOPS.

Therefore, for virtualization, it is recommended to use RAID with higher write performance (RAID1, RAID0).
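To see what the per-disk figures above mean for sizing, here is a hedged back-of-the-envelope sketch. It takes the low end of each write range and assumes each disk splits its time between reads and writes at those rates. That model is a simplification introduced for this example, not a vendor formula.

```python
import math

# Per-disk IOPS from the list above; low end of each write range.
PER_DISK = {
    "RAID5": {"write": 30, "read": 160},
    "RAID1": {"write": 70, "read": 160},
    "RAID0": {"write": 140, "read": 160},
}


def disks_needed(read_iops, write_iops, raid_level):
    """Estimate the disk count needed to serve the given read and write IOPS."""
    rates = PER_DISK[raid_level]
    return math.ceil(read_iops / rates["read"] + write_iops / rates["write"])


# 700 users at 10 IOPS each with a 20/80 read/write split.
for level in PER_DISK:
    print(level, disks_needed(1400, 5600, level))
```

For this workload the sketch gives 196 disks for RAID5, 89 for RAID1 and 49 for RAID0, which shows how strongly the write penalty drives the spindle count.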

3. Disk layout - alignment matters most

Since we want to minimize I/O against the storage system, our main task is to make every operation as efficient as possible. Disk layout is one of the main factors. The storage system never reads a requested byte in isolation: depending on the vendor, data is divided into blocks of 32 KB, 64 KB or 128 KB. If the file system on top is not aligned with these blocks, one I/O request from the file system turns into two on the storage system. If that file system sits on a virtual disk, and the virtual disk is on a file system that is also misaligned, one I/O request from the operating system can turn into three at the storage level. Alignment at every layer is therefore of paramount importance.

Unfortunately, Windows XP and Windows 2003 write a signature to the first part of the disk during installation and start writing in the last sectors of the first block, which shifts the OS file system relative to the storage system's blocks. To fix this, create the partitions presented to the host or virtual machine with diskpart or fdisk, and set the starting offset to sector 128. With 512-byte sectors, this puts the start of the partition exactly on the 64 KB boundary. Once the partition is aligned, one request from the file system results in one I/O on the storage system.

The same applies to VMFS. A partition created through the ESX Service Console is not aligned to the storage system by default. In that case, use fdisk or create the partition through VMware vCenter, which performs the alignment automatically. Windows Vista, Windows 7, Windows Server 2008 and later align partitions to 1 MB by default, but it is better to verify the alignment yourself.

The performance gain from alignment can be about 5% for large files and 30-50% for small files and random IOPS. Since random I/O is the typical load for VDI, alignment is of paramount importance.
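The sector arithmetic is easy to check. The sketch below assumes 512-byte sectors and 64 KB storage blocks, as in the text. The start sector 63 used for comparison is the legacy default for these Windows versions; the text itself does not state it.

```python
SECTOR_BYTES = 512


def is_aligned(start_sector, block_bytes=64 * 1024):
    """True if the partition's first byte falls on a storage block boundary."""
    return (start_sector * SECTOR_BYTES) % block_bytes == 0


def blocks_touched(start_sector, offset_bytes, length_bytes,
                   block_bytes=64 * 1024):
    """Number of storage blocks that a single request spans."""
    first = start_sector * SECTOR_BYTES + offset_bytes
    last = first + length_bytes - 1
    return last // block_bytes - first // block_bytes + 1


print(is_aligned(128), blocks_touched(128, 0, 64 * 1024))  # True 1
print(is_aligned(63), blocks_touched(63, 0, 64 * 1024))    # False 2
```

A block-sized request on the partition starting at sector 128 touches one storage block, while the same request on a partition starting at sector 63 straddles two.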

4. Defragmentation and prefetching should be disabled.

NTFS uses 4 KB blocks. Windows tries to arrange these blocks so that access is as sequential as possible. When a user runs applications, requests are more likely to be writes than reads. Defragmentation tries to guess how the data will be read; in this setting it generates I/O load without any significant benefit. It is therefore recommended to disable defragmentation in VDI solutions.

The same goes for prefetching. Prefetching places the most frequently accessed files in a special Windows cache directory so that they can be read sequentially, which minimizes IOPS. But since requests from a large number of users make I/O completely random from the storage system's point of view, prefetching brings no benefit and only generates wasted I/O traffic. The answer: disable prefetching completely.

If the storage system uses deduplication, that is one more argument for disabling prefetching and defragmentation. Prefetching, which moves files from one place to another, seriously reduces the efficiency of deduplication, which depends on keeping a table of rarely changing disk data blocks.
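As an illustration of how these settings might be applied to a master image, the sketch below renders a `.reg` fragment. The two registry values are the ones commonly cited for turning off the prefetcher and boot-time defragmentation on Windows XP and Windows 7 guests. Treat them as assumptions to verify for your Windows version. The scheduled defragmentation task is not covered here.

```python
# Registry values commonly used in VDI image tuning (verify before use).
SETTINGS = [
    (r"HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Session Manager"
     r"\Memory Management\PrefetchParameters",
     "EnablePrefetcher", "dword:00000000"),   # 0 = prefetcher disabled
    (r"HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Dfrg\BootOptimizeFunction",
     "Enable", '"N"'),                        # "N" = no boot-time defrag
]


def render_reg(settings):
    """Render (key, value name, value data) tuples as .reg file text."""
    lines = ["Windows Registry Editor Version 5.00", ""]
    for key, name, value in settings:
        lines += [f"[{key}]", f'"{name}"={value}', ""]
    return "\r\n".join(lines)


print(render_reg(SETTINGS))
```

The output can be saved to a file and imported into the master image before it is cloned into the desktop pool.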

Source: https://habr.com/ru/post/150513/
