Search@Mail.Ru, part two: review of data preparation architectures of large search engines

Overview of data preparation architectures of large search engines

Last time, you and I remembered how Go.Mail.Ru started in 2010, and how the Search was before. In this post we will try to draw a general picture - let's focus on how others work, but first we will tell about search distribution.

How are search engines distributed

As you requested, we decided to elaborate on the basics of distribution strategies of the most popular search engines.

There is an opinion that Internet search is one of those services that most users choose on their own, and the strongest should win this battle. This position is extremely sympathetic to us - it is for this reason that we are constantly improving our search technologies. But the situation on the market makes its own adjustments, and the so-called “browser wars” intervene in the first place.

')

There was a time when the search was not associated with the browser. Then the search engine was just another site that the user went to on his own. Imagine —Internet Explorer up to version 7, which appeared in 2006, did not have a search string; Firefox had a search string from the first version, but at the same time it appeared only in 2004.

Where did the search string come from? It was not the authors of the browsers who invented it - it first appeared as part of the Google Toolbar, released in 2001. Google Toolbar added to the browser the functionality of "quick access to Google search" - namely, the search line in your panel:

Why did Google release its toolbar? This is how Douglas Edwards, Google’s brand manager at the time, describes his mission in his book “I'm Feeling Lucky: The Confessions of Google Employee Number 59”:

“The Toolbar was a secret weapon in our war against Microsoft. By embedding the toolbar in the browser. If you’re on the PC, you’ll not be happy with your PC. We’ve needed to make sure Google’s search box didn’t become an obsolete relic. ”

“The toolbar was the secret weapon in the war against Microsoft. By integrating the Toolbar into the browser, Google opened another front in the battle for direct access to users. Bill Gates wanted to completely control how users interact with the PC: there were a lot of rumors that in the next version of Windows, the search string would be installed directly on the desktop. It was necessary to take measures so that Google’s search bar does not become a relic of the past. ”

How did the toolbar spread? Yes, all the same, along with the popular software: RealPlayer , Adobe Macromedia Shockwave Player , etc.

It is clear that other search engines have begun to distribute their toolbars (Yahoo Toolbar, for example), and browser manufacturers did not fail to take advantage of this opportunity to gain additional source of income from search engines and embedded the search line to themselves by introducing the concept of “default search engine”.

The business departments of the browser manufacturers chose an obvious strategy: the browser is the user's entry point to the Internet, the default search settings with high probability will be used by the audience of the browser - so why not sell these settings? And they were right in their own way, because the Internet search is a product with almost zero “sticking”.

At this point it is worthwhile to dwell. Many will be indignant: “No, a person gets used to the search and uses only the system that he trusts,” but the practice proves the opposite. If, say, your mailbox or account social. network for some reason is not available, you do not go right there to another postal service or another social network, because you are “glued” to your accounts: they are known by your friends, colleagues, family. Changing your account is a long and painful process. With search engines, everything is completely different: the user is not tied to this or that system. If the search engine is unavailable for some reason, users do not sit and do not wait for it to finally work - they just go to other systems (for example, we clearly saw it on LiveInternet counters a year ago, during failures at one of our competitors ). At the same time, users do not suffer much from the accident, because all the search engines are roughly the same (search line, query, results page) and even an inexperienced user will not be confused when working with any of them. Moreover, in about 90% of cases the user will receive an answer to his question, no matter what system he is looking for.

So, the search, on the one hand, has almost zero "sticking" (in English there is a special term "stickiness"). On the other hand, some search is already pre-installed in the default browser, and quite a large number of people will use it only for the reason that it is convenient to use it from there. And if the search behind the search line satisfies the user's tasks, he can continue to use it.

What are we coming to? The leading search engines have no other choice but to fight for the browser search strings, distributing their desktop search products - toolbars, which in the installation process change the default search in the user's browser. The instigator of this struggle was Google, the rest had to defend themselves. You can, for example, read these words of Arkady Volozh, the creator and owner of Yandex, in his interview :

“When in 2006–2007. Google’s share in the Russian search market began to grow, at first we could not understand why. Then it became obvious that Google is promoting itself by embedding it in browsers (Opera, Firefox). And with the release of its own browser and Google’s mobile operating system, it began to destroy the relevant markets altogether. ”

Since Mail.Ru is also a search, it cannot stand aside from the “browser wars”. We just entered the market a bit later than others. Now the quality of our search has increased markedly, and our distribution is a reaction to the very struggle of toolbars that is conducted in the market. At the same time, it is really important for us that an increasing number of people who are trying to use our Search remain satisfied with the results.

By the way, our distribution policy is several times less active than that of the nearest competitor. We see it on the counter top.mail.ru, which is installed on most of the sites of the RuNet. If a user goes to the site on request through one of the distribution products (toolbar, own browser, partner browser's searchbox), the URL contains the parameter clid = ... Thus, we can estimate the capacity of distribution requests: the competitor has almost 4 times more than us

But let's move on from distribution to how other search engines work. After all, internal discussions of architecture, we naturally began with the study of the architectural solutions of other search engines. I will not describe their architecture in detail - instead I will give links to open materials and highlight the features of their solutions that seem important to me.

Data preparation in large search engines

Rambler

Rambler, now closed, had a number of interesting architectural ideas. For example, it was aware of their own data storage system (NoSQL, as it is now fashionable to call such systems) and HICS ( or HCS ) distributed computing, which was used, in particular, for calculations in the reference graph. Also HICS allowed to standardize the presentation of data within the search in a single universal format.

The architecture of the Rambler was quite different from ours in the organization of the spider. Our spider was made as a separate server, with its own, self-written, base of addresses of the downloaded pages. For downloading each site, a separate process was launched that simultaneously downloaded pages, parsed them, highlighted new links and could immediately follow them. The spider of the Rambler was made much easier.

On one server there was a large text file with all known addresses of documents to Rambler, one per line, sorted in lexicographical order. Once a day, this file was crawled and other text-based download tasks were generated, which were performed by special programs that can only download documents from the address list. Then the documents were parsed, links were extracted and put next to this large file-list of all known documents, sorted, after which the lists were merged into a new large file, and the cycle was repeated again.

The advantages of this approach were in simplicity, the presence of a single register of all known documents. The drawbacks were that it was impossible to go to the newly extracted addresses of the documents right away, since downloading new documents could only happen at the next iteration of the spider. In addition, the size of the database and its processing speed was limited to one server.

Our spider, on the contrary, could quickly go through all the new links from the site, but was very poorly managed outside. It was hard to “add” additional data to the addresses (necessary for ranking documents within the site, determining the priority of downloading), it was difficult to dump the database.

Yandex

Not much was known about Yandex’s internal search engine until Den Raskovalov spoke about it in his lecture course .

From there you can find out that the search for Yandex consists of two different clusters:

- batch processing

- data processing in real time (this is not really “real time” in the sense in which this term is used in control systems where the delay in the execution of tasks can be critical. Rather, it is possible to get the document into the index as quickly as possible and independently of other documents or tasks; such a "soft" version of real time)

The first one is used for standard Internet hauling, the second one is used to deliver to the index the best and most interesting documents that have just appeared. For the time being, we will consider only batch processing, because before the index update in real time we were then quite far away, we wanted to go on updating the index once every two days.

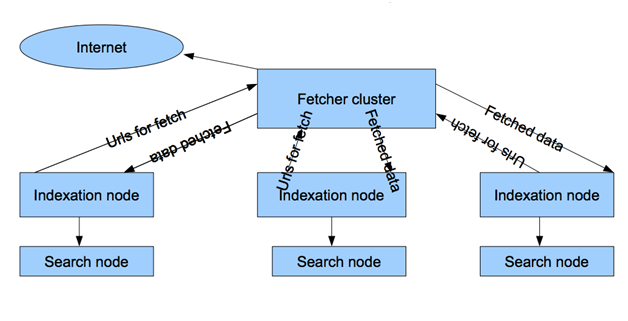

At the same time, despite the fact that externally, the Yandex batch processing cluster was somewhat similar to our pair of pumping and indexing clusters, there were several serious differences in it:

- The base of addresses of pages one, is stored on indexing nodes. As a result, there are no problems with synchronizing the two databases.

- Control of pumping logic is transferred to indexing nodes, i.e. spider nodes are very simple; they download what indexers point to them. We have the spider himself determined what to download and when.

- And, a very important difference is that inside all the data is presented in the form of relational tables of documents, sites, links. We have all the data was separated by different hosts, stored in different formats. The tabular presentation of data greatly simplifies access to them, allows you to make various samples and get the most diverse index analytics. We were deprived of all this, and at that time only synchronization of our two document bases (spider and indexer) took a week, and we had to stop both clusters for this time.

Google, without doubt, is a global technology leader, so it is always paid attention to, analyzed what it did, when and why. And the Google search architecture, of course, was for us the most interesting. Unfortunately, Google rarely opens up its architectural features; each article is a big event and almost instantly spawns a parallel OpenSource project (sometimes not one) implementing the described technologies.

For those who are interested in the features of Google search, you can safely advise to explore almost all the presentations and speeches of one of the most important specialists in the company on the internal infrastructure - Jeffrey Dean , for example:

- “Challenges in Building Large-Scale Information Retrieval Systems” (slides), through which you can find out how Google has developed, starting with the very first version, which was made by undergraduate and graduate students at Stanford University until 2008, until the introduction of Universal Search. There is a video of this performance and a similar performance at Standford, Building Software Systems At Google and Lessons Learned.

- MapReduce: Simplified Data Processing on Large Clusters . The article describes a computational model that allows you to easily parallelize calculations on a large number of servers. Immediately after this publication, the open source Hadoop platform appeared.

- “BigTable: A Distributed Structured Storage System”, a story about the NoSQL database BigTable, based on which HBase and Cassandra were made ( video can be found here , slides are here )

- "MapReduce, BigTable, and Other Distributed System Abstractions for Handling Large Datasets" - a description of the most famous technologies of Google.

Based on these presentations, you can highlight the following features of the Google search architecture:

- Tabular structure for data preparation. The entire search database is stored in a huge table, where the key is the address of the document, and the meta information is stored in separate columns, combined into families. Moreover, the table was originally designed in such a way as to work effectively with sparse data (that is, when not all documents have values in columns).

- Unified MapReduce distributed computing system. Data preparation (including creation of a search index) is a sequence of mapreduce tasks performed on BigTable tables or files in a distributed GFS file system.

All this looks quite reasonable: all known addresses of documents are stored in one large table, their prioritization is performed according to it, calculations over a reference graph, etc., the contents of the retrieved pages are retrieved by the search spider, and an index is built on it.

There is another interesting presentation by another Google specialist, Daniel Peng, about the innovations in BigTable, which made it possible to quickly add new documents to the index in a few minutes. This technology "outside" Google has been advertised under the name Caffeine , and in publications received the name Percolator. Video of the performance on OSDI'2010 can be seen here .

Speaking very roughly, this is the same BigTable, but in which the so-called are implemented. triggers, - the ability to upload your own pieces of code that are triggered by changes inside the table. If so far I have described batch processing, i.e. when data is combined and processed as far as possible, the implementation of the same on triggers is completely different. Suppose a spider has downloaded something, placed new content in a table; Trigger triggered, signaling “new content has appeared, it needs to be indexed”. The indexing process started immediately. It turns out that all the tasks of the search engine can eventually be divided into subtasks, each of which is triggered by a click. Having a large number of equipment, resources and debugged code, you can solve the problem of adding new documents quickly, in just a minute - as Google demonstrated.

The difference between Google architecture and Yandex architecture, where the index update system was also indicated in real time, is that Google claims that the whole index building procedure is performed on triggers, while Yandex only has it for a small subset of the best, valuable documents.

Lucene

It is worth mentioning about another search engine - Lucene. This is a free search engine written in Java. In a sense, Lucene is a platform for creating search engines, for example, a search engine on the web called Nutch has spun off from it. In essence, Lucene is a search engine for creating an index and a search engine, and Nutch is the same plus a spider that pages pages, because the search engine does not necessarily search for documents that are on the web.

In fact, Lucene itself has implemented not so many interesting solutions that could be borrowed by a large web search engine designed for billions of documents. On the other hand, you should not forget that it was Lucene developers who launched the Hadoop and HBase projects (every time a new interesting article from Google appeared, the Lucene authors tried to apply announced solutions for themselves. For example, Hase, which is a clone of BigTable) . However, these projects have long existed by themselves.

For me at Lucene / Nutch, it was interesting how they used Hadoop. For example, in Nutch, a special spider was written for web browsing, performed entirely as tasks for Hadoop. Those. A whole spider is simply a process that runs on Hadoop in the MapReduce paradigm. This is a rather unusual solution, outside the scope of how Hadoop is used. After all, this is a platform for processing large amounts of data, and this suggests that data is already available. And here this task does not calculate or process anything, but, on the contrary, downloads it.

On the one hand, this solution impresses with its simplicity. After all, the spider needs to get all the addresses of one site for pumping, bypass them one by one, the spider itself must also be distributed and run on several servers. So we are doing a mapper in the form of an address divider for sites, and we implement each individual pumping process in the form of a reducer.

On the other hand, this is a rather bold decision, because it is hard to build sites - not every site is responsible for guaranteed time, and the cluster computing resources are spent so that it just waits for a response from someone else's web server. And the problem of "slow" sites is always with a sufficiently large number of addresses for pumping. For 20% of the time, Spyder pumps in 80% of documents from fast sites, then spends 80% of time trying to download slow websites - and almost never can download them all, you always have to drop something and leave it “next time”.

We analyzed such a decision for some time, and as a result refused it. Perhaps, for us, the architecture of this spider was interesting as a kind of "negative example."

In more detail about the structure of our search engine, about how we built the search engine, I will tell in the next post.

Source: https://habr.com/ru/post/150303/

All Articles