Auditing file deletion and access, and logging the events to a file with PowerShell

I think many of you have faced this task: someone comes to you and asks, “A file has gone missing from a shared resource. It was there and now it is not; it looks like someone deleted it. Can you check who did it?” At best, you say you don't have time; at worst, you try to find a mention of the file in the logs. And when file auditing is enabled on a file server, the logs are, to put it mildly, very large, and finding anything in them is unrealistic.

After yet another such question (fortunately, backups are made several times a day) and my answer of “I don't know who did it, but I will restore the file for you,” I decided that I did not like this situation at all.

Let's start.

To begin with, enable the group policy settings that audit access to files and folders.

Local Security Policy -> Advanced Audit Policy Configuration -> Object Access

Enable “Audit File System” for success and failure.
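The same policy can usually be switched on from the command line with the built-in `auditpol` tool. This is a minimal sketch, assuming an elevated session on the file server and an English-language Windows installation (subcategory names are localized). A domain group policy may override a setting made this way.

```powershell
# Run in an elevated PowerShell session on the file server.
# Enables success and failure auditing for the "File System" subcategory.
auditpol /set /subcategory:"File System" /success:enable /failure:enable

# Show the resulting setting to confirm that it was applied.
auditpol /get /subcategory:"File System"
```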

After that, we need to set up auditing on the folders we care about.

Open the properties of the shared folder on the file server, go to the “Security” tab, click “Advanced”, go to the “Auditing” tab, click “Edit” and then “Add”. Select the users to audit. I recommend choosing “Everyone”; otherwise the audit is pointless. Set “Applies to” to “This folder, subfolders and files”.

Select the actions to audit. I chose “Create files / write data” (Success / Failure), “Create folders / append data” (Success / Failure), and “Delete subfolders and files” and plain “Delete”, also for Success / Failure.

Click OK and wait for the audit settings to be applied to all files. After that, the Security event log will contain a great many events about access to files and folders. The number of events is directly proportional to the number of users working with the shared resource and, of course, to how actively they use it.

So the data is already in the logs. All that remains is to pull it out, and only the part that interests us, without the extra “water”. After that, we write the data line by line into a text file, separating the fields with tabs so that the file can be opened, for example, in a spreadsheet editor.
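The audit entry described above can also be created from PowerShell instead of the dialog. The following is a sketch under stated assumptions: the folder path is hypothetical, the session is elevated, and the well-known SID `S-1-1-0` is used for “Everyone” so that the snippet does not depend on the display language.

```powershell
# Hypothetical path; replace it with the shared folder on your file server.
$path = 'D:\Shares\Secret'

# "Everyone" by its well-known SID, independent of the system language.
$everyone = New-Object System.Security.Principal.SecurityIdentifier('S-1-1-0')

# The same operations as chosen in the dialog, for success and failure.
$rights      = [System.Security.AccessControl.FileSystemRights]'CreateFiles, AppendData, DeleteSubdirectoriesAndFiles, Delete'
$inherit     = [System.Security.AccessControl.InheritanceFlags]'ContainerInherit, ObjectInherit'
$propagation = [System.Security.AccessControl.PropagationFlags]::None
$flags       = [System.Security.AccessControl.AuditFlags]'Success, Failure'

$rule = New-Object System.Security.AccessControl.FileSystemAuditRule($everyone, $rights, $inherit, $propagation, $flags)

# -Audit makes Get-Acl read the audit entries (SACL) as well.
$acl = Get-Acl -Path $path -Audit
$acl.AddAuditRule($rule)
Set-Acl -Path $path -AclObject $acl
```

On a large share, applying the rule to all existing files can take a while, just as it does from the dialog.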

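```powershell
# Period to search. The script runs once per period and looks for the events
# we need. Here the period is 1 hour, i.e. all events from the last hour are checked.
$time = (Get-Date) - (New-TimeSpan -Minutes 60)

# $BodyL accumulates the text to be written to the log file.
$BodyL = ""

# $Body receives ALL events with the required ID, except temporary and lock files.
$Body = Get-WinEvent -FilterHashtable @{LogName="Security";ID=4663;StartTime=$time} |
    where { ([xml]$_.ToXml()).Event.EventData.Data |
        where {$_.Name -eq "ObjectName"} |
        where {$_.'#text' -notmatch '\.tmp$'} |
        where {$_.'#text' -notmatch '~lock'} |
        where {$_.'#text' -notmatch '~\$'} } |
    select TimeCreated,
        @{n="File_";e={([xml]$_.ToXml()).Event.EventData.Data | ? {$_.Name -eq "ObjectName"} | %{$_.'#text'}}},
        @{n="User_";e={([xml]$_.ToXml()).Event.EventData.Data | ? {$_.Name -eq "SubjectUserName"} | %{$_.'#text'}}} |
    sort TimeCreated

# Check in a loop whether each event contains a given word
# (for example the name of the share: Secret).
foreach ($Bod in $Body) {
    if ($Bod.File_ -match "Secret") {
        # If it does, append one line to $BodyL: time, full file path, user name,
        # separated by tabs and ended with a newline.
        $BodyL = $BodyL + $Bod.TimeCreated + "`t" + $Bod.File_ + "`t" + $Bod.User_ + "`n"
    }
}

# There can be a great many records (depending on how actively the share is used),
# so the log is split by day. The file name consists of "AccessFile" and the date:
# day, month, year.
$Day = $time.Day
$Month = $time.Month
$Year = $time.Year
$name = "AccessFile-" + $Day + "-" + $Month + "-" + $Year + ".txt"
$Outfile = "\\server\ServerLogFiles\AccessFileLog\" + $name

# Append the collected lines to the log file.
$BodyL | Out-File $Outfile -Append
```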

And now a very interesting script.

This script writes a log of deleted files.

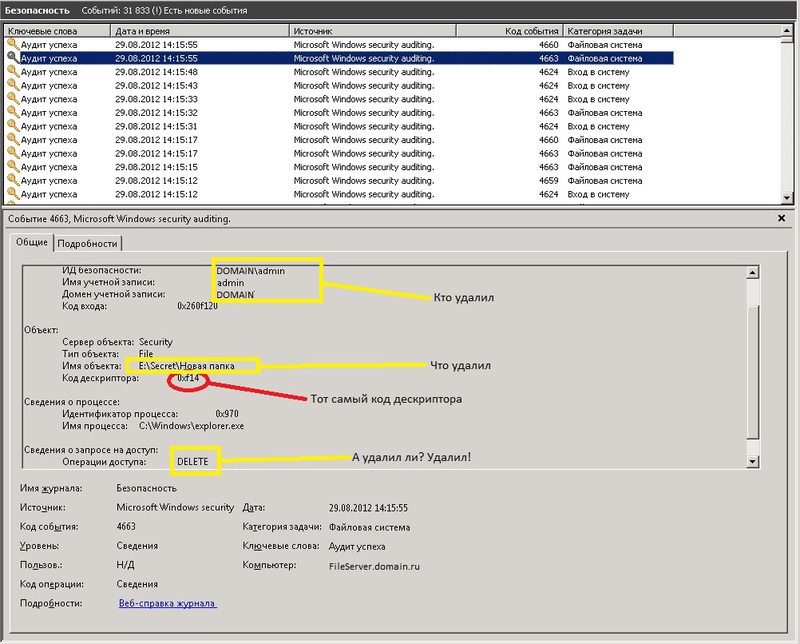

```powershell
# $time sets the period in which to look for deleted files: the last 60 minutes.
$time = (Get-Date) - (New-TimeSpan -Minutes 60)

# $Events holds the time and record number of every event with ID=4660.
# Important: deleting a file creates two events at once, ID=4660 and ID=4663.
$Events = Get-WinEvent -FilterHashtable @{LogName="Security";ID=4660;StartTime=$time} |
    select TimeCreated, @{n="Record";e={[long]([xml]$_.ToXml()).Event.System.EventRecordID}} |
    sort Record

# Find the 4663 event that precedes each 4660 event and collect its data.
$BodyL = ""
$TimeSpan = New-TimeSpan -Seconds 1
foreach ($DelEvent in $Events) {
    # The 4663 event we need has the record number one lower.
    $PrevEvent = $DelEvent.Record - 1
    $TimeEvent = $DelEvent.TimeCreated
    $TimeEventEnd = $TimeEvent + $TimeSpan
    $TimeEventStart = $TimeEvent - $TimeSpan
    $Body = Get-WinEvent -FilterHashtable @{LogName="Security";ID=4663;StartTime=$TimeEventStart;EndTime=$TimeEventEnd} -ErrorAction SilentlyContinue |
        where { [long]([xml]$_.ToXml()).Event.System.EventRecordID -eq $PrevEvent } |
        where { ([xml]$_.ToXml()).Event.EventData.Data |
            where {$_.Name -eq "ObjectName"} |
            where {$_.'#text' -notmatch '\.tmp$'} |
            where {$_.'#text' -notmatch '~lock'} |
            where {$_.'#text' -notmatch '~\$'} } |
        select TimeCreated,
            @{n="File_";e={([xml]$_.ToXml()).Event.EventData.Data | ? {$_.Name -eq "ObjectName"} | %{$_.'#text'}}},
            @{n="User_";e={([xml]$_.ToXml()).Event.EventData.Data | ? {$_.Name -eq "SubjectUserName"} | %{$_.'#text'}}}
    if ($Body -and $Body.File_ -match "Secret") {
        $BodyL = $BodyL + $Body.TimeCreated + "`t" + $Body.File_ + "`t" + $Body.User_ + "`n"
    }
}

# One log file per month: the name contains the month and the year.
$Month = $time.Month
$Year = $time.Year
$name = "DeletedFiles-" + $Month + "-" + $Year + ".txt"
$Outfile = "\\server\ServerLogFiles\DeletedFilesLog\" + $name
$BodyL | Out-File $Outfile -Append
```

As it turned out, the same event, with ID 4663, is written to the log both when files are deleted and when handles are deleted. The message body can carry different values of “Accesses”: WriteData (or AddFile), DELETE, and so on.

Of course, we are interested in the DELETE operation. But that is not all. The most interesting part is that an ordinary file rename produces two events with ID 4663: the first with the access operation DELETE, and the second with the operation WriteData (or AddFile). So if you simply select all 4663 events, you get a lot of unreliable information: the result mixes files that were really deleted with files that were merely renamed.
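To see which operation a particular 4663 event records, its `AccessMask` field can be tested for the DELETE bit (`0x10000`). The sketch below works on a simplified, made-up event XML; real events carry many more fields, and the user and path are hypothetical. As explained above, the DELETE bit on its own does not distinguish a deletion from a rename.

```powershell
# Simplified, made-up 4663 event XML for illustration only.
$sampleXml = @'
<Event xmlns="http://schemas.microsoft.com/win/2004/08/events/event">
  <System><EventID>4663</EventID><EventRecordID>1001</EventRecordID></System>
  <EventData>
    <Data Name="SubjectUserName">ivanov</Data>
    <Data Name="ObjectName">D:\Shares\Secret\report.docx</Data>
    <Data Name="HandleId">0x1f4</Data>
    <Data Name="AccessMask">0x10000</Data>
  </EventData>
</Event>
'@

# Return the text of the named <Data> element.
function Get-EventField([xml]$EventXml, [string]$Name) {
    ($EventXml.Event.EventData.Data | Where-Object { $_.Name -eq $Name }).'#text'
}

# True when the DELETE access right (0x10000) is set in AccessMask.
function Test-DeleteAccess([xml]$EventXml) {
    $mask = [Convert]::ToInt32((Get-EventField $EventXml 'AccessMask'), 16)
    ($mask -band 0x10000) -ne 0
}

$file = Get-EventField $sampleXml 'ObjectName'
$isDelete = Test-DeleteAccess $sampleXml
"{0}`t{1}" -f $file, $isDelete
```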

However, I noticed that when a file is explicitly deleted, one more event is created, with ID 4660. If you examine its message body carefully, it contains the user name and a lot of other service information, but no file name. It does, however, contain a handle ID.

This event is preceded by an event with ID 4663, which contains the file name, the user name and the time, and whose operation, not surprisingly, is DELETE. Most importantly, it contains a handle ID that matches the handle ID from the event above (4660, remember? The one created when a file is explicitly deleted). So, to know exactly which files were deleted, you just need to find all events with ID 4660 and, for each of them, the preceding event with ID 4663 that contains the same handle ID.

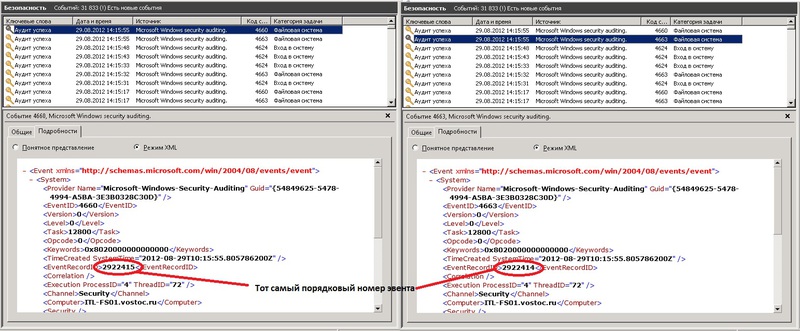

These two events are generated simultaneously when a file is deleted, but they are written one after the other: first 4663, then 4660. Their sequence numbers differ by one: the number of the 4660 event is one greater than that of the 4663 event.
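The pairing rule can be illustrated on in-memory data without touching the event log. In this sketch the record numbers, files and users are made up, and a hashtable lookup stands in for the search that the script performs with Get-WinEvent.

```powershell
# Made-up records standing in for parsed 4663 events.
$accessEvents = @(
    [pscustomobject]@{ Record = 1001; File = 'D:\Shares\Secret\a.docx'; User = 'ivanov' }  # rename, part 1
    [pscustomobject]@{ Record = 1002; File = 'D:\Shares\Secret\a.docx'; User = 'ivanov' }  # rename, part 2
    [pscustomobject]@{ Record = 1007; File = 'D:\Shares\Secret\b.xlsx'; User = 'petrov' }  # real deletion
)
# Made-up record standing in for a parsed 4660 event.
$deleteEvents = @(
    [pscustomobject]@{ Record = 1008 }
)

# Index the 4663 records by record number.
$byRecord = @{}
foreach ($e in $accessEvents) { $byRecord[$e.Record] = $e }

# For each 4660 event, take the 4663 event whose number is one lower.
$deleted = foreach ($d in $deleteEvents) {
    $prev = $d.Record - 1
    if ($byRecord.ContainsKey($prev)) { $byRecord[$prev] }
}

$deleted | ForEach-Object { "{0}`t{1}" -f $_.File, $_.User }
```

Only `b.xlsx` is reported: the two 4663 events produced by the rename have no 4660 event directly after them.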

It is this property that is used to find the required event.

That is, all events with ID 4660 are taken. Each has two properties of interest: its creation time and its sequence number.

Then, in a loop, the 4660 events are processed one at a time, and the time and sequence number of each are read.

Next, the number of the event we need, the one that contains the information about the deleted file, is stored in the $PrevEvent variable. The time window in which to search for the event with that sequence number is also determined. Because the two events are generated almost simultaneously, the search is narrowed to 2 seconds: plus or minus 1 second.

(Yes, exactly +1 second and -1 second. I cannot say why this is so; it was found experimentally. Without the extra second, some events may not be found, possibly because the two events are not always created in the same order.)

Let me say right away that searching all events for an hour by sequence number takes a very long time, because the sequence number is in the body of the event, and each event has to be parsed to read it. That is why such a short window of 2 seconds is needed (plus or minus 1 second from event 4660, remember?).
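As a possible alternative to the time window, the event log service can be asked for a single record directly with an XPath filter, which avoids parsing every event in PowerShell. This is not what the script above does; it is a sketch assuming the `-FilterXPath` parameter of Get-WinEvent, and both function names are hypothetical. I have not measured whether it is faster on a large Security log.

```powershell
# Build an XPath query that selects one 4663 event by its record number.
function New-RecordQuery([long]$RecordId) {
    "*[System[(EventID=4663) and (EventRecordID=$RecordId)]]"
}

# Windows only: fetch that single record from the Security log.
function Get-AccessEventByRecord([long]$RecordId) {
    Get-WinEvent -LogName Security -FilterXPath (New-RecordQuery $RecordId) -ErrorAction SilentlyContinue
}

# Example query for a made-up record number.
$query = New-RecordQuery 1007
$query
```

Inside the loop, `Get-AccessEventByRecord $PrevEvent` would then take the place of the Get-WinEvent call with StartTime and EndTime.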

The event with the required sequence number is searched for within this time window.

Once it is found, the filters are applied:

```powershell
| where { ([xml]$_.ToXml()).Event.EventData.Data |
    where {$_.Name -eq "ObjectName"} |
    where {$_.'#text' -notmatch '\.tmp$'} |
    where {$_.'#text' -notmatch '~lock'} |
    where {$_.'#text' -notmatch '~\$'} }
```

That is, no information is recorded about deleted temporary files (.tmp), document lock files (~lock), or MS Office temporary files (~$).
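The effect of the three filters can be checked on a handful of file names before running the script against the real log. The paths below are made up, and the patterns are written as anchored or escaped regular expressions (`\.tmp$`, `~lock`, `~\$`), which is an assumption about what the filters are meant to exclude.

```powershell
# Regular expressions for the three kinds of file to skip.
$skipPatterns = '\.tmp$', '~lock', '~\$'

# True when the path matches none of the skip patterns.
function Test-InterestingFile([string]$Path) {
    foreach ($p in $skipPatterns) {
        if ($Path -match $p) { return $false }
    }
    $true
}

# Made-up paths for illustration.
$paths = 'D:\Shares\Secret\report.docx',
         'D:\Shares\Secret\~$report.docx',
         'D:\Shares\Secret\~WRL0001.tmp',
         'D:\Shares\Secret\.~lock.report.odt#'

$kept = $paths | Where-Object { Test-InterestingFile $_ }
$kept
```

Note that in a double-quoted PowerShell string and in a regular expression the `$` character has special meaning, so the pattern for `~$` files is single-quoted and escaped.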

In the same way as before, we take the required fields from this event and append them to the $BodyL variable.

After all the events have been found, we write $BodyL to a text log file.

For the log of deleted files I use one file per month, with the month number and the year in the file name, because there are many times fewer deleted files than accessed files.
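The naming scheme and the tab-separated line format can be wrapped in two small helpers. This is a sketch: the function names are hypothetical, and the explicit timestamp format is my assumption (the script simply concatenates the DateTime value, which gives a culture-dependent string).

```powershell
# Build the monthly log name in the same way as the deletion script.
function Get-MonthlyLogName([datetime]$Date) {
    "DeletedFiles-" + $Date.Month + "-" + $Date.Year + ".txt"
}

# Format one tab-separated log line: time, file, user.
function Format-LogLine([datetime]$Time, [string]$File, [string]$User) {
    $stamp = $Time.ToString('yyyy-MM-dd HH:mm:ss', [cultureinfo]::InvariantCulture)
    $stamp + "`t" + $File + "`t" + $User
}

# Example with a made-up date, file and user.
$name = Get-MonthlyLogName ([datetime]'2012-08-27')
$line = Format-LogLine ([datetime]'2012-08-27 14:05:00') 'D:\Shares\Secret\b.xlsx' 'petrov'
$name
$line
```

A fixed timestamp format keeps the column sortable when the file is opened in a spreadsheet editor.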

As a result, instead of endlessly digging through the logs in search of the truth, you can open the log file in any spreadsheet editor and look up the data you need by user or by file.

Recommendations

You will have to choose the period over which to search for events. The longer the period, the longer the search takes. It all depends on the performance of the server. If the server is weak, start with 10 minutes and see how fast the script runs. If it takes longer than 10 minutes, either increase the period further, which may help, or, conversely, reduce it to 5 minutes.

Once you have settled on the period, add the script to Task Scheduler and set it to run every 5, 10 or 60 minutes, matching the period specified in the script. I run it every 60 minutes: $time = (get-date) - (new-timespan -min 60).
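The scheduled task can also be created from the command line with the built-in `schtasks` tool. This is a sketch: the task name and the script path are hypothetical, and the interval given in `/MO` should match the period set in the script.

```powershell
# Hypothetical task name and script path; adjust them to your environment.
# /SC MINUTE /MO 60 runs the task every 60 minutes.
# /RU SYSTEM runs it under the local SYSTEM account; if the log is written to a
# network share, that account (the computer account) needs write access there.
schtasks /Create /TN "AuditDeletedFiles" /SC MINUTE /MO 60 /RU SYSTEM `
    /TR "powershell.exe -NoProfile -ExecutionPolicy Bypass -File C:\Scripts\DeletedFiles.ps1"
```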

PS

I run both of these scripts against a 100 GB network share that, on average, 50 users actively work with every day.

Searching one hour of events for deleted files takes 10 to 15 minutes.

Searching for all accessed files takes from 3 to 10 minutes, depending on the server load.

Source: https://habr.com/ru/post/150149/
