Unified infrastructure "Cloud + physical equipment"

In this article we discuss the problems that can arise when physical equipment and cloud capacity are combined into a single infrastructure. Not everyone faces this situation: many companies host their resources entirely in a public cloud from the start, some deploy their own private clouds if resources allow, and some, not trusting cloud technologies, simply keep everything on physical servers. But there are projects that, for one reason or another, need both physical and cloud servers. Naturally, these resources must be connected by a network. Some use the Internet for this (directly or via VPN), but that is not always secure or cost-effective, since traffic between servers considerably exceeds external traffic. This can overload the external channel and, consequently, raise costs, because a wider Internet channel has to be purchased.

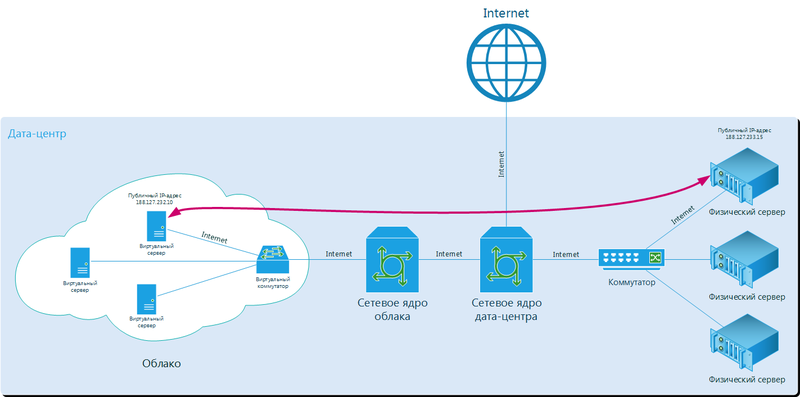

A common pattern of traffic route when connecting via the Internet between a physical and a virtual server in a single data center.

To avoid such problems, it is better to use a separate channel for the internal network. If the physical servers are located in one data center, or everything is located in one cloud, then this is not a problem. If the capacities are located both in the cloud and on physical servers, problems may arise, regardless of whether the project is located in one data center or is spaced apart in several. And if the public cloud is in one data center, and the physical equipment is in another? It's still harder.

')

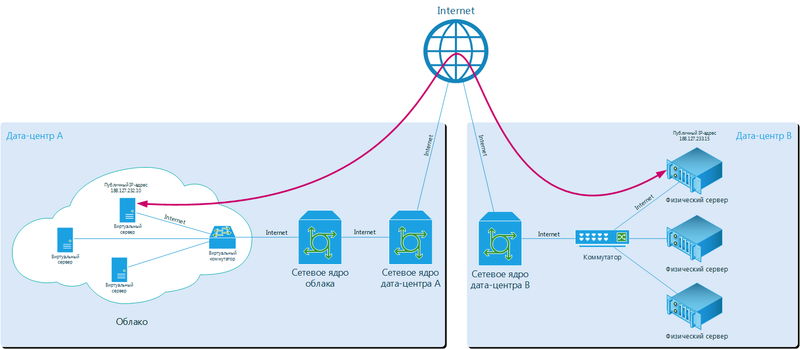

A common pattern of traffic route when connecting via the Internet between a physical and a virtual server in different data centers.

We found a solution to this problem and successfully applied it to the infrastructure of a Moscow wholesale and retail trading company. What we will discuss below.

To begin, let's determine why and in what cases it may be needed:

These are not all possible causes; we have listed the main and most common ones that we have encountered in practice.

Problems with the integration of cloud virtual servers with physical ones even in one data center are frequent. As a rule, this is due to the fact that the cloud has a separate network core operating separately from the network core of the data center. There may be several reasons for this:

If the cloud and physical equipment are in different data centers, then there are even more nuances, as in this case. But all issues can be solved if the cloud was originally designed correctly. Let's tell how we did it.

Initially, it was decided that building a separate network core under the cloud does not make sense. This was due to the fact that we wanted to maximally unify the network services for a part of the infrastructure on physical equipment in our data centers, and for project resources in the cloud. In fact, such services as: Protection against DDoS attacks, traffic balancing, VPN organization, Internet access bands, Dedicated communication channels and other services are provided in the framework of the project in the same way, be it physical equipment or a cloud.

We will not dwell on the description of the network kernel now; there is a separate post on it with photos and descriptions. We will tell only about the decision regarding the cloud.

It is clear that cloud resources are in separate racks, they have their own switches, but the core is used in common. In racks where the cloud is located, there are Cisco Catalyst 3750X series switches combined into a single stack. Four uplinks from the core are prokinto up to two switches into one of the racks. Naturally, these uplinks reserve each other, and in case of failure of one of the switches, a part of the kernel, or an uplink, the client will not notice this.

The switches in the stack serve all incoming and outgoing traffic in the project cloud, with the exception of iSCSI traffic. That is, Internet access, internal traffic between virtual servers, as well as internal traffic between virtual servers and physical equipment goes through these switches and these uplinks. Channel volumes are laid down in such a way that all traffic calmly passes without delays, and that in case of failure of one of the links, there is enough capacity for comfortable network operation. If the traffic volume approaches a certain threshold, we add uplinks, the benefit of the core capacity and the connected channels is enough in excess.

Naturally, all client traffic goes on different subnets that are isolated in different VLANs. The internal network of the project between virtual servers is completely isolated, and no one except the client has access to it. All VLANs are set up in the network core; this is the main advantage of the unified network architecture. In fact, using these VLANs, the network core can route, label, filter, etc. traffic inside each VLAN

At the same time, the virtualization system Hyper-V, which is installed on the hosts, is well aware of the separation of traffic into VLANs. The architecture is quite simple:

If the virtual server needs to receive traffic from several isolated VLANs, then several virtual network adapters are added, each of which is assigned a specific VLAN.

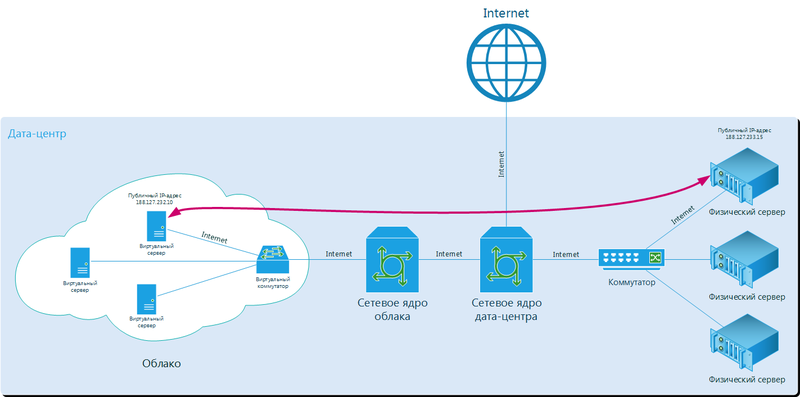

An approximate diagram of the organization of the internal network between the physical and virtual servers in this project.

How is this implemented in this project? The connection process is as follows:

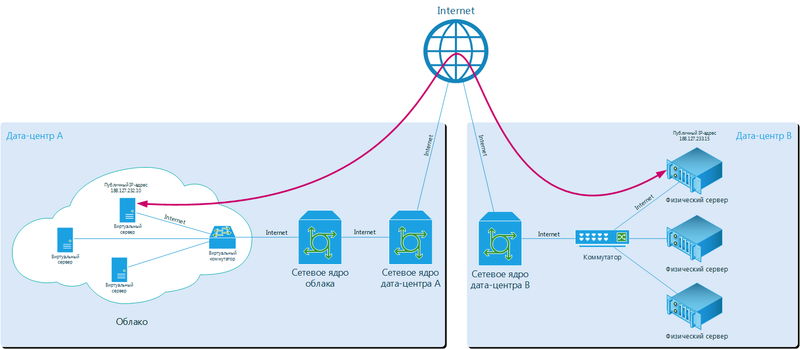

A simplified diagram of the organization of the internal network between the physical and virtual servers for this example.

These works are performed by our engineers at the request of the client. Since we have a regulation that we perform similar work only at night, having ordered a similar service today, tomorrow morning the client will receive the finished configuration.

The scheme is quite simple, and, most importantly, universal. There were no crutches either in the cloud or in the core.

As a result, the client received an infrastructure united by a single network, without the need to switch to new platforms, or to abandon physical equipment. What turned out to be much more cost effective.

In the case, if the physical equipment is located in another data center, this issue is also resolved. In this case, the cloud does not change anything: all the same additional network adapter, VLAN, get hooked. But on the part of the physical equipment you need to further organize the channel. You can organize the MPLS channel through our partner telecom operators, or you can independently agree with your service provider about the MPLS channel to our point of presence.

Thus, you can connect with a single network not just physical equipment with the cloud, but all of your sites, be it offices, data centers, mobile users, partners, etc. In fact, this allows you to organize a virtual server in the cloud and connect it to all your existing subnets.

As you can see, if you approach the solution in a complex way, you can realize any wish of the client. Naturally, one of the main issues that will arise is the cost of a decision. But this is a separate conversation. ;)

Typical traffic route between a physical and a virtual server connected via the Internet within a single data center.

To avoid such problems, it is better to use a separate channel for the internal network. If all the physical servers are in one data center, or everything is in one cloud, this is not a problem. If the capacity is split between the cloud and physical servers, problems may arise, whether the project sits in one data center or is spread across several. And if the public cloud is in one data center and the physical equipment is in another, it is harder still.

A common pattern of traffic route when connecting via the Internet between a physical and a virtual server in different data centers.

We found a solution to this problem and successfully applied it to the infrastructure of a Moscow wholesale and retail trading company. We describe it below.

To begin, let's look at why and when such a setup may be needed:

- The company has physical equipment in a data center and intends to keep using it, but capacity is running out and the project needs room to grow. The cloud is a great solution!

- The whole project runs on physical servers (rented or owned), and the company's management does not trust clouds but is ready to try one.

- There is a need or desire to keep a complete copy of a project hosted on physical servers in case of failure. Replication to the cloud is a good solution.

- There is a need or desire to store backups in the cloud.

- Sometimes a project cannot be fully virtualized, or it needs more than cloud servers can deliver. In that case, part of the infrastructure can be placed on physical servers and part in the cloud.

These are not all the possible reasons; we have listed the main and most common ones we have encountered in practice.

Problems integrating cloud virtual servers with physical ones are frequent even within one data center. As a rule, this is because the cloud has its own network core that operates separately from the network core of the data center. There may be several reasons for this:

- Legal. The cloud provider does not have its own data center and is hosted as a regular colocation client in one or more data centers. In this case, the company can sell only its cloud capacity and has no way to link its network core with the core of the data center.

- Technical. The cloud provider has its own data center, but when the cloud was built, a separate network core was created for it, and integration with the data center core was not considered. Technical constraints (different network equipment vendors, software, hardware models, etc.) make this difficult to do later.

- Organizational. The cloud provider has its own data center, but for organizational reasons the data center and the cloud are two different projects run by two independent teams. The separation of network cores may, for example, be required by the company's security policy.

If the cloud and the physical equipment are in different data centers, there are even more nuances. But all of these issues can be solved if the cloud was designed correctly from the start. Here is how we did it.

From the outset, we decided that building a separate network core for the cloud made no sense. We wanted network services to be as uniform as possible for the part of the infrastructure on physical equipment in our data centers and for project resources in the cloud. As a result, services such as DDoS protection, traffic balancing, VPN, Internet bandwidth, dedicated communication channels, and others are provided within a project in the same way, whether it runs on physical equipment or in the cloud.

We will not describe the network core here; there is a separate post about it with photos and descriptions. We will cover only the solution for the cloud.

Cloud resources sit in separate racks and have their own switches, but the core is shared. The racks that house the cloud contain Cisco Catalyst 3750X series switches combined into a single stack. Four uplinks from the core are run to two switches in one of the racks. Naturally, these uplinks back each other up, so if one of the switches, part of the core, or an uplink fails, the client will not notice.

The switches in the stack carry all incoming and outgoing traffic of the project cloud, with the exception of iSCSI traffic. That is, Internet access, internal traffic between virtual servers, and internal traffic between virtual servers and physical equipment all go through these switches and these uplinks. Channel capacity is sized so that all traffic passes without delays and so that, if one of the links fails, enough capacity remains for comfortable network operation. If the traffic volume approaches a certain threshold, we add uplinks; the core and the connected channels have capacity to spare.
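The sizing rule in this paragraph (traffic must still fit after one link fails, and uplinks are added once a threshold is approached) can be written down as a small check. This is only a sketch: the article gives neither link speeds nor the threshold, so the 10 Gbps links and the 0.7 factor used in the comments are hypothetical, as are the function names.

```python
def surviving_capacity(uplinks_gbps, failed=1):
    """Capacity left after the `failed` largest uplinks go down (worst case)."""
    ordered = sorted(uplinks_gbps)
    if failed >= len(ordered):
        return 0
    return sum(ordered[:len(ordered) - failed])


def needs_more_uplinks(uplinks_gbps, peak_gbps, threshold=0.7):
    """True when peak traffic exceeds `threshold` of the post-failure capacity.

    `threshold` is an assumed safety margin, not a value from the article.
    """
    return peak_gbps > threshold * surviving_capacity(uplinks_gbps)


# Hypothetical example: four 10 Gbps uplinks, as in a four-uplink stack.
uplinks = [10, 10, 10, 10]
comfortable = not needs_more_uplinks(uplinks, peak_gbps=18)
overloaded = needs_more_uplinks(uplinks, peak_gbps=25)
```

Measuring against the post-failure capacity rather than the total is what keeps the network comfortable when a link is actually lost.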

Naturally, each client's traffic runs on separate subnets isolated in separate VLANs. A project's internal network between virtual servers is completely isolated, and no one except the client has access to it. All VLANs are set up in the network core; this is the main advantage of the unified network architecture. With these VLANs, the network core can route, tag, filter, and otherwise handle the traffic inside each VLAN.

The Hyper-V virtualization system installed on the hosts is fully aware of the separation of traffic into VLANs. The architecture is quite simple:

- Traffic arrives at the network adapter of the physical server.

- The network adapter passes all traffic through to the Hyper-V virtual switch without regard to VLANs (the Promiscuous VLAN function).

- The Hyper-V virtual switch then separates the traffic by VLAN and hands each virtual network adapter the traffic intended for it.

- The operating system in the virtual server receives its traffic untagged through the virtual network adapter.

If a virtual server needs to receive traffic from several isolated VLANs, several virtual network adapters are added, each assigned to a specific VLAN.
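The dispatch described in the steps above can be illustrated with a toy model. It is not how Hyper-V is implemented; it only shows the idea of reading the 802.1Q tag, choosing the virtual adapters bound to that VLAN, and delivering the frame untagged. The `VirtualSwitch` class, the `tagged_frame` helper, and the MAC addresses are all made up for the illustration.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q-tagged frame


def split_vlan_tag(frame: bytes):
    """Return (vlan_id, untagged_frame); vlan_id is None for untagged frames."""
    if len(frame) < 14:
        raise ValueError("frame shorter than an Ethernet header")
    (ethertype,) = struct.unpack("!H", frame[12:14])
    if ethertype != TPID_8021Q:
        return None, frame
    if len(frame) < 18:
        raise ValueError("truncated 802.1Q tag")
    (tci,) = struct.unpack("!H", frame[14:16])
    vlan_id = tci & 0x0FFF  # low 12 bits of the TCI carry the VLAN ID
    return vlan_id, frame[:12] + frame[16:]  # drop the 4-byte tag


class VirtualSwitch:
    """Toy model: each virtual adapter is bound to exactly one VLAN."""

    def __init__(self):
        self.adapters = {}  # vlan_id -> list of per-adapter receive queues

    def attach(self, vlan_id: int):
        """Add a virtual adapter on `vlan_id` and return its receive queue."""
        queue = []
        self.adapters.setdefault(vlan_id, []).append(queue)
        return queue

    def receive(self, frame: bytes):
        """Deliver the frame, untagged, to every adapter on its VLAN."""
        vlan_id, untagged = split_vlan_tag(frame)
        for queue in self.adapters.get(vlan_id, []):
            queue.append(untagged)


def tagged_frame(vlan_id: int, payload: bytes) -> bytes:
    """Build a test frame with placeholder MACs and an IPv4 EtherType."""
    dst, src = b"\x02" * 6, b"\x04" * 6
    tag = struct.pack("!HH", TPID_8021Q, vlan_id)
    return dst + src + tag + b"\x08\x00" + payload


switch = VirtualSwitch()
nic_vlan1 = switch.attach(1)  # first virtual adapter of a guest
nic_vlan2 = switch.attach(2)  # second adapter, for another isolated VLAN
switch.receive(tagged_frame(2, b"hello"))
```

After the last call only `nic_vlan2` holds a frame, and that frame no longer carries the tag, which mirrors the guest operating system seeing untagged traffic on each adapter.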

An approximate diagram of the organization of the internal network between the physical and virtual servers in this project.

How was this implemented in this project? The connection process was as follows:

- The client was allocated two VLANs, each with its own subnet. For example, VLAN1 with 10.1.1.0/24 and VLAN2 with 10.1.2.0/24.

- A virtual network adapter connected to VLAN1 was added to the virtual server.

- The physical server was connected through a separate network port to the switch in the rack, and VLAN2 was delivered to that port.

- Routing between networks 10.1.1.0/24 in VLAN1 and 10.1.2.0/24 in VLAN2 was configured in the core (keep in mind that the servers therefore communicate over L3).

- On the virtual server, the new network adapter was assigned an IP address from the 10.1.1.0/24 subnet. If this is the only network adapter, the gateway of this subnet is also set on it. If a gateway is already set on another network adapter, a route to the 10.1.2.0/24 network is simply added.

- The same was done on the physical server, but for the 10.1.2.0/24 network.

- Done! There is now an isolated internal network between the servers, and the traffic goes through the network core.
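The gateway-or-route decision from the steps above can be sketched with Python's standard `ipaddress` module. The two subnets come from the example; the gateway addresses 10.1.1.1 and 10.1.2.1 and the `plan_adapter` function are assumptions made for the illustration, since the article does not name them.

```python
import ipaddress

VM_NET = ipaddress.ip_network("10.1.1.0/24")        # VLAN1, from the example
PHYSICAL_NET = ipaddress.ip_network("10.1.2.0/24")  # VLAN2, from the example


def plan_adapter(local_net, remote_net, gateway, has_default_gateway):
    """Describe what to configure on a newly added adapter.

    Returns ("default", gateway) when the host has no gateway yet, otherwise
    ("static", remote_net, gateway) for a route to the peer subnet only.
    """
    gateway = ipaddress.ip_address(gateway)
    if gateway not in local_net:
        raise ValueError(f"gateway {gateway} is outside {local_net}")
    if local_net.overlaps(remote_net):
        raise ValueError("subnets overlap; routing between them is ambiguous")
    if has_default_gateway:
        return ("static", remote_net, gateway)
    return ("default", gateway)


# Virtual server that already has a gateway on another adapter (assumed).
vm_plan = plan_adapter(VM_NET, PHYSICAL_NET, "10.1.1.1", has_default_gateway=True)

# Physical server whose only adapter is the new one (assumed).
physical_plan = plan_adapter(PHYSICAL_NET, VM_NET, "10.1.2.1", has_default_gateway=False)
```

In both cases the next hop is the core's address in the server's own VLAN, which is why the two servers talk to each other over L3 rather than sharing one broadcast domain.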

A simplified diagram of the organization of the internal network between the physical and virtual servers for this example.

This work is carried out by our engineers at the client's request. Because our policy is to perform such work only at night, a client who orders the service today receives the finished configuration the next morning.

The scheme is quite simple and, most importantly, universal. No workarounds were needed either in the cloud or in the core.

As a result, the client received an infrastructure united by a single network, without having to move to new platforms or give up physical equipment, which turned out to be much more cost-effective.

If the physical equipment is located in another data center, this can be solved as well. Nothing changes on the cloud side: the same additional network adapter and VLAN are set up. On the physical equipment side, however, a channel has to be organized in addition. You can arrange an MPLS channel through our partner telecom operators, or negotiate an MPLS channel to our point of presence with your own service provider.

In this way, a single network can connect not just physical equipment with the cloud but all of your sites: offices, data centers, mobile users, partners, and so on. In effect, you can set up a virtual server in the cloud and connect it to all of your existing subnets.

As you can see, with a comprehensive approach, any client wish can be fulfilled. Naturally, one of the main questions that will come up is the cost of the solution. But that is a separate conversation. ;)

Source: https://habr.com/ru/post/150093/
