Silent revolution: flash memory in data centers

Flash memory is already revolutionizing data centers: moving data to flash is the next step in the development of many centralized IT systems. Yes, it is quite expensive and has its own peculiarities, and yet the question data center administrators face today is no longer whether to use flash memory, but how and when to do it.

An earlier post on this subject already gave some introductory information, showing that the new type of drive is noticeably faster and more reliable for the workhorse servers that run heavy databases. If flash storage can be kept busy (that is, given a constant stream of read-write operations), it is much cheaper to operate than an HDD array, and you get a number of additional benefits as well.


Below are some tips on how to tell whether it is time to switch to this technology.

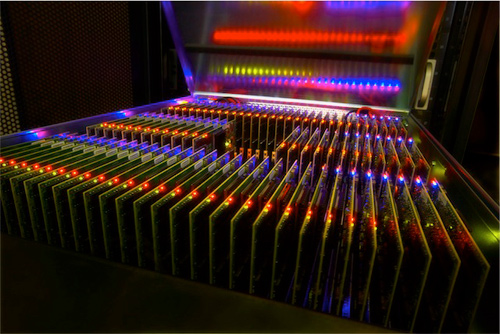

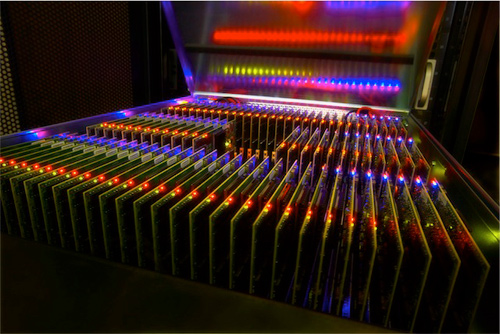

Write bandwidth of a PCI-e flash card and of the Violin VMA3205 external flash storage system


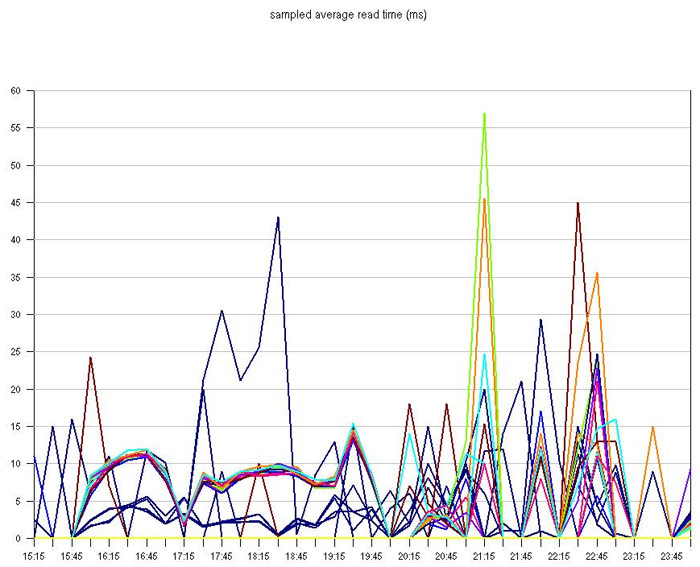

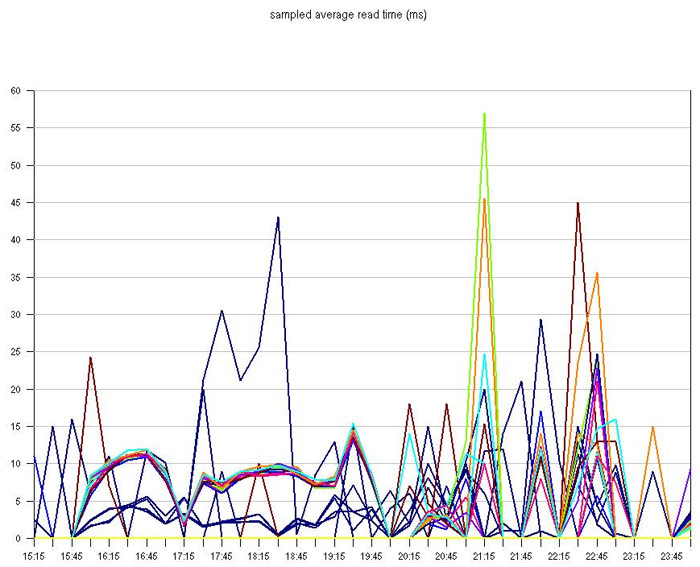


Let's start with simple facts.

- Flash memory is very expensive. Yes, storing a gigabyte of data on flash drives and flash storage systems costs several times more than on mechanical HDDs. However, for applications that load the storage with thousands of IOPS and are sensitive to the response time of the disk subsystem, flash turns out to be cheaper and more reliable. (Of course, such applications belong on specialized storage systems, not on a single SSD or PCI-e card, even one with a stated theoretical performance of hundreds of thousands of IOPS.)

- Flash memory is unreliable. Many still consider the technology immature and attribute the problems of the first prototypes to modern flash drives. In SSDs, and even more so in specialized storage systems, modern flash memory shows higher reliability than HDDs. Moreover, I believe that any storage system running serious services should be supported by its supplier or manufacturer. In that case an HDD or SSD failure does not bother you at all: replacing the drive is a routine support procedure. Many modern storage systems can report a failure to the service center on their own, and often you get a call to arrange a visit before you have even noticed the failure yourself.

- The number of write cycles is limited. This is true: SLC flash withstands roughly 100,000 program/erase cycles, MLC about 10,000. The problem is solved by spreading writes evenly across blocks, which is the controller's job: it must not allow one area to be only read while another is constantly rewritten. The mechanism responsible for this is called wear leveling. In Violin Memory systems, for example, the algorithm wears the entire storage space evenly, whereas in other systems each SSD's own controller handles wear leveling, which is less effective. In addition, part of the flash capacity (up to 30%) is reserved for remapping worn-out blocks. If a database writes to a 10 TB system around the clock, 365 days a year, at 25,000 IOPS in 4 KB blocks (about 100 MB/s), then about 1% of the write-cycle budget of an SLC system will be used up in 3 years and 4 months, and of an MLC system in 4 months.

- Flash memory is good for reading but not very good for writing. This is due to how writes are processed: before a cell can be written, it must be erased, and erasure is done not on a single cell but on a whole block of 64 to 128 KB or more. While the erase is in progress, other operations are held up for a relatively long time, measured in milliseconds. Since a block is usually larger than what a transaction needs, a lot depends on the controller's firmware and algorithms. With a single drive the process really is slow, but the picture changes when the storage system is large: the controllers can distribute the load so that erase-before-write stalls barely affect the system, and write performance can come close to read performance. If writes are grouped and written to cells sequentially, far fewer erasures are needed.

- Errors grow with the number of read cycles per block. Yes, this is a fact. Like the previous problems, it is handled by the controller, which first of all should retire worn-out cells. And even if this mechanism were to fail, RAID protection running on the storage controllers or servers is still there.

- Speed drops as the media fills up. Yes, performance is ideal while the memory is almost empty, but as data is overwritten, blocks appear in which some cells hold live data and others hold stale data. The controller then starts garbage collection: it moves the live data out of partially filled blocks and erases them. To learn the real speed of a 400 GB drive, you need to write at least 1 TB to it in different transactions and measure in practice. The two write-bandwidth graphs (a PCI-e flash card versus the Violin VMA3205 external storage system) illustrate this. Of course, the larger the storage system, the more room it has to maneuver and smooth out this effect.

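The endurance estimate from the list above can be reproduced with a few lines of arithmetic. This is a minimal sketch under stated assumptions, not a vendor formula: ideal wear leveling across the whole 10 TB, a write amplification factor of 1 (no extra internal writes from garbage collection), decimal units (1 KB = 1,000 bytes, 1 TB = 10^12 bytes) and the rated cycle counts quoted in the list. The function name `years_to_consume` is hypothetical.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # round-the-clock load, 365 days a year


def years_to_consume(fraction, rated_cycles, capacity_bytes, iops, block_bytes):
    """Years until `fraction` of the rated program/erase budget is used up.

    Assumes ideal wear leveling (every block is rewritten equally often)
    and a write amplification factor of 1.
    """
    write_rate = iops * block_bytes  # bytes written per second
    # How many times per year the whole capacity gets rewritten
    full_writes_per_year = write_rate * SECONDS_PER_YEAR / capacity_bytes
    return fraction * rated_cycles / full_writes_per_year


CAPACITY = 10 * 10**12  # 10 TB system
for name, cycles in (("SLC", 100_000), ("MLC", 10_000)):
    years = years_to_consume(0.01, cycles, CAPACITY, iops=25_000, block_bytes=4_000)
    print(f"{name}: {years:.2f} years ({years * 12:.1f} months)")
```

With these conventions the whole array is rewritten about 315 times a year, giving roughly 3.2 years for SLC and about 3.8 months for MLC, close to the figures quoted above; the small gap depends on rounding and unit conventions. Real write amplification shortens both numbers, while the reserved spare area extends usable life.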

Where to use it?

Corporate applications

This is the first good use case. Flash memory improves two key indicators that matter to both management and end users of IT systems: application availability and performance. Flash memory shows its full potential when two conditions are met:

- The application is fairly heavily loaded. Large Oracle, SAP, CRM and ERP systems and corporate portals are excellent candidates.

- External storage with redundant controllers and redundant server connections is used.

On the other hand, some see switching to flash memory as a cure-all. It is not, but it really is an effective tool for speeding up corporate applications, and it achieves this at a lower price than other solutions on the market. Before deciding, look at the load profile of your particular system. In my experience, in 70-80% of cases a slow application is either directly caused by a slow disk subsystem, or the situation can be improved substantially by speeding up the disks.

This is not always obvious. For example, when the system shows the CPU at 100%, do not automatically assume that adding CPUs will solve the problem. Dig one level deeper and see what the processor is actually busy with. Often it is waiting for input/output (IOWAIT). By speeding up the disk system, you can offload the CPU and solve the problem.
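On Linux, one way to check how much of the "busy" CPU time is really I/O wait is to sample the aggregate `cpu` line of `/proc/stat` twice and compare the iowait delta with the total. This is a minimal sketch; the helper names are hypothetical, it assumes the field order documented in proc(5), and that page itself warns that the iowait counter is only approximate, so treat the result as a hint rather than a precise measurement.

```python
import os
import time


def parse_cpu_line(line):
    """Return the first eight counters of the aggregate 'cpu' line of /proc/stat."""
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    # Order per proc(5): user, nice, system, idle, iowait, irq, softirq, steal
    return [int(x) for x in fields[1:9]]


def iowait_share(before, after):
    """Fraction of all CPU time spent in iowait between two samples."""
    deltas = [b - a for a, b in zip(before, after)]
    total = sum(deltas)
    return deltas[4] / total if total else 0.0


def sample(path="/proc/stat"):
    # The first line of /proc/stat is the aggregate over all CPUs
    with open(path) as fh:
        return parse_cpu_line(fh.readline())


if __name__ == "__main__" and os.path.exists("/proc/stat"):
    first = sample()
    time.sleep(1)
    print(f"iowait: {iowait_share(first, sample()):.1%}")
```

If this share stays high while the CPU looks saturated, faster storage is a more likely fix than more processors.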

To understand whether the disk subsystem is the problem, plot its response time. Every OS has tools that make this easy: perfmon, iostat, sar, nmon and others.
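On Linux, the response time that `iostat -x` reports as `await` comes from the `/proc/diskstats` counters: the change in total milliseconds spent on reads and writes divided by the change in completed reads and writes. Below is a minimal sketch of that calculation, assuming the field layout described in the kernel's iostats documentation; the helper names are hypothetical. Sampling it periodically and plotting the values gives the kind of response-time graph discussed here.

```python
import os
import time


def parse_diskstats(text):
    """Map device -> (reads, ms_reading, writes, ms_writing) from /proc/diskstats text."""
    stats = {}
    for line in text.splitlines():
        f = line.split()
        if len(f) < 14:
            continue  # not a full per-device record
        # f[0..2] = major, minor, name; the counters follow in iostats order
        stats[f[2]] = (int(f[3]), int(f[6]), int(f[7]), int(f[10]))
    return stats


def await_ms(before, after):
    """Average ms per completed I/O between two samples (iostat's 'await')."""
    ios = (after[0] - before[0]) + (after[2] - before[2])
    # Total time spent by all reads and writes, summed over requests
    io_ms = (after[1] - before[1]) + (after[3] - before[3])
    return io_ms / ios if ios else 0.0


if __name__ == "__main__" and os.path.exists("/proc/diskstats"):
    def snapshot():
        with open("/proc/diskstats") as fh:
            return parse_diskstats(fh.read())

    first = snapshot()
    time.sleep(1)
    for dev, now in snapshot().items():
        if dev in first:
            print(f"{dev}: await {await_ms(first[dev], now):.1f} ms")
```

Values that regularly reach tens of milliseconds under load are the warning sign described in the next paragraph.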

As a very general rule: if users complain that the system is slow and the disk response time is in the tens of milliseconds, something needs to be done about it. For example, the response-time graph of the volumes hosting one fairly large processing system showed that at such speeds the storage served it quite poorly, especially when people went to ATMs after work or when the system ran its end-of-day processing.

It often happens that users do not complain even when the system slows down. If it slows down gradually, people simply get used to it. They cannot say whether it works well or badly: it works the way it did yesterday, and that is what matters. Users start complaining when everything was fine and suddenly became bad. This applies to many corporate applications that accumulate databases, order and customer information, and so on. The same logic works in reverse: a sudden improvement gets noticed too. That is why, if there is a fast and practical way to improve the user experience, the IT specialist looks like a wizard in the eyes of management. In this respect our profession resembles everyday plumbing: it is easy to get noticed when something has gone wrong, and very hard to get noticed for making something better.

Virtualization

The second use case for flash storage is virtualization, which almost everyone has nowadays. Most companies first virtualize servers, then workstations, and then move to the "cloud". In my experience, most large customers are completing the first stage, and this year has seen strong growth in workstation virtualization projects. But, as we have seen with several customers, when a huge number of workloads is consolidated onto a small amount of hardware, storage often becomes the bottleneck.

If you try to put hundreds of machines in the data center onto one large storage system (which often can only be scaled vertically, rather than by installing dozens more systems), it often becomes the component that determines the speed of the entire system. This is very noticeable in workstation virtualization projects: problems typically arise at the start of the working day, when everyone arrives, at lunchtime and at the end of the day. If a computer used to boot in 5 minutes and now takes 15, while the user is being told that this is the most advanced technology, his displeasure is understandable. Management is unhappy too: tens or hundreds of thousands of dollars were spent, and by all appearances things only got worse. Here, again, you can play the role of the good wizard.

One point should be made right away: objectively, storage capacity suitable for virtualization is much more expensive than ordinary hard drives in servers. Simply taking each user's disk volume and copying it to a large, high-quality storage system will not work; the space has to be optimized somehow.

A popular optimization technique when moving to shared storage is the so-called "golden image". For example, when you need 1,000 computers running Windows 7, you do not have to install the OS 1,000 times and fill several terabytes with operating-system files. The virtualization system creates a single "golden image" of the operating system. All users read from it, and only the files that differ from the image are stored on their virtual machines. Naturally, this means a huge number of read operations hit a small area of disk space. If the reference image changes significantly (for example, when patches are installed), thousands of workstations are updated, which also puts a heavy load on the storage system. Flash memory helps to get through such moments relatively easily and without forced pauses in work.
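The golden-image idea can be sketched as a copy-on-write overlay: every virtual desktop reads from one shared base image and stores only the blocks it has changed. The classes below are a toy model with hypothetical names (`GoldenImage`, `Desktop`), assuming block-level copy-on-write; they are not the mechanism of any particular virtualization product. The point is to show both the space saving and why reads concentrate on the small shared image.

```python
class GoldenImage:
    """Read-only base image shared by all desktops (block number -> data)."""

    def __init__(self, blocks):
        self.blocks = dict(blocks)
        self.reads = 0  # how many reads landed on the shared image


class Desktop:
    """A virtual desktop: a private delta layered over the shared golden image."""

    def __init__(self, base):
        self.base = base
        self.delta = {}  # only the blocks this desktop has written

    def read(self, block):
        if block in self.delta:
            return self.delta[block]
        self.base.reads += 1  # unchanged blocks are served by the shared image
        return self.base.blocks[block]

    def write(self, block, data):
        # Copy-on-write: the golden image itself is never modified
        self.delta[block] = data


base = GoldenImage({n: f"os-block-{n}" for n in range(100)})
desktops = [Desktop(base) for _ in range(1000)]
for d in desktops:
    for n in range(100):
        d.read(n)  # boot: every desktop reads the whole OS image
desktops[0].write(5, "user-data")

stored = len(base.blocks) + sum(len(d.delta) for d in desktops)
print(stored, base.reads)
```

In this toy run 1,000 desktops store 101 blocks instead of 100,000, while the single shared image absorbs 100,000 reads: exactly the concentrated read load that flash handles well.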

Savings: bonuses

Above, I already gave an example of 100% CPU load caused by waiting for read-write operations. If you reduce the time the processor spends idle waiting for the disk subsystem, you may need fewer processors for the main workload. This can help postpone a server upgrade.

In addition, most corporate software, such as Oracle, SAP, VMware and so on, is licensed either per physical processor or per core. So a customer scaling horizontally pays several tens of thousands for a processor (in high-end servers the prices really are that high), and then pays even more for the software. Speeding up the storage, which gives no less of an effect, requires no extra payments to the software vendors.
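The licensing argument boils down to simple arithmetic. The sketch below compares the two ways of buying headroom under the assumption stated above, that both routes give the same performance gain. The function names and every number in the usage line are hypothetical placeholders, not real price lists; actual licensing terms (per-socket vs. per-core, core factors, support fees) vary by vendor.

```python
def cost_of_cpu_route(extra_cores, hardware_per_core, license_per_core):
    """Adding cores: each new core costs hardware plus a per-core software licence."""
    return extra_cores * (hardware_per_core + license_per_core)


def cheaper_route(extra_cores, hardware_per_core, license_per_core, storage_price):
    """Compare with accelerating storage, which adds no licensed cores.

    Returns the cheaper option and how much it saves.
    Assumes both routes deliver the same performance gain.
    """
    cpu = cost_of_cpu_route(extra_cores, hardware_per_core, license_per_core)
    if storage_price < cpu:
        return ("storage", cpu - storage_price)
    return ("cpu", storage_price - cpu)


# Hypothetical placeholder figures, for illustration only
print(cheaper_route(extra_cores=8, hardware_per_core=3_000,
                    license_per_core=20_000, storage_price=120_000))
```

The key term is `license_per_core`: it multiplies with every added core on the CPU route but never appears on the storage route.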

Summary

If you have a heavily loaded system, analyze how well the existing storage system copes with it. If the storage needs optimizing, you should at least try flash. Like any new technology, flash is surrounded by superstitions, uncertainty about whether it suits a particular case, and perfectly understandable conservatism. Most often the decision can only be made in practice. Just try it: ask a friendly vendor or system integrator for a flash system, or several SSDs for your array, to test; measure the results and evaluate how much you really need it.

A Violin Memory demo array will be arriving at our site soon, and a small queue is already forming. If you are interested (and you have workloads that require high-performance storage), you can write to me at dd@croc.ru.

Source: https://habr.com/ru/post/149710/
