The other side of the moon

When writing applications, memory consumption and responsiveness (speed) are among the most important concerns.


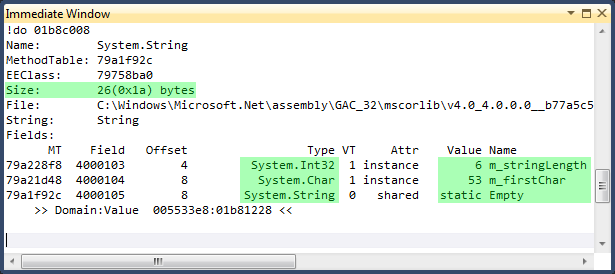

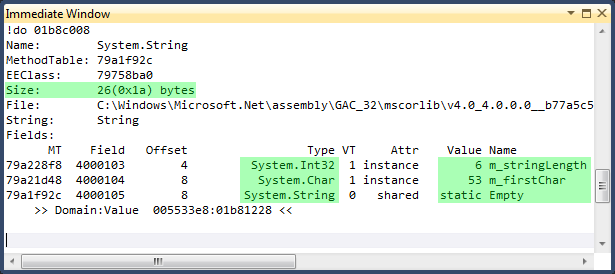


The CLR also prepares the CER ahead of time, during initialization, so that it works even when memory is low.

It is recommended not to use a CER for large regions of code, since such code is subject to a number of restrictions: no boxing, no virtual method calls, no method calls through reflection, no Monitor.Enter, and so on.

But let's not go deeper into this; let's see how to switch LatencyMode safely.
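The listing that belonged here is not available, so what follows is only a minimal sketch and not the original code. It assumes that remembering the previous mode and restoring it in a `finally` block is sufficient, and it deliberately leaves out the CER preparation discussed above. The names `LatencyScope` and `RunWithLatencyMode` are hypothetical.

```csharp
using System;
using System.Runtime;

static class LatencyScope
{
    // Runs 'work' under the requested latency mode and always restores
    // the mode that was active before the call, even if 'work' throws.
    public static T RunWithLatencyMode<T>(GCLatencyMode mode, Func<T> work)
    {
        GCLatencyMode previous = GCSettings.LatencyMode;
        try
        {
            GCSettings.LatencyMode = mode;
            return work();
        }
        finally
        {
            GCSettings.LatencyMode = previous;
        }
    }

    static void Main()
    {
        // Batch is used here only because it is accepted by both GC flavors;
        // pass GCLatencyMode.LowLatency for a short latency-critical section.
        int sum = RunWithLatencyMode(GCLatencyMode.Batch, () =>
        {
            int s = 0;
            for (int i = 1; i <= 10; i++) s += i;
            return s;
        });
        Console.WriteLine(sum);                      // 55
        Console.WriteLine(GCSettings.LatencyMode);   // the mode that was active before the call
    }
}
```

Keeping the low-latency section as short as possible limits the time during which full collections are postponed.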

Well, we have covered the main part of how the GC works in .NET. No questions so far?

Really none? Nothing seemed off?

Hmm... weren't you bothered that new objects cannot be allocated in the ephemeral generations?

The .NET team was :)

Now a new Background GC is available for Workstation mode (and, starting with .NET 4.5, for Server mode as well).

It was created to reduce the delays caused by Full-GC, Gen2 collections in particular.

Background GC is the same Concurrent GC with one exception: during a Full-GC, the ephemeral generations are not blocked for the allocation of new objects.

You will agree that processing Gen2 and the LOH is very expensive. And blocking Gen0 and Gen1, i.e. the normal operation of the application, can cause delays (in certain situations).

Another problem the new GC addresses is the postponement of new allocations when the managed heap reaches its size limit (16 MB on a desktop, 64 MB on a server).

Now, to prevent this situation, not only a background thread runs for Gen2 but also a foreground thread (yes, there is a Foreground GC as well). It marks dead objects in the ephemeral generations and merges the live ephemeral generations into Gen2 (because merging is a less expensive operation than copying), handing them over to the background thread for processing and thereby allowing memory to be allocated for new objects (recall that in Background GC, Gen0 and Gen1 are not blocked while the GC is working on Gen2).

The reduction in delays can be seen in the chart below:

One of the most interesting and unusual features of the CLR, and of C# in particular, is manual memory management, i.e. working with pointers.

Strictly speaking, this is the third kind of type in .NET: the pointer type. It is a DWORD holding the address of a specific value type instance. That is, reference types are not available to us here.

But we can also work with unmanaged code.

For that purpose there is the System.Runtime.InteropServices.GCHandle structure: a handle with a fixed address that provides access to a managed object from unmanaged memory.

For GCHandle, the CLR maintains a separate table per AppDomain.

A GCHandle is destroyed when GCHandle.Free() is called or when the AppDomain is unloaded.

To create one, use the GCHandle.Alloc() method.

The following allocation modes are available:

Learn more about GCHandle on MSDN.

When might you need to work with memory directly, you ask?

For example, to copy an array of bytes.
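As an illustration of both GCHandle and the byte-copy scenario, here is a minimal sketch that pins the source array and copies it through its raw address. It is not the article's own listing; the names `PinnedCopyDemo` and `CopyViaPinnedHandle` are hypothetical. In everyday code `Buffer.BlockCopy` would be the simpler choice, and the pinned handle is used here only to show the mechanism.

```csharp
using System;
using System.Runtime.InteropServices;

static class PinnedCopyDemo
{
    // Copies 'source' into a new array by pinning it and reading from its address.
    public static byte[] CopyViaPinnedHandle(byte[] source)
    {
        if (source == null) throw new ArgumentNullException("source");

        byte[] destination = new byte[source.Length];

        // Pinned: the GC must not move the array while the handle is alive.
        GCHandle handle = GCHandle.Alloc(source, GCHandleType.Pinned);
        try
        {
            IntPtr address = handle.AddrOfPinnedObject();
            Marshal.Copy(address, destination, 0, source.Length);
        }
        finally
        {
            // Always release the handle, otherwise the array stays pinned.
            handle.Free();
        }
        return destination;
    }

    static void Main()
    {
        byte[] copy = CopyViaPinnedHandle(new byte[] { 1, 2, 3 });
        Console.WriteLine(string.Join(", ", copy));   // 1, 2, 3
    }
}
```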


Thanks for your attention!

The garbage collector is commonly considered a black box whose behavior cannot be predicted.

It is also said that the GC in .NET is practically not configurable, and that the sources of the .NET Framework classes, the CLR, the GC, and so on cannot be inspected.


And I say: not so!

In this article we will consider:

- how objects are laid out in memory

- CLR 4.5 Background Server GC

- configuring the garbage collector correctly

- upgrading applications to .NET 4.0+ effectively

- proper manual memory management

▌ How objects are laid out in memory

I have already written about determining the size of CLR objects. Rather than retell that article, let's just recall the main points.

For variables of reference types, the CIL instruction newobj (or, for example, the new operator in C#) places a fixed-size value on the stack (4 bytes on x86, a DWORD) containing the address of an object instance created in the managed heap (remember that the managed heap is divided into the Small Object Heap and the Large Object Heap; more on this in the section on the GC). In C++ this value is called a pointer to an object; in the .NET world it is called a reference to an object.

The reference lives on the stack while a method executes, or in a field of a class.

You cannot create an object in a vacuum, without creating a reference to it.

To avoid speculating about object sizes, running tests with SOS (Son of Strike), measuring GC.GetTotalMemory, and so on, just look at the CLR sources, or rather at the Shared Source Common Language Infrastructure 2.0, which is a kind of research project.

Each type has its own MethodTable, and all instances of the same type refer to the same MethodTable. This table stores information about the type itself (interface, abstract class, etc.).

Each object contains two additional fields: the object header, which stores the index of a SyncTableEntry (syncblk entry), and the Method Table Pointer (TypeHandle).

SyncTableEntry is a structure that stores a reference to the CLR object and a reference to the SyncBlock itself.

SyncBlock is a data structure that stores a hash code for any object.

"For any" means that the CLR initializes a certain number of SyncBlocks in advance. Then, when GetHashCode() or Monitor.Enter() is called, the runtime simply inserts a pointer to a ready-made SyncBlock into the object's header, computing the hash code along the way.

This is done by calling the GetSyncBlock method (see the file %folder with the archive%\sscli20\clr\src\vm\syncblk.cpp). In the body of the method we can see the following code:

```cpp
else if ((bits & BIT_SBLK_IS_HASHCODE) != 0)
{
    DWORD hashCode = bits & MASK_HASHCODE;

    syncBlock->SetHashCode(hashCode);
}
```

The System.Object.GetHashCode method relies on the SyncBlock structure by calling the SyncBlock::GetHashCode method.
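From managed code the visible consequence is that an object's identity hash code stays the same for its whole lifetime, even if the GC moves the object. The sketch below only observes that behavior through `RuntimeHelpers.GetHashCode`; it does not show where the runtime stores the value. The names `HashCodeDemo` and `IsIdentityHashStable` are hypothetical.

```csharp
using System;
using System.Runtime.CompilerServices;

static class HashCodeDemo
{
    // Returns true when the identity hash code of 'o' is the same
    // before and after a forced garbage collection.
    public static bool IsIdentityHashStable(object o)
    {
        int first = RuntimeHelpers.GetHashCode(o);
        GC.Collect();   // the object may be moved; its hash code must survive
        int second = RuntimeHelpers.GetHashCode(o);
        return first == second;
    }

    static void Main()
    {
        Console.WriteLine(IsIdentityHashStable(new object()));
    }
}
```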

The initial syncblk value is 0 for CLR 2.0, but since CLR 4.0 the value is -1.

When you call Monitor.Exit(), syncblk becomes -1 again.

I would also like to note that the SyncBlock array is stored in separate memory that the GC does not manage.

How so, you ask?

The answer is simple: weak references. The CLR creates a weak reference to an entry in the SyncBlock array. When the CLR object dies, the SyncBlock is updated.

The implementation of the Monitor.Enter() method depends on the platform and on the JIT itself. The alias for this method in the SSCLI source is JIT_MonEnter.

Returning to object layout and sizes, recall that any object instance (an empty class) takes up at least 12 bytes on x86 and 24 bytes on x64.
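As a quick arithmetic check, both figures equal three pointer-sized words. The sketch below assumes that the object header is padded to pointer size on 64-bit platforms and that the minimum object reserves one pointer-sized slot besides the MethodTable pointer. That padding is an assumption of this sketch, and `ObjectSizeSketch` and `MinObjectSize` are hypothetical names.

```csharp
using System;

static class ObjectSizeSketch
{
    // Minimum object size = header + MethodTable pointer + one pointer-sized slot.
    // Assumption: the header occupies a full pointer-sized word.
    public static int MinObjectSize(int pointerSize)
    {
        int headerSize = pointerSize;          // assumed: padded to pointer size
        return 2 * pointerSize + headerSize;
    }

    static void Main()
    {
        Console.WriteLine("x86: " + MinObjectSize(4));   // x86: 12
        Console.WriteLine("x64: " + MinObjectSize(8));   // x64: 24
        Console.WriteLine("this process: " + MinObjectSize(IntPtr.Size));
    }
}
```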

You can verify this without running SOS.

Go to the file %folder with the archive%\sscli20\clr\src\vm\object.h:

```cpp
#define MIN_OBJECT_SIZE     (2*sizeof(BYTE*) + sizeof(ObjHeader))

class Object
{
    protected:
        MethodTable*    m_pMethTab;
};

class ObjHeader
{
    private:
        DWORD m_SyncBlockValue;      // the Index and the Bits
};
```

In the comments to the article about the size of CLR objects I was accused, without any evidence, of calculating the size of System.String inaccurately.

However, I trust the numbers and ... the source code!

System.String in .NET 4.0 consists of the following members:

- m_firstChar

- m_stringLength

- Empty

We do not count Empty, since it is a static field that holds the empty string.

m_stringLength specifies the length of the string.

m_firstChar effectively serves as a pointer (!!!) to the beginning of the array of Unicode characters rather than being just the first character: it is declared as a char, but the code works with its address.

No magic is used here: the CLR simply computes an offset.
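The same "base address plus offset" idea can be observed from C# with a `fixed` statement, which yields a pointer to the first character of a string. This is only a minimal sketch; it has to be compiled with unsafe code enabled, and the names `StringBufferDemo` and `CharAt` are hypothetical.

```csharp
using System;

static class StringBufferDemo
{
    // Reads one character through a raw pointer to the string's buffer.
    public static unsafe char CharAt(string s, int index)
    {
        if (s == null) throw new ArgumentNullException("s");
        if (index < 0 || index >= s.Length) throw new ArgumentOutOfRangeException("index");

        fixed (char* p = s)        // p points at the first character
        {
            return *(p + index);   // base address plus offset
        }
    }

    static void Main()
    {
        Console.WriteLine(CharAt("moon", 2));   // o
    }
}
```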

To make sure of this, open %folder with the archive%\sscli20\clr\src\vm\object.h again.

At the very beginning of the file we see this comment:

```cpp
/*
 * StringObject - String objects are specialized objects for string
 *     storage/retrieval for higher performance
 */
```

This is the internal storage structure of string data.

Next we find the class StringObject and its method GetBuffer().

```cpp
WCHAR* GetBuffer()
{
    LEAF_CONTRACT;
    _ASSERTE(this);
    return (WCHAR*)( PTR_HOST_TO_TADDR(this) + offsetof(StringObject, m_Characters) );
}
```

So the buffer (that is, the array of characters) is simply computed by offset.

But what about System.String itself?

Open the file %folder with the archive%\sscli20\clr\src\bcl\system\string.cs

We see the following lines:

```csharp
//NOTE NOTE NOTE NOTE
//These fields map directly onto the fields in an EE StringObject.  See object.h for the layout.
//
[NonSerialized]private int  m_stringLength;
[NonSerialized]private char m_firstChar;
```

However, System.String relies on COMString, which implements the constructor itself as well as many methods (PadLeft, etc.).

To match the framework method names to their internal C++ implementations, I advise you to look at %folder with the archive%\sscli20\clr\src\vm\ecall.cpp

Finally, to make sure that m_firstChar is used as a pointer, consider, for example, part of the code of the Join method:

```csharp
fixed (char* ptr = &text.m_firstChar)
{
    UnSafeCharBuffer unSafeCharBuffer = new UnSafeCharBuffer(ptr, num);
    unSafeCharBuffer.AppendString(value[startIndex]);
    for (int j = startIndex + 1; j <= num2; j++)
    {
        unSafeCharBuffer.AppendString(separator);
        unSafeCharBuffer.AppendString(value[j]);
    }
}
```

A slightly different version of the calculation (but with the same results) is given by the famous Jon Skeet.

Before moving on, I would like to recall how the stack works.

A stack frame is a container created by the runtime on every method call. It stores all the data needed to complete the call (addresses of local variables, parameters, etc.).

Thus, the chain of calls forms a LIFO container made up of such frames. When the call to the current method completes, its frame is cleared and destroyed, and control returns to the caller.

As I wrote above, for variables of reference types a fixed-size value is placed on the stack (4 bytes on x86, a DWORD), containing the address of an object instance created in the managed heap.

By default, instances of primitive types that are not involved in boxing are placed on the stack.

However, with some optimizations, the JIT can place variable values directly in processor registers, bypassing RAM.

Recall what a processor register is: a block of memory cells forming ultra-fast memory inside the processor, used by the processor itself and for the most part inaccessible to the programmer.

The larger the CPU cache, the better the performance you can generally get, regardless of the software platform.

▌ How the GC works

As you know, memory management (creating and destroying objects) is handled by the Garbage Collector (GC).

For a CLR application to work, the runtime immediately initializes two segments of the virtual address space: the Small Object Heap and the Large Object Heap.

A quick note: virtual memory is a logical representation of memory, not a physical one. Physical memory is allocated only as needed. Each process in a modern operating system gets a virtual address space of the maximum addressable size (4 GB on a 32-bit OS), divided into pages (the minimum page size is 4 KB on x86, IA-64 and PowerPC-64, and 8 KB on SPARC). This makes it possible to isolate the address space of one process from another and to swap memory out to disk.

To allocate memory and return it to the system, the GC uses the Win32 functions VirtualAlloc and VirtualFree.

The garbage collector in .NET is generational, i.e. the managed heap (and, accordingly, the objects in it) is divided into generations according to object lifetime.

References to objects originate from the so-called GC roots:

- stack

- static (global) objects

- finalizable objects

- unmanaged interop objects (CLR objects participating in COM / unmanaged calls)

- processor registers

- other CLR objects that hold references

There are three generations:

- Generation 0. Objects of this generation have the shortest lifetime. Gen0 usually holds temporary variables created in method bodies.

- Generation 1. Objects of this generation are also short-lived. It holds objects with an intermediate lifetime, on their way from Gen0 to Gen2.

- Generation 2. This generation holds the longest-lived objects. Also, objects larger than 85,000 bytes automatically go to the Large Object Heap and are treated as Gen2.
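A small sketch of how promotion between generations can be observed with `GC.GetGeneration`. A surviving object typically moves 0, 1, 2 across two forced collections, but the exact sequence is up to the runtime, so the code only prints what it sees. The names `GenerationDemo` and `ObserveGenerations` are hypothetical.

```csharp
using System;

static class GenerationDemo
{
    // Records the generation of one live object before and after
    // two forced collections.
    public static int[] ObserveGenerations()
    {
        object o = new object();
        int[] seen = new int[3];

        seen[0] = GC.GetGeneration(o);
        GC.Collect();
        seen[1] = GC.GetGeneration(o);
        GC.Collect();
        seen[2] = GC.GetGeneration(o);

        GC.KeepAlive(o);   // keep the object reachable until this point
        return seen;
    }

    static void Main()
    {
        Console.WriteLine("Max generation: " + GC.MaxGeneration);
        Console.WriteLine("Observed: " + string.Join(", ", ObserveGenerations()));
    }
}
```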

The initial size of each segment (SOH, LOH) varies and depends on the specific machine (usually 16 MB on a desktop and 64 MB on a server). Note that this is virtual memory: the application may occupy as little as 5 MB of physical memory in total.

The LOH holds not only objects larger than 85,000 bytes, but also some types of arrays.

So an array of System.Double would be expected to land in the LOH only at about 10,600 elements (85,000 / 8 bytes). In practice, however, this already happens at 1000+ elements.
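Whether an array ended up in the LOH can be probed indirectly: LOH objects are reported as belonging to the highest generation right after allocation. In the sketch below, the 85,000-byte array is past the default threshold. The result for the 1000-element double array depends on the runtime (the early LOH placement is commonly reported for the 32-bit CLR), so it is only printed. The names `LohDemo` and `IsReportedAsGen2` are hypothetical.

```csharp
using System;

static class LohDemo
{
    // True when the runtime reports the array as belonging to the highest generation.
    public static bool IsReportedAsGen2(Array a)
    {
        return GC.GetGeneration(a) == GC.MaxGeneration;
    }

    static void Main()
    {
        byte[] small = new byte[1000];
        byte[] large = new byte[85000];       // past the default LOH threshold
        double[] doubles = new double[1000];  // only 8,000 bytes of payload

        Console.WriteLine("Pointer size: " + IntPtr.Size);
        Console.WriteLine("byte[1000]   in Gen2/LOH: " + IsReportedAsGen2(small));
        Console.WriteLine("byte[85000]  in Gen2/LOH: " + IsReportedAsGen2(large));
        Console.WriteLine("double[1000] in Gen2/LOH: " + IsReportedAsGen2(doubles));
    }
}
```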

Objects in the managed heap are placed one after another, which can lead to fragmentation when a large number of objects are removed.

To solve this problem, the CLR normally (except under manual memory management) compacts the Small Object Heap.

The process is as follows: live objects are copied into the free space (the gaps in the heap, which thereby disappear).

This minimizes memory consumption, but it costs some CPU time. That should not be a concern, however: for Gen0 and Gen1 objects the delay is only about 1 ms.

What about the Large Object Heap? It is (almost) never compacted, because that would take a lot of time and could hurt the application. This does not mean, however, that the CLR simply consumes more and more memory. During a Full-GC (Gen0, Gen1, Gen2), the runtime still returns memory to the OS by freeing dead objects in the LOH (or by compacting the SOH).

In addition, the CLR places new objects in the LOH not only one after another, as in the SOH, but also into already freed gaps, without waiting for a Full-GC.

The GC does not run deterministically, except when the GC.Collect() method is called.
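The one deterministic trigger can be observed through `GC.CollectionCount`, which reports how many times a generation has been collected so far. A minimal sketch, with hypothetical names `CollectionCountDemo` and `ForcedCollections`:

```csharp
using System;

static class CollectionCountDemo
{
    // Returns how many collections of the given generation
    // were recorded during one explicit GC.Collect() call.
    public static int ForcedCollections(int generation)
    {
        int before = GC.CollectionCount(generation);
        GC.Collect();   // forces a collection of all generations
        return GC.CollectionCount(generation) - before;
    }

    static void Main()
    {
        for (int gen = 0; gen <= GC.MaxGeneration; gen++)
        {
            Console.WriteLine("Gen" + gen + ": +" + ForcedCollections(gen));
        }
    }
}
```

Outside of diagnostics and tests, forcing collections this way is rarely a good idea, since it works against the adaptive behavior described next.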

Still, there are approximate criteria by which a collection can be predicted (keep in mind that these thresholds are approximate, that the CLR adapts to the behavior of the application, and that much depends on the type of garbage collector):

- When the size of Gen0 reaches 256 KB

- When the size of Gen1 reaches 2 MB

- When the size of Gen2 reaches 10 MB

Garbage collection also starts when system memory runs low. The CLR uses the Win32 functions CreateMemoryResourceNotification and QueryMemoryResourceNotification for this.

Another aspect of working with memory is the use of unmanaged resources.

Because unmanaged resources can be held by any object, regardless of its lifetime, and the GC is not deterministic, the finalizer exists for this purpose.

When the application starts, the CLR finds types with finalizers and excludes them from normal garbage collection (which does not mean that such objects are not assigned to generations).

After the GC finishes its work, the objects awaiting finalization are processed on a separate thread (by calling their Finalize method).

An example implementation of the Dispose pattern:

```csharp
using System;

class Foo : IDisposable
{
    private bool _disposed;

    // Finalizer: runs only if Dispose() was never called.
    ~Foo()
    {
        Dispose(false);
    }

    public void Dispose()
    {
        Dispose(true);
        // The object is already cleaned up, so skip finalization.
        GC.SuppressFinalize(this);
    }

    protected virtual void Dispose(bool disposing)
    {
        if (!_disposed)
        {
            if (disposing)
            {
                // Free managed objects
            }
            // Free unmanaged objects
            _disposed = true;
        }
    }
}
```

Now consider the GC flavors available in the .NET Framework.

Before the release of .NET 4.0, two modes were available: Server and Workstation.

Workstation mode: the GC is optimized to run on client machines. It tries not to load the processor too heavily and works with minimal delays for applications with a UI. It comes in two flavors: concurrent (parallel) and non-concurrent (synchronous).

In concurrent mode, the GC runs on a separate thread (with normal priority) for Gen2, while blocking work on the ephemeral generations (new objects cannot be allocated; all threads are suspended).

If the application has a very (!!!) large amount of free memory available, the SOH is not compacted (the GC sacrifices memory for the sake of application responsiveness).

Thus, Workstation mode is ideal for GUI applications.

If you need the server GC instead, you can enable it like this:

```xml
<configuration>
  <runtime>
    <gcServer enabled="true"/>
  </runtime>
</configuration>
```

To verify the setting, you can check the GCSettings.IsServerGC property in your code.
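A minimal sketch of such a check, which also prints the current latency mode. The names `GcSettingsDemo` and `Describe` are hypothetical.

```csharp
using System;
using System.Runtime;

static class GcSettingsDemo
{
    // Summarizes the GC flavor and latency mode of the current process.
    public static string Describe()
    {
        return string.Format(
            "Server GC: {0}, latency mode: {1}",
            GCSettings.IsServerGC,
            GCSettings.LatencyMode);
    }

    static void Main()
    {
        Console.WriteLine(Describe());
    }
}
```

Logging this line at startup is an easy way to confirm that the configuration file was actually picked up.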

To force a shutdown of Workstation Concurrent GC, use the following parameters:

<configuration> <runtime> <gcConcurrent enabled="false"/> </runtime> </configuration> By default, parallel mode is enabled for Workstation GC. However, if the processor is single core, then the GC automatically goes into synchronous mode.

Consider now Server GC.

Server GC divides the managed heap into segments, the number of which is equal to the number of logical processors, using one thread to process each of them.

Small note: the logical processor does not necessarily correspond to the physical processor. Systems with multiple physical processors (i.e., multiple sockets) and multi-core processors provide the OS with many logical processors, and the core (!!!) can also be more than 1 logical processor (for example, using Intel's hyper-threading technology).

Another key difference is the GCSettings.LatencyMode property, available since .NET Framework 3.5 SP1, which offers three modes.

By default, LatencyMode is set to Interactive for Workstation Concurrent GC and to Batch for Server GC.

There is also LowLatency, but using it can lead to an OutOfMemoryException, because in this mode a full garbage collection occurs only under high memory load. In addition, it cannot be enabled for Server GC.
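As a rough illustration of the LowLatency restriction, the sketch below switches to LowLatency only when Workstation GC is active and always restores the previous mode. The names LatencyScope and TryRunLowLatency are hypothetical, and skipping the work under Server GC is just one possible design choice, not a framework requirement.

```csharp
using System;
using System.Runtime;

// Hypothetical helper (not part of the framework).
public static class LatencyScope
{
    // Runs 'work' under GCLatencyMode.LowLatency and restores the previous mode.
    // Returns false without running 'work' when Server GC is active,
    // because LowLatency is not available there.
    public static bool TryRunLowLatency(Action work)
    {
        if (work == null) throw new ArgumentNullException("work");
        if (GCSettings.IsServerGC) return false;

        GCLatencyMode previous = GCSettings.LatencyMode;
        try
        {
            GCSettings.LatencyMode = GCLatencyMode.LowLatency;
            work();
        }
        finally
        {
            // Restore the mode even if 'work' throws.
            GCSettings.LatencyMode = previous;
        }
        return true;
    }
}
```

The low-latency region should be kept short and allocation-light, since full collections are postponed while it is active.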

What is the difference between Batch and Interactive?

Because Server GC divides the managed heap into several segments (each served by a separate logical processor), there is no longer any need for concurrent garbage collection (another thread is already running on another logical processor). This mode forcibly overrides the gcConcurrent setting: if gcConcurrent is enabled, Batch mode prevents any further concurrent garbage collection (!). Batch is equivalent to non-concurrent garbage collection on a workstation. This mode is typically used when processing large (!) volumes of data.

Keep in mind that changing the value of GCLatencyMode affects the threads that are currently running, which means it affects the runtime itself and unmanaged code.

And since threads can run on different logical processors, there is no guarantee that the GC mode switches instantly.

What if another thread wants to change this value too? What if there are 100 threads?

Do you sense a problem brewing for a multi-threaded application? And especially for the CLR: an exception may be raised in the runtime itself rather than in the application code.

For such cases there is the constrained execution region (CER), which guarantees that all exceptions, both synchronous and asynchronous, are handled.

In a block of code marked as CER, the runtime environment is prohibited from throwing some asynchronous exceptions.

For example, when Thread.Abort() is called, a thread executing inside a CER will not be interrupted until the CER-protected code has finished.

Also, the CLR prepares the CER during initialization to ensure operation even in the event of low memory.

It is recommended not to use a CER for large areas of code, since this kind of code is subject to a number of restrictions: no boxing, no calling virtual methods, no calling methods through reflection, no Monitor.Enter, and so on.

But let's not go deeper into this; instead, let's see how to switch LatencyMode safely.

var oldMode = GCSettings.LatencyMode;

// Must be called immediately before the try block it protects.
System.Runtime.CompilerServices.RuntimeHelpers.PrepareConstrainedRegions();
try
{
    GCSettings.LatencyMode = GCLatencyMode.Batch;
    // Work that should run in Batch mode goes here.
}
finally
{
    // Always restore the previous mode.
    GCSettings.LatencyMode = oldMode;
}

Well, we have now covered the main part of how the GC works in .NET. No questions so far?

Definitely not? Nothing seemed off at all?

Hmm... weren't you at all bothered by the fact that new objects cannot be allocated in the ephemeral generations?

The .NET team was :)

Now we have a new Background GC, available in Workstation mode (and, starting with .NET 4.5, in Server mode as well).

It was created to reduce the delays caused by full GCs, Gen2 collections in particular.

Background GC is the same Concurrent GC with one exception: during a full GC, the ephemeral generations are not blocked for the allocation of new objects.

You will agree that processing Gen2 and the LOH is very expensive. And blocking Gen0 and Gen1, i.e. the normal operation of the application, can cause delays (in certain situations).
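To make the distinction between the ephemeral generations (Gen0, Gen1) and Gen2 more tangible, here is a small sketch that records which generation a live object belongs to before and after forced collections. The names GenerationDemo and TrackGenerations are hypothetical; forcing collections with GC.Collect() is done here purely for demonstration and is not something the article recommends for production code.

```csharp
using System;

// Hypothetical demo class (not part of the framework).
public static class GenerationDemo
{
    // Returns the generation of a rooted object: right after allocation,
    // after one forced collection, and after a second forced collection.
    public static int[] TrackGenerations()
    {
        object survivor = new object();
        int[] seen = new int[3];

        seen[0] = GC.GetGeneration(survivor);

        GC.Collect();
        seen[1] = GC.GetGeneration(survivor);

        GC.Collect();
        seen[2] = GC.GetGeneration(survivor);

        // Keep the object rooted until this point so it survives both collections.
        GC.KeepAlive(survivor);
        return seen;
    }
}
```

An object that survives collections is typically promoted toward Gen2, which is why long-lived objects end up in the generation that is most expensive to collect.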

Another issue addressed by the new GC is the delay in allocating new objects when the managed heap reaches its size limit (16 MB on desktop, 64 MB on server).

Now, to prevent this situation, not only does a background thread run for Gen2, but a foreground thread runs as well (yes, there is also a Foreground GC). It marks dead objects in the ephemeral generations and merges the current ephemeral generations with Gen2 (because merging is less expensive than copying), handing them over to the background thread for processing and thereby allowing memory to be allocated for new objects (recall that in Background GC, Gen0 and Gen1 are not blocked while the GC is working on Gen2).

The reduction in the number of delays can be seen in the chart below:

▌ Manual memory management

One of the most interesting and unusual features of the CLR and, in particular, C# is manual memory management, i.e. working with pointers.

Strictly speaking, this is the third kind of type in .NET: the pointer type. It is a DWORD address of a specific instance of some value type. That is, reference types are not available to us.

But we can also work with unmanaged code.

For this purpose there is the System.Runtime.InteropServices.GCHandle structure, which provides access to a managed object from unmanaged memory (and, for pinned handles, a fixed address).

For GCHandle, the CLR uses a separate table for each AppDomain.

A GCHandle is destroyed when GCHandle.Free() is called or when the AppDomain is unloaded.

To create one, use the GCHandle.Alloc() method.

The following allocation modes are available:

- Normal

- Weak

- WeakTrackResurrection

- Pinned

Learn more about GCHandle on MSDN.
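As an illustration of the Pinned mode, the following sketch pins a byte array, reads its first element through the raw address, and then frees the handle. The names PinnedBufferDemo and ReadFirstByteViaPinnedAddress are hypothetical; GCHandle.Alloc, AddrOfPinnedObject, Free, and Marshal.ReadByte are the standard framework calls.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical demo class (not part of the framework).
public static class PinnedBufferDemo
{
    // Pins 'data', reads its first byte through the raw address,
    // then releases the handle so the GC may move the array again.
    public static byte ReadFirstByteViaPinnedAddress(byte[] data)
    {
        if (data == null || data.Length == 0)
            throw new ArgumentException("data must contain at least one byte", "data");

        GCHandle handle = GCHandle.Alloc(data, GCHandleType.Pinned);
        try
        {
            // For an array, this is the address of the first element.
            IntPtr address = handle.AddrOfPinnedObject();
            return Marshal.ReadByte(address);
        }
        finally
        {
            // A handle that is never freed keeps the object pinned and alive.
            handle.Free();
        }
    }
}
```

In real interop code the address would usually be passed to an unmanaged function instead of being read back with Marshal; the try/finally around Free() is the part worth copying.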

When might you need to work with memory directly, you ask?

For example, to copy an array of bytes.

static unsafe void Copy(byte[] source, int sourceOffset, byte[] target, int targetOffset, int count)
{
    fixed (byte* pSource = source, pTarget = target)
    {
        // Set the starting points in source and target for the copying.
        byte* ps = pSource + sourceOffset;
        byte* pt = pTarget + targetOffset;

        // Copy the specified number of bytes from source to target.
        for (int i = 0; i < count; i++)
        {
            *pt = *ps;
            pt++;
            ps++;
        }
    }
}

, GC SOH? SOH.

( .NET — ). – .

▌ || .NET 4.0+

, .NET 2.0, .NET 4.0.

[ .NET Framework Versions and Dependencies ]

.NET 1.1, , , 2.0.

.NET 3.5 CLR 2.0, 2.0 + 3.0 + 3.5. , , .. .

New in .NET 4.0:

- CLR

- GC

- Thread Pool

To run a CLR 2.0 application on CLR 4.0:

<configuration>
  <startup>
    <supportedRuntime version="v4.0" sku=".NETFramework,Version=v4.0"/>
  </startup>
</configuration>

To enable the legacy security policy:

<runtime>
  <NetFx40_LegacySecurityPolicy enabled="true"/>
</runtime>

To handle SEH exceptions (corrupted state exceptions) the legacy way:

<configuration>
  <runtime>
    <legacyCorruptedStateExceptionsPolicy enabled="true"/>
  </runtime>
</configuration>

To use the legacy v2 runtime activation policy:

<startup useLegacyV2RuntimeActivationPolicy="true">
  <supportedRuntime version="v4.0" sku=".NETFramework,Version=v4.0"/>
</startup>

, .NET 4.0.

▌ || FastCall

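The JIT, like VC++, uses a calling convention: FastCall.

With it, the first 2 parameters are passed in the ECX and EDX registers.

On x64, the registers are RCX, RDX, R8, and R9.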

?

, - ( , ).

class Program
{
    static void Main(string[] args)
    {
        int startIndex = 1;
        int endIndex = 2;
        int x = 3;
        int y = 5;
        int result = Compute(startIndex, endIndex, x, y);
        Console.WriteLine(result);
    }

    public static int Compute(int startIndex, int endIndex, int x, int y)
    {
        int result = 0;
        for (int i = startIndex; i < endIndex; i++)
        {
            result += x * startIndex + y * endIndex;
        }
        return result;
    }
}

On x86, startIndex and endIndex are passed in ECX and EDX, while the remaining parameters (x, y) are passed on the stack.

– .

CTRL + D, R opens the Registers window.

CTRL + ALT + D opens the Disassembly window.

, !

Thanks for your attention!

Source: https://habr.com/ru/post/149584/
