Software-configured networks: how do they work?

- We brought you a connection to the modern world.

- In a month we will be back with anti-depressants.

The post “How much does SDN cost?” attracted a fair share of Habr's attention. At the invitation of sergeykalenik, I will now keep a blog on Habr and, to the best of my ability, talk about domestic successes in the field of SDN technologies.

That post told the story of Nicira: how, in less than 5 years, three university professors and private investors built a startup worth $1.26 billion that, according to experts, could drastically change the balance of power in the network equipment market and possibly become a “Cisco killer” (hard to believe, but such an opinion exists).


The comments to the post contained a lot of discussion about what software-configured networks (SDN) really are and how applicable they are in practice. Broadly, the comments fall into the following groups:

- SDN is not needed, because everything already works fine

- interesting, but it is unclear what it is and why it is needed

- marketing bullshit

- the approach is purely academic, fit for university experiments, and most likely not applicable in real life

The last point of view is partly true. The very concept of the new network architecture was proposed only in 2007, as part of a doctoral thesis at Stanford University. Since then, SDN has been developed mainly in the research labs of Stanford and Berkeley, and no one has yet tried it on an industrial scale. So it is premature both to idealize SDN and to bury it.

Why did Stanford and Berkeley try to change the network architecture at all? According to the authors of the approach themselves, Martin Casado and Nick McKeown, they wanted to change or influence the following:

- networks of the traditional architecture are proprietary and closed to research and to almost any outside changes; equipment from different vendors often does not work well together

- the exponential growth of traffic, and the argument that networks of the current architecture simply cannot “digest” it at the required level of quality

- the sheer number of protocols and network stacks

The basic protocols of the TCP/IP architecture were developed in the 1970s, at the dawn of the Internet, when no one could foresee today's speeds and volumes of transmitted data. In 2010, Eric Schmidt said at the Techonomy conference: "Mankind created five exabytes of information from the birth of civilization until 2003; the same amount is now created every two days, and the pace is increasing...".

According to Cisco, over the next 5 years traffic will grow 4 times, and mobile traffic will double every year. By 2015, the number of devices on the network will be twice the population of the planet. The IPv6 address space is so large that there are 6.7 × 10²³ addresses for every square meter of the Earth's surface (in effect, this is the number of devices that could potentially be connected to networks). Today, over 35 million users are simultaneously online in Skype and over 200 million on Facebook, and 72 hours of video are uploaded to YouTube every minute. Video accounts for more than 50% of consumer traffic, and its share will keep growing. By 2015, traffic from wireless devices will exceed traffic from fixed ones. If network protocols could be designed today from a clean slate, taking all the accumulated experience into account, they would surely be completely different.
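The address-density and growth figures above can be checked with a few lines of arithmetic. This sketch assumes an Earth surface area of about 5.1 × 10¹⁴ m² (a value not given in the article) and reads "4 times over 5 years" as compound annual growth.

```python
# Total IPv6 address space: addresses are 128 bits long.
IPV6_ADDRESSES = 2 ** 128

# Assumption: Earth's total surface area is roughly 5.1e14 square meters.
EARTH_SURFACE_M2 = 5.1e14


def addresses_per_m2() -> float:
    """IPv6 addresses available per square meter of the Earth's surface."""
    return IPV6_ADDRESSES / EARTH_SURFACE_M2


def annual_growth_factor(total_factor: float, years: int) -> float:
    """Constant yearly growth factor that compounds to `total_factor` over `years`."""
    return total_factor ** (1 / years)


if __name__ == "__main__":
    print(f"{addresses_per_m2():.2e} addresses per m^2")   # about 6.67e+23
    print(f"{annual_growth_factor(4, 5):.3f}x per year")   # about 1.320, i.e. ~32% a year
    print(f"{2 ** 5}x mobile traffic after 5 years of yearly doubling")
```

So "4 times in 5 years" corresponds to roughly 32% growth per year, while yearly doubling of mobile traffic compounds to 32 times over the same period.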

For illustration, imagine that web servers had been designed in the 1970s and never changed since: as if today there were no Nginx, not even Apache, but only the 1995 IIS. Unfortunately, in the field of network protocols this is pretty much the case.

Until recently, the existing network architecture evolved by the “swallow's nest” method: whenever a problem was discovered, a new protocol that solved it was added to the TCP/IP stack. For example, with the emergence of the Integrated Services Digital Network (ISDN), which combined voice, data, and image transmission, the problem of carrying video traffic arose. In short, transmitting video over a network imposes strict quality-of-service requirements, because very high-rate traffic that tolerates no delay has to be pushed through dynamically.

The RSVP protocol solved exactly this problem: it reserves the necessary resources for such traffic and thereby provides the required quality of service. However, as it later turned out, this protocol is not flawless either and has a number of limitations, for example with respect to network scalability.
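A toy model of per-flow resource reservation, in the spirit of RSVP but not the actual protocol (there are no PATH/RESV messages and no soft-state refresh): a flow is admitted only if every link on its path still has enough unreserved bandwidth, and every link must then remember that flow. This per-flow state, which grows with the number of flows, is one commonly cited source of the scalability limits mentioned above. The `Link` class and its capacities are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Link:
    """A link with fixed capacity and per-flow reservations (hypothetical model)."""
    capacity_mbps: float
    reservations: dict[str, float] = field(default_factory=dict)  # flow id -> Mbps

    def available(self) -> float:
        return self.capacity_mbps - sum(self.reservations.values())


def reserve(path: list[Link], flow_id: str, rate_mbps: float) -> bool:
    """All-or-nothing admission: reserve on every link or on none of them."""
    if any(link.available() < rate_mbps for link in path):
        return False
    for link in path:
        link.reservations[flow_id] = rate_mbps  # state kept per flow, per hop
    return True


def release(path: list[Link], flow_id: str) -> None:
    for link in path:
        link.reservations.pop(flow_id, None)


if __name__ == "__main__":
    a, b = Link(100), Link(50)
    print(reserve([a, b], "video-1", 40))  # True
    print(reserve([a, b], "video-2", 20))  # False: link b has only 10 Mbps left
```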

Another example is the Dynamic Host Configuration Protocol (DHCP), found in every home router, which was designed for IPv4 networks. The protocol allocates an IP address for a limited period of time (the "lease time") or until the client releases the address, whichever occurs first. To some extent this eased the shortage of IP addresses in IPv4 networks, but it introduced another problem: the same IP addresses being assigned to different devices within the same network infrastructure. Today, more than 600 protocols are in active use, and that figure is far from final.
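The lease rule above (an address is held until the lease expires or the client releases it, whichever comes first) can be sketched as a small address pool. This is a simplified model, not a DHCP implementation: there is no DISCOVER/OFFER/REQUEST/ACK exchange, time is passed in explicitly, and the `LeasePool` class, network range, and lease length are hypothetical.

```python
import ipaddress
from typing import Optional


class LeasePool:
    """Simplified DHCP-style address pool (illustrative only, not a DHCP server)."""

    def __init__(self, network: str, lease_seconds: float):
        # Every usable host address of the (hypothetical) network starts out free.
        self.free = [str(ip) for ip in ipaddress.ip_network(network).hosts()]
        self.lease_seconds = lease_seconds
        self.leases: dict[str, tuple[str, float]] = {}  # client MAC -> (IP, expiry)

    def _expire(self, now: float) -> None:
        # Addresses whose lease time has run out go back to the pool.
        for mac, (ip, expiry) in list(self.leases.items()):
            if expiry <= now:
                del self.leases[mac]
                self.free.append(ip)

    def allocate(self, mac: str, now: float) -> Optional[str]:
        """Grant or renew a lease; return None if the pool is exhausted."""
        self._expire(now)
        if mac in self.leases:
            ip = self.leases[mac][0]  # renewal keeps the same address
        elif self.free:
            ip = self.free.pop(0)
        else:
            return None
        self.leases[mac] = (ip, now + self.lease_seconds)
        return ip

    def release(self, mac: str) -> None:
        """The client gives the address back before the lease expires."""
        if mac in self.leases:
            ip, _ = self.leases.pop(mac)
            self.free.append(ip)


if __name__ == "__main__":
    pool = LeasePool("192.168.0.0/30", lease_seconds=60)  # only two host addresses
    print(pool.allocate("aa:aa", now=0))   # 192.168.0.1
    print(pool.allocate("bb:bb", now=0))   # 192.168.0.2
    print(pool.allocate("cc:cc", now=10))  # None: pool exhausted
    print(pool.allocate("cc:cc", now=60))  # 192.168.0.1, once both leases expire
```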

Where did the researchers from Stanford and Berkeley start? They proposed separating the control functions from the data-forwarding functions in computer networks.

Figure 1. Processing and transferring data on an Ethernet switch

When forwarding data, an Ethernet switch looks up the destination in its switching table (Figure 1). Based on the result, the switching matrix processes the data further and passes it to the target output port.
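A minimal sketch of that lookup, assuming a classic MAC-learning switch: the switching table maps MAC addresses to output ports and is filled by learning the source address of each incoming frame, while frames for unknown destinations are flooded to all other ports. The article does not describe learning or flooding; they are standard Ethernet bridging behavior, included so the example is complete. Port numbers and addresses are hypothetical.

```python
class LearningSwitch:
    """Toy Ethernet switch: a MAC table plus the forwarding decision."""

    def __init__(self, ports: list[int]):
        self.ports = ports
        self.mac_table: dict[str, int] = {}  # MAC address -> output port

    def handle_frame(self, in_port: int, src_mac: str, dst_mac: str) -> list[int]:
        """Return the list of ports the frame is sent out of."""
        # Learn: the sender is reachable through the port the frame arrived on.
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the ingress one.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            return []  # destination is on the ingress segment: filter the frame
        return [out_port]


if __name__ == "__main__":
    sw = LearningSwitch([1, 2, 3])
    print(sw.handle_frame(1, "A", "B"))  # [2, 3]: B is unknown, flood
    print(sw.handle_frame(2, "B", "A"))  # [1]: A was learned on port 1
```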

In a conventional Ethernet switch, control and data forwarding are implemented in the same device. The control level is represented by a built-in controller; the data-forwarding level, by the switching table and the switching matrix. The controller has some intelligent functions that let it make forwarding decisions based on information about the network structure. But the decision-making cannot be controlled directly: you can only configure the controller by setting certain rule sets and priorities. This severely limits the functionality of the switch and of the network as a whole. For example, any-to-any connectivity cannot be built in such a network without a Layer 3 device, a router.

The researchers at Stanford and Berkeley proposed to separate the control level from the data-forwarding level within an approach called software-configured networks (SDN).

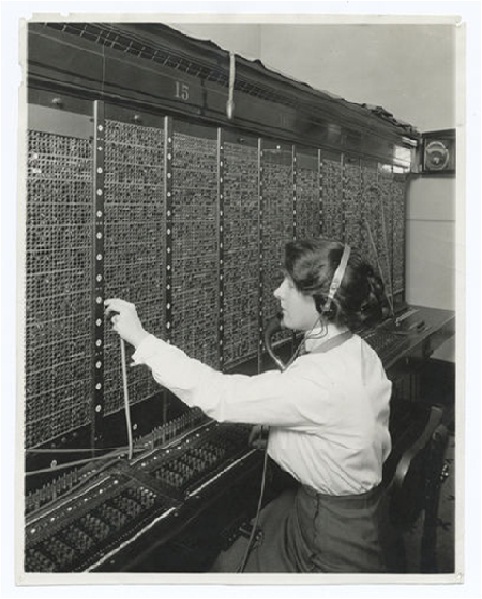

OpenFlow is the protocol at the core of this approach. Before it appeared, network architecture could be compared to telephony at the very beginning of the 20th century, when calls were switched by hand (“Young lady, put me through to Smolny!”). Today an administrator likewise configures equipment by hand according to specified parameters, and any further changes are made mainly at the hardware level.

OpenFlow makes it possible to move away from such “manual” network management, which benefits, among other things, network scalability.

An OpenFlow switch implements only the data-forwarding level. Instead of a controller, it uses a much simpler device whose task is to receive incoming data, extract the addresses and, if the destination is in the switching table, pass the data straight to the switching matrix. Otherwise, the switch sends a request to the central OpenFlow controller over a secure channel and, based on the controller's response, updates its switching table, after which the received data is processed. In other words, the equipment is no longer reconfigured by hand but by software.
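The table-hit / table-miss logic just described can be sketched as follows. This is a simplified model, not the OpenFlow wire protocol: the "secure channel" is a plain method call, matching uses only the destination address, and the `Controller` class with its static `routes` dictionary is hypothetical.

```python
from typing import Optional


class Controller:
    """Hypothetical central controller that knows where every destination lives."""

    def __init__(self, routes: dict[tuple[str, str], int]):
        self.routes = routes  # (switch id, destination) -> output port on that switch
        self.requests = 0     # number of table misses reported by switches

    def packet_in(self, switch_id: str, dst: str) -> Optional[int]:
        self.requests += 1
        return self.routes.get((switch_id, dst))


class OpenFlowSwitch:
    """Data plane only: forwarding decisions come from the controller."""

    def __init__(self, switch_id: str, controller: Controller):
        self.switch_id = switch_id
        self.controller = controller
        self.flow_table: dict[str, int] = {}  # destination -> output port

    def receive(self, dst: str) -> Optional[int]:
        port = self.flow_table.get(dst)
        if port is not None:
            return port  # table hit: forward immediately
        # Table miss: ask the controller, install its answer, then forward.
        port = self.controller.packet_in(self.switch_id, dst)
        if port is not None:
            self.flow_table[dst] = port
        return port  # None means the packet is dropped


if __name__ == "__main__":
    ctl = Controller({("s1", "10.0.0.2"): 3})
    sw = OpenFlowSwitch("s1", ctl)
    print(sw.receive("10.0.0.2"), ctl.requests)  # 3 1: miss, controller consulted
    print(sw.receive("10.0.0.2"), ctl.requests)  # 3 1: hit, no new request
```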

The central controller has accurate information about the structure and topology of the network. This makes it possible to optimize packet forwarding and, in particular, to build any-to-any connectivity at L2 without resorting to IP routing (Fig. 2). It also becomes possible to set up a data path all the way from the source to the destination. As a result, the network carries data flows rather than individual packets.
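Because the controller sees the whole topology, it can compute an end-to-end path once and install one entry per switch along it. The sketch assumes a hypothetical topology given as an adjacency map of neighbor-to-port pairs and uses breadth-first search for a minimum-hop path; real controllers may select paths by other criteria.

```python
from collections import deque
from typing import Optional

# Hypothetical topology: switch -> {neighbor switch: local output port}
Topology = dict[str, dict[str, int]]


def shortest_path(topo: Topology, src: str, dst: str) -> Optional[list[str]]:
    """Breadth-first search for a minimum-hop path between two switches."""
    prev: dict[str, Optional[str]] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            cur: Optional[str] = node
            while cur is not None:  # walk back to the source
                path.append(cur)
                cur = prev[cur]
            return path[::-1]
        for nbr in topo.get(node, {}):
            if nbr not in prev:
                prev[nbr] = node
                queue.append(nbr)
    return None


def flow_entries(topo: Topology, src: str, dst: str, host_port: int) -> dict[str, int]:
    """Output port to install on each switch so the flow travels src -> dst.

    `host_port` is the port on the last switch where the destination host sits.
    """
    path = shortest_path(topo, src, dst)
    if path is None:
        return {}
    entries = {hop: topo[hop][nxt] for hop, nxt in zip(path, path[1:])}
    entries[dst] = host_port
    return entries


if __name__ == "__main__":
    topo = {"s1": {"s2": 1, "s3": 2}, "s2": {"s1": 1, "s4": 2},
            "s3": {"s1": 1, "s4": 2}, "s4": {"s2": 1, "s3": 2}}
    print(flow_entries(topo, "s1", "s4", host_port=5))  # {'s1': 1, 's2': 2, 's4': 5}
```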

That is why, in SDN terminology, such a switching table is called a FlowTable (flow table). Each switch has its own FlowTable and fills it solely from information received from the central controller.
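In OpenFlow, a flow-table entry is more than a destination-to-port mapping: it combines match fields (an omitted field acts as a wildcard), a priority, and actions, and the highest-priority matching entry wins. The sketch models only that lookup rule; the field names and action strings are hypothetical, far fewer than in the OpenFlow specification, and counters and timeouts are left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FlowEntry:
    priority: int
    match: dict[str, str]  # fields absent from the dict are wildcards
    action: str            # e.g. "output:2" or "drop" (hypothetical strings)


class FlowTable:
    def __init__(self) -> None:
        self.entries: list[FlowEntry] = []

    def add(self, entry: FlowEntry) -> None:
        self.entries.append(entry)
        # Highest priority first, so the first match found is the winner.
        self.entries.sort(key=lambda e: e.priority, reverse=True)

    def lookup(self, packet: dict[str, str]) -> Optional[str]:
        for entry in self.entries:
            if all(packet.get(k) == v for k, v in entry.match.items()):
                return entry.action
        return None  # table miss: typically reported to the controller


if __name__ == "__main__":
    t = FlowTable()
    t.add(FlowEntry(10, {"dst_ip": "10.0.0.2"}, "output:2"))
    t.add(FlowEntry(100, {"dst_ip": "10.0.0.2", "tcp_dst": "22"}, "drop"))
    print(t.lookup({"dst_ip": "10.0.0.2", "tcp_dst": "80"}))  # output:2
    print(t.lookup({"dst_ip": "10.0.0.2", "tcp_dst": "22"}))  # drop
```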

In SDN networks, all routers and switches are brought under the control of a Network Operating System (NOS), which gives applications access to network management and continuously monitors the configuration of network devices.

Unlike the traditional meaning of the term, an operating system integrated with a network protocol stack, a NOS here means a software system that provides monitoring of, access to, and control over the resources of the entire network rather than of a single node. The NOS collects data on the state of all network resources and gives network management applications access to it. These applications control various aspects of network operation, such as building the topology, making routing decisions, load balancing, and so on.
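The division of labor described above (the NOS keeps a network-wide view of state; applications make decisions from it) can be sketched as follows. Everything here is hypothetical: the `NetworkOS` class, its link-load view, and the load-balancing application illustrate the idea and are not the API of any real network operating system.

```python
from typing import Callable


class NetworkOS:
    """Hypothetical NOS: holds network-wide state and runs applications on it."""

    def __init__(self) -> None:
        self.link_load: dict[tuple[str, str], float] = {}  # (a, b) -> utilization 0..1
        self.apps: list[Callable[["NetworkOS"], None]] = []
        self.decisions: list[str] = []

    def update_load(self, a: str, b: str, load: float) -> None:
        # In a real network this would come from switch statistics.
        self.link_load[(a, b)] = load
        for app in self.apps:
            app(self)  # let every application react to the new state


def make_balancer(paths: list[list[str]]) -> Callable[[NetworkOS], None]:
    """Hypothetical app: choose the path whose busiest link is least loaded."""
    def app(nos: NetworkOS) -> None:
        def bottleneck(path: list[str]) -> float:
            return max(nos.link_load.get((a, b), 0.0) for a, b in zip(path, path[1:]))
        best = min(paths, key=bottleneck)
        nos.decisions.append("->".join(best))
    return app


if __name__ == "__main__":
    nos = NetworkOS()
    nos.apps.append(make_balancer([["s1", "s2", "s4"], ["s1", "s3", "s4"]]))
    nos.update_load("s1", "s2", 0.9)
    print(nos.decisions[-1])  # s1->s3->s4: avoids the busy s1-s2 link
```

The point of the split is that the balancing logic never talks to individual devices; it only reads the state the NOS maintains.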

The OpenFlow protocol also addresses the problem of dependence on a particular vendor's network equipment, because SDN relies on common packet-forwarding abstractions that the network operating system uses to manage the switches.
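The vendor-independence argument rests on one layering idea: the NOS expresses forwarding rules in a common abstraction, and each device translates them into its own internal form. A minimal sketch of that layering, in which the `FlowRule` type and both "vendor" switch classes are entirely hypothetical; in a real SDN the common language between controller and switch is the OpenFlow protocol itself.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowRule:
    """Vendor-neutral forwarding rule (hypothetical common abstraction)."""
    dst: str
    out_port: int


class Switch(ABC):
    @abstractmethod
    def install(self, rule: FlowRule) -> str:
        """Translate the common rule into this device's internal form."""


class VendorASwitch(Switch):
    def install(self, rule: FlowRule) -> str:
        return f"A: fwd {rule.dst} port {rule.out_port}"


class VendorBSwitch(Switch):
    def install(self, rule: FlowRule) -> str:
        return f"B: table add dst={rule.dst} -> eth{rule.out_port}"


def push_rule(switches: list[Switch], rule: FlowRule) -> list[str]:
    """The NOS installs the same rule everywhere, regardless of vendor."""
    return [sw.install(rule) for sw in switches]


if __name__ == "__main__":
    for line in push_rule([VendorASwitch(), VendorBSwitch()], FlowRule("10.0.0.2", 3)):
        print(line)
```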

One can say that OpenFlow is a low-level protocol for programming switches. Software for SDN networks is at the very beginning of its development; in most cases it has yet to be written. In the near future, developers still have to confirm that the approach is sound and that new-generation networks can scale, since the technology has only just left the laboratories.

Even so, manufacturers are already showing huge interest. For example, Cisco added OpenFlow to its Nexus and Catalyst 37XX switches, and Juniper added an OpenFlow option to the JunOS SDK and in June of this year announced support for the technology in its EX and MX Series. Moreover, several network equipment manufacturers (NEC, Pronto, Marvell) already offer switches that implement only OpenFlow and do not support traditional protocols.

In Russia, the Applied Research Center for Computer Networks will initially test all of OpenFlow's declared capabilities. If you want to try everything hands-on, write to everizhnikova@arccn.ru; we will be glad to cooperate.

Source: https://habr.com/ru/post/149126/
