How to speed up the scheduling algorithm

Hello, dear habravchane!

Surely, many of you have come across in your work with the need to solve a planning problem in the field of scheduling theory. I would like to tell you how you can speed up the work of such a program without affecting the algorithm itself.

There is a huge number of approaches to solving these problems, implemented by using and combining various heuristic, approximate algorithms and brute force reduction algorithms. In life, all of their implementation is very dependent on the specifics of the enterprise, therefore, without going into a separate solution, consider the general steps to reduce the time to build a result.

Let's take a very vital task “Distribution of works by posts”. And also a user who makes up a set of work and sends it for planning. And he must withdraw not one or two options, but all possible intervals for the day. Those. to say whether it is possible to stick this set of work at 8.00? And at 8.30? And so on all day.

Each post is characterized by the type of work that can be performed on it. At each post, working intervals are known (i.e., time periods that are not occupied by breaks or other work). Each planned work is characterized by a standard of time and type.

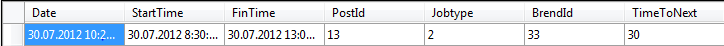

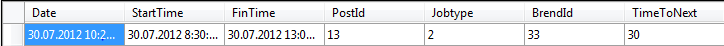

Information about free intervals from my server is dragged into such a label:

')

Jobs are stored in a list of the following structures:

When performing several works in succession of the same type, transferring them to different posts is an undesirable situation and costs some time resources. When planning, it makes sense to introduce the concept of “weight of the path”, which will determine the quality of the calculated schedule. Each transition between posts should increase the weight, each execution on a post of an unsuitable type should make the weight of the overall schedule even more heavy. Also, the weight between the time of completion of work and the reference one, the workload of the selected posts and a lot of factors at the request of the customer can influence the weight.

If the user just needs to show any possible schedule, we will use quick approximate methods and do it. But if you need to display the schedule, which had the least weight, you will have to implement the method of your search method. The duration of this method will grow exponentially depending on the amount of work. Accordingly, if the speed of building a schedule with one or two jobs will vary within hundredths of a second, with four or five within tenths, then calculating the schedule with more than twenty minor jobs may well come out in a few minutes. Of course, all figures are approximate and highly dependent on the situation, but the general principle is clear.

The first step in which you can reduce the time of the program is to check the resource of the workshop before the start of the calculation. The types of planned work and the time of each work are known, the schedule and composition of the workshop is known. Therefore, you need to take the total free time for each type of posts, without specifying where and when it is free and impose it on the total duration of the work of the respective types. If there is not enough time, then it is clear that the schedule cannot be built for the selected time, we will send a message to the user that there are not enough resources in the workshop and we will offer to choose another day.

If the resources are still found, then move on to the second step - reducing brute force. It is clear that a solution with a minimum weight is such a solution in which work of each type was performed only on one post without interruption. In life, this is expressed in the fact that the car in the service center will be processed at one post, and will not be dragged throughout the workshop. It is likely that work will have to start later, but here you have to compare the weight of the delay with the weight of the transfer of the machine and choose the lesser of two evils.

Before the start of the planning process, all the works are combined into single blocks, grouped by type, in the order of work performed. If the problem statement does not say about the dependence of tasks (i.e., that the work of i + 2 cannot be completed before i + 1 is finished), then we will sort the works by type. The duration of each block is considered to be equal to the total duration of all works included in the block.

Thus, in the simplest case, the planning will be sent not only 20 small works, but one long one. About the difference in execution time was mentioned above. All scanned paths for combined work are worth saving into some decision tree. With a negative result, you need to gradually reduce the density of the group and delete from the search area those paths that have already been added to the tree.

As soon as the path with the minimum desired weight is found, we will stop the search process and divide the group back into separate jobs, in order to provide information about what work will be done at what time.

The difficulty lies in the fact that breaks are possible in the workshop, which must be taken into account when backing up the work. In the above case, you need to make sure that the start of work does not fall into a break, and when the end of work gets into it, it will be autofocus for the next working period.

When using the above approach, one should understand that there is always a chance of failure, then the set of work will still need to be driven according to the standard scheme through planning, but in total time will be spent more. In my case, the customer decided that it was better to suffer once out of ten than all ten by a little and the green light was given to the method.

Even for purely visual acceleration, it is worth taking the whole calculation into a separate thread and pulling an event in it for each calculated time interval. The client should sign up for the event and give the user results before the full completion of the calculation. It will also be useful to display the progress bar so that the person behind the computer understands that everything is working, nothing is hanging and less nervous. And if he works with a client (for example, when writing a car to a service center), then he has already voiced the first sentences for recording in the morning.

I sincerely hope that the things described in my article will come in handy once. Thanks for attention.

Surely, many of you have come across in your work with the need to solve a planning problem in the field of scheduling theory. I would like to tell you how you can speed up the work of such a program without affecting the algorithm itself.

There is a huge number of approaches to solving these problems, implemented by using and combining various heuristic, approximate algorithms and brute force reduction algorithms. In life, all of their implementation is very dependent on the specifics of the enterprise, therefore, without going into a separate solution, consider the general steps to reduce the time to build a result.

Let's take a very vital task “Distribution of works by posts”. And also a user who makes up a set of work and sends it for planning. And he must withdraw not one or two options, but all possible intervals for the day. Those. to say whether it is possible to stick this set of work at 8.00? And at 8.30? And so on all day.

Data structure

Each post is characterized by the type of work that can be performed on it. At each post, working intervals are known (i.e., time periods that are not occupied by breaks or other work). Each planned work is characterized by a standard of time and type.

Information about free intervals from my server is dragged into such a label:

')

Jobs are stored in a list of the following structures:

private class JobTypes { public JobTypes(int jId, TimeSpan time, int type, string job_code = "0") { this.JobId = jId; this.JobRuntime = time; this.JobType = type; this.JobCode = job_code; } public int JobId { get; private set; } public TimeSpan JobRuntime { get; private set; } public int JobType { get; private set; } public string JobCode { get; set; } } When performing several works in succession of the same type, transferring them to different posts is an undesirable situation and costs some time resources. When planning, it makes sense to introduce the concept of “weight of the path”, which will determine the quality of the calculated schedule. Each transition between posts should increase the weight, each execution on a post of an unsuitable type should make the weight of the overall schedule even more heavy. Also, the weight between the time of completion of work and the reference one, the workload of the selected posts and a lot of factors at the request of the customer can influence the weight.

If the user just needs to show any possible schedule, we will use quick approximate methods and do it. But if you need to display the schedule, which had the least weight, you will have to implement the method of your search method. The duration of this method will grow exponentially depending on the amount of work. Accordingly, if the speed of building a schedule with one or two jobs will vary within hundredths of a second, with four or five within tenths, then calculating the schedule with more than twenty minor jobs may well come out in a few minutes. Of course, all figures are approximate and highly dependent on the situation, but the general principle is clear.

Verify build capability

The first step in which you can reduce the time of the program is to check the resource of the workshop before the start of the calculation. The types of planned work and the time of each work are known, the schedule and composition of the workshop is known. Therefore, you need to take the total free time for each type of posts, without specifying where and when it is free and impose it on the total duration of the work of the respective types. If there is not enough time, then it is clear that the schedule cannot be built for the selected time, we will send a message to the user that there are not enough resources in the workshop and we will offer to choose another day.

private bool CheckPeriodsAvailable(SchData sch_data) { var jTime = from s in sch_data.jobs group s by new { jType = s.JobType } into g select new { g.Key.jType, qty = g.Sum(s => s.JobRuntime.TotalMinutes) }; var pTime = (from s in (sch_data.BasicData.Tables["MonthFree"]).AsEnumerable() group s by new { jType = s.Field<int>("JobType") } into g select new { g.Key.jType, qty = g.Sum(s => (s.Field<DateTime>("FinTime") - s.Field<DateTime>("StartTime")).TotalMinutes) }).ToList(); foreach (var j in jTime) { double p = pTime.Find(item => item.jType.Equals(j.jType)).qty; if (j.qty > p) return false; } return true; } If the resources are still found, then move on to the second step - reducing brute force. It is clear that a solution with a minimum weight is such a solution in which work of each type was performed only on one post without interruption. In life, this is expressed in the fact that the car in the service center will be processed at one post, and will not be dragged throughout the workshop. It is likely that work will have to start later, but here you have to compare the weight of the delay with the weight of the transfer of the machine and choose the lesser of two evils.

Reducing the number of iterations

Before the start of the planning process, all the works are combined into single blocks, grouped by type, in the order of work performed. If the problem statement does not say about the dependence of tasks (i.e., that the work of i + 2 cannot be completed before i + 1 is finished), then we will sort the works by type. The duration of each block is considered to be equal to the total duration of all works included in the block.

Thus, in the simplest case, the planning will be sent not only 20 small works, but one long one. About the difference in execution time was mentioned above. All scanned paths for combined work are worth saving into some decision tree. With a negative result, you need to gradually reduce the density of the group and delete from the search area those paths that have already been added to the tree.

As soon as the path with the minimum desired weight is found, we will stop the search process and divide the group back into separate jobs, in order to provide information about what work will be done at what time.

private List<PeriodElems> SeparateSch(List<PeriodElems> resultList, List<JobTypes> jobs, DataTable PostsData) { int j = 0; while (j < resultList.Count) { if (((j) < jobs.Count) && (resultList[j].time > jobs[j].JobRuntime) && (resultList[j].type == jobs[j].JobType)) { PeriodElems ins_elem = resultList[j].Clone(); // , var periods = from item in (PostsData).AsEnumerable() where item.Field<int>("PostId") == resultList[j].post_id && ( (item.Field<DateTime>("StartTime") <= resultList[j].start && item.Field<DateTime>("FinTime") > resultList[j].start) || (item.Field<DateTime>("StartTime") > resultList[j].start && item.Field<DateTime>("FinTime") < resultList[j].fin) || (item.Field<DateTime>("StartTime") < resultList[j].fin && item.Field<DateTime>("FinTime") >= resultList[j].fin) ) select item; DataView OldMonthRows = periods.AsDataView<DataRow>(); if (OldMonthRows.Count == 0) { return null; } // - ok, , . TimeSpan shift_time = OldMonthRows.Count == 1 ? jobs[j].JobRuntime : GetShiftTime(jobs[j].JobRuntime, OldMonthRows, DateTime.Parse(OldMonthRows[0]["FinTime"].ToString()) - resultList[j].start); // - TimeSpan delta = DateTime.Parse(OldMonthRows[0]["StartTime"].ToString()) - ins_elem.start; if (delta > TimeSpan.Zero) { resultList[j].start += delta; resultList[j].fin += delta; } ins_elem.start = resultList[j].fin = resultList[j].start + shift_time; if (!ins_elem.start.Equals(ins_elem.fin)) resultList.Insert(j + 1, ins_elem); } j++; } return resultList; } The difficulty lies in the fact that breaks are possible in the workshop, which must be taken into account when backing up the work. In the above case, you need to make sure that the start of work does not fall into a break, and when the end of work gets into it, it will be autofocus for the next working period.

private TimeSpan GetShiftTime(TimeSpan jTime, DataView OldMonthRows, TimeSpan fndTime) { TimeSpan sftTime = jTime; if (OldMonthRows.Count > 0) { //, . if (fndTime < sftTime) { sftTime = fndTime + TimeSpan.FromMinutes(Convert.ToInt32(OldMonthRows[0]["TimeToNext"])); for (int per_numb_s = 1; per_numb_s < OldMonthRows.Count - 1; per_numb_s++) { if (fndTime >= jTime) break; fndTime += Convert.ToDateTime(OldMonthRows[per_numb_s]["FinTime"]) - Convert.ToDateTime(OldMonthRows[per_numb_s]["StartTime"]); sftTime += Convert.ToDateTime(OldMonthRows[per_numb_s]["FinTime"]) - Convert.ToDateTime(OldMonthRows[per_numb_s]["StartTime"]) + TimeSpan.FromMinutes(Convert.ToInt32(OldMonthRows[per_numb_s]["TimeToNext"])); } sftTime += jTime - fndTime; } } return sftTime; } When using the above approach, one should understand that there is always a chance of failure, then the set of work will still need to be driven according to the standard scheme through planning, but in total time will be spent more. In my case, the customer decided that it was better to suffer once out of ten than all ten by a little and the green light was given to the method.

Even for purely visual acceleration, it is worth taking the whole calculation into a separate thread and pulling an event in it for each calculated time interval. The client should sign up for the event and give the user results before the full completion of the calculation. It will also be useful to display the progress bar so that the person behind the computer understands that everything is working, nothing is hanging and less nervous. And if he works with a client (for example, when writing a car to a service center), then he has already voiced the first sentences for recording in the morning.

I sincerely hope that the things described in my article will come in handy once. Thanks for attention.

Source: https://habr.com/ru/post/148881/

All Articles