Web architecture

Hello, everyone!

You've probably already heard about the Internet Map. If not, then you can look at it here , and you can read about it in my previous post .

In this article I would like to tell you how the Internet Map site is arranged, what technologies ensure its normal operation and what steps had to be taken to withstand a large flow of visitors who want to look at the map.

')

The performance of the Internet Map is supported by modern technologies from the Internet giants: Google Maps is powered by Google Maps, web requests are processed by Microsoft's .net technology, and Amazon Web Services by Amazon is used to host and deliver content. All three components are vital for normal card operation.

Further, a large sheet about the internal architecture of the card: mainly AWS praises, performance issues and hosting prices will also be affected. If you are not afraid - welcome under cat.

The Google Maps technology involves the use of tiles - small 256x256 pixels of which the map image is formed. The main point associated with these pictures is that there are a lot of them. When you see a map on your screen in high resolution, it consists of all these small pictures. This means that the server must be able to very quickly handle multiple requests and give tiles at the same time so that the client does not notice the mosaic. The total number of tiles required to display a map is equal to sum (4 ^ i), where i runs from 0 to N, where N is the total number of zooms. In the case of the Internet Map, the number of zooms is 14, i.e. The total number of tiles should be approximately 358 million. Fortunately, this astronomical figure was reduced to 30 million, abandoning the generation of empty tiles. If you open the browser console, you will see a lot of 403 errors, this is exactly what they are - missing tiles, but the map is not visible because if there is no tile, the square is filled with a black background. Anyway, 30 million tiles is also a significant figure.

Therefore, the standard scheme for placing content on a dedicated server, in this case, is not suitable. There are a lot of tiles, there are many users, there should also be a lot of servers and they should be near the users so that they do not notice the delay. Otherwise, users from Russia will receive a good response, and users from Japan will remember the time of dial-up modems looking at your card. Fortunately, Amazon has a solution for this case (there is also the company Akamay, but it's not about her). It is called CloudFront and is a global content delivery network (CDN). You place your content somewhere (this is called Origin) and create a distribution (Distribution) in CloudFront. When a user requests your content, CloudFront automatically finds its site closest to the user and, if there is no copy of your data there, they will be requested either from another site or from Origin.

It turns out that your data is replicated many times and with high probability will be delivered from CloudFront servers, and not from your expensive, weak and unreliable storage. In the case of the Internet Map, the CloudFront connection actually meant that the data from my hard disk was physically copied to the Singapore Simple Storage Service (S3) segment, and then through the AWS console a distribution was created (Distribution) in CloudFront, where S3 was specified as the data source (Origin). If you look at the code of the Internet Map page, you can see that the tiles are taken from the address CloudFront d2h9tsxwphc7ip.cloudfront.net . Determining the nearest site, keeping the content up to date and all such things, CloudFront does automatically. Hooray!

In the picture you can see how the original map is broken into tiles, the tiles are stored in S3, and from there they are loaded into CloudFront and already from its nodes are delivered to users.

To ensure the search site on the map, you need a database where you will store information about the sites and their coordinates. In this case, we have MS SQL Express in the Amazon cloud. This is called the Relational Database Service (RDS). Relationality is not particularly necessary for us. we have only one table, but it's better to still have a full-fledged database than reinventing bicycles. RDS allows you to use not only MS SQL, but also Oracle, MySql and, probably, something else.

In the picture you can see how the original map turns into a table in the RDS database.

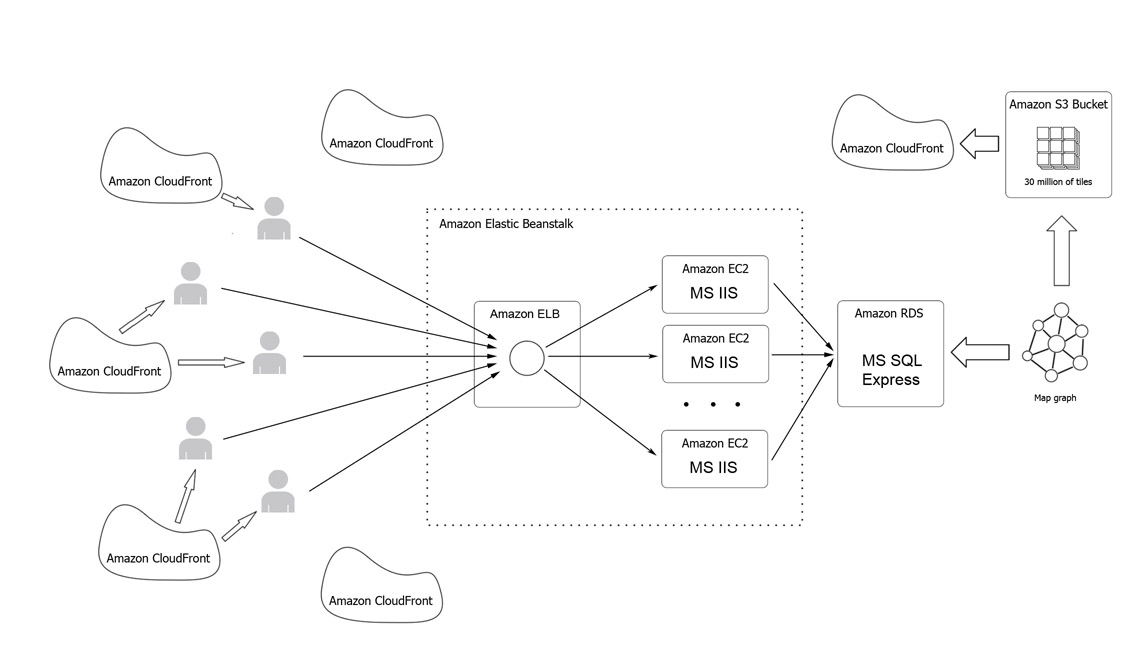

Probably, this feature in the Amazon cloud services family amazed me the most. Elastic Beanstalk allows literally one click to release a project under load with minimal time or without leaving the site offline. Knowing how hard releases are being made, especially when the infrastructure contains several servers and a load balancer, I was just amazed at how easily and gracefully Elastic Beanstalk handles this! During the first deployment, it creates all the infrastructure necessary for your application (environment): load balancer (Elastic Load Balancer - ELB), computing units (Elastic Compute Cloud - EC2) and determines the scaling parameters. Roughly, if you have one server and all requests go directly to it, then when you reach a certain threshold, your server will stop coping with the load and most likely will fall. Sometimes he will not even be able to rise under the load on which he had previously worked perfectly, because it usually takes some time to get into working mode, and constant requests do not allow this. In general, who fought he knows.

Elastic Beanstalk takes all the infrastructure issues for you. In fact, you can put the plugin in MS Visual Studio and forget about the details. He will himself maintain version control, deploy, etc. And in the event of an increase in load, it will create as many EC2 instances as necessary.

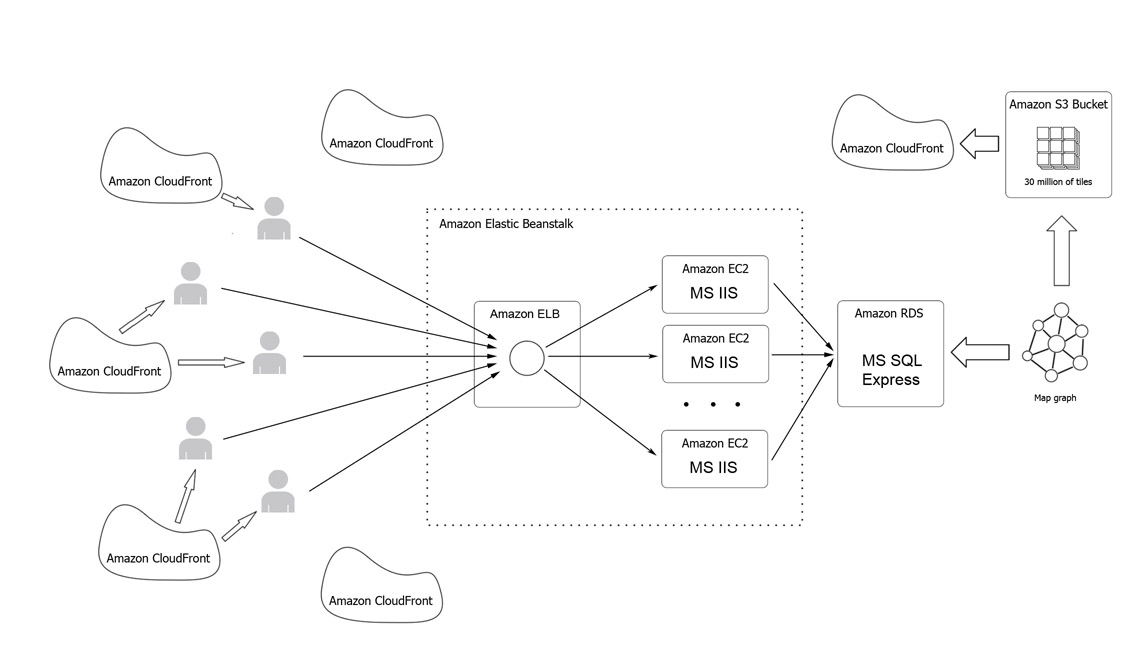

The Elastic Beanstalk chart is circled with a dotted line, inside you can see an ELB that accepts incoming requests and distributes them to IISs in EC2 instances.

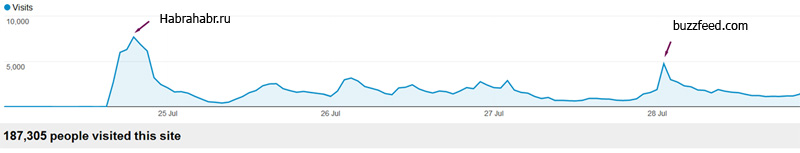

Immediately after the publication of the article on the Habrahabr.ru website, a stream of visitors went to the Internet Map. On the graph you can see a very sharp increase in attendance, for the first 6 hours the site was visited by 30,000 people, and on the first day almost 50,000, mostly from Russia and the countries of the former USSR. Feeling that something was wrong, Elastic Beanstalk created 10 EC2 instances and they did a good job. There were no complaints about problems with access to the site. The map could be viewed freely. But RDS immediately died: first, the search began to work very slowly, then intermittently, and then completely stopped. The account for the first day was about $ 200. Approximately 100 for S3 + CloudFront and 50 each for EC2 and RDS.

Having studied the experience, I optimized and reconfigure the autoscaling parameters. And it helped. During the week, the site was visited by an average of 30-50 thousand people a day from different countries of the world and nothing fell off. True, such a sharp influx as the first day was not there either.

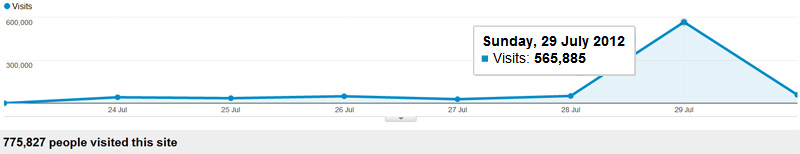

Then someone posted information about the Card on reddit.com and this caused an explosive increase in attendance. On Sunday, about half a million people visited the site and at the same time only one small instance EC2 and one small RDS instance worked. True, there was one complaint that the map does not load, but I think that this is normal for such a wave.

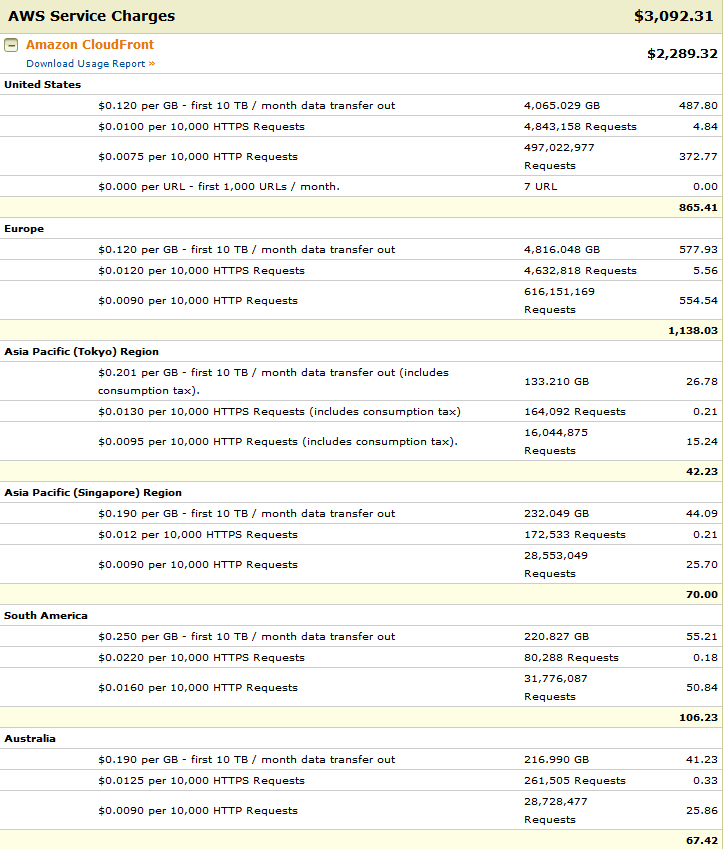

And here is the bill for the first week

I started working on information technology when the word cloud had nothing to do with IT. Since then, much has changed and the standalone server is living its time. Of course, hosting in the cloud has its drawbacks (you can ask Instagram for example). But the ability to shift most of the worries to the cloud service, in my opinion, is more than worth the risk. If you start to develop your project and quality, availability, reliability and scalability are important for you, then most likely you are on the road to the cloud.

You have probably already heard about the Internet Map. If not, you can look at it here, and you can read about it in my previous post.

In this article I would like to explain how the Internet Map site is built, which technologies keep it running, and what steps had to be taken to withstand the large flow of visitors who wanted to look at the map.


The Internet Map runs on modern technologies from the Internet giants: the map display is powered by Google Maps, web requests are processed by Microsoft's .NET, and Amazon Web Services is used to host and deliver the content. All three components are vital for the map to work normally.

What follows is a long read about the map's internal architecture: mostly praise for AWS, but performance issues and hosting prices are covered as well. If that does not scare you, read on.

Amazon CloudFront and Google Maps

Google Maps technology is built on tiles: small 256x256-pixel images from which the map picture is assembled. The main thing about these images is that there are a lot of them. When you see a high-resolution map on your screen, it consists entirely of these small pictures. This means the server must handle many requests very quickly and serve tiles in parallel so that the client does not notice the mosaic. The total number of tiles needed to display a map is sum(4^i), where i runs from 0 to N and N is the maximum zoom level. For the Internet Map, N is 14, so the full set would be approximately 358 million tiles. Fortunately, this astronomical figure was cut to 30 million by not generating empty tiles. If you open the browser console you will see a lot of 403 errors; these are exactly those missing tiles. On the map itself this is not noticeable, because when a tile is missing its square is filled with a black background. Still, 30 million tiles is a significant number.
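The 358 million figure is easy to check with a few lines of Python. This is only a sanity check of the formula above, not code from the site, and the function name is made up for illustration.

```python
def total_tiles(max_zoom: int) -> int:
    """Number of tiles in a full pyramid with zoom levels 0..max_zoom.

    Zoom level i is a 2^i x 2^i grid, which is 4^i tiles.
    """
    return sum(4 ** i for i in range(max_zoom + 1))


if __name__ == "__main__":
    print(total_tiles(14))  # 357913941
```

Against that total, the 30 million tiles that were actually generated are well under a tenth of the full pyramid.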

For this reason, the standard scheme of hosting content on a single dedicated server does not work here. There are many tiles and many users, so there must also be many servers, and they must be close to the users so that nobody notices the delay. Otherwise users from Russia will get a good response time, while users from Japan, looking at your map, will be reminded of the days of dial-up modems. Fortunately, Amazon has a solution for this case (there is also Akamai, but this article is not about them). It is called CloudFront, and it is a global content delivery network (CDN). You place your content somewhere (this is called the Origin) and create a Distribution in CloudFront. When a user requests your content, CloudFront automatically finds the site closest to that user and, if there is no copy of your data there, requests it either from another site or from the Origin.
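To make the idea concrete, here is a toy model of what a single CDN site does. It is not how CloudFront is implemented. It is a sketch of the "serve from the local copy, otherwise go to the Origin" logic, simplified so that a miss always goes straight to the Origin. The class names and the tile key are invented for the example.

```python
from typing import Dict, Optional


class Origin:
    """Stands in for the place where the content really lives (for example S3)."""

    def __init__(self, objects: Dict[str, bytes]) -> None:
        self.objects = objects
        self.requests = 0  # how many times the origin was actually asked

    def get(self, key: str) -> Optional[bytes]:
        self.requests += 1
        return self.objects.get(key)


class EdgeLocation:
    """One CDN site: serves from its local cache, falls back to the origin."""

    def __init__(self, name: str, origin: Origin) -> None:
        self.name = name
        self.origin = origin
        self.cache: Dict[str, bytes] = {}

    def get(self, key: str) -> Optional[bytes]:
        if key in self.cache:           # cache hit: the origin is not touched
            return self.cache[key]
        data = self.origin.get(key)     # cache miss: ask the origin
        if data is not None:
            self.cache[key] = data      # keep a copy for the next visitor
        return data


if __name__ == "__main__":
    origin = Origin({"0/0/0.png": b"tile bytes"})
    tokyo = EdgeLocation("tokyo", origin)
    tokyo.get("0/0/0.png")
    tokyo.get("0/0/0.png")
    print(origin.requests)  # 1
```

The second request never reaches the origin, which is the whole point: the more popular a tile is, the less your own storage has to work.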

As a result, your data is replicated many times and will, with high probability, be delivered from CloudFront servers rather than from your expensive, weak and unreliable storage. For the Internet Map, connecting CloudFront meant in practice that the data was physically copied from my hard disk to the Singapore region of Simple Storage Service (S3), and then a Distribution was created in CloudFront through the AWS console, with S3 specified as the data source (Origin). If you look at the source of the Internet Map page, you can see that the tiles are loaded from the CloudFront address d2h9tsxwphc7ip.cloudfront.net. Finding the nearest site, keeping the content up to date and all such things CloudFront does automatically. Hooray!

The picture shows how the original map is cut into tiles, the tiles are stored in S3, loaded from there into CloudFront, and delivered to users from its nodes.
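From the page's point of view, all of this boils down to building a tile address on the CloudFront host. The sketch below shows one way this could look. Only the host name comes from the article; the https scheme, the `zoom/x/y.png` path layout and the function name are assumptions made for illustration, and the real site may lay out its tiles differently.

```python
CLOUDFRONT_HOST = "d2h9tsxwphc7ip.cloudfront.net"  # host named in the article


def tile_url(zoom: int, x: int, y: int, max_zoom: int = 14) -> str:
    """Build the address of one tile.

    The scheme and the zoom/x/y.png layout are hypothetical.
    """
    if not 0 <= zoom <= max_zoom:
        raise ValueError(f"zoom must be in 0..{max_zoom}")
    side = 2 ** zoom  # the grid at this zoom level is side x side tiles
    if not (0 <= x < side and 0 <= y < side):
        raise ValueError(f"x and y must be in 0..{side - 1} at zoom {zoom}")
    return f"https://{CLOUDFRONT_HOST}/{zoom}/{x}/{y}.png"


if __name__ == "__main__":
    print(tile_url(3, 5, 2))
```

If the requested tile was never generated, the request comes back as a 403 and the square simply stays black.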

Amazon RDS

To support searching for sites on the map, you need a database that stores the sites and their coordinates. Here that is MS SQL Express in the Amazon cloud, a service called Relational Database Service (RDS). We do not particularly need the relational part, since we have only one table, but it is still better to have a full-fledged database than to reinvent the wheel. RDS supports not only MS SQL but also Oracle, MySQL and probably something else.

The picture shows how the original map turns into a table in the RDS database.
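A single-table search like this can be sketched in a few lines. The example uses Python's built-in sqlite3 as a stand-in for MS SQL on RDS, so that it runs anywhere. The table name, the column names and the sample row are all assumptions, not the real schema.

```python
import sqlite3
from typing import Iterable, Optional, Tuple


def build_index(rows: Iterable[Tuple[str, float, float]]) -> sqlite3.Connection:
    """Create an in-memory table of (domain, x, y) rows; the schema is hypothetical."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE sites ("
        "domain TEXT PRIMARY KEY, x REAL NOT NULL, y REAL NOT NULL)"
    )
    conn.executemany("INSERT INTO sites (domain, x, y) VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn


def find_site(conn: sqlite3.Connection, domain: str) -> Optional[Tuple[float, float]]:
    """Return the map coordinates of a site, or None if it is not on the map."""
    # Domains are assumed to be stored in lower case.
    return conn.execute(
        "SELECT x, y FROM sites WHERE domain = ?", (domain.lower(),)
    ).fetchone()


if __name__ == "__main__":
    conn = build_index([("example.com", 10.5, -3.0)])
    print(find_site(conn, "Example.com"))  # (10.5, -3.0)
    print(find_site(conn, "missing.org"))  # None
```

The primary key on the domain keeps the lookup an index search rather than a scan of the whole table.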

Amazon Elastic Beanstalk

This service probably amazed me the most in the whole Amazon cloud family. Elastic Beanstalk lets you release a project under load with literally one click, with minimal or no downtime. Knowing how hard releases can be, especially when the infrastructure includes several servers and a load balancer, I was simply amazed at how easily and gracefully Elastic Beanstalk handles this! During the first deployment it creates all the infrastructure your application needs (the environment): a load balancer (Elastic Load Balancer, ELB) and compute instances (Elastic Compute Cloud, EC2), and it sets the scaling parameters. Roughly speaking, if you have one server and all requests go directly to it, then past a certain threshold the server will stop coping with the load and will most likely go down. Sometimes it cannot even come back up under a load it previously handled perfectly, because it usually needs some time to warm up, and the constant requests do not allow this. Anyone who has fought with this knows.

Elastic Beanstalk takes care of all the infrastructure issues for you. In fact, you can install the plugin for MS Visual Studio and forget about the details. It will handle versioning, deployment and so on by itself. And when the load increases, it will create as many EC2 instances as necessary.
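The idea behind "create as many instances as necessary" can be illustrated with a simple threshold rule. This is not Elastic Beanstalk's actual algorithm or API. It is a minimal sketch of threshold-based autoscaling, and the function name, the CPU metric and all the numbers are assumptions.

```python
def desired_instances(
    current: int,
    avg_cpu: float,
    min_size: int = 1,
    max_size: int = 10,
    scale_up_at: float = 70.0,
    scale_down_at: float = 30.0,
) -> int:
    """Decide how many instances should run, given the average CPU load in percent.

    All thresholds and limits here are hypothetical example values.
    """
    if avg_cpu > scale_up_at:
        target = current + 1   # overloaded: add one instance
    elif avg_cpu < scale_down_at:
        target = current - 1   # mostly idle: remove one instance
    else:
        target = current       # inside the comfortable band: do nothing
    # Never go below the minimum or above the maximum group size.
    return max(min_size, min(max_size, target))


if __name__ == "__main__":
    print(desired_instances(current=1, avg_cpu=90.0))   # 2
    print(desired_instances(current=10, avg_cpu=95.0))  # 10
```

The upper limit matters for the bill as much as for the load: without it, a traffic spike can start more instances than you are prepared to pay for.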

In the diagram, Elastic Beanstalk is outlined with a dotted line; inside it you can see the ELB, which accepts incoming requests and distributes them to the IIS servers on the EC2 instances.

Performance and price

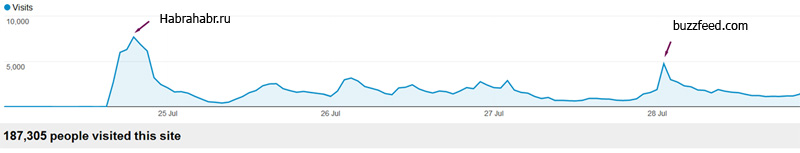

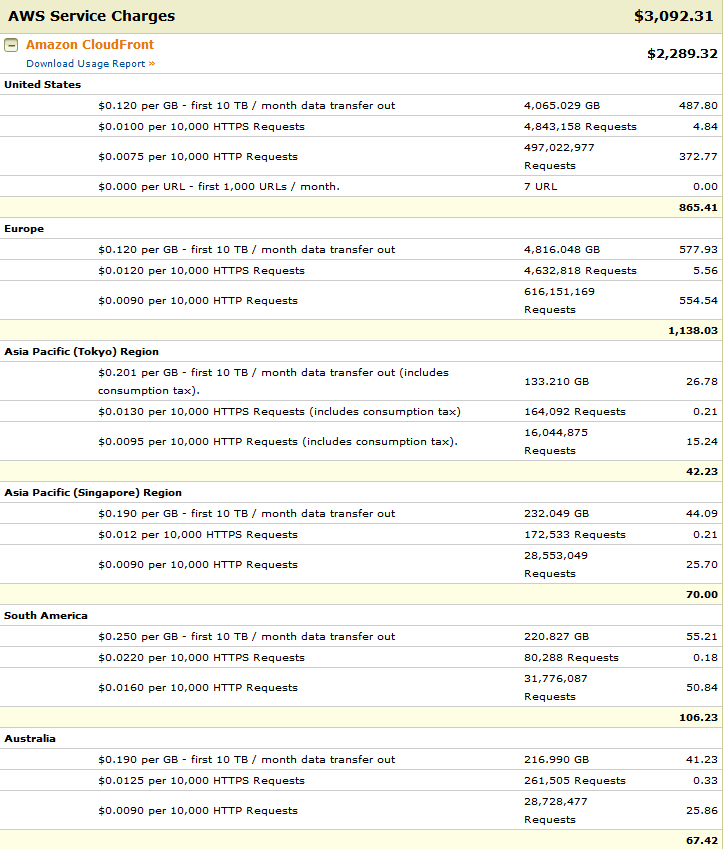

Immediately after the article was published on Habrahabr.ru, a stream of visitors headed to the Internet Map. The graph shows a very sharp rise in traffic: in the first 6 hours the site was visited by 30,000 people, and in the first day by almost 50,000, mostly from Russia and the countries of the former USSR. Sensing that something was up, Elastic Beanstalk created 10 EC2 instances, and they did a good job. There were no complaints about access to the site, and the map could be viewed freely. RDS, however, died immediately: first the search became very slow, then intermittent, and then it stopped working completely. The bill for the first day was about $200: approximately $100 for S3 + CloudFront and $50 each for EC2 and RDS.

Having learned from this experience, I made some optimizations and reconfigured the autoscaling parameters. And it helped. Over the following week the site was visited by 30-50 thousand people a day on average, from different countries of the world, and nothing went down. True, there was no influx as sharp as on the first day either.

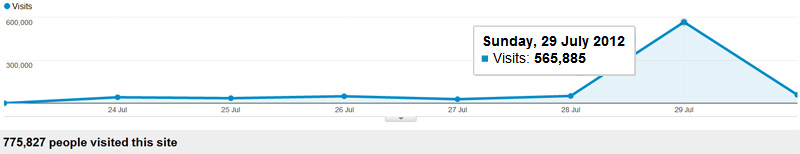

Then someone posted a link to the map on reddit.com, and this caused an explosive growth in traffic. On Sunday about half a million people visited the site, while only one small EC2 instance and one small RDS instance were running. True, there was one complaint that the map did not load, but I think that is normal for such a wave.

And here is the bill for the first week

Conclusion

I started working in information technology when the word "cloud" had nothing to do with IT. Much has changed since then, and the standalone server is living out its days. Of course, hosting in the cloud has its drawbacks (you can ask Instagram, for example). But the ability to hand most of your worries over to a cloud service is, in my opinion, more than worth the risk. If you are starting a project and quality, availability, reliability and scalability matter to you, then you are most likely headed for the cloud.

Source: https://habr.com/ru/post/148721/
