How Lytro works, or yet another review

Lytro is a plenoptic camera that needs no focusing, made by Lytro (a startup founded by Ren Ng, a Stanford graduate). The camera works on the light-field principle, which I will try to explain below (careful, there is a lot of traffic under the cut!).

Pictures that contain photos from lytro are clickable (but the lytro site has been going down from time to time lately, and it will probably fall over now).

Camera

The full specs can be found on the Lytro site, and the appearance was described in a previous review. The camera itself is made in China; it connects and charges via USB:

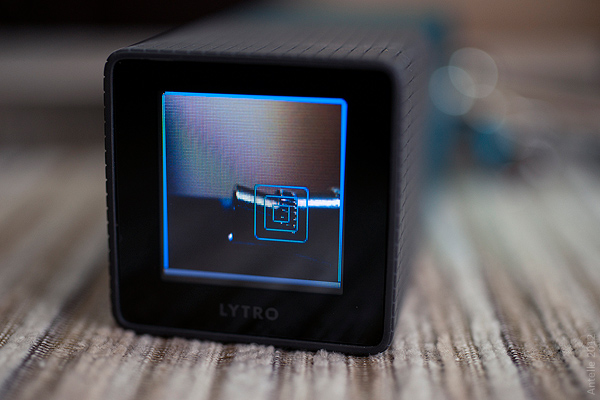

The display is a touchscreen of ordinary resolution; here it shows that the camera is charging:


Operation is simple: the zoom is on top (you slide your finger along the rubber strip), and the shutter button is on top as well. The zoom range is roughly equivalent to 24-105 mm.

Macro

If you bring the camera close to an object, the object will not be in focus (and, yes, it cannot be brought into focus after shooting either):

To get around this, you can enable "creative mode". It is activated by tapping the white square:

In creative mode, you can focus the camera by tapping the touchscreen. You tap on the object, the camera thinks for a moment and focuses (the autofocus is apparently contrast-detection):

It can focus quite close:

The limitation of creative mode is that the refocusing range after shooting is restricted.

At one time the manufacturer promised built-in focus stacking for macro. The camera has no such feature now, but it is easy to do in Photoshop using the images extracted from the lfp file. As you can see, the depth of field here is much greater than before processing:

Settings

There are no other settings in the camera. The camera sets the exposure on its own, and there is no way to influence it (I noticed that metering is matrix in Everyday mode and center-weighted in Creative mode, though not always). Here are a couple of examples:

In the worst conditions it will be ISO 3200 at 1/15 s (which is strange: with an accelerometer on board, the camera could detect that it is on a tripod and lengthen the exposure):

Software

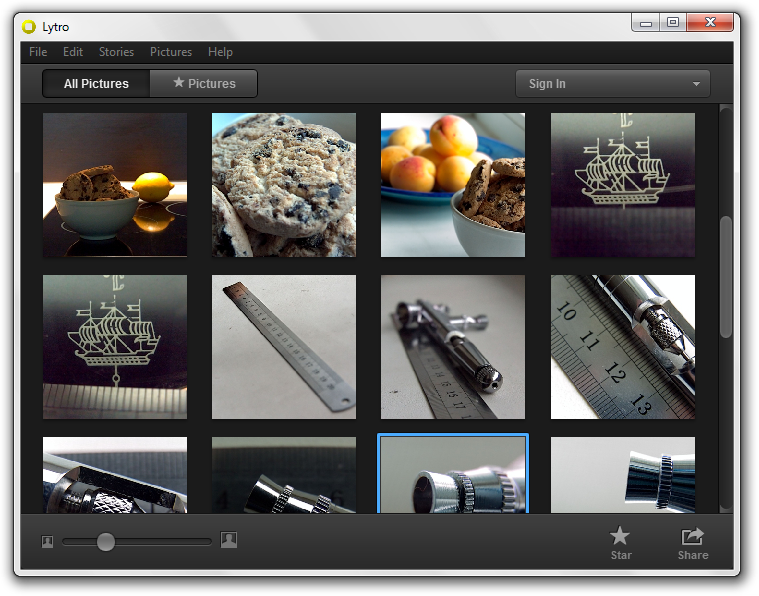

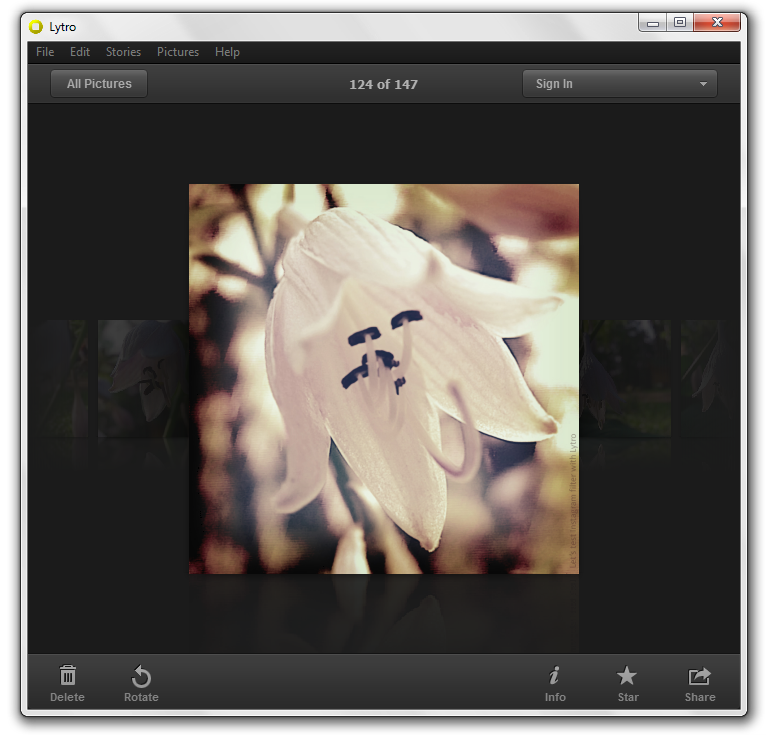

When you connect the camera, the software copies the photos from it, lets you rotate them and upload them to lytro.com. It is written in Qt and stores its information in a SQLite database, the format of which is described here.

A Windows version of the software was released recently, which is good news:

Format

Lytro has a single .lfp format, used for both raw and web-optimized files. Let's look at it in more detail. An .lfp file is a container divided into sections. There are several types of section:

- File metadata: always present; describes the sections of the file and contains their SHA-1 hashes. JSON format. Example:

{ "picture" : { "frameArray" : [ { "frame" : { "metadataRef" : "sha1-079f3465638687275008d656c408c9c91d1bc6ee", "privateMetadataRef" : "sha1-44cdec4d744eae5c06dbef8f086fd7905bd8f55a", "imageRef" : "sha1-2f429e42bef0dc3fa0351dd2d55404c351e3beca" }, "parameters" : { "vendorContent" : { "com.lytro.tags" : { "darkFrame" : false, "modulationFrame" : false } } } } ], "viewArray" : [ { "type" : "com.lytro.stars", "vendorContent" : { "starred" : false } } ], "accelerationArray" : [ { "type" : "com.lytro.acceleration.refocusStack", "generator" : "Lytro Lightfield Engine 1.000000", "vendorContent" : { "viewParameters" : {}, "displayParameters" : { "displayDimensions" : { "mode" : "fixedToValue", "value" : { "width" : 1080, "height" : 1080 } } }, "defaultLambda" : 0, "depthLut" : { "width" : 20, "height" : 20, "representation" : "raw", "imageRef" : "sha1-3797be865adeaf3d28b372a2dca0aa8e3ef79b6f" }, "imageArray" : [ { "representation" : "jpeg", "width" : 1080, "height" : 1080, "lambda" : 4.8976802825927734, "imageRef" : "sha1-6e268b48ced0b5c38e39d477a3100f00bb9c188b" }, { "representation" : "jpeg", "width" : 1080, "height" : 1080, "lambda" : 5.886573314666748, "imageRef" : "sha1-80b19c4365465e63e623d5cc1fb9d345dfab7ce0" }, { "representation" : "jpeg", "width" : 1080, "height" : 1080, "lambda" : 6.8429036140441895, "imageRef" : "sha1-5f7c28a7b4aad7d06fcea230293aadb700ad27ef" } ] } } ], "derivationArray" : [ "sha1-f408b48df6a6c09e40e60585a655e8d81415b789" ] }, "thumbnailArray" : [], "version" : { "major" : 1, "minor" : 0, "provisionalDate" : "2011-08-03" } }

- Frame metadata: present only in the RAW file; contains the readings from all the sensors at the moment of shooting. JSON format. Example:

{ "type" : "lightField", "image" : { "width" : 3280, "height" : 3280, "orientation" : 1, "representation" : "rawPacked", "rawDetails" : { "pixelFormat" : { "rightShift" : 0, "black" : { "r" : 168, "gr" : 168, "gb" : 168, "b" : 168 }, "white" : { "r" : 4095, "gr" : 4095, "gb" : 4095, "b" : 4095 } }, "pixelPacking" : { "endianness" : "big", "bitsPerPixel" : 12 }, "mosaic" : { "tile" : "r,gr:gb,b", "upperLeftPixel" : "b" } }, "color" : { "ccmRgbToSrgbArray" : [ 3.1115827560424805, -1.9393929243087769, -0.172189861536026, -0.3629055917263031, 1.6408803462982178, -0.27797481417655945, 0.078967012465000153, -1.1558042764663696, 2.0768373012542725 ], "gamma" : 0.41666001081466675, "applied" : {}, "whiteBalanceGain" : { "r" : 1.07421875, "gr" : 1, "gb" : 1, "b" : 1.26953125 } }, "modulationExposureBias" : -1.1193209886550903, "limitExposureBias" : 0 }, "devices" : { "clock" : { "zuluTime" : "2012-07-25T14:05:20.000Z" }, "sensor" : { "bitsPerPixel" : 12, "mosaic" : { "tile" : "r,gr:gb,b", "upperLeftPixel" : "b" }, "iso" : 205, "analogGain" : { "r" : 5.5625, "gr" : 4.0625, "gb" : 4.0625, "b" : 4.6875 }, "pixelPitch" : 1.3999999761581417e-006 }, "lens" : { "infinityLambda" : 123.91555023193359, "focalLength" : 0.011369999885559081, "zoomStep" : 661, "focusStep" : 1271, "fNumber" : 2.0399999618530273, "temperature" : 38.670684814453125, "temperatureAdc" : 2501, "zoomStepperOffset" : 2, "focusStepperOffset" : 22, "exitPupilOffset" : { "z" : 0.039666198730468748 } }, "ndfilter" : { "exposureBias" : 0 }, "shutter" : { "mechanism" : "sensorOpenApertureClose", "frameExposureDuration" : 0.015281426720321178, "pixelExposureDuration" : 0.015281426720321178 }, "soc" : { "temperature" : 47.337005615234375, "temperatureAdc" : 2632 }, "accelerometer" : { "sampleArray" : [ { "x" : -0.066666670143604279, "y" : 1.0392156839370728, "z" : 0.08235294371843338, "time" : 0 } ] }, "mla" : { "tiling" : "hexUniformRowMajor", "lensPitch" : 1.3898614883422851e-005, "rotation" : 0.0020797469187527895, "defectArray" : [], "scaleFactor" : { "x" : 1, "y" : 1.0005592107772827 }, "sensorOffset" : { "x" : -1.0190916061401367e-005, "y" : -2.3611826896667481e-006, "z" : 2.5000000000000001e-005 } } }, "modes" : { "creative" : "tap", "regionOfInterestArray" : [ { "type" : "exposure", "x" : 0.7625085711479187, "y" : 0.21875333786010742 }, { "type" : "creative", "x" : 0.7625085711479187, "y" : 0.21875333786010742 } ] }, "camera" : { "make" : "Lytro, Inc.", "model" : "F01", "firmware" : "v1.0a60, vv1.0.0, Thu Feb 23 15:02:57 PST 2012, e10fcca0668db3dbf94ae347248db3da070d21e9, mods=0, ofw=0" } }

- Private metadata: the serial numbers of the camera and the sensor. JSON format. Example:

{ "devices" : { "sensor" : { "sensorSerial" : "0x5086D178DEADBEEF" } }, "camera" : { "serialNumber" : "A20B00B1E3" } }

- Raw sensor data: present only in the RAW file; this is the raw data from the sensor. It opens like this:

- Depth lookup table: present only in a web-optimized file. This is a depth map. It answers the question: if the user tapped here, which of the pictures should be shown?

- Prerendered image, λ = %lambda%: present only in a web-optimized file. This is the image shown to the user when they "focus" on a particular area with a click. A file contains from 1 to 12 such sections (there may be more, but I have not come across it).

A RAW file is about 16 MB, a web-optimized one about 1 MB. The latter stores a set of pictures together with a map that relates the coordinates of a click to the number of the picture to show. A full byte-level description of the format can be found here.
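
The 16 MB figure can be checked against the frame metadata shown above (3280 x 3280 pixels, 12 bits per pixel, big-endian packing). Below is a minimal sketch, not the Lytro implementation: the function names are hypothetical, and the assumption that two 12-bit samples are packed into every three bytes, high bits first, is mine.

```python
def packed_size_bytes(width, height, bits_per_pixel):
    """Size in bytes of a tightly packed raw frame."""
    return width * height * bits_per_pixel // 8


def unpack_12bit_big_endian(data):
    """Unpack 12-bit samples, assuming two samples per three bytes, high bits first."""
    if len(data) % 3 != 0:
        raise ValueError("packed 12-bit data must be a multiple of 3 bytes")
    samples = []
    for i in range(0, len(data), 3):
        b0, b1, b2 = data[i], data[i + 1], data[i + 2]
        samples.append((b0 << 4) | (b1 >> 4))           # first sample: b0 + high nibble of b1
        samples.append(((b1 & 0x0F) << 8) | b2)         # second sample: low nibble of b1 + b2
    return samples


def normalize(sample, black=168, white=4095):
    """Map a raw sample to 0..1 using the black and white levels from the metadata."""
    return min(1.0, max(0.0, (sample - black) / (white - black)))


if __name__ == "__main__":
    # 3280 * 3280 * 12 / 8 bytes, i.e. roughly the 16 MB of a RAW file
    print(packed_size_bytes(3280, 3280, 12))
    print([hex(s) for s in unpack_12bit_big_endian(bytes([0xAB, 0xCD, 0xEF]))])
```

The unpacked samples still form a Bayer mosaic (`"tile" : "r,gr:gb,b"` in the metadata), so demosaicing is a separate step.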

RAW

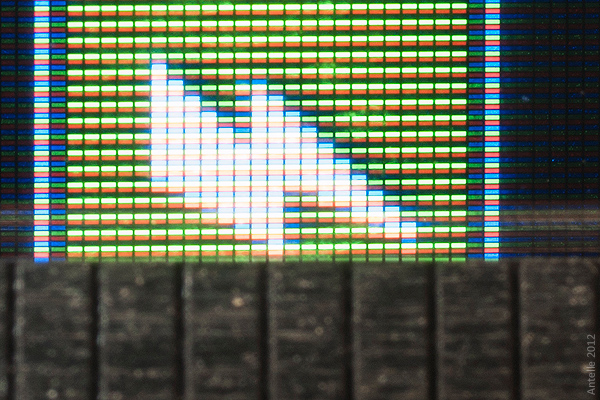

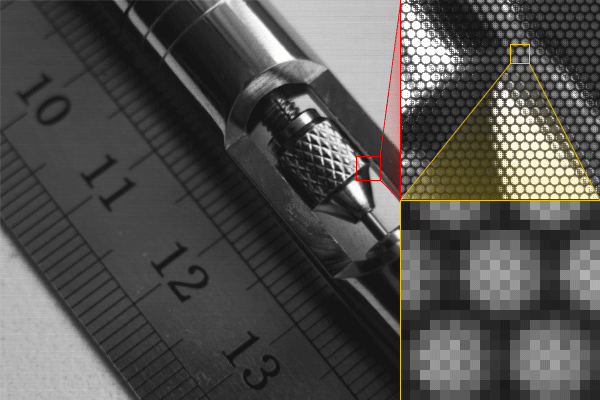

The RAW file itself is the data from the sensor. We can see the sensor's Bayer filter, with the microlenses located in front of it:

Processing

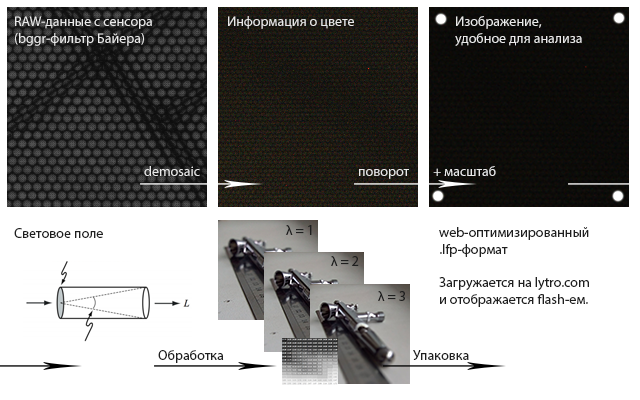

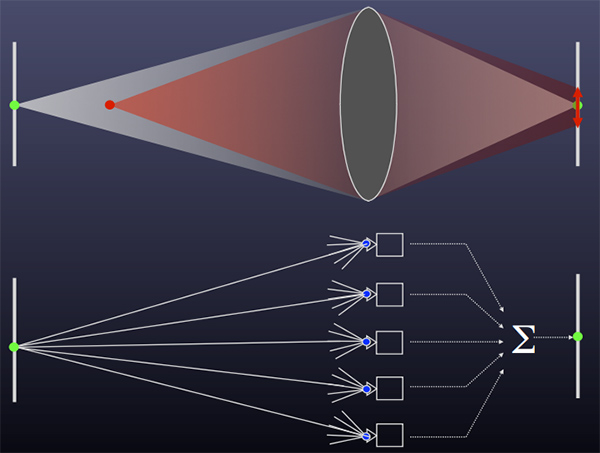

The most interesting part is the processing. The process is fully described in Ren Ng's papers; I have drawn it schematically:

First the RAW file is demosaiced using traditional methods. After that, the light field is brought to a state in which the "cells" (microlenses) span a whole number of pixels. To do this, the image has to be rotated slightly and scaled (this is the most difficult step and requires painstaking adjustment).
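
How small the rotation and scaling are can be estimated from the `mla` and `sensor` fields of the frame metadata (`lensPitch`, `rotation`, `pixelPitch`). This is only a rough sketch under my own assumptions: the function name is hypothetical, the target is simply the nearest whole number of pixels per microlens, the sign of the rotation is a guess, and the hexagonal tiling (`hexUniformRowMajor`) is ignored.

```python
import math


def alignment_parameters(lens_pitch_m, pixel_pitch_m, rotation_rad):
    """Estimate the scale and rotation that put each microlens on a whole number of pixels."""
    pitch_px = lens_pitch_m / pixel_pitch_m      # microlens pitch measured in sensor pixels
    target_px = round(pitch_px)                  # assumed target: nearest whole number of pixels
    return {
        "pitch_px": pitch_px,
        "target_px": target_px,
        "scale": target_px / pitch_px,           # resize factor to reach the target pitch
        "rotate_deg": -math.degrees(rotation_rad),  # assumed: undo the microlens array rotation
    }


if __name__ == "__main__":
    # Values taken from the frame metadata example
    params = alignment_parameters(1.3898614883422851e-05, 1.3999999761581417e-06,
                                  0.0020797469187527895)
    for name, value in params.items():
        print(name, value)
```

With the values from the example, the pitch comes out just under 10 pixels, so the correction is a fraction of a percent of scaling and a fraction of a degree of rotation, which is why the adjustment is so fiddly.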

Then the light field is integrated with certain parameters, and the output is a set of pictures focused at different distances. Note that the software itself decides where to focus and how many images to produce; for now there is no way to adjust this.

The resulting file is packaged and uploaded to the Internet. When a user clicks on some region of the picture ("focusing"), the application looks up in the map which image corresponds to that region and shows it. The map is a depth map, but a very coarse one; at this resolution it will most likely be too small for practical purposes.
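
The click-to-image lookup described above can be sketched as follows. This is a guess at the logic, not Lytro's code: I assume the depth lookup table (20 x 20 in the example file) holds one λ value per cell in row-major order, and that the viewer shows the prerendered image whose `lambda` is closest to it. The function and parameter names are hypothetical.

```python
def pick_image(click_x, click_y, depth_lut, lut_width, lut_height, image_lambdas):
    """Return the index of the prerendered image to show for a click.

    click_x, click_y: click position normalized to 0..1.
    depth_lut: flat, row-major list of lambda values (assumed layout).
    image_lambdas: the "lambda" of every entry in imageArray.
    """
    col = min(lut_width - 1, int(click_x * lut_width))
    row = min(lut_height - 1, int(click_y * lut_height))
    wanted = depth_lut[row * lut_width + col]
    # Choose the image focused closest to the depth under the click
    return min(range(len(image_lambdas)), key=lambda i: abs(image_lambdas[i] - wanted))


if __name__ == "__main__":
    lambdas = [4.8976802825927734, 5.886573314666748, 6.8429036140441895]
    toy_lut = [4.9, 5.0,
               6.0, 7.5]  # a made-up 2 x 2 table for illustration
    print(pick_image(0.1, 0.9, toy_lut, 2, 2, lambdas))
```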

Post processing

It's nice when you can fix something in Photoshop. That is possible here too, but with a caveat. Because the lytro format has been described, it is easy to write a script that exports all the parts of an lfp file. The workflow is:

- Parse the lfp file;

- Edit the pictures. Yes, anything unwanted will have to be retouched out not once, but exactly as many times as there are pictures in the container;

- Assemble the lfp file again. Here you have to recompute all the SHA-1 hashes, update them in the table-of-contents section, and then recompute the hash of that section itself;

- Launch the Lytro application, which, when you click on the file, notices that it has changed and updates its preview.

Voila, Lytro + Instagram Filter:

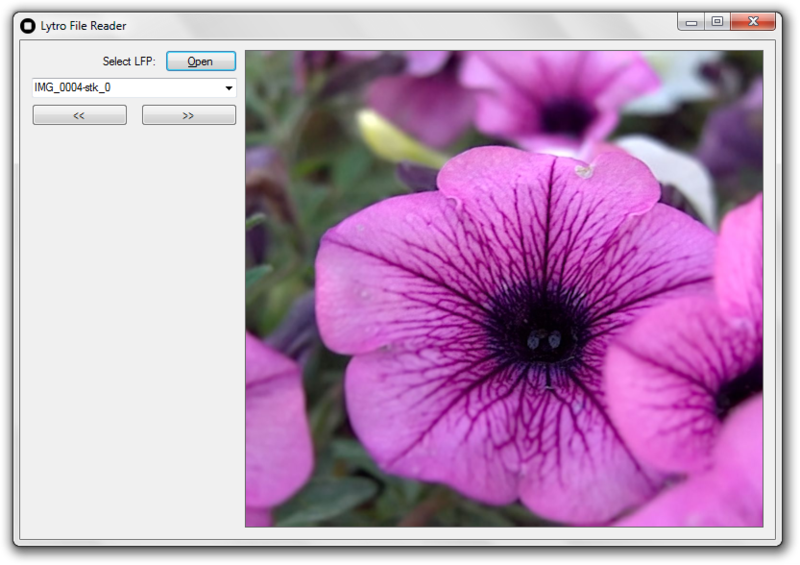
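
The re-hashing step of this workflow can be sketched as below. It is an illustration under assumptions, not a working lfp writer: the helper names are hypothetical, sections are modelled as a plain dict from reference to bytes, and I assume a reference is simply `sha1-` followed by the SHA-1 hex digest of the section's content. The exact JSON serialization the Lytro software expects for the table of contents is not known to me.

```python
import hashlib
import json


def sha1_ref(blob):
    """Reference string in the style used by the lfp table of contents."""
    return "sha1-" + hashlib.sha1(blob).hexdigest()


def replace_blob(toc, blobs, old_ref, new_blob):
    """Swap one section's content and update every reference to it.

    toc: parsed table-of-contents JSON; blobs: dict mapping reference -> bytes.
    Returns (new_toc, new_blobs, new_ref); the inputs are left unchanged.
    """
    new_ref = sha1_ref(new_blob)

    def walk(node):
        if isinstance(node, dict):
            return {key: walk(value) for key, value in node.items()}
        if isinstance(node, list):
            return [walk(value) for value in node]
        return new_ref if node == old_ref else node

    new_toc = walk(toc)
    new_blobs = {ref: data for ref, data in blobs.items() if ref != old_ref}
    new_blobs[new_ref] = new_blob
    return new_toc, new_blobs, new_ref


def toc_ref(toc):
    """Hash of the table of contents itself (the serialization is an assumption)."""
    return sha1_ref(json.dumps(toc).encode("utf-8"))
```

After every edited picture has been swapped in with `replace_blob`, the table of contents is serialized and hashed last, since its content depends on all the other hashes.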

About light fields and plenoptic photography

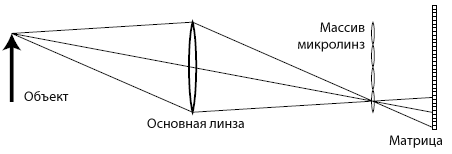

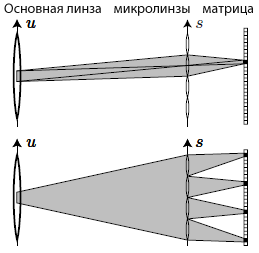

In a classical camera, light coming from different points of the lens into one point of the matrix is integrated and stored as the brightness value of a pixel at that point. In the plenoptic chamber, there is an array of microlenses in front of the matrix:

Each microlens distributes the light rays that come into it in different parts of the matrix:

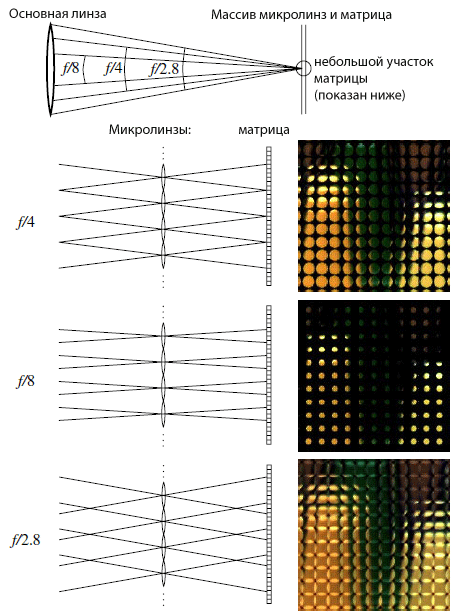

The f / 2 aperture is not random. It allows the most economical use of the matrix. If you take less, there will be unused pixels on the matrix, if there are more, the projected images will intersect, which is unacceptable:

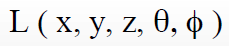

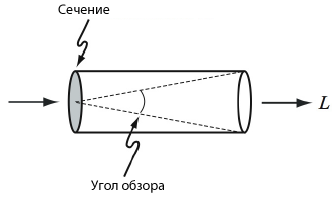

The light field in space is described by a function in five-dimensional space:

As you can see, the light field describes the radiance for each point in space and for each direction of a ray from that point.
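
In the notation commonly used for the plenoptic function (the symbols here are my choice, not necessarily those of the original figure), this five-dimensional function can be written as:

$$
L = L(x, y, z, \theta, \varphi)
$$

where $(x, y, z)$ is a point in space and $(\theta, \varphi)$ is the direction of the ray passing through it.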

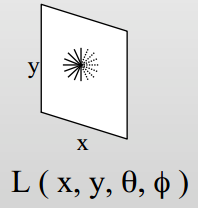

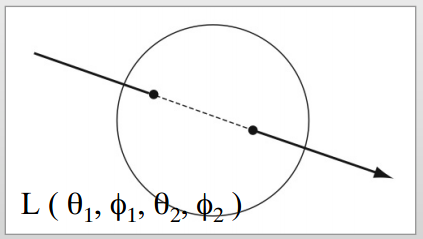

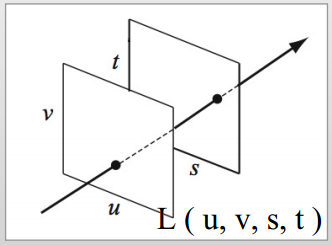

This function can be set in several ways:

On a surface:

On the sphere:

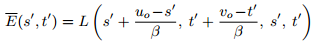

Between two planes:

It is this last way of describing the light field that lytro uses: the two planes are the plane of the rear of the lens and the plane of the sensor.
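
With two planes, a ray is fixed by the two points where it crosses them, so the light field becomes a function of four variables. In the usual notation (again my choice of symbols):

$$
L = L(u, v, s, t)
$$

where $(u, v)$ is the point on the lens plane and $(s, t)$ is the point on the sensor plane.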

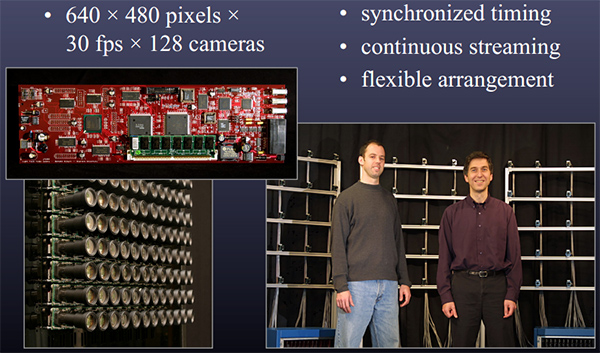

The light field can be obtained using an array of cameras:

A plenoptic camera can be seen as a special case of a camera array, in which each microlens plays the role of a camera.

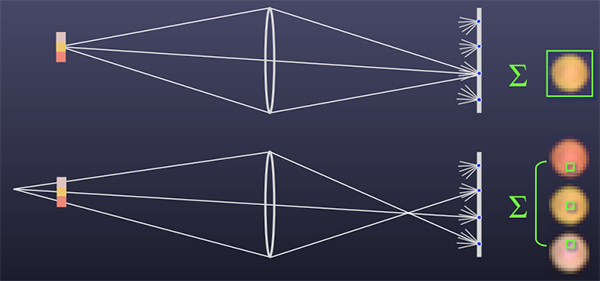

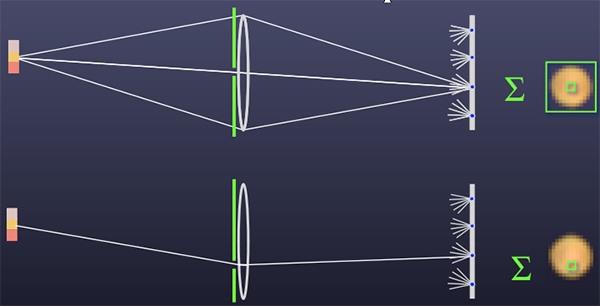

To obtain a photograph, the data from the cameras (or, in the case of lytro, from the areas under the microlenses) has to be integrated:
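
Written as a formula (a sketch in common notation, with constant factors omitted; $(u, v)$ is a point on the lens plane and $(s, t)$ a point on the sensor plane), the brightness of a pixel of the photograph is:

$$
E(s, t) = \iint L(u, v, s, t)\, du\, dv
$$

In the discrete case the integral becomes a sum over the pixels under one microlens.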

Depending on how the points inside the window are chosen, there are two transformations:

Observer offset - choosing one specific point under each microlens:

Digital refocusing - choosing which points under each microlens are summed. Since there are few pixels under each microlens, the possibilities for refocusing are very limited in the case of lytro.
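
Both transformations can be illustrated with a toy example. This is a minimal sketch of the well-known shift-and-add approach, not what the Lytro software actually does: I assume the light field has already been rearranged into a grid of views, where `views[v][u]` is the image formed by pixel `(u, v)` under every microlens, and that shifts are whole pixels. All names are hypothetical.

```python
def sub_aperture_view(views, u, v):
    """Observer offset: the image seen through one point (u, v) of the aperture."""
    return views[v][u]


def refocus(views, shift):
    """Digital refocusing by shift-and-add.

    views[v][u] is a 2D image (list of rows); shift is the number of pixels
    each view is displaced per unit of offset from the central view.
    """
    n_v, n_u = len(views), len(views[0])
    height, width = len(views[0][0]), len(views[0][0][0])
    center_u, center_v = (n_u - 1) / 2, (n_v - 1) / 2
    out = [[0.0] * width for _ in range(height)]
    for v in range(n_v):
        for u in range(n_u):
            dx = round(shift * (u - center_u))
            dy = round(shift * (v - center_v))
            image = views[v][u]
            for y in range(height):
                src_y = min(height - 1, max(0, y + dy))      # clamp at the borders
                for x in range(width):
                    src_x = min(width - 1, max(0, x + dx))
                    out[y][x] += image[src_y][src_x]
    count = n_u * n_v
    return [[value / count for value in row] for row in out]


if __name__ == "__main__":
    # One row of three views; a bright point moves one pixel between neighbouring views
    views = [[[[0, 1, 0, 0, 0]], [[0, 0, 1, 0, 0]], [[0, 0, 0, 1, 0]]]]
    print(refocus(views, 0))  # plain sum: the point is smeared over three pixels
    print(refocus(views, 1))  # shifted sum: the point is gathered into one pixel
```

A shift of zero gives the photograph focused where the camera was focused; other shifts move the plane of focus. The number of distinct useful shifts is bounded by the number of views, which is why few pixels per microlens means little room for refocusing.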

Theorem

With a plenoptic camera that has an aperture of f/N and in which each microlens covers P×P pixels, you can obtain an image as sharp as one taken with a classical camera at an aperture of f/(N·P). For Lytro, that is about f/14.

Another interpretation of the same theorem:

The plenoptic camera described in the theorem makes it possible to obtain images with the depth of field of f/N, focused anywhere within the depth of field of a classical camera with an aperture of f/(N·P).
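
The f/14 figure follows directly from the theorem if we take $N = 2$ and assume $P \approx 7$. The value of $P$ is my inference from the stated result and from the roughly 50 pixels under each microlens mentioned in this article ($7^2 = 49$), not a published specification:

$$
N \cdot P \approx 2 \cdot 7 = 14
$$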

Handling difficulties

Several obstacles complicate writing a light-field renderer:

- The microlenses are not uniform. The algorithm has to be calibrated;

- The influence of vignetting has to be removed. But with only about 50 pixels under each microlens, it is clear that this operation can introduce a large error;

- Defective pixels. As in any other camera, they are present here too. They have to be identified statistically so that they do not spoil the depth map;

- Performance. The calculations have to be done in the frequency domain using a 4D Fourier transform.
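
As an illustration of finding defective pixels statistically, here is one very simple heuristic of my own, not the method used by Lytro: a pixel that sits at the black or the white level in every one of several different frames is probably stuck. The function name is hypothetical; the default levels are the `black` and `white` values from the frame metadata example.

```python
def find_stuck_pixels(frames, black=168, white=4095):
    """Indices of pixels that sit at the black or the white level in every frame.

    frames: list of frames, each a flat list of raw samples of the same length.
    """
    stuck = []
    for i in range(len(frames[0])):
        values = [frame[i] for frame in frames]
        if all(v >= white for v in values) or all(v <= black for v in values):
            stuck.append(i)
    return stuck


if __name__ == "__main__":
    frames = [
        [200, 4095, 100, 900],
        [300, 4095, 168, 4095],
        [250, 4095, 150, 500],
    ]
    print(find_stuck_pixels(frames))
```

The more varied the frames, the fewer false positives; pixels found this way can then be excluded or interpolated before the depth map is computed.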

Bokeh

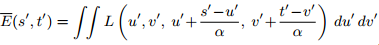

The camera's blur looks like this (these are pictures from a single shot, extracted from the lfp file):

For comparison, I took the same frame with the lytro (left) and with an SLR at f/2 (right):

Artifacts

Unnatural artifacts of various kinds creep into almost every image. For example, like this:

Or like this:

A heartbreaking sight.

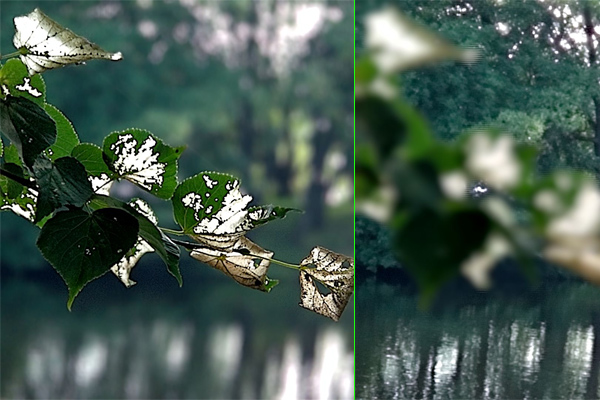

Tools to work with lytro

Lytro.Net : can open lfp and export their parts, there is also a console version:

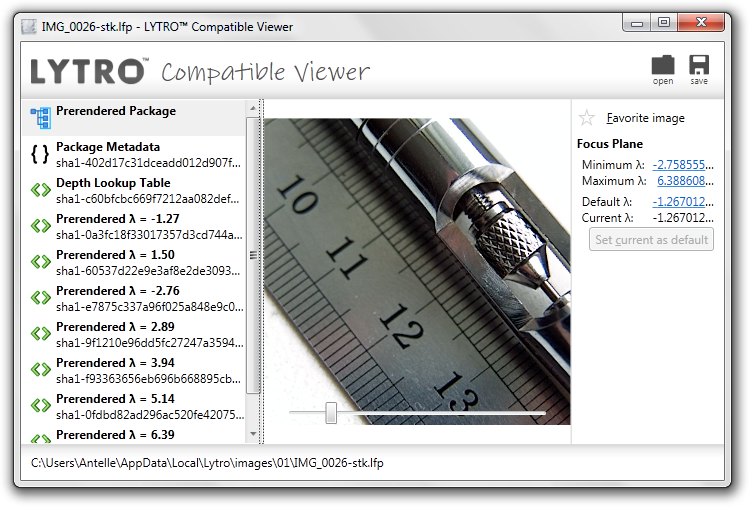

Lytro compatible viewer : opens files, allows focusing on click, there is a focus distance slider:

Reflections

I could not find any tools that can work with light fields as such. Everything that exists works with the .lfp format: display, export to JPEG.

After reading Ren Ng's papers, the technology no longer seems as mystical and unreal as it did at first.

Using the same file format with the same magic number for both kinds of file is definitely evil. I do not understand why they did it.

If you want to work with the raw files, I can send them to you (and I can sell the camera a little later).

Pictures

All the "live" pictures from the article are available on lytro.com:

For the second day now the lytro site has been down in the evenings. Just in case, I uploaded the lfp files to a file hosting site; they can be opened with this.

Links

About lytro:

Reverse Engineering the Lytro .LFP File Format - a simple, clear how-to-hack description

lfptools - a utility for working with lytro files, written in C

Lytro.Net - a port of lfptools to C#

Lytro meltdown - a GUI for working with lytro files in C#, plus descriptions of lytro's SQLite database structure and file format

The (New) Stanford Light Field Archive - examples of light fields from camera arrays; you can play with them online

Lytro Teardown - the Lytro is taken apart here, and you can see all the electronics

Ren Ng's work on light fields:

Light Field Sensing - light fields in pictures; it is hard not to understand this description

Fourier Slice Photography - a theoretical description of the light-field technique

Light Field Photography with a Hand-held Plenoptic Camera - how to apply the light-field technique in photography

LightShop: Interactive Light Field Manipulation and Rendering - a little about how to present light-field information visually

Source: https://habr.com/ru/post/148497/
