Options for building highly available systems in AWS. Overcoming interruptions. Part 2

In this part, we will consider the following options for building high-availability systems based on AWS:

Build a highly available system between AWS availability zones.

The Amazon access zones are separate, independent, isolated data centers within the same region. Availability zones have a stable network connection among themselves, but are designed in such a way that problems in one zone do not affect the accessibility of other zones (they have independent power supply, cooling and security systems). The diagram below shows the existing distribution of availability zones (marked in purple) within regions (indicated in blue).

Most high-level Amazon services such as Amazon Simple Storage Service (S3), Amazon SimpleDB, Amazon Simple Queue Service (SQS), and Amazon Elastic Load Balancing (ELB) have built-in mechanisms for fault tolerance and high availability. Basic infrastructure related services such as Amazon Simple Storage Service (S3), Amazon SimpleDB, Amazon Simple Queue Service (SQS), and Amazon Elastic Load Balancing (ELB) provide features such as Elastic IP, snapshots, and availability zones. Each fault-tolerant and highly accessible system must use these features and use them correctly. In order for the system to be reliable and affordable, it is not enough just to deploy it in the AWS cloud, it needs to be designed so that the application works in several availability zones. Accessibility zones are located in different geographic areas, so using multiple zones can protect an application from problems in a specific area. The following diagram shows the different levels (with software examples) that need to be designed using multiple availability zones.

In order for an application to fail in one zone, the application continues to work without failures and data loss in another zone, it is important to have software application stacks independent from each other in different zones (both within one region and in different regions). At the design stage of the system, you need to understand well which parts of the application are tied to the zone.

Example:

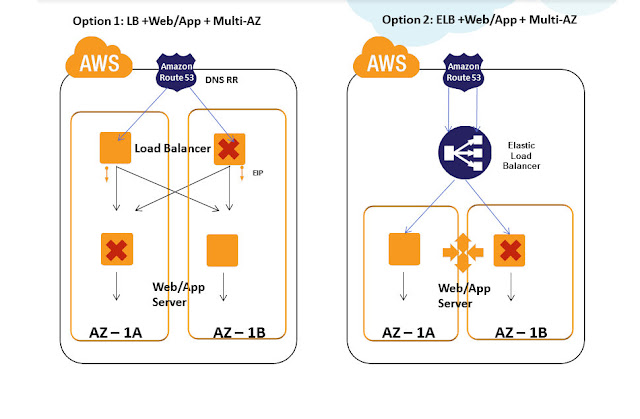

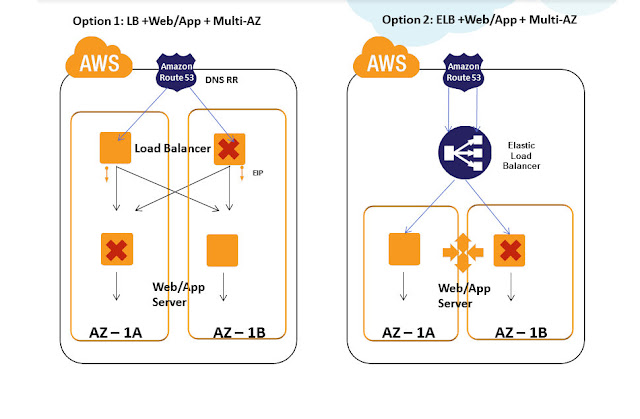

A typical Web application consists of such levels as DNS, Load Balancer, Web server, application server, cache, database. All of these levels can be distributed and deployed in two or more access zones, as described below.

Also, most of the built-in AWS units are initially designed to support accessibility in several zones. Architects and developers working with AWS units at the design stage of an application can use the built-in features to ensure high availability.

Designing a highly available system using built-in AWS units:

These blocks can be used as Web services. These units are initially highly available, reliable and scalable. They have built-in compatibility and the ability to work in multiple availability zones. For example, S3 is designed to ensure 99.999999999% safety and 99.99% availability of objects per year. You can work with these blocks through the API using simple calls. All of them have their advantages and disadvantages that must be considered when building and operating the system. Proper use of these blocks can dramatically reduce the cost of developing and creating complex infrastructure and help the team focus on the product, rather than creating and maintaining the infrastructure.

')

Build a highly available system between AWS regions

Currently there are 7 AWS regions around the world, and their infrastructure is increasing every day. The following diagram shows the current infrastructure of the regions:

Using AWS in several regions can be divided into the following categories: Cold, Warm, Hot Standby and Hot Active.

Cold and Warm are more about disaster recovery, while Hot Stanby and Hot Active can be considered for designing a highly available system between different regions. When designing a highly accessible system between different regions, the following problems should be taken into account:

The following diagram illustrates a simple, highly available system within several regions.

Now consider how to solve these problems:

Application Migration Images of AMI, both S3 and EBS, are available only within one region, respectively, all the necessary identical images must be created in all regions that are planned to be used. Each time you update the application code, the corresponding updates and changes must be made in all regions on all images. You can use automation scripts to do this, but it is better to use auto-configuration systems such as Puppet or Chef, this can reduce and simplify the costs of deploying applications. It is worth noting that in addition to AMI images, there is a service such as Amazon Elastic IP, which also works only within one region.

Data Migration. Each complex system contains data distributed among different data sources, such as relational databases, non-relational databases, caches, and file storages. Below are some technologies recommended for use when working with non-specific regions:

Since all the above technologies are asynchronous replications, you need to fear data loss and not forget about the values of RPO (allowable recovery point) and RTO (allowable recovery time) to ensure high availability.

Network flow: the ability to provide network traffic flow between different regions. Consider the key points that need to be considered when working with a network stream:

Building a highly available system between different cloud and hosting providers

Many people talk about building a highly available system using multiple Cloud and hosting providers. The following are the reasons why companies want to use these complex artectural systems:

Most of the solutions described in the section for several AWS regions are also suitable for this case.

When designing high-availability systems between different Cloud providers or different data centers, you need to carefully determine the following key points:

Sync data. Usually this step is not a big problem if the data warehouses can be installed, deployed to each of the Cloud providers used. Compatibility of software for databases, tools and utilities for working with databases, provided that they can be easily installed, will help solve problems with synchronizing data between different providers.

Network stream

Workload Migration:

Amazon AMI images may not be available to other Cloud providers. New images for virtual machines must be created for each data center or provider, which may be the reason for additional time and money costs.

Complex automation scripts using the Amazon API must be rewritten for each Cloud provider using the appropriate API. Some Cloud providers do not even provide their own API for infrastructure management. This can lead to increased maintenance.

Types of operating systems, software, hardware compatibility, all this should be analyzed and taken into account when designing.

Unified infrastructure management can be a big problem when using different providers, if no tools like RightScale are used for this.

Original article: harish11g.blogspot.in/2012/06/aws-high-availability-outage.html

Posted by: Harish Ganesan

- Building a highly available system across AWS availability zones

- Building a highly available system across AWS regions

- Building a highly available system across different cloud and hosting providers

Building a highly available system across AWS availability zones

Amazon availability zones are separate, independent, isolated data centers within the same region. Availability zones have stable network connections to one another, but they are designed so that problems in one zone do not affect the availability of the others (each has its own independent power supply, cooling, and security systems). The diagram below shows the distribution of availability zones (marked in purple) within regions (marked in blue).

Most high-level Amazon services, such as Amazon Simple Storage Service (S3), Amazon SimpleDB, Amazon Simple Queue Service (SQS), and Amazon Elastic Load Balancing (ELB), have built-in mechanisms for fault tolerance and high availability. Basic infrastructure services, such as Amazon Elastic Compute Cloud (EC2) and Amazon Elastic Block Store (EBS), provide features such as Elastic IP addresses, snapshots, and availability zones. Every fault-tolerant, highly available system must use these features and use them correctly. For a system to be reliable and available, it is not enough simply to deploy it in the AWS cloud: it must be designed so that the application runs in several availability zones. Availability zones are located in physically separate places, so using multiple zones can protect an application from problems that affect a single location. The following diagram shows the different tiers (with software examples) that need to be designed to use multiple availability zones.

For the application to keep working in another zone, without failures or data loss, when one zone fails, the application stacks in different zones must be independent of one another (both within one region and across regions). At the design stage, you need a clear understanding of which parts of the application are tied to a particular zone.

Example:

A typical web application consists of tiers such as DNS, load balancer, web server, application server, cache, and database. All of these tiers can be distributed and deployed across two or more availability zones, as described below.
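As a minimal sketch of the idea of spreading tiers across zones, the Python below places instances of each tier round-robin over a list of zones and then checks that every tier survives the loss of any single zone. The tier names, instance counts, and zone names are illustrative assumptions, and the functions are hypothetical helpers, not part of any AWS API.

```python
from itertools import cycle


def spread_across_zones(tiers, zones):
    """Place `count` instances of each tier over the zones, round-robin."""
    if len(zones) < 2:
        raise ValueError("need at least two availability zones")
    placement = {zone: [] for zone in zones}
    for tier, count in tiers.items():
        zone_iter = cycle(zones)  # restart at the first zone for every tier
        for _ in range(count):
            placement[next(zone_iter)].append(tier)
    return placement


def survives_zone_loss(placement, tiers):
    """Return True if every tier still has an instance after any one zone fails."""
    for failed in placement:
        remaining = [
            tier
            for zone, placed in placement.items()
            if zone != failed
            for tier in placed
        ]
        if any(tier not in remaining for tier in tiers):
            return False
    return True


# Illustrative input: two instances per tier, two zones in one region.
tiers = {"web": 2, "app": 2, "cache": 2, "db": 2}
zones = ["us-east-1a", "us-east-1b"]
placement = spread_across_zones(tiers, zones)
print(placement)
print("survives single-zone failure:", survives_zone_loss(placement, tiers))
```

A tier with only one instance fails the check, which is the kind of zone-bound component the design stage is meant to catch.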

In addition, most AWS building blocks are designed from the start to work across several availability zones. Architects and developers who use these building blocks at the design stage of an application can rely on their built-in features to ensure high availability.

Designing a highly available system using AWS building blocks:

- Amazon S3 for object and file storage

- Amazon CloudFront for content delivery

- Amazon ELB for load balancing

- Amazon AutoScaling for automatically scaling EC2

- Amazon CloudWatch for monitoring

- Amazon SNS and SQS for messaging

These building blocks can be used as web services. They are highly available, reliable, and scalable from the start, and they have the built-in capability to work across multiple availability zones. For example, S3 is designed to provide 99.999999999% durability and 99.99% availability of objects over a year. You work with these blocks through an API using simple calls. Each of them has advantages and disadvantages that must be considered when building and operating the system. Proper use of these blocks can dramatically reduce the cost of developing and building complex infrastructure and lets the team focus on the product rather than on creating and maintaining infrastructure.
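To make the availability figure concrete, here is a small arithmetic sketch in Python. It converts an availability percentage into allowed downtime per year, and shows how availability combines for components in series and for redundant copies. The formulas assume independent failures, which is a simplification, and the 99% per-zone figure in the last line is a made-up input for illustration only.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def allowed_downtime_minutes(availability_percent):
    """Downtime per year permitted by a given availability percentage."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


def serial_availability(*percents):
    """Availability of a chain in which every component must be up."""
    result = 1.0
    for percent in percents:
        result *= percent / 100
    return result * 100


def redundant_availability(percent, copies):
    """Availability when any one of `copies` independent replicas is enough."""
    return (1 - (1 - percent / 100) ** copies) * 100


# 99.99% availability allows roughly 52.6 minutes of downtime per year.
print(round(allowed_downtime_minutes(99.99), 2))
# Two components in series are less available than either one alone.
print(round(serial_availability(99.99, 99.95), 4))
# Hypothetical input: a stack that is 99% available in each of two zones.
print(round(redundant_availability(99.0, 2), 4))
```

The last function is the arithmetic behind running independent stacks in several zones: redundancy only helps to the extent that the copies really do fail independently.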


Building a highly available system across AWS regions

Currently there are 7 AWS regions around the world, and their infrastructure is constantly expanding. The following diagram shows the current infrastructure of the regions:

Multi-region AWS deployments can be divided into the following categories: Cold, Warm, Hot Standby, and Hot Active.

Cold and Warm are more about disaster recovery, while Hot Standby and Hot Active can be considered for designing a highly available system across regions. When designing a highly available system across regions, the following problems should be taken into account:

- Application Migration - the ability to migrate the environment for an application between AWS regions

- Data synchronization - the ability to migrate data in real time between two or more regions

- Network traffic - the ability to control the flow of network traffic between regions

The following diagram illustrates a simple, highly available system spanning several regions.

Now consider how to solve these problems:

Application migration. Amazon Machine Images (AMIs), both S3-backed and EBS-backed, are available only within a single region, so identical images must be created in every region you plan to use. Each time the application code is updated, the corresponding changes must be applied to all images in all regions. You can use automation scripts for this, but it is better to use configuration management systems such as Puppet or Chef, which can reduce the cost and complexity of deploying applications. Note that, like AMIs, Amazon Elastic IP addresses also work only within a single region.
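The requirement that every region carries an image built from the current application version can be checked mechanically. The sketch below is a hypothetical helper in Python: it assumes you already record, per region, which application version the image there was built from (how that record is kept is outside the sketch), and it reports the regions that are missing an image or lagging behind.

```python
def stale_regions(target_version, images_by_region, required_regions):
    """Return regions whose image is missing or not built from target_version."""
    return sorted(
        region
        for region in required_regions
        if images_by_region.get(region) != target_version
    )


# Illustrative data: version strings and the region list are assumptions.
required = ["us-east-1", "eu-west-1", "ap-southeast-1"]
built = {"us-east-1": "1.4.2", "eu-west-1": "1.4.1"}

for region in stale_regions("1.4.2", built, required):
    print("rebuild image in", region)
```

Running a check like this after every release is one simple way to notice that a region has been forgotten before a failover depends on it.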

Data synchronization. Every complex system contains data spread across different data sources, such as relational databases, non-relational databases, caches, and file storage. Below are some technologies recommended for working across multiple regions:

- Databases: MySQL master-slave replication, SQL Server 2012 HADR, SQL Server 2008 replication, programmatic RDS replication

- File storage: the GlusterFS distributed network file system, S3-level replication

- Cache: since replication for cache between regions is expensive in most cases, it is recommended to keep a separate preheated cache in each region.

- For high-speed file transfer, services such as Aspera are recommended.

Because all of the above technologies replicate asynchronously, you must be prepared for some data loss and keep the RPO (recovery point objective) and RTO (recovery time objective) values in mind to ensure high availability.
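With asynchronous replication, the data you can lose in a failover is roughly whatever has not yet reached the replica, so replication lag can be compared directly against the RPO. The Python sketch below illustrates that comparison. The data source names, timestamps, and the five-minute RPO are assumptions for illustration; in practice the last-replicated timestamps would come from your own monitoring.

```python
from datetime import datetime, timedelta


def rpo_violations(now, last_replicated, rpo):
    """Return the lag of every data source whose replica is older than the RPO."""
    return {
        name: now - timestamp
        for name, timestamp in last_replicated.items()
        if now - timestamp > rpo
    }


# Illustrative snapshot of when each source last replicated to the other region.
now = datetime(2012, 7, 1, 12, 0, 0)
last_replicated = {
    "mysql": now - timedelta(seconds=30),
    "file-storage": now - timedelta(minutes=20),
}

for name, lag in rpo_violations(now, last_replicated, timedelta(minutes=5)).items():
    print(f"{name} is {lag} behind, which exceeds the RPO")
```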

Network traffic: the ability to direct network traffic between different regions. The key points to consider when working with network traffic are:

- Currently, the built-in load balancer ELB does not support load distribution between regions, so it is not suitable for distributing traffic between regions

- Reverse proxies and load balancers (such as HAProxy, Nginx) are suitable for this purpose. However, if the entire region is unavailable, then the servers that distribute the load may be unavailable or may not see servers located in other regions, which may lead to application errors.

- A common practice is to manage traffic distribution at the DNS level. Using services such as UltraDNS, Akamai, or Route53 LBR (latency-based routing), you can redistribute traffic between regions.

- Using Amazon Route53 LBR, you can switch traffic between regions and direct user requests to the region with the shortest response time. A server's public IP address, an Elastic IP, or an ELB can be used as the endpoint for Route53.

- An Amazon Elastic IP cannot be moved between regions. FTP and other IP-based services used for communication between applications must be reconfigured to use domain names instead of IP addresses. This is a key point to consider when using multiple regions.
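The routing decision described in the list above, sending a request to the healthy region with the lowest response time, can be sketched in a few lines of Python. This only illustrates the decision rule; it is not how Route53 or any other DNS service is implemented, and the region names, latency figures, and health flags are made-up inputs.

```python
def pick_region(latency_ms, healthy):
    """Choose the healthy region with the lowest measured latency."""
    candidates = {
        region: ms
        for region, ms in latency_ms.items()
        if healthy.get(region, False)  # unknown regions count as unhealthy
    }
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=candidates.get)


# Illustrative measurements as seen from one group of users.
latency_ms = {"us-east-1": 40, "eu-west-1": 95, "ap-southeast-1": 180}

all_up = {"us-east-1": True, "eu-west-1": True, "ap-southeast-1": True}
print(pick_region(latency_ms, all_up))

# If the closest region fails its health check, traffic moves to the next best one.
east_down = dict(all_up, **{"us-east-1": False})
print(pick_region(latency_ms, east_down))
```

Because the decision is made on names rather than addresses, it only helps clients that resolve a domain name, which is why IP-bound services need to be reconfigured to use DNS.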

Building a highly available system across different cloud and hosting providers

Many people talk about building a highly available system using multiple cloud and hosting providers. The following are the reasons why companies want to use these complex architectures:

- Large enterprises that have already invested heavily in building their own data centers, or in renting physical equipment from data center providers, want to use AWS for disaster recovery in case of problems with the main data center.

- Businesses that already run their own private clouds based on Eucalyptus, OpenStack, CloudStack, or vCloud in their own data centers also want to use AWS for disaster recovery. Eucalyptus offers the greatest compatibility with AWS because it is compatible at the API level, so most workloads can be integrated between AWS and Eucalyptus. For example, if an enterprise has a set of scripts written against the AWS API for Amazon EC2 and runs them in a private Eucalyptus cloud, that workload can easily be migrated to AWS when fault tolerance requires it. If the enterprise uses OpenStack or another private cloud platform, the scripts will have to be rewritten and adapted to the new environment, which may be uneconomical for complex systems.

- Companies that consider their own infrastructure insufficient for scalability or high availability can use AWS as their primary site and their own data centers as a secondary site for disaster recovery.

- Companies that are not satisfied with the stability and reliability of their current public cloud providers may want to use a multi-cloud architecture. This is the rarest case today, but it may become more common in the future.

Most of the solutions described in the section for several AWS regions are also suitable for this case.

When designing highly available systems across different cloud providers or data centers, you need to consider the following key points carefully:

Data synchronization. This step is usually not a big problem if the same data stores can be installed and deployed at each of the cloud providers used. Compatible database software, tools, and utilities, provided they can be installed easily, help solve the problem of synchronizing data between providers.

Network traffic

- Switching between multiple providers can be achieved with DNS-based services such as Akamai, Route53, and UltraDNS. Because these solutions are independent of cloud providers, regions, and availability zones, they can be used effectively to switch network traffic between data centers and providers for high availability.

- A data center or hosting provider can supply a fixed IP address, while some cloud providers currently cannot provide a fixed IP for virtual machines. This can be an obstacle to high availability across cloud providers.

- Very often a VPN connection is needed between the data center and the cloud provider to move confidential data between them. Setting up, implementing, and supporting such a connection may be a problem with some cloud providers, and this should be taken into account at the design stage.

Workload migration:

Amazon Machine Images (AMIs) may not be usable with other cloud providers. New virtual machine images must be created for each data center or provider, which can cost additional time and money.

Complex automation scripts that use the Amazon API must be rewritten for each cloud provider using that provider's API. Some cloud providers do not even offer an API for infrastructure management. This can increase maintenance effort.

Operating system types, software, and hardware compatibility must all be analyzed and taken into account at the design stage.

Unified infrastructure management can be a big problem when using different providers unless tools such as RightScale are used.
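One common way to limit how much automation must be rewritten per provider is to put a thin, provider-neutral interface between the scripts and each provider's API. The Python below is a minimal sketch of that design under stated assumptions: `ComputeProvider`, `InMemoryProvider`, and `launch_with_fallback` are hypothetical names, the image IDs are placeholders, and the in-memory adapter stands in for real adapters that would each wrap one provider's actual API.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Tuple


class ComputeProvider(ABC):
    """Hypothetical provider-neutral interface used by automation scripts."""

    name: str

    @abstractmethod
    def launch(self, image: str, count: int) -> List[str]:
        """Start `count` machines from a logical image name and return their IDs."""


class InMemoryProvider(ComputeProvider):
    """Stand-in adapter; a real one would call that provider's own API."""

    def __init__(self, name: str, images: Dict[str, str]):
        self.name = name
        self.images = images  # logical image name -> provider-specific image ID
        self.running: List[str] = []

    def launch(self, image: str, count: int) -> List[str]:
        if image not in self.images:
            raise LookupError(f"{self.name}: image {image!r} has not been built here")
        start = len(self.running)
        ids = [f"{self.name}-{start + i}" for i in range(count)]
        self.running.extend(ids)
        return ids


def launch_with_fallback(
    providers: List[ComputeProvider], image: str, count: int
) -> Tuple[str, List[str]]:
    """Try providers in order; return the name of the one used and the machine IDs."""
    for provider in providers:
        try:
            return provider.name, provider.launch(image, count)
        except LookupError:
            continue  # image not available at this provider, try the next one
    raise RuntimeError(f"no provider could launch {image!r}")


# Placeholder image IDs: each provider needs its own image for the same workload.
aws = InMemoryProvider("aws", {"web": "ami-placeholder"})
datacenter = InMemoryProvider("dc", {"web": "img-web", "batch": "img-batch"})

print(launch_with_fallback([aws, datacenter], "web", 2))
print(launch_with_fallback([aws, datacenter], "batch", 1))
```

The scripts then depend only on the interface, so adding a provider means writing one adapter and building its images rather than rewriting every script. Providers that offer no management API at all cannot be wrapped this way.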

Original article: harish11g.blogspot.in/2012/06/aws-high-availability-outage.html

Posted by: Harish Ganesan

Source: https://habr.com/ru/post/148240/
