A High-Responsibility Data Center: A Photo Tour

For over 15 years we have been building and servicing data centers for customers. "Compressor", the third data center of our own, embodies much of that accumulated experience.


Below is a tour of how the data center is built, in pictures, with explanations of what is where and how it works.

Warning: lots of images.

Brief introduction

We needed the data center to rent out to customers: most often banks, financial-sector companies and large organizations in general, from fields with high reliability requirements. So we decided from the start to build according to the Uptime Institute's rules, with TIER III certification to follow.

The Uptime Institute is an organization that has accumulated a vast number of real-world data center cases and drawn conclusions from each of them. Its standards cover a great many things that data center designers often consider unimportant, and reliability suffers as a result. There are actually very few fully certified TIER III data centers in the Russian Federation: usually a facility is either built "approximately to the standard" with no guarantees, or it follows the TIA-942 standard, which does not require verification. And without verification, a reliability claim is just words.

What are these reliability levels?

Very roughly, TIER I means that when something breaks, the data center stops and the systems are repaired. TIER II means the data center runs continuously but, when necessary, stops so that critical components can be serviced or breakdowns repaired. With TIER III, single failures are repaired without stopping, so a lot of things are duplicated (it stops only in truly extreme cases, when several components fail at once). TIER IV implies full duplication: in effect, two TIER II data centers are built around the racks in one building. The cost per square meter is roughly 15K, 20K, 25K and 40K dollars respectively, but that is for America. Here, unfortunately, customs and Russian specifics make it 2-3 times more expensive. And even that holds only for really large data centers, where there are economies of scale.

In our case, only a meteorite-level failure is permissible: the total allowed downtime is 1 hour 35 minutes per year, which corresponds to 99.982% availability.
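The relationship between the availability percentage and the yearly downtime budget is simple arithmetic. The sketch below is only an illustration (the function name is made up, and a 365-day year is assumed); it shows that 99.982% does work out to roughly 1 hour 35 minutes.

```python
HOURS_PER_YEAR = 365 * 24  # assumption: a non-leap year, 8760 hours


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Return the yearly downtime budget, in minutes, for a given availability."""
    unavailable_fraction = 1.0 - availability_percent / 100.0
    return unavailable_fraction * HOURS_PER_YEAR * 60.0


if __name__ == "__main__":
    minutes = allowed_downtime_minutes(99.982)
    hours, rest = divmod(minutes, 60)
    print(f"{minutes:.1f} minutes per year, about {int(hours)} h {rest:.0f} min")
```

For 99.982% this gives about 94.6 minutes, which rounds to the 1 hour 35 minutes quoted above.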

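How we chose the location

The requirements were as follows:

- Close to Moscow: our customers are organizations that need synchronous data replication between data centers, so we could not go farther than 20-40 kilometers from their existing data center (and they do have one). The closer, the better.

- Convenient location: customer representatives visit all the time, so being within reach of the metro is critical. Convenient parking is a plus.

- Electricity, of course. The data center consumes 8 megawatts, so it needs two independent lines of that capacity plus space for diesel generators.

- Eligibility for TIER III certification, which implies distance from government agencies, power lines, gas stations and similar facilities.

Here is the building we found a few years before the data center launched. This is how it looked back then: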

Previously it was the compressor warehouse of the corresponding plant. Trains even used to drive into this "little house" for unloading. Given the loads the foundation was designed for (6 tons per square meter, against a data center norm of 1.2 tons), it suited us completely. We rebuilt all the building's "stuffing" and the roof from scratch. Of course, we could have built the data center far outside the city: that would have saved on construction and, partly, on electricity. But in our case, being easy to reach and fast to synchronize matters more. The milliseconds that distance adds to synchronous replication (the speed of light) tend to add up to minutes on the complex write operations that matter to banks.
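To see how distance turns into minutes, here is a rough back-of-the-envelope sketch. It assumes light travels through optical fiber at about 200,000 km/s (roughly two thirds of its speed in vacuum), ignores switching and protocol overhead, and uses 40 km and one million sequential writes purely as example figures. The function names are illustrative.

```python
# Assumption: signal speed in optical fiber, about 2/3 of the speed of light in vacuum.
FIBER_SPEED_KM_PER_S = 200_000.0


def round_trip_ms(distance_km: float) -> float:
    """Propagation delay there and back, in milliseconds."""
    return 2.0 * distance_km / FIBER_SPEED_KM_PER_S * 1000.0


def added_seconds(distance_km: float, sequential_writes: int) -> float:
    """Extra time when each write waits for the remote site to acknowledge it."""
    return round_trip_ms(distance_km) * sequential_writes / 1000.0


if __name__ == "__main__":
    rtt = round_trip_ms(40.0)
    total = added_seconds(40.0, 1_000_000)
    print(f"round trip: {rtt:.2f} ms, one million writes: {total / 60:.1f} min")
```

Under these assumptions 40 km adds about 0.4 ms per acknowledged write, and a million dependent writes accumulate several minutes of pure propagation delay.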

In general, the data center market is divided into two main parts. The first sells rack units: these are usually solutions for small companies that need to host, say, a website or, less often, one or two small application systems. In such data centers power is supplied without redundancy, there are many technical caveats such as requirements for the customer's equipment (at least in terms of rack size), and often you cannot install your own hardware at all. The second part of the market serves large customers. In such data centers there are no restrictions on hardware: the equipment can even be round (this has happened in history: CRAY), and a single server can occupy several racks. In our case, many customers additionally fence off their equipment in the machine rooms. You can visit us often, work as long as you need, and bring any team you like. True, it is better to warn us about large delegations: the security shift is reinforced when there are more than 5 people in a machine room.

Two independent power lines from the city, 8 megawatts each, feed the data center. The third source, the reserve, is 7 diesel generators of 2 MVA each. The diesels operate in an N+1 scheme: 6 carry the load while one can be under maintenance. The load on a diesel should not exceed 80%, so the system is built with a one-and-a-half-fold margin. In total, taking this and power losses into account, they deliver a little more than the required 8 megawatts. Of these, 4.5 go to IT hardware, 2.5 to cooling, and the remaining megawatt to other engineering and auxiliary systems: lighting, domestic air conditioners, and so on.
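The generator sizing can be checked with a few lines of arithmetic. The sketch below is an approximation: the power factor of 0.85 used to convert MVA to MW is an assumption and does not come from the text, and the function name is made up for the example.

```python
GENERATORS_TOTAL = 7      # from the text
GENERATORS_SPARE = 1      # N+1: one unit may be under maintenance
RATING_MVA = 2.0          # from the text
MAX_LOAD_FRACTION = 0.80  # from the text
POWER_FACTOR = 0.85       # assumption, used to convert MVA to MW

LOAD_BUDGET_MW = {"IT hardware": 4.5, "cooling": 2.5, "other systems": 1.0}


def usable_power_mw(total, spare, rating_mva, max_load, power_factor):
    """Real power available with the spare unit out of service."""
    running = total - spare
    return running * rating_mva * max_load * power_factor


if __name__ == "__main__":
    available = usable_power_mw(GENERATORS_TOTAL, GENERATORS_SPARE,
                                RATING_MVA, MAX_LOAD_FRACTION, POWER_FACTOR)
    required = sum(LOAD_BUDGET_MW.values())
    print(f"available {available:.2f} MW, required {required:.1f} MW")
```

With these numbers, six running units at 80% load give a little over 8 MW against a budget of exactly 8 MW, which is consistent with the description above. A different power factor would shift the result.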

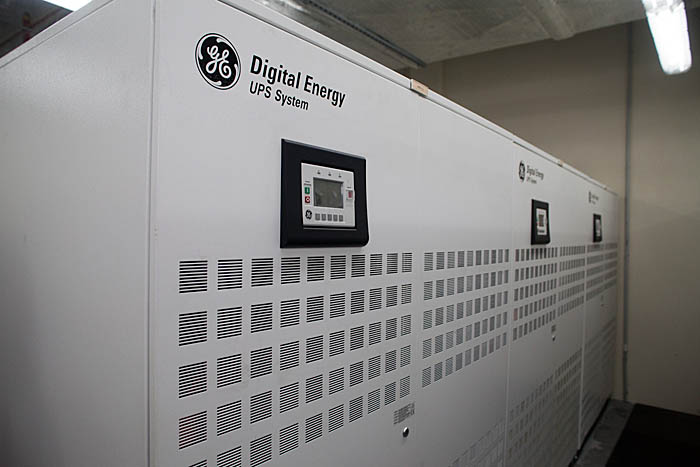

If both power lines are lost, the UPSs take over and the automation switches the data center to the diesels. There is a very important point here: the inrush currents of some systems are quite high, so if the entire load is thrown onto the diesels at once, they may not cope. The UPSs run for several minutes, so there is time to switch equipment over sequentially, in blocks, with a delay of, say, 10 seconds between them. A diesel starts within 1 minute, the full switchover takes another 4, and the whole data center is then on autonomous power.
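The staged switchover can be pictured as a simple schedule. The following is a minimal sketch, not the actual automation logic: the block names and sizes are invented, and the only figures taken from the text are the 1-minute generator start, the 10-second example delay and the 4 further minutes for the full switchover.

```python
from dataclasses import dataclass


@dataclass
class LoadBlock:
    """A group of equipment moved to the generators in one step (hypothetical)."""
    name: str
    power_kw: float


def switching_schedule(blocks, generator_start_s=60.0, step_delay_s=10.0):
    """Return (time_s, block_name, cumulative_kw) for each switching step.

    The first block moves over once the diesels are running; each later block
    follows after a fixed delay so that inrush currents do not coincide.
    """
    schedule = []
    cumulative_kw = 0.0
    for index, block in enumerate(blocks):
        cumulative_kw += block.power_kw
        time_s = generator_start_s + index * step_delay_s
        schedule.append((time_s, block.name, cumulative_kw))
    return schedule


def fits_in_window(schedule, window_end_s=300.0):
    """True if the last step happens within 1 min start plus 4 min switching."""
    return not schedule or schedule[-1][0] <= window_end_s


if __name__ == "__main__":
    # Block names and sizes are invented for the example.
    blocks = [
        LoadBlock("machine room 1", 1500.0),
        LoadBlock("machine room 2", 1500.0),
        LoadBlock("pumps", 400.0),
        LoadBlock("chillers", 2100.0),
    ]
    plan = switching_schedule(blocks)
    for time_s, name, total_kw in plan:
        print(f"t={time_s:4.0f} s  {name:<15} total {total_kw:6.0f} kW")
    print("fits in 5 minutes:", fits_in_window(plan))
```

With a 10-second step, up to 25 blocks fit between the 1-minute and 5-minute marks; a real sequence would also depend on the UPS autonomy and on how the loads are actually grouped.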

The diesels stand next to the building:

Backup equipment is tested at regular intervals: the reserve must always be ready and working. For example, each diesel is tested under load once a month. The test plan usually includes an emergency scenario that exercises all systems: the data center is disconnected from both power lines, the UPSs kick in, monitoring automatically switches all hardware to the diesels, the cooling system uses the chilled-water reservoir to absorb heat (cooling partially shuts down for a few minutes during the switchover, except for the internal circuit), and so on. Then the "accident" is cleared and the automation returns everything to normal. It is a very harsh test procedure, but it is necessary for the peace of mind of engineers and customers. In real life, a situation like this has happened in our data center only once in the past 3 years: one city feed was undergoing a manual test when the second went down. Everything worked as it should.

Outside the data center, around the diesels, there are about 30 tons of copper in cables.

The diesels are fed with diesel fuel from the tanks: here are the two hatches.

Each storage tank holds 30 cubic meters. Fuel rings lead from the tanks and run past all the diesels. The pipelines are redundant too, and there is a valve before each pipe outlet. So you can shut off just one inlet of one diesel: the second inlet of that diesel and both inlets of the other machines will keep receiving fuel.

One such tank is enough for a day of operation.

Here are the pipelines to the diesels. One pipe is covered with a winter jacket: pipe insulation and a heating cable. The second pipe is "naked" because it is currently undergoing preventive maintenance.

Fuel ring pipes and valves. Also visible is the heating cable, removed for the duration of the maintenance; it prevents problems with fuel in winter.

Before commissioning, a machine room is tested: 10 heaters of 100 kW each are installed for three days while the air conditioners are put through their paces. Why this is done and why it takes so long is explained below, when we reach such a test room.

Going into the building itself

People enter through a standard checkpoint, but equipment arrives in a much more interesting way, through this hall:

There are two loaders, and you can also easily unload onto the ramp from a tail lift. The ramp is the same height as the raised floor: 1.2 meters. The main thing to remember when driving a loader in a machine room is that it takes as long to brake as it does to accelerate. With servers weighing more than 1.5 tons, this is a very important rule.

Here is an IBM System P795, a server weighing about 1,700 kilograms that must not be tilted more than 10 degrees, otherwise its warranty is void immediately.

One like it was once installed both in our data center and in another company's backup data center. In our case the truck arrived, pulled up alongside the ramp, and our staff took the server and installed it; there is nothing even to tell. In the second data center a whole tale was born about the delivery: the ramp there is narrow, so the server had to be carried by hand; six strong men somehow maneuvered it while another watched the level so that, God forbid, the expensive warranty was not lost. On top of that, the server did not fit through the door height-wise because the magnetic lock was in the way, and the lock had to be urgently cut off with an angle grinder.

By comparison, the procedure for delivering hardware to our data center is very simple: a specialist from IBM arrives, walks through, takes a look, is pleased, and calls the forwarding agents. The floors, by the way, are ready for anything: they are hard, and even the heaviest hardware rolls across them without trouble. Another customer recalls hauling a piece of hardware weighing just under a tonne across a carpeted floor, and what it cost him to get it out of his previous data center.

Here is the elevator: it lifts 2.5 tons. It is a pass-through design: if you need the first floor, you simply drive through it; if you need the second, you ride up.

Along the way, incidentally, there is a toilet for disabled people at the level of the raised floor; this is also a requirement of a standard. A Russian one, admittedly, but we follow all the rules.

Electricity arrives at the transformer substation, then goes to the main switchboard.

AVR stands for automatic reserve input, that is, an automatic transfer switch. Everything shown on the panels is also visible in monitoring: an Ethernet cable runs from each module.

For some important devices, remote control is deliberately disabled: clicking on a computer is not safe because of the notorious "human factor". It matters to us that someone walks up and does it by hand, which is a far more deliberate act. At the same time, remember that switching to the reserve when both main lines are lost happens automatically: in such situations there are not even 30 seconds to think and run.

In this room, electricity from the transformers comes in through the wall via busbars, and electricity from the diesels comes from below, through the raised floor. The switchboards select the feed and pass it on to the UPSs.

Take a look at these great busbars:

The busbar looks like a rail; it is on the left in the background.

They are very easy to maintain: they are modular, save space, are easy to replace and, if necessary, easy to branch. If ordinary cable were used instead, it would take a whole crew to lay it. Even small cables have to be bent by two or three people, to say nothing of cables this size. Such a cable would also have a bending radius of one and a half meters, whereas a busbar turns at a right angle.

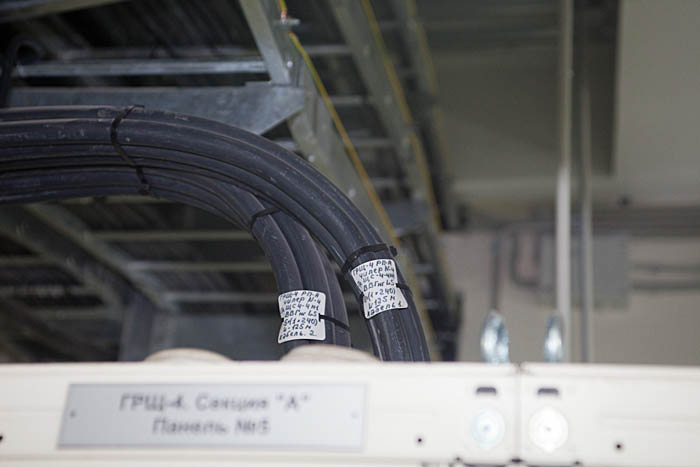

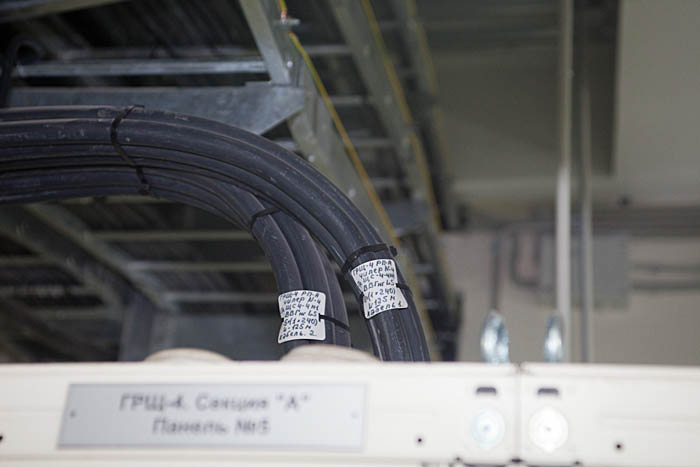

Ordinary cables are used too, by the way. They go to non-critical consumers: lighting (which has its own backup), the external cooling circuit, and so on. Here the only backup is the diesels; a UPS is not needed. They are laid neatly despite their impressive cross-sections of up to 240 mm² per core.

The red pipes are the fire suppression system. Freon 125 is used; it binds oxygen and is non-toxic. Under the raised floor there are also nozzles and sensors: every more or less isolated volume has its own system.

Safety always matters. In the corner is the essential kit for the hall:

And these are the switchboards that control power to the customers' racks. They are duplicated too. The incoming breakers monitor voltage and report everything to the monitoring system, and the rack breakers report their on/off status there as well. This is very convenient: for example, when there is a short circuit in a customer's equipment, you can see who, what and where, and what to do about it.

Our engineers go into a rack for one reason only: smoke. In the past three years there has been one more or less abnormal case: a battery failed in the built-in UPS of a storage system, and a characteristic burning smell appeared. We could not localize the source in the machine room (there is a strong draft in the halls), and the smell dissipated quickly. We contacted the customer and, once we had permission, went into the cage, checked the indicators, found the fault and reported it. The scenario for such cases is quite interesting in general: in any emergency, a "cosmonaut" arrives within a minute, that is, a firefighter in a protective suit with an oxygen cylinder (he can walk the halls after the extinguishing gas has been released), along with security guards and engineers. The most surprising thing, by the way, is that the firefighter really does meet the time standard in every drill, even though he has to run for at least 20 seconds.

Machine rooms are designed to separate areas of responsibility as much as possible. Put simply, if a customer decides to fence off their equipment, our engineers should never need to go inside the fence: all our systems must be outside the bars. This comes from experience: in our second data center we constantly have to get approval to replace some trifle in a client's area (and invite the client's specialists and security guards to come and stand behind the engineer: such is the procedure). On the other hand, there is a risk that the customer will change something on the engineering equipment themselves. In general, it is better not to overlap.

Inside the fences there are only video cameras, gas nozzles and gas analyzer sampling tubes. Everything else is outside the machine rooms.

There is a strong draft here: cold air comes from two corridors, each with 8 fan coil units. There are no solid partitions in the raised floor or the false ceiling. Cold air is blown up through the floor grilles, and hot air is drawn off at the top. So cold air rises through the rack, heats up there, continues upward and goes off to be cooled.

Cameras watch the racks. When a customer moves hardware or racks, the cameras track it all. Cameras in the aisles are installed as each aisle is built out, which is convenient for non-standard equipment and for setting up partitions between customers.

In our first data center we installed the cameras and infrastructure such as rack cabling up front, and then had to redo everything, because no two customers have the same hardware. Now cables are not laid until the customer arrives, so that the customer gets exactly what they need. Shared cross-connects are not very welcome; they make many customers uneasy.

There are gas nozzles in the machine room as well. The system is designed to distribute the gas fully within 10 seconds of being triggered (including under the raised floor and above the false ceiling).

An early fire detection system is used: an ordinary sensor reacts to 60 degrees or to smoke, that is, when something is already burning. We have far more sensitive gas analyzer tubes on the ceiling.

Procedure in case of fire: disconnect the module, try to extinguish by hand, evacuate people, then start the gas manually with the button on the outside of the machine room door. Equipment and electrics keep running throughout; there is simply no oxygen left in the hall. Extinguishing and smoke removal take two hours, during which nobody may enter the hall without a suit and a cylinder. Recharging the fire suppression system, and everything else that was triggered, costs about 2 million rubles. Press the button, and the money is spent.

Here is a rack and the infrastructure nearest to it. Modular solutions are used for everything. The cable trays can be moved. Optical cassettes are used: the connector is like a patch cord's, only with 12 fibers, which allows fast installation and changes during operation.

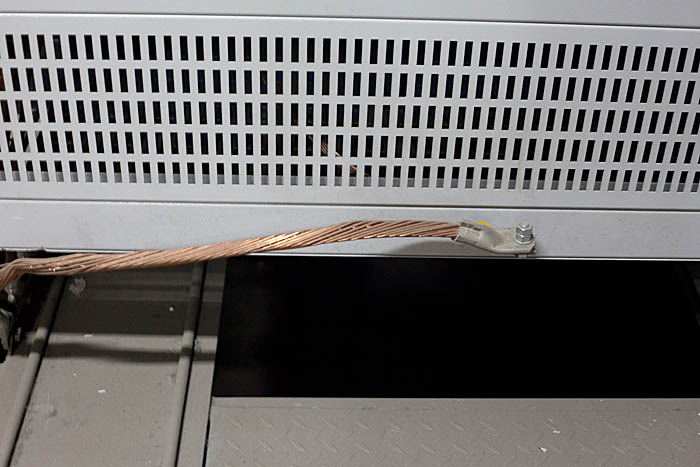

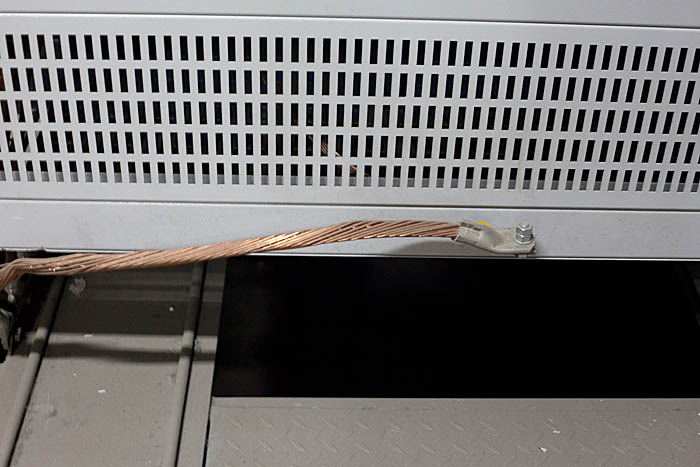

Racks must be grounded. For low-current equipment this is critical.

Another room. Here water runs under the floor, in ring mains for supplying cold and removing heat:

There are lots of cross-connections on these rings: they are redundant (even the gate valves, so that 10 years on, in an emergency, we do not discover that one will not close). Sensors are installed everywhere. Under the room there is a mini-pool with a sloped floor to collect water in case of leaks, plus a pump, to keep the electrical systems safe.

Fire alarm sensors, VESDA. This is the heart of the early fire detection system:

A conventional grounding and potential equalization system is used. It extends to the raised floor, whose tiles are themselves conductive. Every object in the machine room is grounded. You can walk around the hall barefoot and touch anything: you will not get a shock.

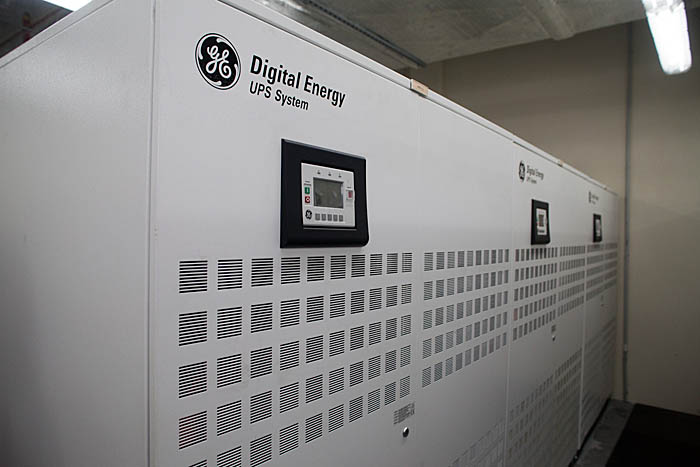

And this is a switchboard and an inverter.

This room has no air conditioning, because the UPS efficiency is very high, very little heat is released, and what there is gets carried away by ordinary supply ventilation. The air is prepared in the ventilation chamber, with filters, humidifiers and dehumidifiers.

The batteries are in a separate room because they need their own temperature. A little colder, and capacity drops. A couple of extra degrees warmer, and service life falls from ten years to two. Once the machine rooms are full, there will be about a hundred tons of batteries. The batteries have pressure relief valves (hydrogen is released), which creates a risk of a volumetric explosion, so the room has a separate exhaust system.

The red pipes vent the air outside.

The battery contacts are not insulated. One such string can deliver a 400-volt shock. And unlike AC, direct current's effect is not confined to the skin; it affects internal organs far more, which is very dangerous. In general, the higher the frequency, the less dangerous the current.

This is a ramp for a crane: equipment delivery routes need a backup too. We already used the crane ramp when bringing in the engineering equipment.

By the way, can you guess why there are grilles in the floor right at the ramp exit?

And in this hall a tough cooling test is under way.

There are 100 kW heaters in the hall, heating the air for 72 hours. During the test it is very important to confirm that the temperature does not creep up under prolonged heating; in a short test it is unclear whether a rise is measurement error or heat accumulating in the walls. The test runs this long for a simple reason: nobody measures how well a data center's walls, ceilings and equipment store heat. The air warms up in 5-10 minutes, and after that all the heat goes into the walls and so on. There was a case where the premises of another data center held heat for 4 hours (and equipment that shuts down automatically at around 35 degrees could not be switched on). The test is considered passed only if the temperature does not change by even a degree.
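The pass criterion ("does not change by even a degree") can be written as a small check over logged temperature readings. This is only a sketch of one possible interpretation: the function name, the idea of looking at a trailing window of readings and the sample values are all assumptions.

```python
def is_temperature_stable(readings_c, window, max_change_c=1.0):
    """True if the last `window` readings vary by less than `max_change_c`.

    `readings_c` is a chronological list of air temperatures in degrees Celsius.
    """
    if window < 2 or len(readings_c) < window:
        return False  # not enough data to judge stability
    recent = readings_c[-window:]
    return max(recent) - min(recent) < max_change_c


if __name__ == "__main__":
    # Invented sample data: a quick initial rise, then a plateau.
    settled = [22.0, 24.5, 26.0, 26.8, 27.1, 27.3, 27.4, 27.4]
    # Invented sample data: the walls are still soaking up heat.
    creeping = [22.0, 23.5, 25.0, 26.5, 28.0]
    print(is_temperature_stable(settled, window=4))
    print(is_temperature_stable(creeping, window=4))
```

In a 72-hour test the window would cover many hours of readings, so that a slow drift caused by the building's thermal mass is not mistaken for a plateau.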

Areas where people work have water-based fire suppression. Volumes with different types of suppression, gas and sprinkler, are isolated from each other. A comfortable temperature is maintained in the staff area.

This is the pump room. There are two cooling circuits here.

Given how much power the cooling system draws, keeping it running during the switchover to diesel would require a huge room full of UPSs. Naturally, there is a more elegant solution: it is enough to keep a pool of chilled water at 13 degrees, which provides the same thing as a UPS (after all, the end product of all that electrical work is cold water), but without losses or delays, and without complicated maintenance. The pool holds 90 tons of pre-chilled water, enough for at least 15 minutes of normal operation, the same as the UPS electricity. After that the diesels take over. Heat is rejected to the outside through the second circuit, with chillers and cooling towers. The chillers, by the way, circulate not water but an aqueous glycol solution, a bit like vodka, so that it does not freeze in winter. The circuits meet in the heat exchangers.
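A rough energy balance shows why 90 tons of water is about the right size for a 15-minute buffer. The sketch below assumes that the pool has to absorb the full 4.5 MW dissipated by the IT hardware, that the water mixes perfectly, and that the specific heat of water is 4186 J/(kg·K). These are simplifications for illustration, not the designers' actual calculation.

```python
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0  # textbook value for liquid water


def water_temperature_rise_k(heat_load_w, duration_s, water_mass_kg):
    """Temperature rise of the pool after absorbing the load for `duration_s`."""
    energy_j = heat_load_w * duration_s
    return energy_j / (water_mass_kg * WATER_SPECIFIC_HEAT_J_PER_KG_K)


def ride_through_minutes(heat_load_w, water_mass_kg, allowed_rise_k):
    """How long the pool lasts before warming by `allowed_rise_k`."""
    energy_j = water_mass_kg * WATER_SPECIFIC_HEAT_J_PER_KG_K * allowed_rise_k
    return energy_j / heat_load_w / 60.0


if __name__ == "__main__":
    # Assumption: the full 4.5 MW IT load ends up as heat in the chilled water.
    rise = water_temperature_rise_k(4.5e6, 15 * 60, 90_000)
    print(f"temperature rise after 15 minutes: {rise:.1f} K")
```

Under these assumptions the pool warms by a little under 11 K in 15 minutes, from 13 degrees to roughly 24.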

Ideally the pipes should last 10 years without replacement. To keep them from fouling, the water has to be treated.

The pool is visible in the background (behind the lamps): this is its upper edge.

The roof: here are the chillers and dry cooling towers.

In summer, supplementary freon-based cooling is used; the rest of the time the outside air is enough for free cooling. The glycol solution is pumped through radiators with outside air flowing over them. A radiator that has been standing idle can get very cold, so switching it on could potentially freeze something. To prevent this, bypasses are used: they keep the supercooled solution out of the system until it warms up.

Here is the ramp, seen from above:

A CHP plant. By the way, some unkind people marked it on Wikimapia as a "nuclear power plant", and because of that the foreigners nearly derailed our TIER III certificate with the question: "Russians, have you lost your minds, building next to a nuclear facility?" We spent a long time proving that it is an ordinary CHP plant.

Here is the ventilation chamber, where air is prepared for supply to the data center premises:

The transformers are on the first floor:

And here is a co-working room for customer delegations.

First, of course, customers need a place to configure servers after installation. Second, there are people who at some point need to stay within walking distance of the hardware. Third, if you come to the data center lightly dressed you can quickly get chilled in the machine room, so you need somewhere to drink hot tea. A customer's shift of admins can also be based here when the client has a hundred racks.

This is the dining room. It is cozy, and all the equipment is duplicated here too.

That is what a high-responsibility data center looks like.

Distributive

Racks

')

Below is a story about a data center device in pictures and an explanation of where, what and how it works.

Caution traffic .

Brief introductory

We needed the data center for renting to customers. Most often - banks, companies of the financial sector and in general large organizations from areas where there are high requirements for reliability. Therefore, we immediately decided to build on the rules of the Uptime Institute for further certification by TIER III.

The Uptime Institute is an organization that has accumulated a sea of all sorts of different practical cases with data centers and has drawn conclusions for each situation. Standards encompass many, many things that often seem to be unimportant to data center designers - and reliability suffers. Fully certified TIER III data centers in the Russian Federation are actually a few: usually either the object is built “approximately according to the standard” without guarantees, or they are guided by the standard TIA-942, which does not imply verification. And without checking the reliability statement - just words.

What are these levels of reliability?

Speaking very roughly, TIER I means that in the event of a breakdown, the data center stops working and the systems are being repaired. TIER II says that the data center runs continuously, but if necessary, stops to service critical nodes or repair breakdowns. In case of single failures, TIER III is repaired without stopping, so a lot of things are duplicated (it stops only in really extreme cases when several knots break simultaneously). TIER IV implies full duplication: in fact, two TIER-second data centers in one building are built around the racks. The cost per square meter, respectively, 15K, 20K, 25K and 40K dollars, but this is for America. We have, unfortunately, because of customs and Russian specifics, 2-3 times more expensive. And then only for really large data centers, where there is economies of scale.

In our case, only a meteorite-level failure is permissible, allowing a total of suspension of work for 1 hour and 35 minutes per year, that is, fault tolerance - 99.982%.

How to choose a place

The requirements were as follows:

- Close to Moscow: our customers are organizations that require synchronous data replication between data centers, so more than 20–40 kilometers from their existing data center (and there is one) you cannot leave. The closer - the better.

- Convenient location: representatives of the customer come all the time, therefore the location in accessibility from the metro is critical. Plus - convenient parking.

- Of course, the availability of electricity. The data center consumes 8 megawatts - that is, you need two independent lines of such power plus space for diesel generators.

- The ability to be certified by TIER III, which implies the removal from government agencies, power lines, gas stations and other similar objects.

Here is the building that we found a few years before the launch of the data center - so it was then:

Previously, it was a warehouse of compressors in the relevant plant. In this "house" even drove the train for unloading. Considering under what loads the foundation was considered (6 tons per square meter at Tsodovskaya norm of 1.2 tons), it completely satisfied us. We made all the "stuffing" of the building and the roof again. It is clear, it was possible to build a data center and far beyond the city: it would lead to savings in construction costs, and, in part, in electricity. But in our case, the ability to comfortably reach and quickly synchronize is more important. Milliseconds, which adds a distance in synchronous replication (the speed of light) tend to fold in minutes on complex write operations that are important to banks.

In general, the data center market for customers is divided into 2 main parts: in the first case we are talking about selling units in racks - these are usually solutions for small companies that, for example, need to keep a website or, rarely, 1-2 small application systems. In such data centers, power is supplied without reserve, many technical reservations such as requirements for the customer’s equipment (at least in terms of the rack size), it is often impossible to install your hardware at all. The second part of the market works for large customers. Work with iron in such data centers is carried out without restrictions: the equipment can be at least round (in history it was - CRAY), and one server can even occupy several racks. In our case, many customers additionally shield their equipment in machine rooms. You can come to us often, work as much as you need - and any composition. True, it is better to warn about large delegations: the change of protection is intensifying when there are more than 5 people in the machine room.

Two independent power lines from the city, each at 8 megawatts, fit the data center. The third - the reserve is 7 diesel generators of 2 MVA each. Diesels operate according to the N + 1 scheme, that is, 6 is always in operation, one can be serviced. The load on the diesel should not exceed 80%, so the system is built with a margin of one and a half times. In total, with this in mind and the power loss, they give a little more than the required 8 megawatts. Of these, 4.5 are spent on iron, 2.5 — on cooling, the remaining megawatt is spent on other engineer and other systems — light, household air conditioners, and so on.

In case of loss of both power lines, the UPS operates, and the automation switches the data center to diesel engines. There is a very important point: the starting currents of some systems are quite high, so if you just throw the entire load on diesel engines at one moment, diesel engines can not cope. The UPSs work for a few minutes, so there is time to switch equipment sequentially, in blocks, with a delay of, for example, 10 seconds. The diesel engine starts in 1 minute, in another 4 full switching takes place, and the entire data center switches to autonomous power.

Next to the building are diesels:

Tests of backup equipment are performed at regular intervals: it is necessary that the reserve is always ready and functional. For example, a diesel under load is checked once a month. Test plan usually includes some emergency scenario that involves the use of all systems, that is, the data center is disconnected from both power lines, the UPS is turned on, monitoring automatically switches all iron to diesel engines, the cooling system uses a chilled water reservoir to reset the heat (it partially shuts down to several minutes to switch, except for the internal circuit), and so on. Then the "accident" is eliminated, and the automation returns everything to its normal state. This is a very tough testing procedure, but it is necessary for the calmness of engineers and customers. In reality, similar situations have happened in our data center over the past 3 years only once: one direction from the city was tested manually, the second went out. Everything worked properly.

Outside the data center about diesel engines about 30 tons of copper in the cable.

Diesel powered diesel fuel from the tanks: here are two hatches.

Each storage fits in 30 cubic meters. The fuel rings running through all diesel engines are moving away from the tanks. Pipelines are also reserved, there is a valve before each pipe outlet. So you can block just one input to one diesel: the second input of this diesel and both inputs of the other cars will continue to receive fuel.

One such tank is enough for a day of work.

Here is the pipelines to diesel engines. On the pipe covers with a winter jacket, insulating pipe and heating cable. The second pipe is “naked” - specifically, it is now under preventive maintenance.

Fuel ring tubes and valves. And also the heating cable removed at the time of the prevention, so that there were no problems with fuel in the winter.

Before commissioning, the machine room is tested, 10 heaters of 100 kW each are installed for three days, while air conditioners are tested. Why and why so long - below, when we get to such a test room.

We go in the building itself

People pass through a standard checkpoint, but the equipment gets here much more interesting - through such a hall:

There are two loaders, plus you can easily unload onto the ramp from the tail lift. The ramp is the same height as the raised floor - 1.2 meters. The main thing when driving a loader in a machine room is to remember that it slows down as much as it accelerates. For servers weighing more than 1.5 tons, this is a very important rule.

Here is the IBM System P795, which is a server weighing about 1,700 kilograms, which cannot be tilted more than 10 degrees, otherwise the warranty on it is lost immediately.

A similar one was somehow installed in our data center and in a backup data center of another company. We had a car arrived, went overboard to the ramp, our employees took the server and set it up, even talking about nothing. In the second data center, a whole bike was born about how they delivered it - there is a narrow ramp, I had to carry it with my hands, and six healthy men tweaked it somehow, and another looked after the level, so that God forbid not lose the expensive guarantee. And there, the server did not pass the height of the door, the magnetic lock interfered, we had to urgently cut it off with a grinder.

For comparison, the procedure for delivering iron to our data center is very simple: a specialist arrives from IBM, walks in, looks, enjoys, calls forwarding agents. The floors, by the way, are prepared for everyone: they are hard, and even the heaviest iron rolls on them normally. Another customer remembers how he took out a piece of iron under a tonne across the carpeted floor, and what it cost him to pull it out of his former data center.

Here is the elevator: it raises 2.5 tons. It is cross-cutting: if you need to first, just drive through it, if you need to go to the second floor, you go up.

On the way, by the way, there is a toilet for disabled people to the level of the raised floor, this is also a requirement of the standard. True, Russian, but we follow all the rules.

Electricity comes to the transformer substation, then goes to the main switchboard (switchboard).

AVR is the automatic transfer switch, which brings in the reserve input automatically. Everything shown on its displays is also visible in the monitoring system: an Ethernet cable runs from each module.

For some critical devices, remote control is deliberately disabled: clicking around on a computer is not safe because of the notorious “human factor”. It matters to us that someone walks up and performs the operation by hand, which is a far more deliberate act. At the same time, remember that switching to the reserve when both main lines are lost happens automatically: in that situation there is not even 30 seconds to think and run.

In this room, electricity arrives through busbars from the transformers behind the wall and, from under the raised floor, from the diesel generators. The switchboards select the feed and pass it on to the UPS.
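The feed selection described above can be sketched as a simple priority rule. This is only an illustrative sketch, not the logic of the actual switchgear: the names `Feed` and `select_feed` are hypothetical, and the assumption that line A is preferred over line B is ours, since the text only says that the switch to the reserve is automatic when both main lines are lost.

```python
from enum import Enum


class Feed(Enum):
    """Possible power sources for the UPS input (hypothetical names)."""
    MAIN_A = "main line A"
    MAIN_B = "main line B"
    DIESEL = "diesel generators"
    NONE = "no feed available"


def select_feed(main_a_ok: bool, main_b_ok: bool, diesel_ok: bool) -> Feed:
    """Pick a feed by fixed priority: main lines first, diesel as reserve.

    Assumption: line A is preferred over line B. The source does not say
    which main line has priority.
    """
    if main_a_ok:
        return Feed.MAIN_A
    if main_b_ok:
        return Feed.MAIN_B
    if diesel_ok:
        # Both main lines are lost: fall back to the reserve automatically.
        return Feed.DIESEL
    # Nothing upstream is healthy; the UPS batteries carry the load.
    return Feed.NONE


if __name__ == "__main__":
    print(select_feed(main_a_ok=False, main_b_ok=False, diesel_ok=True).value)
```

In a real installation this decision is made by hardware interlocks with timing and retransfer rules; the sketch only shows the ordering of sources.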

Take a look at these great busbars:

The busbar looks like a rail, on the left in the background

They are very easy to maintain: they come in modular sections, save space, are easy to replace, and a branch can be added easily when needed. If an ordinary cable were used instead, it would take a whole crew to lay it. Even smaller cables have to be bent by two or three people, to say nothing of cables this size. Such a cable would also have a bending radius of a meter and a half, whereas a busbar turns at a right angle.

By the way, there are ordinary cables too. They go to non-critical consumers: lighting (there is a backup), the external air-conditioning circuit, and so on. Here the only backup is the diesel generators; a UPS is not needed. The cables are neatly laid despite their impressive cross-sections, up to 240 mm² per core.

The red pipes are the fire suppression system. It uses Freon 125, which binds oxygen and is non-toxic. Under the raised floor there are also nozzles and sensors: every more or less enclosed volume has its own system.

Safety always matters. In the corner is the equipment needed for the hall:

And here are the switchboards that control power to customers' racks. They are duplicated as well. The incoming circuit breakers monitor the voltage and report everything to the monitoring system, and the per-rack breakers report their on/off status there too. This is very convenient: for example, when there is a short circuit in a customer's equipment, you can see who, what, and where, and what to do about it.

Our engineers go into a rack for one reason only: smoke. In the past three years there has been one more or less abnormal incident: a battery failed in the built-in UPS of a storage system, and a characteristic burning smell appeared. The source could not be localized in the machine room (there is a strong airflow in the halls), and the smell quickly dissipated. We contacted the customer and, having received permission, went inside the cage, checked the indicators, found the fault, and reported it. The general scenario for such cases is quite interesting: in any emergency, a “cosmonaut” arrives within a minute, that is, a firefighter in a protective suit with an oxygen cylinder (he can walk through the halls after the extinguishing gas has been released), along with guards and engineers. The most surprising thing is that in every drill the firefighter really does meet the standard, even though he has to run for at least 20 seconds.

Machine rooms are designed to separate areas of responsibility as much as possible. In plain terms, if a customer decides to fence off his equipment, our engineers should not need to go inside the fence: all our systems should be on the other side of the bars. This comes from experience: in our second data center, we constantly have to obtain approval to replace some minor item in a client's area (and invite the client's specialists and security guards to come and stand behind the engineer, as the procedure requires). On the other hand, there is a risk that the customer himself will change something on the engineering equipment. In general, it is better not to overlap.

Inside the fences there are only video cameras, gas nozzles, and gas analyzer tubes. Everything else is outside the machine halls.

There is a strong draft here: cold air comes from two corridors, each of which has 8 fan coils. There are no solid barriers in the raised floor or the false ceiling. Cold air is blown in through floor grilles from below, and hot air is drawn off at the top. So the cold air rises through the rack, heats up there, goes up, and leaves to be cooled.

Cameras watch the racks. When a customer moves hardware or racks, the cameras track all of it. Cameras in the corridors are installed as each corridor is built out, which is convenient for non-standard equipment and for arranging partitions between customers.

In our first data center, we first installed the cameras and infrastructure such as cabling to the racks, and then had to redo everything, because no two customers have the same hardware. Here, cables are not laid until the customer arrives, so that the customer gets exactly what he needs. Shared cross-connects are not very desirable; they make many customers uneasy.

There are gas nozzles in the machine room as well. The system is designed to distribute the gas fully within 10 seconds of being triggered (including under the raised floor and above the false ceiling).

An early fire detection system is used. An ordinary sensor reacts to 60 degrees or to smoke, which means something is already burning. We have much more sensitive gas analyzer tubes on the ceiling.

Procedure in case of fire: disconnect the module, try to extinguish by hand, evacuate people, then start the gas manually with the button on the outside of the machine room door. Equipment and electrical systems keep working throughout; there is simply no oxygen left in the hall. Extinguishing and smoke removal take two hours, during which no one may enter the hall without a suit and a cylinder. Recharging the fire suppression system, and every other system that was triggered, costs about 2 million rubles. Press the button and the money is spent.

Here is a rack and the infrastructure nearest to it. Modular solutions are used for everything. The cable system's boxes can be moved. Optical cassettes are used: the connector is like a patch cord's but carries 12 fibers, which makes it quick to install and to make changes during operation.

The rack is always grounded. For low-current equipment, this is critical.

Another room. Here there is water under the floor, in ring mains for chilled-water supply and heat removal:

There are many cross-connections on these rings: everything is redundant, even the gate valves, so that we do not discover ten years from now, in the middle of an accident, that a valve will not close. Sensors are installed everywhere. Under the room there is a small sloped basin to collect water in case of leaks, with a pump, so that the electrical equipment stays safe.

Fire alarm sensors, VESDA. This is the heart of an early fire detection system:

A conventional grounding and potential equalization system is used. It is connected to the raised floor, and the raised floor tiles themselves are conductive. Every object in the machine room is grounded. You can walk around the hall barefoot and touch anything without getting a shock.

And this is a switchboard and an inverter.

The room has no air conditioning, because the UPS efficiency is very high and it gives off very little heat; what heat there is gets carried away by the regular supply ventilation. The air is prepared in the ventilation chamber, with filters, humidifiers, and dehumidifiers.

The batteries are in a separate room because they need their own temperature. A little colder, and capacity drops. Heat them by a couple of degrees too many, and the service life falls from ten years to two. Once the machine rooms are full, there will be about a hundred tons of batteries. The batteries have pressure relief valves through which hydrogen is released, which creates a risk of a gas explosion in the room, so the room has a separate exhaust system.

The red pipes carry the air outside.

The battery terminals are not insulated. One such string can deliver a 400-volt shock. And unlike AC, the effect is not confined to the skin; it reaches the internal organs far more, which is very dangerous. In general, the higher the frequency, the lower the danger from the current.

This is a ramp for a crane: equipment delivery routes need a backup too. We have already used the crane ramp when bringing in the engineering equipment.

By the way, guess why you need grilles in the floor right at the exit from the ramp?

And in this hall, a tough cooling test is under way.

In the hall there are heaters of 100 kW each, which heat the air for 72 hours. During the test it is important to verify that the temperature does not rise under prolonged heating: a short test cannot tell whether a reading is a measurement error or the walls accumulating heat. The test runs this long for a simple reason: nobody in a data center measures the capacity of walls, ceilings, and equipment as heat accumulators. The air warms up in 5-10 minutes, and after that all the heat goes into the walls and so on. There was a case where the premises of another data center retained heat for 4 hours (and it was impossible to turn on equipment that shuts down automatically at around 35 degrees). The test is considered passed only if the temperature does not change by as much as a degree.
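The pass criterion can be illustrated with a small check over logged temperatures. This is a minimal sketch under our own assumptions: the function name `is_steady`, the idea of a fixed window of recent readings, and the sample values are hypothetical; only the one-degree tolerance comes from the text.

```python
from typing import Sequence


def is_steady(readings_c: Sequence[float], window: int,
              tolerance_c: float = 1.0) -> bool:
    """Return True if the last `window` readings stay within `tolerance_c`.

    `readings_c` is a time-ordered list of hall temperatures in degrees
    Celsius. The window length is an assumption; the article only states
    that the temperature must not change by a degree.
    """
    if window <= 0 or len(readings_c) < window:
        # Not enough data to judge: treat as "not yet steady".
        return False
    tail = readings_c[-window:]
    return max(tail) - min(tail) < tolerance_c


if __name__ == "__main__":
    # Hypothetical log: fast initial warm-up, then a plateau.
    log = [22.0, 24.5, 25.1, 25.3, 25.4]
    print(is_steady(log, window=3))
```

A long window matters for the reason given above: the air settles within minutes, while walls and ceilings keep absorbing heat for hours, so a short plateau proves little.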

In areas where people are present, water-based fire suppression is used. Volumes with different types of suppression, gas and sprinkler, are isolated from each other. A comfortable temperature is maintained in the staff area.

This is the pump room. There are two cooling circuits here.

Considering how much power the cooling system draws, keeping it running during the switch to diesel would require a huge room full of UPS units. Naturally, there is a more elegant solution: it is enough to keep a pool of chilled water at 13 degrees, which provides the same thing as a UPS (after all, the end product of the electricity is cold water), but without losses, without delays, and without complicated maintenance. The pool holds 90 tons of pre-chilled water, enough for at least 15 minutes of normal operation, the same as the electricity from the UPS. After that, the diesel generators take over. Heat is rejected to the outside through the second circuit, with chillers and cooling towers. The chillers, by the way, circulate not water but an aqueous glycol solution, something like vodka, so that it does not freeze in winter. The circuits meet in heat exchangers.
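The size of such a cold-water buffer can be estimated with the usual heat-capacity formula Q = m·c·ΔT. The sketch below is only a back-of-the-envelope illustration: the 90 tons and 15 minutes come from the text, while the allowed temperature rise of 5 K and the function names are our assumptions, not figures from the facility.

```python
WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K), approximate value for liquid water


def buffer_energy_j(mass_kg: float, delta_t_k: float) -> float:
    """Heat the water can absorb while warming by `delta_t_k` kelvin."""
    return mass_kg * WATER_SPECIFIC_HEAT * delta_t_k


def sustainable_load_w(mass_kg: float, delta_t_k: float,
                       duration_s: float) -> float:
    """Average heat load the buffer can absorb for `duration_s` seconds."""
    return buffer_energy_j(mass_kg, delta_t_k) / duration_s


def ride_through_s(mass_kg: float, delta_t_k: float, load_w: float) -> float:
    """How long the buffer lasts under a constant heat load `load_w`."""
    return buffer_energy_j(mass_kg, delta_t_k) / load_w


if __name__ == "__main__":
    mass_kg = 90_000.0       # 90 tons of water, from the article
    duration_s = 15 * 60.0   # 15 minutes, from the article
    delta_t_k = 5.0          # ASSUMED allowable temperature rise
    load_mw = sustainable_load_w(mass_kg, delta_t_k, duration_s) / 1e6
    print(f"Sustainable heat load: {load_mw:.2f} MW")
```

Under the assumed 5 K rise, the pool would absorb roughly 2.1 MW of heat for 15 minutes; the result scales linearly with the permitted rise, so a different assumption changes the figure proportionally.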

The pipes should ideally last 10 years without replacement. To keep deposits from building up inside them, the water has to be treated.

The pool is visible in the background (behind the lamps): that is its upper edge.

The roof: here are the chillers and dry cooling towers.

In summer, freon chillers are used; the rest of the time the outside air is enough for free cooling. The glycol solution is pumped through radiators cooled by outside air. While a radiator sits idle, the solution in it can get very cold, so switching it on could freeze something downstream. To prevent this, bypasses are used: they keep the supercooled solution out of the system until it warms up.

Here is a ramp at the top:

A CHP plant. By the way, some ill-wishers labeled it on Wikimapia as a “Nuclear power plant”, which is why the foreign auditors nearly derailed our TIER III certification with the question: “Russians, have you lost your minds, building next to a nuclear facility?” We spent a long time explaining that it is an ordinary CHP plant.

Here air is prepared for supply to the data center premises. This is the ventilation chamber:

On the first floor there are transformers:

And here is a co-working room for customer delegations.

First, of course, customers need a place to configure servers after installation. Second, some people at certain moments need to stay within walking distance of the hardware. Third, if you come to the data center in light clothing, you get cold quickly in the machine room, so you need a place to drink hot tea. A shift of admins can work here when a client has a hundred racks.

This is the dining room. It is cozy and all equipment is also duplicated.

That is what a data center for increased responsibility looks like.

Source: https://habr.com/ru/post/147474/
