About superscalar, parallelism and crisis of the genre

Probably, it will not be news to any of the habraudees that the fundamental ideas and principles embodied in the current digital technology and its components come from the past by computer standards, namely from the middle of the previous century. It was then that the foundations were invented that for 50 years have been successfully developing, improving and selling the largest (and not so big) IT companies - in their structure, of course, Intel. However, with all the evidence, this situation is definitely a pessimistic attitude: have all the great ideas ended, and our generation is doomed to petty picking in the Great Heritage of the Past?

Consider one specific and unsurprising example for this blog - look at the thoughts of those who are thinking about improving processor architectures.

First we give the necessary explanation - the definition of superscalarity. The simplest scalar computational action involves two operands; in a vector operation, two sets of numbers are performed. There is also an intermediate (or mixed) version: at the same time, various operations are performed on different pairs of scalars due to the presence of an excess number of processing cells - this approach is called superscalar.

Schematic diagram of superscalar command execution

We will not go into the question of who first created the superscalar processor - whether it was American or Soviet scientists, and in what year it happened. More importantly, the superscalar at the moment drives, and he hasn’t yet seen a real opponent. However, the rectilinear development of the superscalar principle has now rested on the ideological limit — with the current approach, we will not squeeze more out of it. The superscalar approach, in principle, is equally applicable to RISC and CISC architecture. RISC, by virtue of a simpler system of commands, still has the potential for overclocking in the framework of the classical development methodology - this is what we are seeing now using the example of ARM, the dynamics of growth in computing power which is impressive. However, there and there the “crisis of the genre” is inevitable, because it is determined by the very essence of superscalarity.

')

What is the problem? In the complexity of the task that the processor assumes. The superscalar parallelizes the sequential code. But this parallelization process is too laborious even for the current processing power, and it is this that limits the performance of the machine. It is impossible to make this conversion faster than a certain number of teams per clock. More can be done, but at the same time the clock purity drops - this approach is obviously meaningless. A reasonable limit on the number of teams is currently studied from all sides and is not subject to revision.

Superscalar can be called a brilliant architecture, but it is an extremely good implementation of the program, which is written consistently. The trouble is not in the superscalar itself, but in the presentation of programs. Programs are presented sequentially, and they need to be converted into parallel execution at runtime. The main problem of the superscalar is in the unsuitability of the input code to its needs. There is a parallel algorithm of the kernel and parallel organized hardware. However, between them, in the middle, there is a certain bureaucratic organization - a consistent system of commands. The compiler converts a program to a sequential instruction set; from the sequence in which he does it, will depend on the overall speed of the process. But the compiler does not know exactly how the machine works. Therefore, generally speaking, the work of the compiler today is shamanism. He simply looks: “if you rearrange like this, then it will be faster”. Probably.

Here is an instructive example. Software preloading ( prefetch ) is a technology that allows you to pre-read data into the processor’s cache. It was invented about 10 years ago and was considered a very beneficial effect on system performance. And then it turned out that after disabling prefetch, the computer starts to work 1.5 times faster. The fact is that prefetch was debugged on one CPU model, and used on others (where some automatic prefetch is executed by the processor), and it is easier to simply disable it than to adapt to the current diversity of the processor fleet. And such cases now occur all the time.

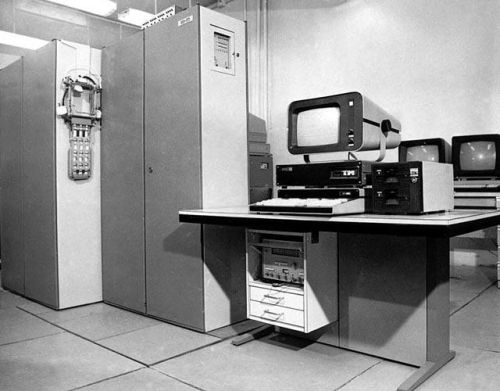

"Elbrus-1KB" - Soviet superscalar computer

You can imagine how difficult it is for a compiler to make a program for a superscalar. But the hardware is even harder. See what the superscalar does: it begins to turn the parallel representation of execution commands into parallel. In a sequential view, all data dependencies are removed. However, it is still necessary to address the register in the correct sequence: do not interchange the calls to the same register. And in order to rearrange the team superscalar, he needs to know all the dependencies. Therefore, it converts the entire computational flow back. Horror - you can not imagine worse. And attempts to optimize it are more like patching holes than fundamental changes.

Even 30 years ago, Soviet developers of computer technology, designing Elbrus systems , understood that superscalar is a good architecture, but complex, unreasonably complex. And besides, having speed limits. A fundamentally new approach was needed, and, most importantly, it was necessary to remove the stumbling block - a consistent presentation of the team. It is necessary that the car received everything in parallel form - and it will be a very simple solution. That is perfect. And the problem of compatibility of the old code and the new architecture can be solved with the help of binary compilation .

Another possibility of this approach is the ability to use more than one core. This is not a scheduling calculation, but simply the sharing of resources. Resources are divided statically into “computational portions”; a superscalar cannot do this, since in order to divide into portions well, you need to look ahead (this can only be done by the program). In fact, instead of a single thread, explicit parallelism takes place. This can save a lot of restrictions.

Variants of hardware parallelism in processors have been repeatedly tested by various research groups, of course, such developments are underway at Intel; One of their results was an Itanium processor using the VLIW architecture (very long instruction word - a very long command word). VLIW is a chemically pure implementation of the idea expressed above, when the entire burden of creating parallelism falls on the shoulders of the compiler, and the stream of commands that is already carefully and correctly parallelized flows into the processor.

Intel Itanium 2 processor

Another promising direction of movement toward a parallel future is processors with a large number of micronuclei, where multithreading is achieved in the most hardware way, with each core also supporting the superscalarity mechanism. In Intel, this kind of development is conducted under the flag of MIC ( Many Integrated Core Architecture ). The project went through several stages of development, with the transition from one to the other, priorities and product positioning changed somewhat - there will soon be a separate story about all this.

Let's sum up the intermediate result. Are fundamental developments currently underway that can drastically change our life / perception of the processor in the future? Yes, undoubtedly, much of what we talked about today will be included in textbooks on the history of digital technology in 50 years from now. Well, we are in the blog Intel (where else!) We undertake, without waiting for this period, to inform further about the news from the processor fronts.

Source: https://habr.com/ru/post/147108/

All Articles