History of a single file manager

I don't know about you, but I started learning web development, and PHP in particular, by writing free scripts. I wrote two CMSs of my own, a gallery, a forum, a guestbook... My first project was a file manager, and I would like to tell you what stages of development it went through and what it eventually became. For example, I taught it to open folders with 500k files without exceeding a 32 MB memory_limit, with a page generation time of a few seconds.


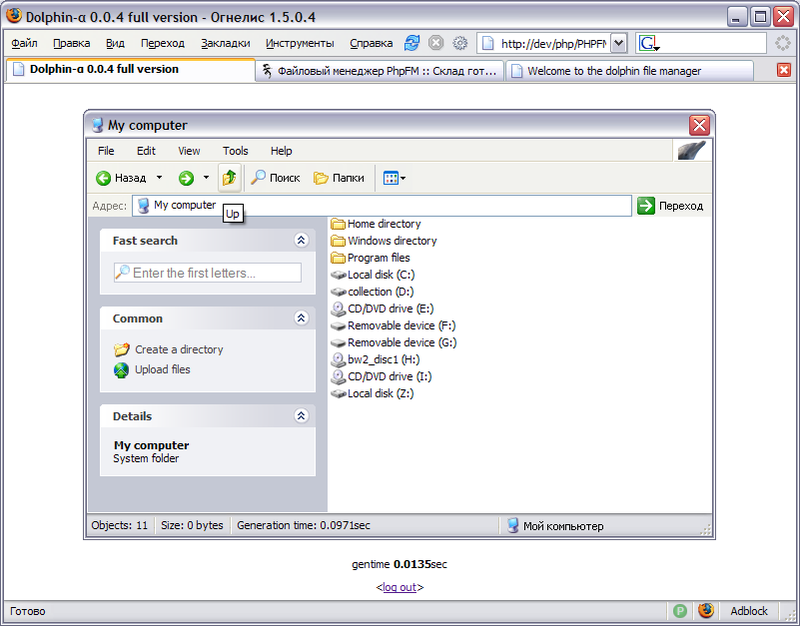

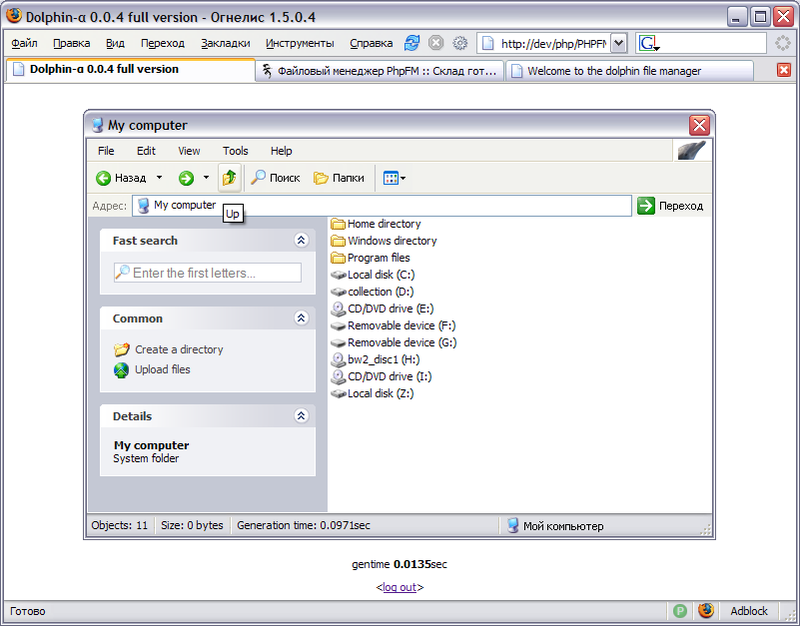


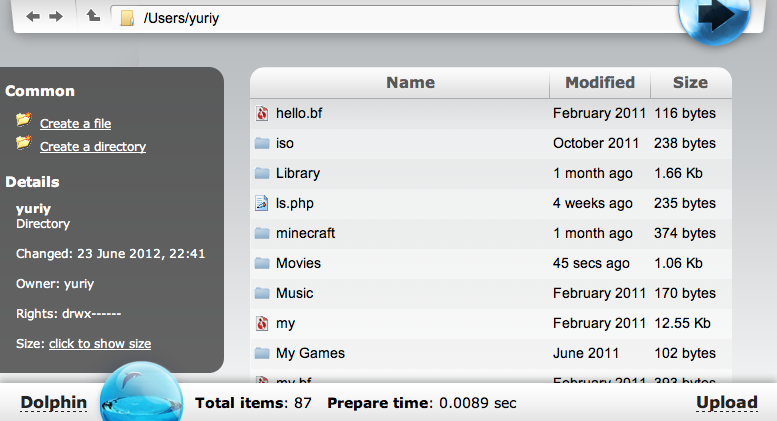

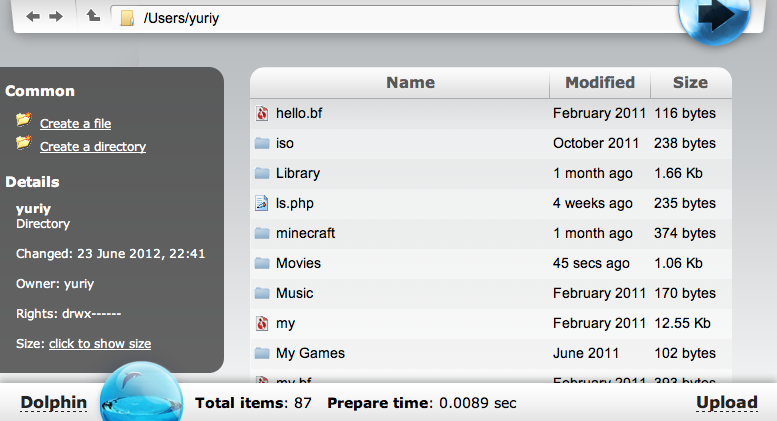


I have prepared a small demo of it and also published the file manager's source code on GitHub. The code is not of very high quality, since most of it was written in 2007, that is, five years ago :).

2002. How it all began: PHPFM 1.0

The very first project I decided to write was the file manager. The reason was simple: at the time I did not know how to work with MySQL, but I had more or less figured out how to work with files :). I called it PHPFM and even published it on cgi.myweb.ru under the name "aa. PHPFM"; I added the two letters "a" so that it would appear at the very top of the list :). Traces of it can still be found online, although nothing can be downloaded: the "free software" sites have apparently lost the source archive at some point.

Everything was implemented very simply; the code for listing a folder looked roughly like this:

Govnokod (crappy code)!

```php
<?php
// PHP mixed with HTML, no htmlspecialchars, etc.
$dh = opendir($dir);
while ($f = readdir($dh)) {
    if ($f == '.' || $f == '..') continue;
    $fullpath = $dir . '/' . $f;
    $is_dir = is_dir($fullpath);
    ?><tr><td><input type="checkbox" name="files[]" value="<?=$f?>" /></td><td><img src="..." /></td><td><a href="..."><?=$f?></a></td></tr><?php
}
```

This was a classic PHP script: PHP and HTML are mixed, nothing is escaped, and it contains a non-obvious PHP dynamic typing bug (the directory should actually be read with `while (false !== ($f = readdir($dh)))`, otherwise the loop stops at a file named "0"). Nevertheless, it worked reasonably well and produced a response for a folder of 1,000 files in about 0.5 seconds, although the browser took a long time to render it.
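The readdir() pitfall mentioned above is easy to reproduce. Below is a minimal sketch that assumes a writable temporary directory. `list_loose()` and `list_strict()` are hypothetical names used only for this illustration. The first function uses the loose loop from the old code, and the second uses the strict comparison.

```php
<?php
// Hypothetical demo: a file named "0" ends a loose readdir() loop early,
// because the string "0" converts to boolean false.
function list_loose(string $dir): array
{
    $names = [];
    $dh = opendir($dir);
    while ($f = readdir($dh)) {              // buggy: stops at "0"
        $names[] = $f;
    }
    closedir($dh);
    return $names;
}

function list_strict(string $dir): array
{
    $names = [];
    $dh = opendir($dir);
    while (false !== ($f = readdir($dh))) { // only a real false ends the loop
        $names[] = $f;
    }
    closedir($dh);
    return $names;
}

// Throwaway directory with a file literally named "0".
$tmp = sys_get_temp_dir() . '/readdir_demo_' . getmypid();
@mkdir($tmp);
foreach (['0', 'a.txt', 'b.txt'] as $name) {
    touch("$tmp/$name");
}

$strict = list_strict($tmp);
$loose = list_loose($tmp);
printf("strict: %d entries, loose: %d entries\n", count($strict), count($loose));

// Clean up.
foreach ($strict as $name) {
    if ($name !== '.' && $name !== '..') {
        unlink("$tmp/$name");
    }
}
rmdir($tmp);
```

The order in which readdir() returns entries depends on the file system. The loose loop may therefore lose only "0", or "0" plus every entry after it. The strict loop always returns all of them.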


This file manager had many drawbacks. It was Web 1.0, it did not always work correctly, and it could not edit anything if the user the web server ran as lacked write access to the files. That situation is typical of shared hosting: files are accessed over FTP as a different user (for example, yuriy) rather than as the web server user (for example, www-data).

2003. Version two, improved

The main reason for rewriting the first version was my desire to make it more "Web 2.0", although the term did not exist yet. I wanted users to be able to select files by clicking on them, as in regular Windows Explorer, and I wanted to support a context menu. This version has survived in a few places (it can be downloaded via a direct link, no registration required). By the way, it still works (at least in PHP 5.3 :), but the layout and JS were tailored to IE 6, so not all of the functionality is available in modern browsers. It even has such marvels as a context menu that opens on right click. It also shows information about the selected file in the Details panel, implemented with an iframe because the world did not yet know about XMLHttpRequest.

Here is the function that deletes a directory. It still exists in my file manager in a similar form, with almost the same bugs :). I have not fixed them in the current version because I am thinking about starting from scratch once again. This time I want to finally write a decent version that works really well, has no obvious security holes and is well structured.

```php
function removedir($dir) {
    $dh = opendir($dir);
    while ($file = readdir($dh)) {
        if ($file != "." && $file != "..") {
            $fullpath = $dir . "/" . $file;
            if (!is_dir($fullpath)) {
                unlink($fullpath);
            } else {
                removedir($fullpath);
            }
        }
    }
    closedir($dh);
    if (rmdir($dir)) {
        return true;
    } else {
        return false;
    }
}
```

At least three things are wrong here:

1) the results of opendir(), unlink() and the recursive calls are not checked

2) there is no check for symbolic links: if the directory contains a symlink to "/", the function will start recursively deleting the root file system, as far as its permissions allow :)

3) the directory reading loop is wrong: it stops at a file named "0"
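For comparison, here is a minimal sketch of how the three problems listed above could be addressed. It is not the code from Dolphin.php, and `remove_dir_safe()` is a hypothetical name. It also assumes a POSIX system. On Windows, PHP's documentation notes that a symlink to a directory must be removed with rmdir() rather than unlink().

```php
<?php
// Hypothetical safer variant of removedir(), assuming a POSIX system.
function remove_dir_safe(string $dir): bool
{
    $dh = opendir($dir);
    if ($dh === false) {
        return false;                          // fix 1: check results
    }
    $ok = true;
    while (false !== ($file = readdir($dh))) { // fix 3: a file named "0" no longer stops the loop
        if ($file === '.' || $file === '..') {
            continue;
        }
        $path = $dir . '/' . $file;
        if (is_link($path) || !is_dir($path)) {
            // fix 2: a symlink (even one to "/") is removed as a link, never descended into
            $ok = unlink($path) && $ok;
        } else {
            $ok = remove_dir_safe($path) && $ok;
        }
    }
    closedir($dh);
    return $ok && rmdir($dir);                 // fix 1: report failure instead of ignoring it
}

// Usage: "outside" must survive even though "tree" contains a symlink to it.
$base = sys_get_temp_dir() . '/rmdir_demo_' . getmypid();
$tree = "$base/tree";
$outside = "$base/outside";
mkdir("$tree/sub", 0777, true);
mkdir($outside, 0777, true);
touch("$tree/0");
touch("$tree/sub/file.txt");
touch("$outside/keep.txt");
symlink($outside, "$tree/link");

$removed = remove_dir_safe($tree);
clearstatcache();
$tree_gone = !file_exists($tree);
$outside_intact = file_exists("$outside/keep.txt");

// Clean up the rest of the demo.
unlink("$outside/keep.txt");
rmdir($outside);
rmdir($base);
```

The key design choice is checking is_link() before is_dir(). is_dir() follows symlinks, so testing it first is exactly what lets the original function wander into "/".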

2004-2005. Attempts to create a third version

I liked the "Web 2.0" idea so much that I decided to go further. I would build everything with frames, like the real Windows Explorer, and finally add an "icons" view in addition to the list. I copied most of Explorer's behavior, after which my JavaScript code turned into such a mess that I was completely unable to maintain it :).

Rather than retell it, here is what I wrote on the forum at the time:


Gentlemen, I have decided (for I don't even know which time...) to once again take all the wishes into account... and make a new PhpFM 3.0 with frames (in the image and likeness of Explorer). In addition, the view will support icons as well as a list. The version under development uses very heavy but cross-browser JavaScript. That is, it works in Opera (in the JavaScript settings you must allow scripts to intercept right mouse clicks), in Mozilla and in IE. Here is what has been done so far:

1) a frame-based structure with a fluid ("rubber") layout!

2) in the top frame, the address bar works (the "Go" button is decorative!), and so does the "Up" button

3) the left frame looks like Explorer with the "Folders" pane turned on. It does not use JSHTTPRequest yet (I plan to switch to it, since it is a very handy thing), but all folders are loaded dynamically using an invisible IFRAME. Expanding and collapsing folders works; there is NO context menu!

4) the main frame shows icons (in principle there is also a list view, but it is nothing special). They are drawn by JavaScript, and when the frame is resized its contents are redrawn without reloading the page. Selecting files with the mouse works (multiple selection is not debugged yet). When you hover over a file, a tooltip with information about it pops up after a moment (previously this was done for folders too). Names that are too long are truncated; when you click a file or folder, its full name is restored (although without line wrapping so far). Double-clicking a folder opens it. If a file or folder is clicked twice with a noticeable pause between clicks, a rename dialog pops up (I did not manage to implement proper in-place editing with `<input type=text ...>`). The context menu works for files and folders. A lot is not implemented yet, so please rate at least the idea.

Demo address: <non-working address, unfortunately>. To get the password, send me a message and I will send it to you (a precautionary measure).


2006. Dolphin (Dolphin.php)

After some time I realized that I had gained a fair amount of experience (and had also started to understand JavaScript a bit). I could now manage to build what I had wanted: a complete copy of Explorer, only in PHP :). Here is one of the screenshots of that version:

The menu items were decorative, but the "Back", "Forward" and other buttons were animated to resemble Explorer as closely as made sense on the web and as far as my skills allowed. In fact, even the abandoned PHPFM 3.0 worked so much like Explorer that someone "finished" it and deployed it as a file-sharing service at some institute (they added the missing functionality, just enough to make it work). Earlier versions of PHPFM were also deployed by someone on a local network as file storage for an accounting department. Everything looked very much like Explorer, so people found it very easy to navigate.

2007. Changing the design out of fear of Microsoft's copyright claims :)

I actively developed "Dolphin" from 2006 to 2007. At some point it dawned on me that copying someone else's design was not good, and that the copyright holders could theoretically come after me if I kept borrowing interface elements from Explorer :). So I decided to change the design. As a result, the file manager is still unfinished, since I never caught all the bugs introduced by the redesign. Incidentally, the new design was not made by me.

Now the file manager looks like this:

Performance optimization

Frankly, I am one of those people for whom performance matters. I love it when everything is fast, and I was always trying to come up with new ways to speed up my file manager. Most of all I wanted to speed up opening the file list, the most frequently used function of a file manager. Here are the ideas I came up with:

1. Minimizing the number of operations performed in the directory reading loop


Code:


```php
<?php
// setreadable() and d_ftplist() are Dolphin.php helpers (not shown here)
function d_filelist_fast($dir)
{
    setreadable($dir, true);
    if (!(@$dh = opendir($dir)) && !(@$ftp_list = d_ftplist($dir))) return false;

    if ($dh) {
        $dirs = $files = $fsizes = array();
        /* chdir($dir); */
        while (false !== (@$file = readdir($dh))) {
            if ($file == '.' || $file == '..') continue;
            // is_dir() and filesize() for every entry: the stat() cost the later methods try to avoid
            if (is_dir($dir.'/'.$file)) $dirs[] = $dir.'/'.$file;
            else $files[] = $dir.'/'.$file;
            $fsizes[$dir.'/'.$file] = filesize($dir.'/'.$file);
        }
        closedir($dh);
    } else return $ftp_list;

    return array('dirs' => $dirs, 'files' => $files, 'fsizes' => $fsizes);
}
```

Performance on a folder of 50,000 files:

488 ms to generate

33.51 MB of memory

246 KB gzipped (+ 500 ms to download)

305 ms in the browser

= 1300 ms

+ no additional data loading (at all)

- the slowest option

- uses a lot of memory
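To collect comparable numbers on your own machine, the minimal sketch below times one call and reads PHP's peak memory usage. `measure()` and `list_with_stat()` are hypothetical names. The latter only approximates what d_filelist_fast() does: one is_dir() and one filesize() per entry, without the FTP fallback.

```php
<?php
// Hypothetical helper: run $fn once and report wall time and peak memory.
function measure(callable $fn): array
{
    if (function_exists('memory_reset_peak_usage')) {
        memory_reset_peak_usage();             // PHP 8.2+; older versions report the script-wide peak
    }
    $t0 = microtime(true);
    $result = $fn();
    return [
        'ms'      => (microtime(true) - $t0) * 1000,
        'peak_mb' => memory_get_peak_usage() / 1048576,
        'result'  => $result,
    ];
}

// Approximation of the first approach: is_dir() and filesize() for every entry.
function list_with_stat(string $dir): array
{
    $dirs = $files = $sizes = [];
    $dh = opendir($dir);
    while (false !== ($f = readdir($dh))) {
        if ($f === '.' || $f === '..') continue;
        $path = "$dir/$f";
        if (is_dir($path)) $dirs[] = $path; else $files[] = $path;
        $sizes[$path] = filesize($path);
    }
    closedir($dh);
    return ['dirs' => $dirs, 'files' => $files, 'fsizes' => $sizes];
}

// Usage on a throwaway folder with 100 empty files.
$dir = sys_get_temp_dir() . '/measure_demo_' . getmypid();
@mkdir($dir);
for ($i = 0; $i < 100; $i++) {
    touch("$dir/file$i");
}
$m = measure(fn () => list_with_stat($dir));
printf("%.1f ms, peak %.2f MB, %d files\n", $m['ms'], $m['peak_mb'], count($m['result']['files']));

foreach ($m['result']['files'] as $path) {
    unlink($path);
}
rmdir($dir);
```

On PHP versions before 8.2, memory_get_peak_usage() reports the peak for the whole script. When comparing variants there, it is safer to measure one variant per process.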

2. Profiling the opening of the Windows i386 folder made it clear that calling stat() on Windows is a very expensive operation. If you split the result into pages and call stat() only for the files on the current page, you can save a lot. I also tried to save on comparisons by generating code on the fly and running it with eval. The code was therefore very complex, but quite fast.


Code:


```php
/*
    extremely complicated :), extremely fast (on huge directories) and extremely customizable sorted filelist :)

    $dir    -- directory from which to get filelist
    $params -- array with optional parameters (see beginning of function for details)

    RETURN:

    array(
        'pages' => array(
            $pagemin => array(
                'files' => array('field1' => $list1, ..., 'fieldN' => $listN),
                'dirs'  => ... (array of the same format)
            ),
            ... (the intermediate pages)
            $pagemax => array(
                'files' => ...,
                'dirs'  => ...
            )
        ),
        'pages_num' => ...,
        'items_num' => ...
    )

    where
        field1, ..., fieldN   -- requested fields (default 'name' and 'size')
        $list1, ..., $listN   -- a list of values (array('value1', 'value2', ..., 'valueN')),
        $pagemin and $pagemax -- the page numbers of the specified range (default 1)
        pages_num             -- filtered number of pages (returns 1 if you ask not to split to pages)
        items_num             -- filtered number of files + total number of folders

    EXAMPLE:

    $res = d_filelist_extreme('/home/yourock');
    print_r($res);

    this will result in:

    Array (
        [pages] => Array (
            [1] => Array (
                [files] => Array (
                    [name] => Array ( [0] => file1 [1] => file3 [2] => file20 )
                    [size] => Array ( [0] => 1000 [1] => 2000 [2] => 300000 )
                )
                [dirs] => Array (
                    [name] => Array ( [0] => dir1 )
                    [size] => Array ( [0] => 512 )
                )
            )
        )
        [pages_num] => 1
        [items_num] => 4
    )
*/

/* TODO: move it to config.php in some time */
define('LIGHT_PERPAGE', 30);

function d_filelist_extreme($dir, $params=array())
{
    /* set defaults: $key = ''; is equal to default value for $params['key'] */
    $fields = array('name', 'size'); // name, chmod or any field of stat(): (size, mtime, uid, gid, ...)
    $filt = '';                 // filename filter
    $sort = 'name';             // what field to sort (see $fields)
    $order = 'asc';             // sorting order: "asc" (ascending) or "desc" (descending)
    $fast = true;               // use some optimizations (eg can allow to get some range from a filelist of 5000 files in 0.1 sec)
    $maxit = defined('JS_MAX_ITEMS') ? JS_MAX_ITEMS : 200; // how many items is enough to enable optimization?
    $split = true;              // split result to pages and return only results for pages from $pagemin to $pagemax (including both)
    $pagemin = 1; $pagemax = 1; // see description for $split
    $perpage = LIGHT_PERPAGE;   // how many files per page
    $ftp = true;                // try to get filelist through FTP also (can affect performance)

    /* read parameters, overwriting default values */
    extract($params, EXTR_OVERWRITE);

    if ($sort != 'name') {
        /*return d_error('Not supported yet');*/
        $fast = false;
        if (array_search('mode', $fields) === false) $fields[] = 'mode';
    }
    if ($pagemax < $pagemin) $pagemax = $pagemin;
    if (array_search($sort, $fields) === false) $fields[] = $sort;
    if ($order != 'asc') $order = 'desc';
    $filt = strtolower($filt);

    /* check required fields */
    $st = stat(__FILE__);
    foreach ($fields as $k => $v) {
        if ($v == 'name' || $v == 'chmod') continue;
        if (!isset($st[$v])) {
            $keys = array_filter(array_keys($st), 'is_string');
            return d_error('Unknown field: '.$v.'. Use the following: name, chmod, '.implode(', ', $keys));
        }
    }

    setreadable($dir, true);

    if (!@$dh = opendir($dir)) {
        if (!$ftp) return d_error('Directory not readable');
        if (!@$ftp_list = d_ftplist($dir)) return d_error('Directory not readable');
    }

    if ($dh) {
        $it = array(); /* items */
        if (!$filt) {
            while (false !== (@$f = readdir($dh))) {
                if ($f == '.' || $f == '..') continue;
                $it[] = $f;
            }
        } else {
            while (false !== (@$f = readdir($dh))) {
                if ($f == '.' || $f == '..') continue;
                if (strpos(strtolower($f), $filt) !== false) $it[] = $f;
            }
        }
        closedir($dh);

        if (!$split) $perpage = sizeof($it);

        $old_dir = getcwd();
        chdir($dir);

        $l = sizeof($it);
        if ($l < $maxit) $fast = false;

        /* $fast means do not sort "folders first" */
        if ($fast) /* $sort = 'name' and $split = true */ {
            if ($order == 'asc') sort($it);
            else rsort($it);
        } else {
            $dirs = $files = array();
            if ($sort == 'name') {
                for ($i = 0; $i < $l; $i++) {
                    if (is_dir($it[$i])) $dirs[] = $it[$i];
                    else $files[] = $it[$i];
                }
                if ($order == 'asc') {
                    sort($dirs);
                    sort($files);
                    $it = array_merge($dirs, $files);
                } else {
                    rsort($files);
                    rsort($dirs);
                    $it = array_merge($files, $dirs);
                }
            }
        }

        /* array_display($it); */

        $res = array('pages_num' => ceil($l / $perpage), 'items_num' => $l);
        $all = $pages = array();

        /* fix invalid page range, if it is required */
        if ($pagemin > $res['pages_num']) {
            /* with max() commented out, the assignment continues on the next line:
               $pagemin = $pagemax = $res['pages_num']; */
            $pagemin = //max(1, $pagemin + ($pagemax - $res['pages_num']) );
            $pagemax = $res['pages_num'];
        }

        $cd = $cf = $ci = ''; /* Code for Directories, Code for Files and Code for Items */

        foreach ($fields as $v) {
            $t = '[\''.$v.'\'][] = ';
            if ($v == 'name') $t .= '$n';
            else if ($v == 'chmod') $t .= 'decoct($s[\'mode\'] & 0777)';
            else $t .= '$s[\''.$v.'\']';

            if ($sort == 'name') {
                $cd .= '$pages[$page][\'dirs\']'.$t.";\n";
                $cf .= '$pages[$page][\'files\']'.$t.";\n";
            } else {
                $ci .= '$all'.$t.";\n";
            }
        }

        /* we cannot optimize sorting, if sorted field is not name:
           we will have to call expensive stat() for every file, not only $perpage files. */
        if ($sort == 'name') {
            eval('
            for($i = 0; $i < '.$l.'; $i++) {
                $page = floor($i / '.$perpage.') + 1;
                if( $page < '.$pagemin.' || $page > '.$pagemax.' ) continue;
                $n = $it[$i];
                $s = stat($n);
                // is a directory?
                if(($s[\'mode\'] & 0x4000) == 0x4000) {
                    '.$cd.'
                }else {
                    '.$cf.'
                }
            }
            ');
        }

        if ($sort != 'name') {
            eval('
            for($i = 0; $i < '.$l.'; $i++) {
                $n = $it[$i];
                $s = stat($n);
                '.$ci.'
            }
            ');

            $code = 'array_multisort($all[\''.$sort.'\'], SORT_NUMERIC, SORT_'.strtoupper($order);
            foreach ($fields as $v) {
                if ($v != $sort) $code .= ', $all[\''.$v.'\']';
            }
            $code .= ');';

            /* code is evalled to prevent games with links to arrays */
            eval($code);

            $pages = array();
            $cf = $cd = '';
            foreach ($fields as $k => $v) {
                $cf .= '$pages[$page][\'files\'][\''.$v.'\'][] = $all[\''.$v.'\'][$i];';
                $cd .= '$pages[$page][\'dirs\'][\''.$v.'\'][] = $all[\''.$v.'\'][$i];';
            }

            eval('
            for($i = 0; $i < $l; $i++) {
                $page = floor($i / '.$perpage.') + 1;
                if( $page < '.$pagemin.' || $page > '.$pagemax.' ) continue;
                if(($all[\'mode\'][$i] & 0x4000) == 0x4000) {
                    '.$cd.'
                }else {
                    '.$cf.'
                }
            }
            ');
        }

        if ($res) $res['pages'] = $pages;
        chdir($old_dir);
        return $res;
    } else {
        extract($ftp_list);
        if ($fields !== array('name', 'size') || $sort != 'name') return d_error('Custom fields and sorting not by name are not currently supported in FTP mode');

        $files = array_map('basename', $files);
        $dirs = array_map('basename', $dirs);
        $fl = sizeof($files);
        $dl = sizeof($dirs);

        if ($filt) {
            $files_c = $dirs_c = array();
            for ($i = 0; $i < $fl; $i++) if (strpos(strtolower($files[$i]), $filt) !== false) $files_c[] = $files[$i];
            for ($i = 0; $i < $dl; $i++) if (strpos(strtolower($dirs[$i]), $filt) !== false) $dirs_c[] = $dirs[$i];
            $dirs = $dirs_c;
            $files = $files_c;
            $fl = sizeof($files);
            $dl = sizeof($dirs);
        }

        if (!$split) $perpage = $fl + $dl;

        $pages = array();
        for ($i = 0, $arr = 'files'; $arr == 'files' || $i < $dl; $i++) {
            if ($arr == 'files' && $i >= $fl) {
                $arr = 'dirs';
                $i = 0;
            }
            $page = floor($i / $perpage) + 1;
            if ($page < $pagemin || $page > $pagemax) continue;
            $pages[$page][$arr]['name'][] = ${$arr}[$i];
            $pages[$page][$arr]['size'][] = @$fsizes[$dir.'/'.${$arr}[$i]];
        }

        return array('items_num' => ($fl + $dl), 'pages_num' => ceil(($fl + $dl) / $perpage), 'pages' => $pages);
    }
}
```

Performance Analysis:

169 ms to generate

13.48 MB of memory

2 KB gzipped

7 ms per request in the browser

= 200 ms

+ quick start

- uses a lot of memory

- loading more data is slow and resource-intensive: the folder is scanned again
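The core of this idea fits in a few lines once the eval-based code generation and the FTP fallback are stripped out. This is a simplified sketch, not the original d_filelist_extreme(). `list_page()` is a hypothetical name, and the sketch sorts by name only.

```php
<?php
// Hypothetical simplified version of the second approach: names first, stat() only for one page.
function list_page(string $dir, int $page, int $perpage = 30): array
{
    $dh = opendir($dir);
    if ($dh === false) {
        return [];
    }
    $names = [];
    while (false !== ($f = readdir($dh))) {
        if ($f !== '.' && $f !== '..') $names[] = $f;
    }
    closedir($dh);
    sort($names, SORT_STRING);

    $items = [];
    foreach (array_slice($names, ($page - 1) * $perpage, $perpage) as $name) {
        $st = stat("$dir/$name");              // the expensive call, limited to one page
        if ($st === false) continue;
        $items[] = [
            'name'   => $name,
            'size'   => $st['size'],
            'is_dir' => ($st['mode'] & 0170000) === 0040000, // S_IFMT mask vs S_IFDIR
        ];
    }
    return ['items' => $items, 'pages_num' => (int) ceil(count($names) / $perpage)];
}

// Usage: 75 files, 30 per page, so page 3 holds files 60..74.
$dir = sys_get_temp_dir() . '/page_demo_' . getmypid();
@mkdir($dir);
for ($i = 0; $i < 75; $i++) {
    touch(sprintf('%s/f%02d', $dir, $i));
}
$page = list_page($dir, 3);
echo $page['items'][0]['name'], "\n";  // f60

for ($i = 0; $i < 75; $i++) {
    unlink(sprintf('%s/f%02d', $dir, $i));
}
rmdir($dir);
```

Sorting still needs every name, but stat() is now called at most $perpage times. The directory test uses the full S_IFMT mask (0170000) rather than checking the single 0x4000 bit. Some other file types, block devices for example, also have that bit set.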

3. The idea for the next optimization came to me while I was writing my own DBMS, which I have already described here on Habr (yes, that crazy guy is me :)). The previous mechanism worked well, except that the list had to be split into pages and the directory had to be traversed again for every page. So I decided to write code that caches the directory listing in a way that allows ranges of files to be selected quickly, for example files 1000 to 1100.


```php
/*
    the cached version of filelist especially made for JS GGGR

    returns:

    array(
        'items' => array(
            start: array(
                'name'   => ...,
                'size'   => ...,
                'is_dir' => true|false,
            ),
            ...
            start+length-1: array(
                'name'   => ...,
                'size'   => ...,
                'is_dir' => true|false,
            ),
        ),
        'cnt' => N
    )
*/
function d_filelist_cached($dir, $start, $length, $filter = '')
{
    //sleep(1);
    $tmpdir = is_callable('get_tmp_dir') ? get_tmp_dir() : '/tmp';
    $cache_prefix = $tmpdir.'/dolphin'.md5(__FILE__);
    $cache_dat = $cache_prefix.'.dat'; // header (dir + filter), then the names back to back
    $cache_idx = $cache_prefix.'.idx'; // one pack('NN', offset, length) record per name

    $new = false;

    if (!file_exists($cache_dat) || filemtime($dir) > filemtime($cache_dat)) {
        $new = true;
        $fp = fopen($cache_dat, 'w+b');
        $ifp = fopen($cache_idx, 'w+b');
    } else {
        $fp = fopen($cache_dat, 'r+b');
        $ifp = fopen($cache_idx, 'r+b');

        list(, $l) = unpack('n', fread($fp, 2));
        $cached_dir = $l > 0 ? fread($fp, $l) : '';    // fread() rejects a length of 0 in PHP 8
        list(, $l) = unpack('n', fread($fp, 2));
        $cached_filter = $l > 0 ? fread($fp, $l) : ''; // the filter is usually empty

        if ($cached_dir != $dir || $cached_filter != $filter) {
            ftruncate($fp, 0);
            ftruncate($ifp, 0);
            fseek($fp, 0, SEEK_SET);
            fseek($ifp, 0, SEEK_SET);
            $new = true;
        }
    }

    $items = array();
    $cnt = 0;
    $old_cwd = getcwd();

    try {
        if (!@chdir($dir)) throw new Exception('Could not chdir to the folder');

        if ($new) {
            fwrite($fp, pack('n', strlen($dir)).$dir);
            fwrite($fp, pack('n', strlen($filter)).$filter);
            $pos = ftell($fp);

            $dh = opendir('.');
            if (!$dh) throw new Exception('Could not open directory for reading');

            $filter = strtolower($filter);

            while (false !== ($f = readdir($dh))) {
                if ($f == '.' || $f == '..') continue;
                if (strlen($filter) && !substr_count(strtolower($f), $filter)) continue;
                fwrite($ifp, pack('NN', $pos, strlen($f)));
                fwrite($fp, $f);
                $pos += strlen($f); // ftell is not as fast as it should be, sadly
            }

            fflush($ifp);
            fflush($fp);
        }

        fseek($ifp, $start * 8 /* length(pack('NN')) */);

        $first = true;
        $curr_idx = $start;

        while ($curr_idx < $start + $length) {
            $rec = fread($ifp, 8);
            if ($rec === false || strlen($rec) < 8) break; // no more index records
            list(, $pos, $l) = unpack('N2', $rec);
            if ($first) {
                fseek($fp, $pos); // names are stored in index order, so one seek is enough
                $first = false;
            }
            $f = fread($fp, $l);
            if (!strlen($f)) break;
            if (strlen($f) != $l) {
                throw new Exception('Consistency error');
            }
            $items[$curr_idx++] = array(
                'name'   => $f,
                'size'   => filesize($f),
                'is_dir' => is_dir($f),
            );
        }

        $cnt = filesize($cache_idx) / 8;
    } catch (Exception $e) {
        fclose($fp);
        fclose($ifp);
        unlink($cache_dat);
        unlink($cache_idx);
        @chdir($old_cwd);
        return is_callable('d_error') ? d_error($e->getMessage()) : false;
    }

    if ($cnt < 500 && $length >= 500) {
        usort($items, 'd_filelist_cached_usort_cmp');
    }

    @chdir($old_cwd);

    return array('items' => $items, 'cnt' => $cnt);
}

function d_filelist_cached_usort_cmp($it1, $it2)
{
    return strcmp($it1['name'], $it2['name']);
}
```

Performance Analysis:

460 ms to generate

1.18 MB of memory

2 KB gzipped

8 ms per request in the browser

= 500 ms

+ loading more data is cheap and fast

+ uses little memory

- slow start

- stores intermediate files of significant size (800 KB for 50,000 files, with the directory itself taking up 1.6 MB)
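The on-disk format behind d_filelist_cached() is simple. Names are written back to back into one file. A second file holds one fixed 8-byte record per name, pack('NN', offset, length), so record i always starts at byte i * 8. Below is a minimal sketch of that layout using in-memory streams; `build_index()` and `read_range()` are hypothetical names.

```php
<?php
// Hypothetical sketch of the third approach's layout: names stored back to back in $data,
// plus a fixed 8-byte index record pack('NN', offset, length) per name.
function build_index(array $names, $data, $index): void
{
    $pos = 0;
    foreach ($names as $name) {
        fwrite($index, pack('NN', $pos, strlen($name)));
        fwrite($data, $name);
        $pos += strlen($name);                 // track the offset instead of calling ftell()
    }
}

// Return names $start .. $start + $length - 1, keyed by position.
function read_range($data, $index, int $start, int $length): array
{
    $out = [];
    fseek($index, $start * 8);                 // record i starts at byte i * 8
    for ($i = $start; $i < $start + $length; $i++) {
        $rec = fread($index, 8);
        if ($rec === false || strlen($rec) < 8) {
            break;                             // ran past the last record
        }
        ['off' => $off, 'len' => $len] = unpack('Noff/Nlen', $rec);
        fseek($data, $off);
        $out[$i] = $len > 0 ? fread($data, $len) : '';
    }
    return $out;
}

// Usage with in-memory streams instead of files in /tmp.
$data = fopen('php://temp', 'w+b');
$index = fopen('php://temp', 'w+b');
$names = [];
for ($i = 0; $i < 2000; $i++) {
    $names[] = "file$i.txt";
}
build_index($names, $data, $index);

fseek($index, 0, SEEK_END);
$records = intdiv(ftell($index), 8);          // 2000
$range = read_range($data, $index, 1000, 3);
print_r($range);
```

Unlike the original, this sketch seeks in the data stream for every record. The original seeks once and then reads sequentially, which works because the names are stored in index order.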

4. If you read the code above, you probably noticed that it was very simple at first, then became very complex (to the point of being unmaintainable), then simpler again... And of course, the simplest idea came to me last: the fastest code is the code that does as little as possible, and this is especially true for PHP. Note that the "/" character can never appear in file or directory names, so the file list can be built as a single string:

```php
function d_filelist_simple($dir)
{
    $dh = opendir($dir);
    if (!$dh) return d_error("Cannot open $dir");

    // use as little memory as possible using strings
    $files = '';

    // assuming that first two entries are always "." and ".." or (in case of root dir) it is only "."
    // we can read first two entries separately and skip check for "." and ".." in main cycle for
    // maximum possible performance
    for ($i = 0; $i < 2; $i++) {
        $f = readdir($dh);
        if ($f === false) break; // no more entries; the main loop below will also get false
        if ($f === "." || $f === "..") continue;
        $files .= "$f/";
    }

    while (false !== ($f = readdir($dh))) $files .= "$f/";

    closedir($dh);

    return array('res' => $files, 'cnt' => substr_count($files, '/'));
}
```

Since string concatenation in PHP is O(1), I think this code runs about as fast as anything can in PHP here. It also uses the minimum possible amount of RAM: it is very hard to use less without compression, because the file names have to be separated somehow.

This code makes it possible to open a folder of 500k files using less than 32 MB of memory, including the json_encode call. The data structure is very simple (just a string), so json_encode runs very quickly, and parsing the result in the browser is cheap too. After receiving the string, the browser calls split('/') and gets the complete list of files in the folder. A second AJAX request can then ask for details only for the visible files (details for the first few hundred files can also be included in the first response). This solves all of the earlier problems: slow generation of the file list, wasteful memory consumption and awkward scrolling through large lists.
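On the receiving side, the string only has to be split. The browser does this with JavaScript's split('/'). The sketch below shows the same logic in PHP, together with a possible handler for the second request that returns details only for the visible rows. `decode_list()` and `details_for()` are hypothetical names.

```php
<?php
// Hypothetical consumer of d_filelist_simple()'s 'res' string (JavaScript would use split('/')).
function decode_list(string $res): array
{
    $names = explode('/', $res);
    array_pop($names);                         // every name ends with "/", so the last piece is empty
    return $names;
}

// Hypothetical handler for the second AJAX request: details only for the visible rows.
function details_for(string $dir, array $names, int $first, int $count): array
{
    $out = [];
    foreach (array_slice($names, $first, $count) as $name) {
        $path = "$dir/$name";
        $out[$name] = ['size' => filesize($path), 'is_dir' => is_dir($path)];
    }
    return $out;
}

// Usage: a folder with one file and one subfolder.
$dir = sys_get_temp_dir() . '/simple_demo_' . getmypid();
@mkdir("$dir/sub", 0777, true);
file_put_contents("$dir/a.txt", 'hello');

$names = decode_list('a.txt/sub/');            // ['a.txt', 'sub']
$visible = details_for($dir, $names, 0, 50);
print_r($visible);

unlink("$dir/a.txt");
rmdir("$dir/sub");
rmdir($dir);
```

Because every name is followed by "/", splitting produces one trailing empty element, which is why decode_list() drops the last item. For an empty folder the string is empty, and the result is an empty list.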

Performance of this scheme (50,000 files):

48 ms to generate

1.92 MB of memory

107 KB gzipped (+ 200 ms to download)

56 ms in the browser

= 300 ms

+ quick start

+ no additional loading of file names

+ low CPU cost

- uses a lot of traffic

- uses a lot of memory in the browser


Links

A video of the latest version of my file manager (the version is not without problems): www.youtube.com/watch?v=XSvY9joxQqI

Sources: github.com/YuriyNasretdinov/Dolphin.php

The forum thread with the surviving old version: forum.dklab.ru/viewtopic.php?t=9504

Source: https://habr.com/ru/post/146408/
