Building high-level features with a large-scale unsupervised learning experiment

The article "Human Face Detection: 19 facts that computer vision researchers should know" cited an experimental fact: the primate brain contains neurons that respond selectively to images of faces (human, monkey, etc.), with an average latency of about 120 ms. From this, in a comment, I drew the amateur conclusion that a visual image is processed by feed-forward propagation of the signal, and that the corresponding neural network has about 12 layers.

Here is a new experimental confirmation of this fact, published by none other than our beloved Andrew Ng.

The authors ran a large-scale experiment to build a human face detector from a huge number of completely unlabeled images. They built a 9-layer neural network structured as a sparse autoencoder with local receptive fields. The network runs on a cluster of 1,000 machines with 16 cores each and has 1 billion connections between neurons. It was trained on 10 million 200x200-pixel frames randomly sampled from YouTube videos. Training with asynchronous stochastic gradient descent took 3 days.

After unsupervised training on unlabeled data, and contrary to intuition, a neuron emerged in the output layer of the network that responds selectively to the presence of a face in the image. Control experiments showed that this classifier is robust not only to translation of the face within the image, but also to scaling and even to 3D rotation out of the image plane! The network also turned out to be able to learn a variety of other high-level concepts, such as human bodies and cats.


The full text of the article is available via the link; here I give a brief summary.

Concept

In neuroscience, it is believed that the brain contains individual neurons that are selective for certain general categories, such as human faces. They are also called "grandmother neurons" (or "grandmother cells").

In artificial neural networks, the standard approach is supervised learning (training with a teacher): for example, to build a face classifier, one takes a set of face images and a set of images without faces, and labels each image "face" or "not a face". The need for large labeled training sets is a major practical problem.

In this paper, the authors tried to answer two questions: can a neural network learn to recognize faces from unlabeled data, and can we confirm experimentally that "grandmother neurons" can emerge through self-learning on unlabeled data? A positive answer would, in principle, support the hypothesis that babies learn on their own to group similar images (for example, faces) without a teacher.

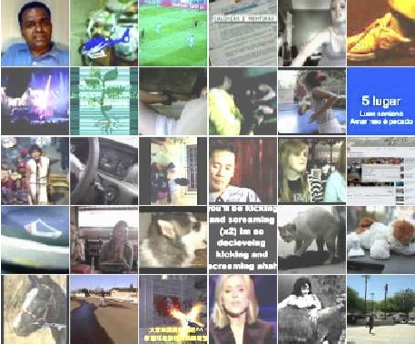

The training set was compiled by random sampling one frame from 10 million YouTube clips. Only one frame was taken from each video to avoid duplicates in the sample. Each frame was scaled to a size of 200x200 pikeles (which distinguishes the described experiment from most recognition works, usually operating with 32x32 frames or the like). Here are typical images from the training sample:
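The sampling step is simple enough to sketch. The version below is a minimal illustration, assuming videos are already decoded into grayscale frames (lists of pixel rows) and using nearest-neighbor resizing; the function names are hypothetical, and the article does not say how the frames were decoded or resized.

```python
import random
from typing import List, Sequence

Frame = List[List[float]]  # a grayscale frame stored as rows of pixel values


def resize_nearest(frame: Frame, size: int = 200) -> Frame:
    """Resize a frame to size x size pixels using nearest-neighbor sampling."""
    h, w = len(frame), len(frame[0])
    return [[frame[r * h // size][c * w // size] for c in range(size)]
            for r in range(size)]


def sample_training_set(videos: Sequence[Sequence[Frame]], size: int = 200,
                        seed: int = 0) -> List[Frame]:
    """Pick exactly one random frame per video, then resize it."""
    rng = random.Random(seed)
    return [resize_nearest(rng.choice(video), size) for video in videos]


# Usage with synthetic data: 4 "videos", each made of 3 constant 240x320 frames.
videos = [[[[float(v)] * 320 for _ in range(240)] for v in range(3)]
          for _ in range(4)]
samples = sample_training_set(videos)
```

Taking a single frame per video is what keeps consecutive, almost identical frames of one clip from dominating the sample.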

Algorithm

The experiment is built on a sparse autoencoder (described on Habr). Earlier experiments with shallow autoencoders produced detectors of low-level features (edge segments, boundaries, etc.) similar to Gabor filters.

The described algorithm uses a multilayer autoencoder with several important distinguishing features:

- local receptive fields;
- pooling;
- local contrast normalization.

Local receptive fields make it possible to scale the network to large images. Pooling and local contrast normalization provide invariance to translation and local deformations.

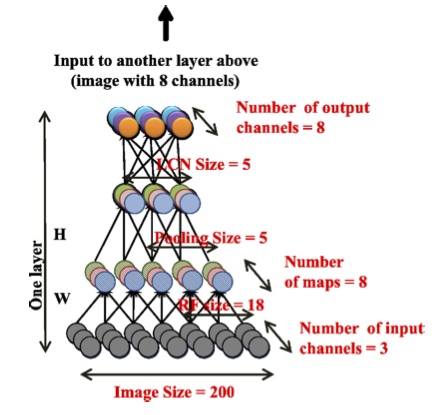

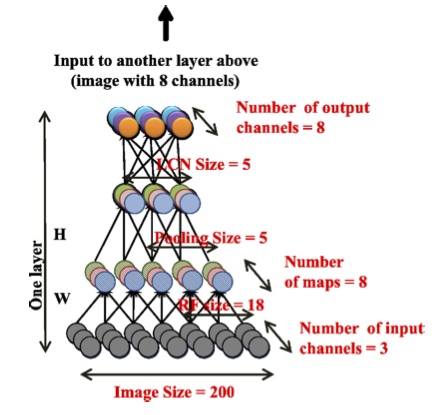

The autoencoder consists of three repeated layers, each built from the same three sublayers: local filtering, local pooling, and local contrast normalization. Three layers of three sublayers each give the nine layers mentioned at the beginning.

The key feature of this structure is local connectivity between neurons. The first sublayer uses 18x18-pixel receptive fields, and the second sublayer pools over overlapping 5x5 neighborhoods. Note that the local receptive fields are not convolutional: the weights of each receptive field are not shared with other positions but are unique to it. This is closer to the biological prototype and also allows the network to learn more invariances.
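To make the difference from convolution concrete, here is a minimal sketch of a locally connected layer (every output position has its own weight patch) followed by pooling over overlapping 5x5 neighborhoods. The single channel, stride 1, and the choice of L2 pooling (square root of the sum of squares) are assumptions for illustration; the function names are hypothetical.

```python
import math
import random
from typing import List, Tuple

Grid = List[List[float]]


def locally_connected(x: Grid, weights: List[List[Grid]]) -> Grid:
    """Untied local filtering with stride 1.

    weights[i][j] is the k x k patch used only at output position (i, j);
    unlike a convolution, no two positions share weights.
    """
    k = len(weights[0][0])
    out_h, out_w = len(x) - k + 1, len(x[0]) - k + 1
    return [[sum(weights[i][j][a][b] * x[i + a][j + b]
                 for a in range(k) for b in range(k))
             for j in range(out_w)]
            for i in range(out_h)]


def l2_pool(x: Grid, p: int) -> Grid:
    """L2 pooling over overlapping p x p neighborhoods (stride 1)."""
    out_h, out_w = len(x) - p + 1, len(x[0]) - p + 1
    return [[math.sqrt(sum(x[i + a][j + b] ** 2
                           for a in range(p) for b in range(p)))
             for j in range(out_w)]
            for i in range(out_h)]


def param_counts(in_size: int, k: int) -> Tuple[int, int]:
    """Weights of one feature map: locally connected vs. convolutional."""
    positions = (in_size - k + 1) ** 2
    return positions * k * k, k * k


# Usage: 18x18 untied fields on a small 24x24 input give a 7x7 map,
# and 5x5 pooling of that map gives 3x3.
rng = random.Random(0)
image = [[rng.random() for _ in range(24)] for _ in range(24)]
k, out = 18, 24 - 18 + 1
weights = [[[[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(k)]
            for _ in range(out)] for _ in range(out)]
features = locally_connected(image, weights)
pooled = l2_pool(features, 5)
```

Under these assumptions, `param_counts(200, 18)` gives 10,850,436 weights for one untied map on a 200x200 image versus 324 for a convolution, which illustrates why the network needs so many parameters and a cluster to train it.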

Local contrast normalization also mimics processes in the biological visual pathway. First, the Gaussian-weighted average of the activations of neighboring neurons is subtracted from each neuron's activation. Then each result is divided by the Gaussian-weighted root-mean-square of the neighboring activations.
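A minimal sketch of the two normalization steps, assuming a single 2D activation map, a Gaussian window of radius `radius` and standard deviation `sigma`, zero padding at the borders, and a small floor `eps` on the divisor to avoid division by zero. These parameter values are illustrative, not the ones used in the paper.

```python
import math
import random
from typing import List

Grid = List[List[float]]


def gaussian_window(radius: int, sigma: float) -> Grid:
    """Normalized 2D Gaussian weights over a (2r+1) x (2r+1) window."""
    w = [[math.exp(-(a * a + b * b) / (2 * sigma * sigma))
          for b in range(-radius, radius + 1)]
         for a in range(-radius, radius + 1)]
    total = sum(map(sum, w))
    return [[v / total for v in row] for row in w]


def weighted_local_sum(x: Grid, w: Grid) -> Grid:
    """Gaussian-weighted sum over each neuron's neighborhood (zero padding)."""
    r = len(w) // 2
    h, wd = len(x), len(x[0])
    out = [[0.0] * wd for _ in range(h)]
    for i in range(h):
        for j in range(wd):
            s = 0.0
            for a in range(-r, r + 1):
                for b in range(-r, r + 1):
                    if 0 <= i + a < h and 0 <= j + b < wd:
                        s += w[a + r][b + r] * x[i + a][j + b]
            out[i][j] = s
    return out


def local_contrast_normalize(x: Grid, radius: int = 2, sigma: float = 1.0,
                             eps: float = 1e-4) -> Grid:
    w = gaussian_window(radius, sigma)
    # Subtractive step: remove the Gaussian-weighted local mean.
    mean = weighted_local_sum(x, w)
    v = [[x[i][j] - mean[i][j] for j in range(len(x[0]))]
         for i in range(len(x))]
    # Divisive step: divide by the Gaussian-weighted local RMS of v.
    sq = weighted_local_sum([[e * e for e in row] for row in v], w)
    return [[v[i][j] / max(eps, math.sqrt(sq[i][j])) for j in range(len(v[0]))]
            for i in range(len(v))]


# Usage: a flat patch maps to ~0 away from the zero-padded border, and the
# output does not change (up to rounding) when the input contrast is tripled.
rng = random.Random(0)
patch = [[rng.random() for _ in range(8)] for _ in range(8)]
out1 = local_contrast_normalize(patch)
out3 = local_contrast_normalize([[3 * e for e in row] for row in patch])
flat = local_contrast_normalize([[5.0] * 8 for _ in range(8)])
```

The second property is the point of the divisive step: the next layer sees local structure, not the overall brightness or contrast of the region.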

The training objective is to minimize the sparse autoencoder's error in reconstructing the input image. Optimization is performed globally over all trainable network parameters (over 1 billion of them) using asynchronous stochastic gradient descent (asynchronous SGD). To handle a task of this scale, the authors used model parallelism: the local weights of the network are distributed across different machines. One copy of the model is spread over 169 machines with 16 CPU cores each.
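One way to write a sparse-autoencoder objective of this kind, with a reconstruction term and a sparsity penalty on L2-pooled responses, is shown below. The notation is an assumption here ($W_1$ encoding weights, $W_2$ decoding weights, $H$ fixed pooling weights with rows $H_j$, sparsity weight $\lambda$, small constant $\varepsilon$, $m$ unlabeled training images $x^{(i)}$, $k$ pooling units); see the original article for its exact formulation.

$$
\min_{W_1, W_2} \sum_{i=1}^{m} \left( \left\| W_2 W_1^{\top} x^{(i)} - x^{(i)} \right\|_2^2 + \lambda \sum_{j=1}^{k} \sqrt{\varepsilon + H_j \left( W_1^{\top} x^{(i)} \right)^2} \right)
$$

The first term is the reconstruction error described above; in the second, the square is taken elementwise, so each summand is the L2-pooled response of pooling unit $j$, and penalizing it drives most pooling units toward zero activity, that is, toward a sparse code.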

To parallelize training further, asynchronous SGD runs several replicas of the model. In the experiment described, the training set was split into 5 portions, each trained on a separate model replica. The replicas send parameter updates to centralized "parameter servers" (256 servers). In simplified form: before processing a mini-batch of 100 training images, a replica fetches the current parameters from the parameter servers, computes updates on the mini-batch, and sends the parameter gradients back to the parameter servers.
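The scheme can be illustrated with a toy, single-process simulation: several replica threads each train on their own portion of the data, fetch the parameters from sharded parameter servers before every mini-batch, and push gradients back without waiting for one another. The linear-regression model, learning rate, shard count, batch size and all names are assumptions for illustration; DistBelief is not public, and this is not its API.

```python
import random
import threading
from typing import List, Tuple

Example = Tuple[List[float], float]


class ParameterShard:
    """Holds one slice of the parameter vector and applies pushed gradients."""

    def __init__(self, size: int, lr: float):
        self.values = [0.0] * size
        self.lr = lr
        self.lock = threading.Lock()

    def fetch(self) -> List[float]:
        with self.lock:
            return list(self.values)

    def push(self, grad: List[float]) -> None:
        with self.lock:
            for k, g in enumerate(grad):
                self.values[k] -= self.lr * g


def replica(shards: List[ParameterShard], data: List[Example],
            batch_size: int, steps: int, seed: int) -> None:
    rng = random.Random(seed)
    for _ in range(steps):
        # 1. Fetch the current parameters from every shard.
        w = [v for shard in shards for v in shard.fetch()]
        # 2. Mean-squared-error gradient on one mini-batch of this portion.
        grad = [0.0] * len(w)
        for x, y in rng.sample(data, batch_size):
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for k, xi in enumerate(x):
                grad[k] += 2 * err * xi / batch_size
        # 3. Push each gradient slice to its shard; no barrier between replicas.
        offset = 0
        for shard in shards:
            n = len(shard.values)
            shard.push(grad[offset:offset + n])
            offset += n


def train(data: List[Example], dim: int, n_shards: int = 2,
          n_replicas: int = 5, batch_size: int = 10, steps: int = 200,
          lr: float = 0.05) -> List[float]:
    sizes = [dim // n_shards + (1 if s < dim % n_shards else 0)
             for s in range(n_shards)]
    shards = [ParameterShard(n, lr) for n in sizes]
    portions = [data[r::n_replicas] for r in range(n_replicas)]
    threads = [threading.Thread(target=replica,
                                args=(shards, portions[r], batch_size, steps, r))
               for r in range(n_replicas)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return [v for shard in shards for v in shard.fetch()]


# Usage: recover known weights from noise-free synthetic data.
rng = random.Random(42)
true_w = [2.0, -1.0, 0.5, 3.0]
data = []
for _ in range(500):
    x = [rng.uniform(-1, 1) for _ in true_w]
    data.append((x, sum(a * b for a, b in zip(true_w, x))))
learned = train(data, dim=4)
```

Because replicas never wait for each other, a replica that stalls or dies only means fewer updates reach the shards; the rest of the system keeps training, at the cost of some gradients being computed on slightly stale parameters.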

This form of asynchronous gradient descent is robust to failures of individual machines: if one replica fails, the others keep training.

The experiment relied on the DistBelief framework, which handles all communication between the parallel machines in the cluster, including dispatching requests to the parameter servers. Training took 3 days.

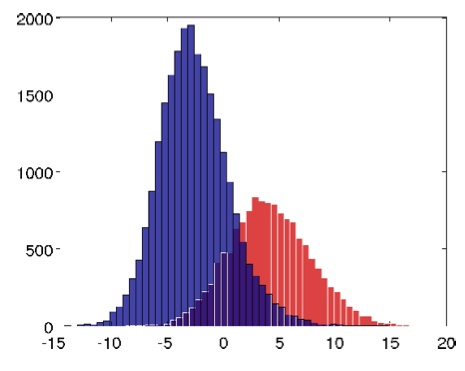

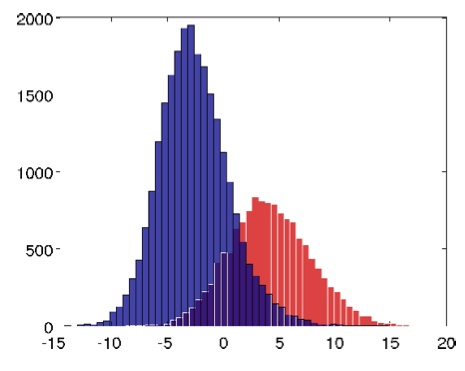

Experimental results

Surprisingly, neurons emerged during training, and the best of them recognized faces with 81.7% accuracy. The histogram of activation levels (relative to a threshold of zero) shows how many test images produced each activation level of the "grandmother neuron". Blue shows images without faces (random images), and red shows images containing faces.
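A single neuron can be scored as a face detector by thresholding its activation. The sketch below assumes we already have the neuron's activation on each labeled test image and picks the threshold that maximizes accuracy (the labels are used only for this evaluation, never for training); the function name and the data are hypothetical.

```python
from typing import Sequence, Tuple


def best_threshold_accuracy(activations: Sequence[float],
                            is_face: Sequence[bool]) -> Tuple[float, float]:
    """Return (threshold, accuracy) for the rule 'face if activation > threshold'.

    Candidates are every observed activation plus one value below all of
    them, which covers every distinct way of splitting the test set.
    """
    n = len(activations)
    candidates = sorted(set(activations))
    candidates.insert(0, candidates[0] - 1.0)
    best = (candidates[0], -1.0)
    for t in candidates:
        correct = sum((a > t) == f for a, f in zip(activations, is_face))
        if correct / n > best[1]:
            best = (t, correct / n)
    return best


# Usage on six made-up activations (three faces, three non-faces).
acts = [0.9, 0.8, 0.3, 0.7, 0.1, 0.2]
labels = [True, True, True, False, False, False]
t, acc = best_threshold_accuracy(acts, labels)
```

Here the best split is at 0.2, classifying 5 of 6 images correctly; the overlapping blue and red parts of the histogram are exactly the images that no threshold can separate.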

To confirm that the classifier had really learned to recognize faces, the trained network was visualized using two methods.

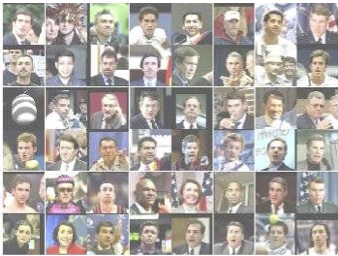

The first method is the selection of test images that caused the greatest activation of the neuron classifier:
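Selecting those images amounts to ranking the test set by activation. A minimal sketch, assuming the neuron's activation has already been computed for every test image; the function name is hypothetical.

```python
from typing import List, Sequence


def top_stimuli(activations: Sequence[float], k: int) -> List[int]:
    """Indices of the k test images with the highest activation, best first."""
    return sorted(range(len(activations)), key=lambda i: activations[i],
                  reverse=True)[:k]


# Usage: image 1 activates the neuron most, then image 3.
ranking = top_stimuli([0.1, 0.9, 0.4, 0.7], k=2)
```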

The second approach is numerical optimization to find the optimal stimulus: gradient descent is used to obtain the input pattern that maximizes the classifier's output activation for the given (fixed) network parameters.
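The idea can be sketched on a toy neuron `f(x) = tanh(w · x)`: gradient ascent on the input, renormalizing the input to unit L2 norm after each step so that the activation cannot grow simply by scaling the image up. The unit-norm constraint, the toy neuron, the step size and the iteration count are all assumptions for illustration. For this neuron the optimum is known in closed form, `w / ||w||`, which makes the result easy to check.

```python
import math
import random
from typing import List


def neuron(w: List[float], x: List[float]) -> float:
    """Toy 'face neuron': tanh of a weighted sum of the input pixels."""
    return math.tanh(sum(wi * xi for wi, xi in zip(w, x)))


def normalize(x: List[float]) -> List[float]:
    norm = math.sqrt(sum(v * v for v in x))
    return [v / norm for v in x]


def optimal_stimulus(w: List[float], steps: int = 1000, lr: float = 0.1,
                     seed: int = 0) -> List[float]:
    """Projected gradient ascent: maximize neuron(w, x) subject to ||x|| = 1."""
    rng = random.Random(seed)
    x = normalize([rng.gauss(0.0, 1.0) for _ in w])
    for _ in range(steps):
        s = sum(wi * xi for wi, xi in zip(w, x))
        # Gradient of tanh(w . x) with respect to x is (1 - tanh(s)^2) * w.
        scale = 1.0 - math.tanh(s) ** 2
        x = normalize([xi + lr * scale * wi for xi, wi in zip(x, w)])
    return x


# Usage: the optimized input lines up with the neuron's weight vector.
w = [0.3, -0.2, 0.5, 0.1, -0.4]
x_opt = optimal_stimulus(w)
```

In the real network the activation is a deep nonlinear function of the 200x200 input, so the optimum has no closed form and must be found numerically; the resulting pattern is what gets displayed as the neuron's "preferred" face.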

For more detailed quantitative results and conclusions, see the original article. For my part, I would note that this large-scale computational experiment demonstrated that a neural network can learn high-level concepts without a teacher, and that the parameters of this network (in particular, the number of layers and connections) roughly correspond to biological values.


Source: https://habr.com/ru/post/146077/
