HA (High Availability) VMware vSphere cluster on HP BL460c blades and EVA

The knowledge of EVA arrays and of iLO in ProLiant servers that you gained from the earlier articles can be put into practice by deploying a high-availability cluster on vSphere.

Medium and large enterprises can use such a cluster to reduce unplanned downtime. Because 24x7 availability of services to customers is critical for a business, this solution is built on a high-availability cluster. A cluster always includes at least 2 servers. In our solution, the servers running VMware monitor each other’s state, and only one of them is active at any given time; the virtual machine with our business application is deployed on it. If the active server fails, the second server automatically takes over its role, and customer access to the business application is almost uninterrupted.

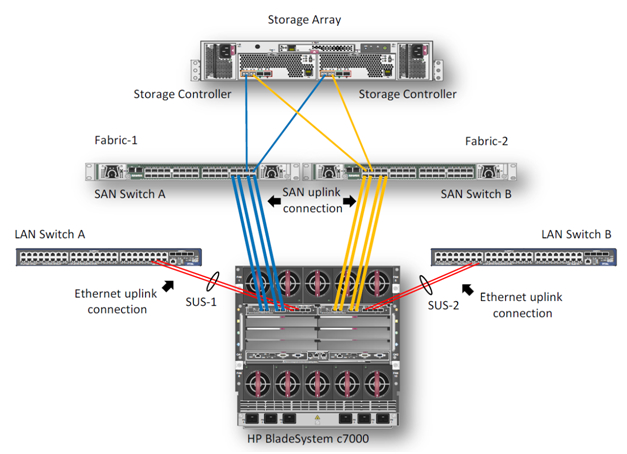

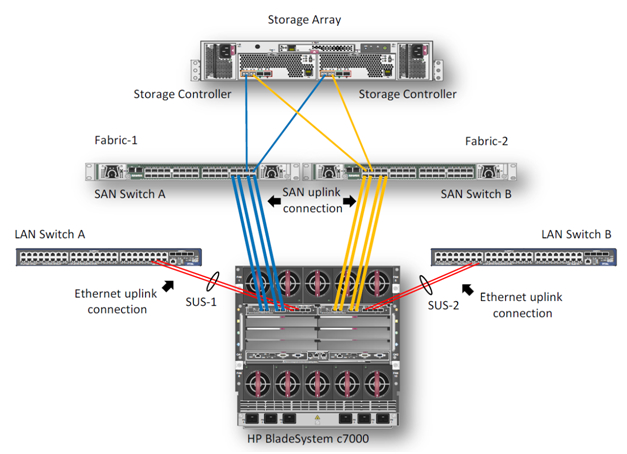

1. Description of the task

In this example, we describe how to create a highly available VMware cluster on BL460c G7 blade servers. The hardware consists of an HP c7000 blade enclosure and two BL460c G7 blade servers, on which a VMware HA (High Availability) cluster is configured. Only G7 blade servers are currently available in the HP demo center in Moscow, but you can build a cluster on the new Gen8 blade servers from the same example; the configuration will not differ significantly. The storage system is an HP EVA 4400. For Ethernet and Fibre Channel connectivity, 2 HP Virtual Connect FlexFabric modules are installed in bays 1 and 2 of the c7000 enclosure.


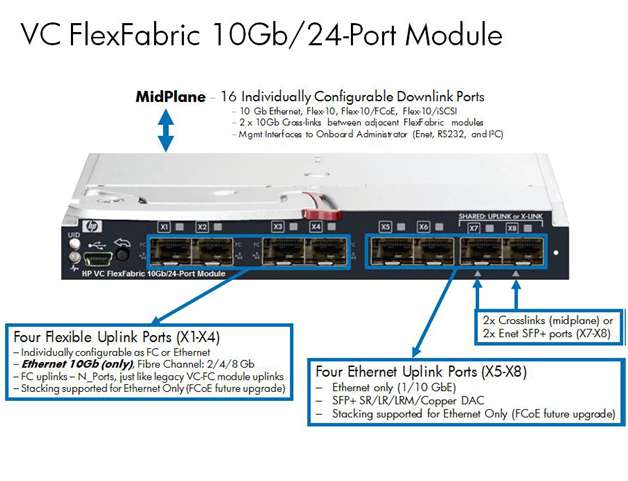

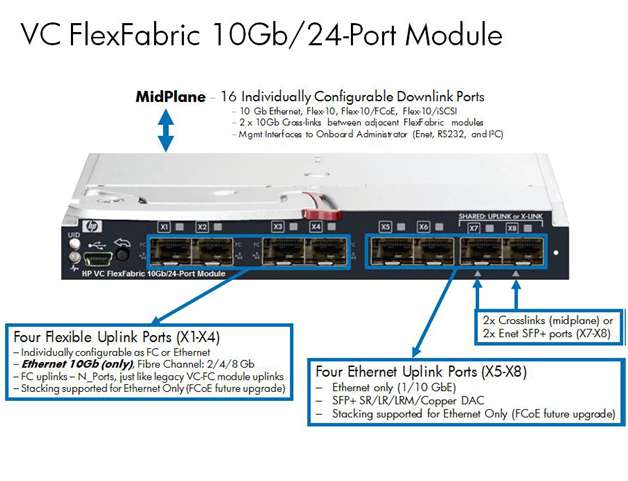

2. Description of components

Virtual Connect FlexFabric is a converged, virtualized I/O module for the c7000 blade enclosure.

The HP Virtual Connect FlexFabric module uses Flex-10, FCoE and Accelerated iSCSI technologies to connect the blades to the network infrastructure (SAN and LAN) at 10 Gb/s.

Among its capabilities: “profiles” (the table of server MAC addresses and WWNs) can be moved not only between blades in the same chassis, but also to remote sites that have the same type of blade server.

In addition, with Virtual Connect each of the two ports of a blade server's network adapter can be divided into 4 virtual ports (1GbE / 10GbE / 8Gb FC / iSCSI) that share a total bandwidth of 10 Gb/s per physical port. This gives 8 or more virtual ports per server (8 on the BL460c G7), which must be defined at the first initialization.
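The port arithmetic above can be sketched in a few lines. This is only an illustration: the labels `port1-a` … `port2-d` are hypothetical names chosen for the example, not identifiers taken from Virtual Connect.

```python
# Sketch: how a dual-port FlexFabric adapter is carved into virtual ports.
PHYSICAL_PORTS = 2       # the onboard adapter has two physical ports
FUNCTIONS_PER_PORT = 4   # each physical port is split into 4 virtual ports


def virtual_port_names(physical_ports=PHYSICAL_PORTS, functions=FUNCTIONS_PER_PORT):
    """Return hypothetical labels, one per virtual port."""
    letters = "abcd"[:functions]
    return [
        f"port{port}-{letter}"
        for port in range(1, physical_ports + 1)
        for letter in letters
    ]


names = virtual_port_names()
print(len(names), names)
```

With the defaults, the list contains 2 x 4 = 8 entries, which matches the 8 virtual ports available on the BL460c G7.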

The only limitation is that HP Virtual Connect FlexFabric modules are not full switches. They are aggregators that require separate Ethernet and SAN switches (although the latest firmware adds a Direct Connect feature for HP P10000 3PAR).

The Onboard Administrator shows a general view of the equipment used.

The NC553i Dual Port FlexFabric 10Gb adapter integrated into the ProLiant BL460c G7 can work with HP Virtual Connect FlexFabric modules directly, without additional mezzanine cards. Two HP Virtual Connect FlexFabric modules are installed to provide fault-tolerant paths from the blade servers to the EVA 4400 storage system and to the Ethernet switches.

In this example, we consider a scenario in which traffic from the HP Virtual Connect FlexFabric module is split between Ethernet switches and an FC fabric. FCoE in this case is used only between the modules and the blades inside the enclosure.

HP Virtual Connect FlexFabric modules require transceivers to work with the various protocols. In this example, 4 Gb FC SFP transceivers were installed in ports 1 and 2 of each module, and 10 Gb SFP+ transceivers were installed in ports 4 and 5. The 10GbE links were used in this test to connect to the network equipment in our demo center.

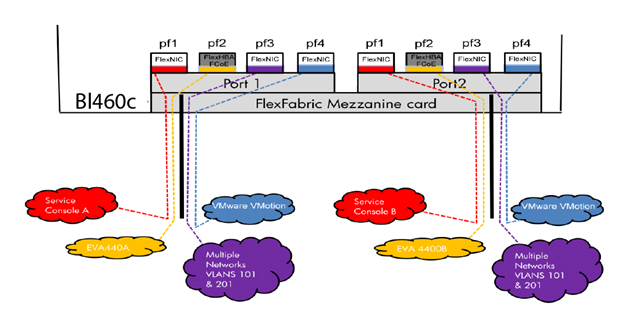

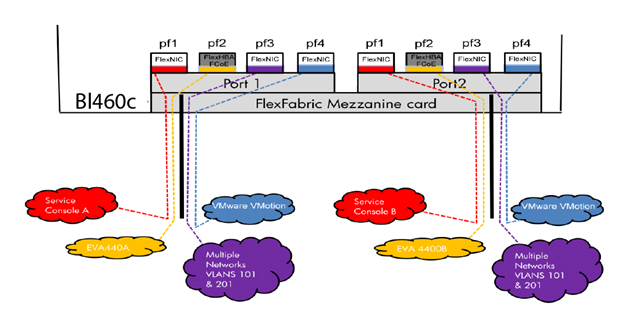

The diagram shows that on each physical port of the NC553i Dual Port FlexFabric 10Gb network adapter, 3 virtual ports are configured as FlexNICs for virtual machine traffic and for connecting to the network infrastructure. The remaining virtual port is configured as FCoE for communication with the SAN infrastructure and the EVA 4400 storage system.

HP Virtual Connect Manager (VCM) management software is supplied with every Virtual Connect module; HP Virtual Connect Enterprise Manager is intended for enterprise-scale installations. With Enterprise Manager you can manage up to 256 domains from one console (1 domain is 4 blade systems with Virtual Connect).

After connecting the module, you need to configure it. This is done either through the enclosure's Onboard Administrator or through the default IP address assigned to the module at the factory.

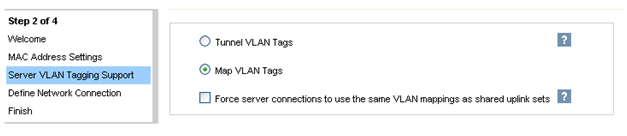

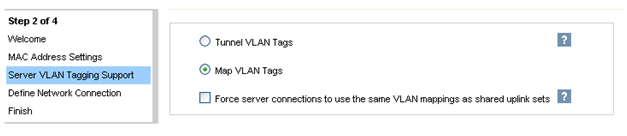

3. Configuring Profiles in Virtual Connect Manager

Once HP FlexFabric is connected, we need to define the domain, the Ethernet networks, the SAN fabrics and the server profiles for the hosts. These settings are made in HP Virtual Connect Manager or on the command line. In our example the domain has already been created, so the next step is to define the VC Shared Uplink Sets that carry the virtual machine Ethernet networks named HTC-77-A and HTC-77-B. Enable the “Map VLAN tags” feature in the setup wizard.

The “Map VLAN tags” feature lets one physical interface carry multiple networks through a Shared Uplink Set (SUS). In this way, multiple VLANs can be configured on a server network adapter interface. The Shared Uplink Set parameters were specified in the VCM setup wizard. Example of defining an SUS:

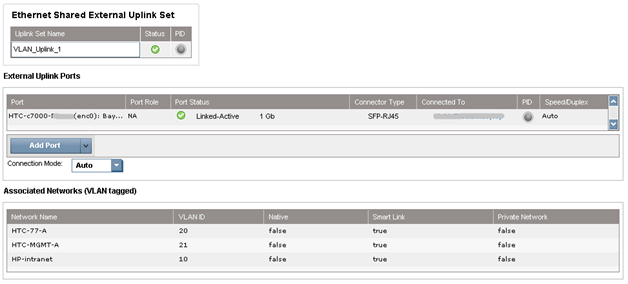

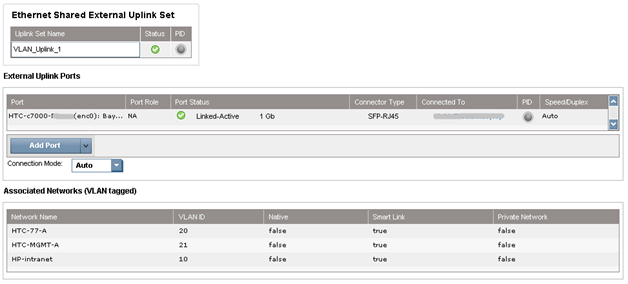

The networks HTC-77-A and HTC-MGMT-A were assigned to the first Shared Uplink Set, VLAN_Uplink_1, on port 5 of module 1. The second Shared Uplink Set, VLAN_Uplink_2, was assigned to port 5 of module 2 and includes the networks HTC-77-B (VLAN 20) and HTC-MGMT-B (VLAN 21). An example of creating Ethernet networks in VCM:

The HP Virtual Connect module can create an internal network without uplinks, using the high-performance 10Gb connections to the c7000 backplane. Such an internal network can be used for VMware vMotion and for network fault tolerance. Its traffic never reaches the external ports of the c7000. In our case, 2 VMware vMotion networks were configured. For the management console, two networks named HP-MGMT-A and HP-MGMT-B were configured. Port 6 of the HP Virtual Connect FlexFabric modules in bays 1 and 2 was used to connect to the management console.

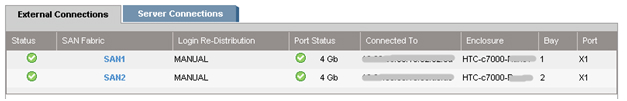

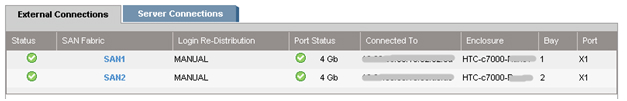

Next comes the SAN fabric configuration. It is quite simple: in VCM, choose Define - SAN Fabric. For the first fabric, SAN-1, select the module in bay 1, port X1. For the second fabric, SAN-2, the port is also X1. SAN configuration example:

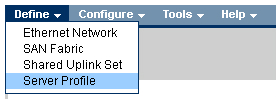

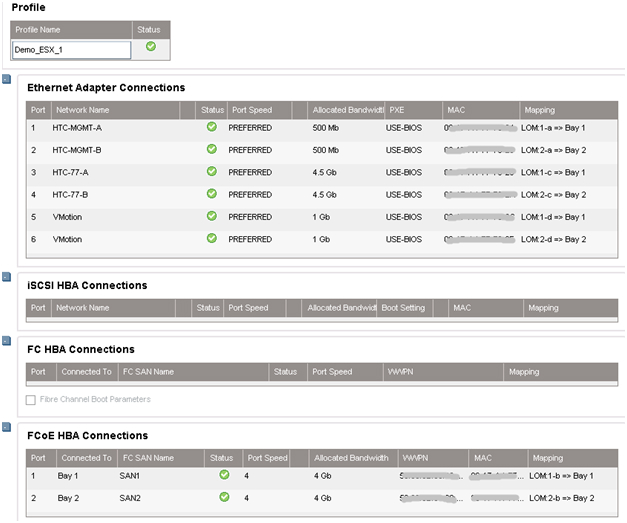

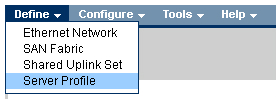

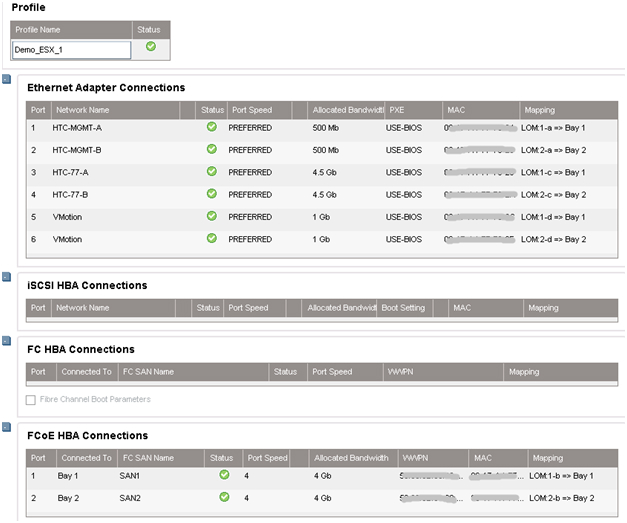

The final step is to tie all the previously made settings together in a server profile. This can be done in several ways, for example through the VCM web interface or through the Virtual Connect command line, the Virtual Connect Scripting Utility (VCSU). Before installing the OS, check that all system, NIC and FC drivers are up to date and that all network parameters are set. An example of creating a profile in VCM:

In VCM, select Define - Server Profile, enter the profile name and select the network connections from those created earlier.

In our case, all the ports were assigned in advance: 6 Ethernet ports and 2 SAN ports for each profile. The profiles for bays 11 and 12 are identical, i.e. each server sees the same set of ports. The total speed does not exceed 10 Gb/s for each port of the server network adapter.

If network expansion is planned, it is recommended to add the extra ports to the profile now and leave them unassigned. You can then activate these ports later without shutting down and restarting the server.

When a profile is created, only 2 ports are available in the Ethernet section by default. To add more, right-click to open the context menu and select “Add connection”.

We assign a speed to each type of port: management ports 500 Mb/s, vMotion ports 1 Gb/s, SAN ports 4 Gb/s, and virtual machine ports 4.5 Gb/s.
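As a quick sanity check of these numbers, the sketch below adds up the speeds for one physical adapter port. It assumes that each physical port carries exactly one connection of each type, which is consistent with 6 Ethernet and 2 SAN connections spread over two ports; the dictionary keys and the function name are made up for the example.

```python
PORT_CAPACITY_GBPS = 10.0  # bandwidth of one physical adapter port

# Speeds quoted in the article, in Gb/s.
# Assumption: one connection of each type per physical port.
allocation_gbps = {
    "management": 0.5,
    "vmotion": 1.0,
    "san_fcoe": 4.0,
    "vm_network": 4.5,
}


def check_allocation(allocation, capacity=PORT_CAPACITY_GBPS):
    """Return the total allocated speed, or raise if it exceeds the port capacity."""
    total = sum(allocation.values())
    if total > capacity:
        raise ValueError(f"allocated {total} Gb/s exceeds the {capacity} Gb/s port")
    return total


total = check_allocation(allocation_gbps)
print(f"allocated {total} of {PORT_CAPACITY_GBPS} Gb/s")
```

Under this assumption the four speeds add up to exactly the 10 Gb/s available on the port, so no bandwidth is left unallocated.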

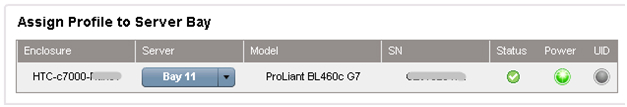

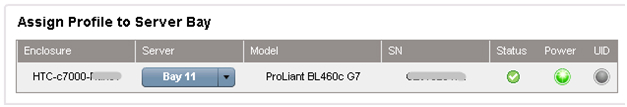

After the profiles are created, they are assigned to the blade bays of the enclosure in the same menu.

4. Configure VMware Cluster

This completes the enclosure configuration. Next, we connect remotely to the blades themselves and deploy VMware ESX 5.0; an example of remote OS installation on ProLiant servers via iLO has already been described.

In our case, VMware is selected instead of Windows in the installer drop-down menu, and the path to the distribution is specified.

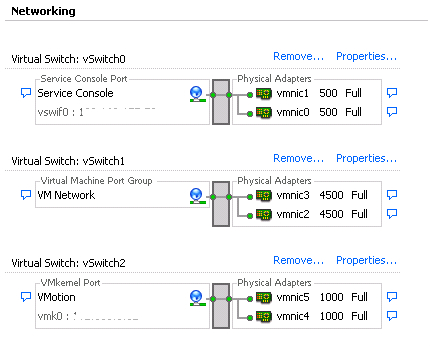

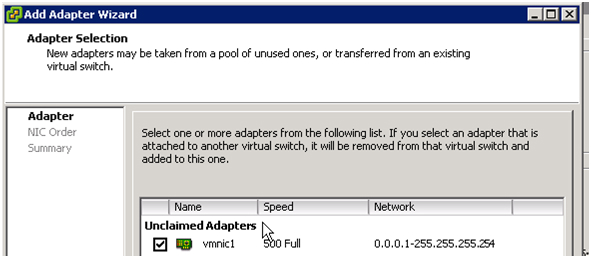

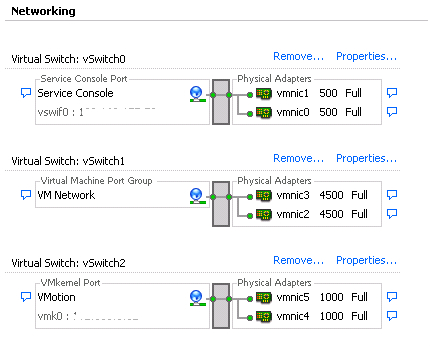

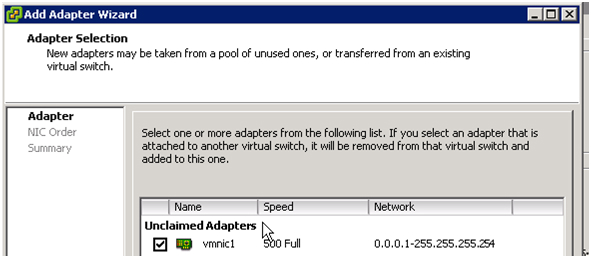

After installation, the server restarts and you can begin to configure the virtual machines. To do this, connect to the ESX server. In the Configuration section, set up 6 vmnics on each host, matching the Virtual Connect profile: Service Console (vSwitch0), VM Network (vSwitch1) and vMotion (vSwitch2). This gives a fault-tolerant configuration in which each network is backed by 2 vmnics.
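The layout can be written down as a small table and checked for redundancy. In this sketch the vSwitch names and roles come from the text, while the vmnic numbering (vmnic0 to vmnic5 and which pair belongs to which vSwitch) is an assumption made for illustration.

```python
# vSwitch names and roles are from the article; the vmnic numbering is assumed.
vswitches = {
    "vSwitch0": {"role": "Service Console", "uplinks": ["vmnic0", "vmnic1"]},
    "vSwitch1": {"role": "VM Network", "uplinks": ["vmnic2", "vmnic3"]},
    "vSwitch2": {"role": "vMotion", "uplinks": ["vmnic4", "vmnic5"]},
}


def redundancy_problems(layout, minimum_uplinks=2):
    """List vSwitches with too few uplinks and vmnics attached more than once."""
    problems = []
    seen = set()
    for name, config in layout.items():
        if len(config["uplinks"]) < minimum_uplinks:
            problems.append(f"{name} has fewer than {minimum_uplinks} uplinks")
        for nic in config["uplinks"]:
            if nic in seen:
                problems.append(f"{nic} is attached to more than one vSwitch")
            seen.add(nic)
    return problems


problems = redundancy_problems(vswitches)
print(problems or "every network has two dedicated uplinks")
```

An empty result means every network has two dedicated uplinks and all 6 vmnics are used exactly once.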

As soon as vmnics are added, you will notice that each adapter is automatically set to the speed specified in the server profile when configured in Virtual Connect Manager.

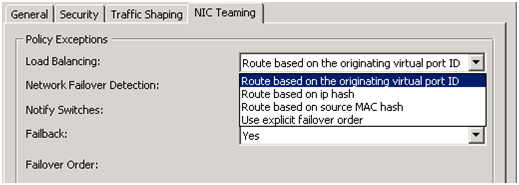

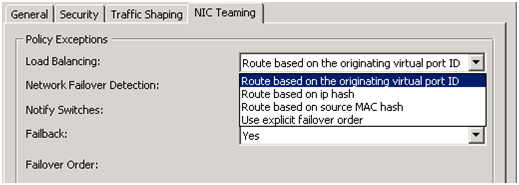

After adding the vmnics, we team them on each vSwitch: on the Ports tab of the Configuration section, select the vSwitch, click Edit, open NIC Teaming and make sure that both vmnics are visible. For load balancing, select “Route based on the originating virtual port ID”; this is the recommended default setting (for more information about this method, see the VMware website).
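The idea behind port-ID-based teaming can be illustrated with a simplified model. The real uplink selection is internal to the vSwitch; the modulo rule, the function name and the vmnic names below are assumptions used only to show that each virtual port is pinned to one uplink and moves to the surviving uplink when one fails.

```python
def select_uplink(virtual_port_id, active_uplinks):
    """Simplified model: pin a virtual port to one of the active uplinks."""
    if not active_uplinks:
        raise ValueError("no active uplinks left in the team")
    return active_uplinks[virtual_port_id % len(active_uplinks)]


team = ["vmnic2", "vmnic3"]  # hypothetical uplinks of one vSwitch

# With both uplinks healthy, virtual ports are spread across the team.
placement = {port_id: select_uplink(port_id, team) for port_id in range(4)}

# If vmnic2 fails, every virtual port lands on the surviving uplink.
after_failure = {port_id: select_uplink(port_id, ["vmnic3"]) for port_id in range(4)}

print(placement)
print(after_failure)
```

In this model a single virtual machine port never uses more than one uplink at a time, which is why the method needs no special configuration on the upstream switches.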

5. Setting up the EVA 4400 disk array

The previous review described how to work with Command View, the management interface of the EVA disk array.

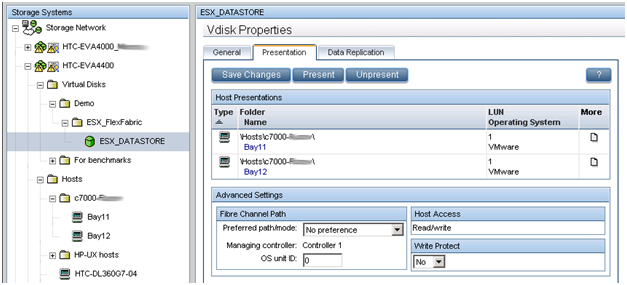

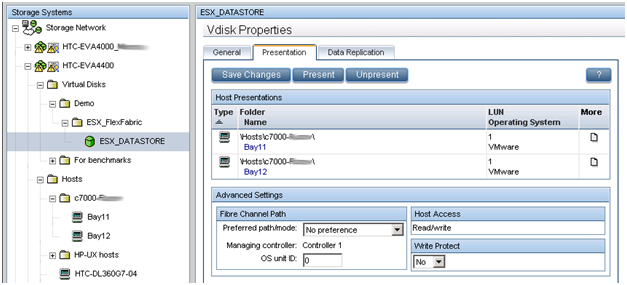

In this example, we create a 500 GB LUN that is shared between the two virtual machine hosts. Following the method described in the previous article, a virtual disk is created and presented to both ESX hosts. The size of the LUN depends on the type of OS and on the roles the cluster will perform. A prerequisite for the cluster (in particular, for VMware vMotion) is that the LUN is shared. The new LUN must be formatted with VMFS, and the Raw Device Mappings (RDMs) feature must be enabled for the virtual machines.
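The shared-LUN prerequisite is a simple set condition: every host in the cluster must appear in the LUN's list of presentations. A minimal sketch, in which the LUN name is hypothetical and the host names are the ones used in this demo cluster:

```python
# Which hosts each LUN is presented to (the LUN name is hypothetical).
presentations = {
    "demo-lun-500gb": {"Demo-ESX1", "Demo-ESX2"},
}

cluster_hosts = {"Demo-ESX1", "Demo-ESX2"}


def is_shared(lun, hosts, lun_presentations):
    """True if the LUN is presented to every host in the given set."""
    return hosts <= lun_presentations.get(lun, set())


shared = is_shared("demo-lun-500gb", cluster_hosts, presentations)
print("LUN visible to all cluster hosts:", shared)
```

If the LUN were presented to only one of the two hosts, the check would fail, and the other host could not run or receive the virtual machine.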

6. Implementing VMware HA and VMware vMotion

HP Virtual Connect in combination with VMware vMotion provides the same level of redundancy as two VC-FC modules plus two VC-Eth modules, but needs only 2 HP FlexFabric modules. HP Virtual Connect FlexFabric lets you set up fault-tolerant paths to a shared LUN and fault-tolerant network connectivity through NIC Teaming. All VMware vSphere settings follow the Best Practices described in the document.

A cluster availability check was performed. A Windows Server 2008 R2 virtual machine was deployed on one of the hosts.

The virtual machine was manually migrated from one server to the other several times, and the cluster remained available throughout the migrations.

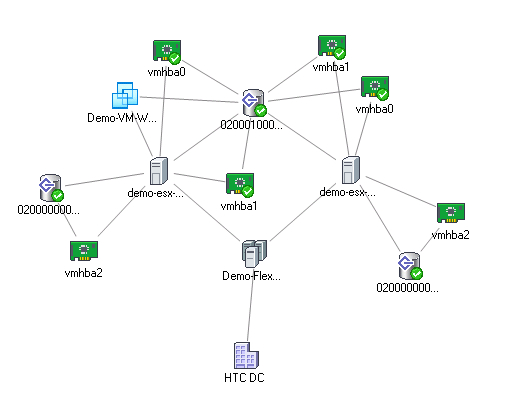

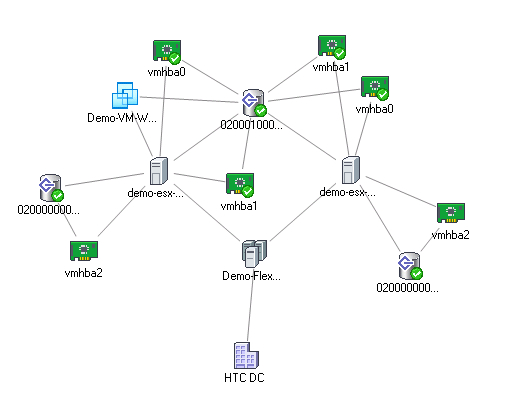

Cluster layout:

HTC DC is our datacenter, Demo-FlexFabric Cluster is our cluster, and Demo-ESX1 and Demo-ESX2 are the VMware hosts. vmhba2 is the blade server's SAS controller, connected to the internal disk subsystem. vmhba0 and vmhba1 are the two ports of the embedded NC553i Dual Port FlexFabric 10Gb Adapter, connected to the shared LUN on the EVA 4400. Demo-VM-W2K8R2-01 is the virtual machine.

Literature:

1. HP Blade Server BL460c Gen8

2. HP Virtual Connect FlexFabric

3. Deploying a VMware vSphere HA Cluster with HP Virtual Connect FlexFabric

4. VMware Best Practices vSphere 5.0

5. VMware Virtual Networking Concepts

Source: https://habr.com/ru/post/145859/
