The Hadron Collider, a Force 10 Wind, and Switches

Habr already has plenty of posts about data centers and computing clusters, but are you curious what equipment the giant Facebook runs on or, say, the Moscow Internet Exchange? What is the SKIF MSU “Chebyshev” supercomputer built on, and what hardware does the Large Hadron Collider use in its network? Welcome under the cut, as they say!

Caution! Many pictures and text.

')

Its name, Force10, is primarily due to one of its founders, Somu Sikdar, who, as an avid yachtsman and lover of big waves, perpetuated his respect for the wind with a power of 10 on the Beaufort scale. In fact, this is a very strong storm. Since its inception, the company has specialized in solutions for high-performance computing clusters and corporate data processing centers (DPCs). “Biography” with a length of just over 10 years, but it can be counted a half dozen events that have become significant events for the global networking industry: the first 5 Tbit / s passive backplane, the first multiprocessor switch, the first rack Gigabit switch for the data center, the first modular switch with 1260 x GE ports, the first 10/40 GE factory based on open standards, the first 2.5 Tbit / s core network switch with a 2RU size.

Among customers using Force10 switches are large Internet companies, for example, the social network Facebook , and Internet traffic exchange points (German DE-CIX and Moscow MSK-IX ). A separate consumer segment of Force10 - clusters of high-performance computing, in particular, the supercomputer industry. According to analysts, 30% of the world's top40 supercomputers list use Force10 equipment. In particular, within the framework of the SKIF-GRID supercomputer program of the Union State of Russia and Belarus and the national project Education with the use of Force10 technologies, a SKIF — MSU supercomputer Chebyshev was created. This joint development of Moscow State University, the Institute of Software Systems RAS and T-Platforms in the fall of 2009 ranked second in the list of Top 50 most powerful computers in the CIS. In general, T-Platforms is a permanent partner of the Force10 team, using these high-performance switching solutions in many of its projects.

In addition, many corporate networks are built on Force10 products requiring high-performance switching. A striking example is the network of the European Nuclear Center CERN. Experiments in the 27-kilometer tunnel of the Large Hadron Collider, where protons are accelerated, generate about 15 Petabytes of data annually. All of them are immediately distributed to computer laboratories, including approximately 500 research institutes and universities from around the world. The main line of the CERN laboratory campus operates on the basis of a core built using 10 GE Force10 E-series switches.

In August 2011, Dell acquired the company, and the corresponding product line was named Dell Force10, which made Dell the global market leader in the leading segment of 40 GE networks. The current market positioning of Dell Force10 solutions resulting from the merging of Dell and Force10 technologies can be summarized as follows: high-performance, open solutions for data centers that achieve maximum functionality, flexibility, scalability and trouble-free performance of any data center, regardless of whether they serve a small workgroup or the largest internet search site.

There are two key words in the definition of the niche for Dell Force10 products: "high-performance" and "open." The Dell Force10 family of products is an open, high-performance switching solution for data centers based on an open architecture. It involves the support of open standards, the ability to integrate with equipment from other manufacturers, thereby ensuring the protection of customer investments.

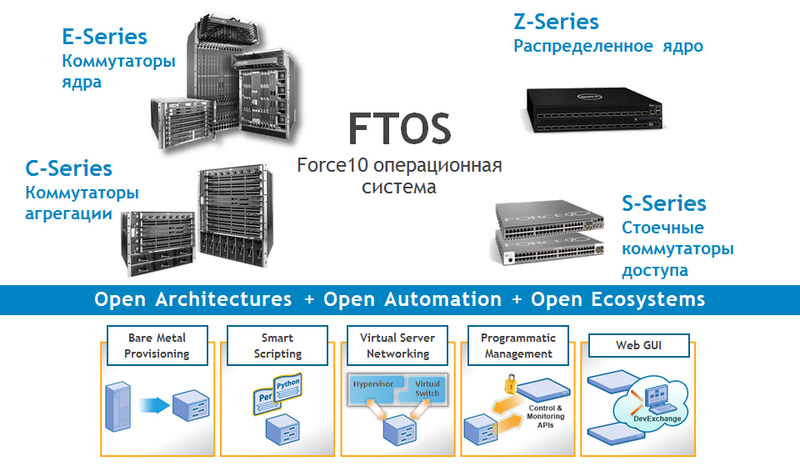

All Dell Force10 switches run on a single modular operating system, FTOS (Force Ten Operating System), supporting switching at the second and third level (Layer 2 and Layer 3), with high performance and fault tolerance. It implements a level of abstraction equipment, which allows you to run the same applications on different products of the Force10 family. In other words, it is possible to repeatedly use the same code, which accelerates the deployment of applications and system functionality.

FTOS software is based on NetBSD, a free, open source operating system built on a modular principle that is highly flexible, reliable, portable and efficient. FTOS is a carrier-class operating system that runs even on the youngest model of Dell Force10 switches.

Dell OpenManage Network Manager with a graphical user interface is used to manage Dell network solutions. In addition, in large installations and virtualized environments, the functionality of FTOS is complemented by excellent automation software in the data center called Open Automation , which simplifies the management of the entire system and increases its efficiency.

With the increasing proliferation of virtualization, data centers must respond faster to change. However, management tasks are becoming more complex: IT managers have to deal with hundreds and thousands of virtual machines with the appropriate storage resources and networks. The infrastructure of the data center must quickly adapt to the requirements of the applications and respond to them. In addition, servers, storage systems and networks can no longer be managed separately - a single dynamic environment is needed. Actually, Open Automation is aimed at solving these problems.

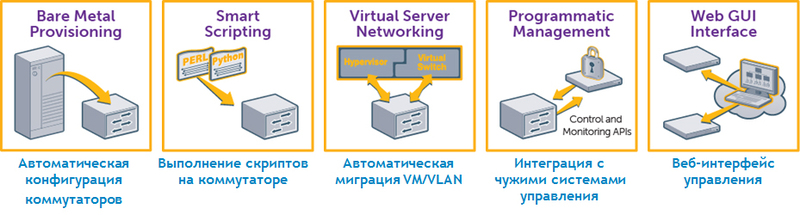

In essence, Open Automation is a collection of interconnected network management tools that can be used together or separately:

The Dell Force10 product line consists of four main categories (series):

1) E-series modular switches;

2) C-series modular switches;

3) rack mount Top-of-Rack S-series switches;

4) Z-series to build a distributed core.

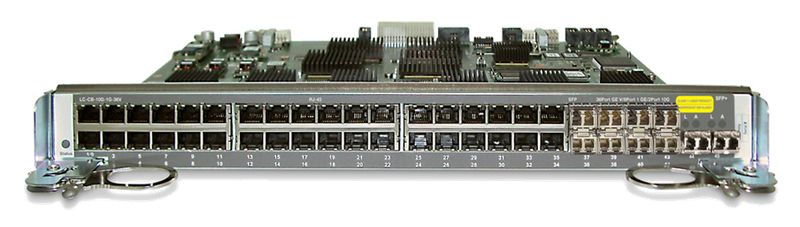

The E-series includes High End modular switches that include up to 140 ports of 10 GE without oversubscription or 560 10GE with 4: 1 oversubscription. It is noteworthy that in one chassis you can provide 1260 GE ports - a unique figure in the industry for scaling for modular switches. Due to the high port density of 10 GE, these switches are great for working as a data center core.

The modular chassis of the ExaScale E1200i E-series switches in the 24RU form factor includes:

Fault tolerance of the chassis is provided by redundant power supplies and fans, redundant processor modules (1 + 1), redundant switching fabric modules (9 + 1).

The chassis is based on a distributed architecture, which involves distributed processing on interface cards, as well as separate processors for Layer 2, Layer 3 protocols and control.

The ExaScale E600i modular chassis in the 16RU form factor provides a passive 2.7 Tbit / s Backplane and has 7 slots for interface cards (125 Gbit / s per slot). The modules of the switching factory (4 + 1 1.75 Tbit / s), processor modules (1 + 1), and power supply units (3 + 1 AC) are backed up.

The C-series is a modular chassis for the aggregation of data centers and corporate network cores. They are characterized by low cost per port. Capable of supporting up to 64 ports of 10 GE and 384 GE without oversubscription. Customers called this switch a tractor: they turned it on, they tuned it once, and it “plows” like a tractor.

Models C150 and C300 have a passive copper backplane, four or eight slots for interface cards (96 Gbit / s per slot). The capacity is, respectively, 768 Gbit / s and 1,536 Tbit / s. Power supplies, processor modules with integrated switching factory, fans are reserved.

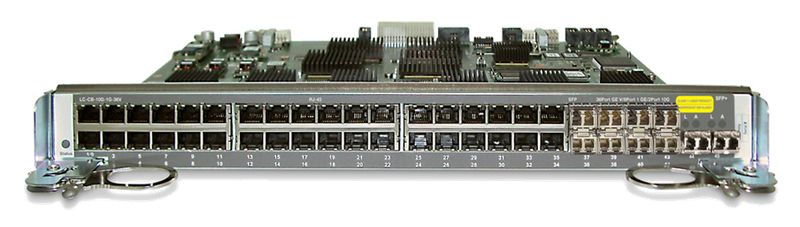

In the list of interface cards for the C-series there is an interesting version of the FlexMedia card with different types of ports located on one card: 36 ports 10/100/1000, 8 ports GE SFP and 2 ports 10GE SFP +.

C-series modular switches are great for use in data centers as an end-of-row switch and for aggregation purposes. Since all C-series switching systems support Power over Ethernet, they can be deployed in campus networks and wiring closets.

The S-series is made up of top-of-rack switches with stacking capability that provide server connections over Gigabit Ethernet and 10GE.

The S-series product line consists of 1/10/40 GE fixed configuration systems designed primarily for use as top-of-rack data center access level switches. As part of the line: the S55 model with 44 ports 10/100 / 1000Base-T and low switching latency, the S60 model with an increased packet buffer, and S4810, focused on ensuring the highest density of ports in the 1/10 GE Top-of-Rack switch and supporting 40 GE.

S55 and S60 models - Top-of-Rack Gigabit Switches - have 44 10/100/1000 copper ports for connecting servers and up to 4 10 GE ports. S55 and S60 models support Open Automation functionality.

The S60's packet buffer size is 1.25 GB, a unique indicator for rack-mount gigabit switches for the entire network industry. A switch with such capabilities is particularly well suited for applications that, on the one hand, are characterized by large bursts of traffic, and on the other hand, are critical for packet loss, such as video, iSCSI, Memcache, Hadoop.

The S4810 rack-mounted Top-of-Rack switch with non-blocking architecture provides 640 Gbit / s bandwidth (full-duplex) and 700 ns low latency, 48 ports of 10 GE for connecting servers and 4 ports of 40 GE. Using splitter cables, port 40 GE can be turned into 4 ports of 10 GE, and then the switch has 64 ports of 10 GE, operating at full speed and without oversubscription. Supports 128K MAC addresses, provides switching at Layer 2 and Layer 3 levels, routing protocols IPv4 and IPv6. Implemented native support for automation and virtualization (Open Automation), as well as support for the convergence of SAN and LAN (DCB, FCoE).

S4810 switches can be stacked using 10 or 40 GE ports (the maximum bandwidth for stacking is 160 Gbps). Supports up to 6 switches per stack. It does not require special stack modules.

Virtual Link Trunking (VLT) technology is also supported, which allows servers and switches to be connected via aggregated channels to two S4810 switches, thereby ensuring fault tolerance and balancing traffic across all channels in the network.

The Dell Force10 MXL Blade Switch for the Dell PowerEdge M1000e Blade Chassis, announced in late April and first shown at Interop 2012 in Las Vegas, is the industry's first 40 GE switch in the blade format. It is created as an integral part of the virtual network architecture, based on the requirements of high performance and high density, and is capable of supporting modern workloads in both traditional and virtual network structures, as well as in public and private cloud environments.

The Dell Force10 MXL features high port density in a single device: 32 1/10 GE ports for connecting blade servers, provided non-blocking local switching for all blade servers connected to the switch. It scales to six external 40 GE ports or up to 24 external 10GE ports.

The Dell Force10 MXL switch supports stacking, allowing up to six switches to be connected to the stack through a single IP address. Ports 40 GE are used for stacking.

In addition, the MXL switch can be easily expanded using interface expansion modules by adding 40 GE QSFP +, 10 GE SFP + or 10GBase-T ports. Using the Dell Force10 MXL Blade Switch is a good way to build a converged SAN / LAN network. It fully supports converged storage solutions based on DCB and FCoE. MXL switch is available now.

Switch Z9000 is designed to build a distributed core. With a 2RU size, it can accommodate 32 ports of 40 GE or with splitter cables - 128 ports of 10 GE. The non-blocking factory is capable of providing a throughput of 2.5 Tbit / s with a delay of less than 3 µs.

At the same time, the switch consumes very little power - up to 800 W, which is 6.25 W per port of 10 GE. It is a typical building block for the new Distributed Core architecture developed by Dell.

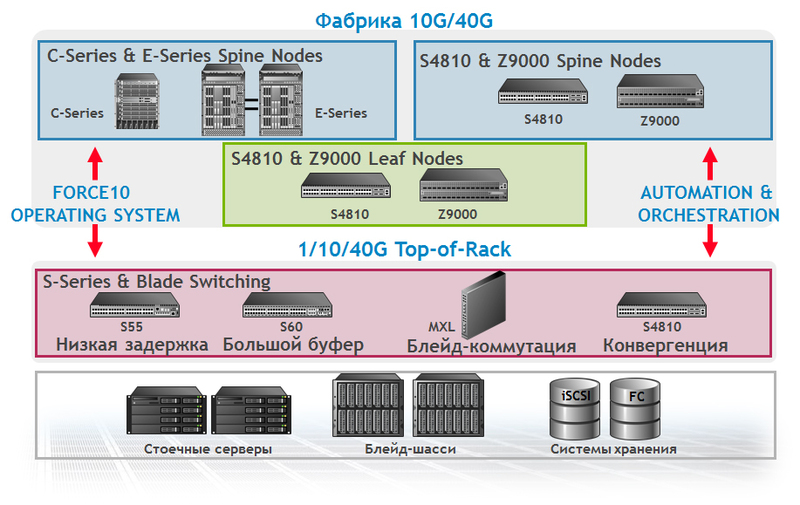

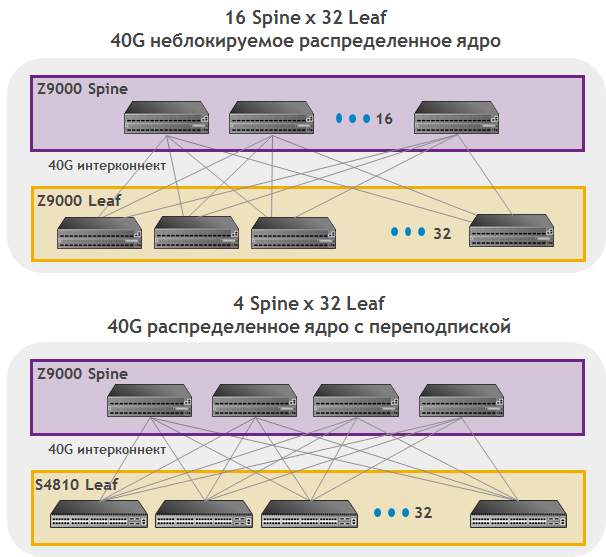

The distributed core architecture consists of two levels: Spine (the one above) and Leaf (the one below). It is possible to draw an analogy with a modular chassis - to present a distributed core in the form of a large modular chassis, in which Spine switches play the role of switching factory, Leaf switches play the role of interface cards.

Connections between Spine and Leaf switches are made using 40 GE interfaces, and 10 GE can also be used with splitter cables. The control protocol of this modular chassis “lives” on each of the switches: both Spine and Leaf, that is, it is distributed. All switches communicate and interact with each other based on open standards. Scaling is done by adding plug-and-play switches to the factory of Spine and Leaf switches. Using a distributed kernel, you can build a Layer 3 factory based on the routing protocols OSPF, BGP and the traffic balancing mechanism for multiple paths using ECMP (Equal Cost Multipathing). Thus it is possible to use all the channels in the factory. In the future, it is also planned to support the TRILL protocol (Transparent Interconnection of Lots of Links), which will create a Layer 2 factory with balancing on all available channels in the factory.

Depending on the tasks involved in designing a factory, you can build a distributed core without oversubscription and with oversubscription.

This architecture provides high reliability. Each Spine node passes 100 / n percent of the bandwidth (where n is the number of Spine switches). This means that for 16 Spine nodes, if one of them fails, 93.75% of the bandwidth is available, compared to 50% for a traditional design consisting of two modular switches.

In addition, it becomes possible to scale the system as it grows: start with the minimum number of Spine and Leaf nodes and then add as needed (up to 8192 access ports of 10 GE without oversubscription in the factory). Compared to a network built on a modular chassis, the distributed core has less power consumption and takes up less space in racks.

In order to simplify the creation of a distributed kernel, Dell has developed a special software, Dell Fabric Manager. It helps to design, build, and manage a distributed core. In particular, a number of labor-intensive functions are automated, such as the development of a wiring diagram for connecting ports between factory switches or configuring a system of multiple switches. Dell Fabric Manager also supports remote monitoring of distributed core switches using a visual graphical representation of network topology.

Thus, the distributed core architecture provides an ultra-scalable Leaf-Spine factory for a data center with minimal switching latency at speeds up to 160 Tbit / s with moderate sizes, power requirements and price.

Virtualization is now clearly spreading to networks as well: network capabilities for virtual machines are being built into hypervisor environments. The first step was the emergence of embedded virtual switches (vSwitch), such as the vDS for VMware ESX. Dell has included virtualization support in Open Automation, its data center automation software. The next logical step toward deeper network virtualization, including virtualization across data centers, is tied to the VxLAN (Virtual Extensible Local Area Network) and NVGRE (Network Virtualization using Generic Routing Encapsulation) technologies.

In August last year, a group of companies led by VMware submitted an IETF draft for VxLAN, virtual extensible local area networks. In essence, it is a proposed virtualization standard that lets virtual machines move across Layer 3 boundaries by abstracting the Layer 2 identifier (Layer 2 ID). In September, another group of companies, led by Microsoft, announced a second IETF draft, this one for NVGRE. The network virtualization environment proposed by NVGRE supports secure sharing of resources (multi-tenancy) and virtual machine mobility in private and public clouds using the Generic Routing Encapsulation (GRE) protocol.
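To make the idea of a Layer 2 identifier abstraction more concrete, here is a minimal sketch of how a VxLAN header carries a 24-bit virtual network identifier (VNI). The 8-byte layout follows the VXLAN specification as later published in RFC 7348; the function names are illustrative and are not part of any Dell or VMware API.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field carries a valid value


def pack_vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) followed by 24 reserved bits.
    # Word 2: VNI (24 bits) followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def unpack_vni(header: bytes) -> int:
    """Extract the VNI from an 8-byte VXLAN header."""
    _, word2 = struct.unpack("!II", header)
    return word2 >> 8


header = pack_vxlan_header(5000)
print(header.hex(), unpack_vni(header))
```

A 24-bit identifier allows roughly 16 million logical segments, compared with the 4094 usable IDs of a classic 12-bit VLAN tag.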

Dell intends to support both specifications, VxLAN and NVGRE, and is working on support for the corresponding technologies in its Dell Force10 products. Dell customers will therefore be able to keep using the hypervisors they are used to. Moreover, Dell intends to work closely with leading hypervisor and operating system vendors to further improve the VxLAN and NVGRE specifications.

We believe that, in addition to virtual networks in hypervisor environments, the physical network itself must also evolve toward open, standards-based Software Defined Networking (SDN). The SDN architecture rests on two fundamental principles: (1) separation of the control plane from the data plane and (2) compatibility with virtual networks in next-generation hypervisor environments. In the network architecture of the future, applications are aware of the network, run in a virtualized environment and, when necessary, dynamically create logical networks. These logical networks are overlays: they run on top of a software-controlled physical network infrastructure and are designed to deliver all the necessary data, applications and additional services to any user, whether the environment is physical or virtual.

At Dell, this architecture is called the Virtual Network Architecture (VNA), and the company develops its products and solutions in line with SDN, which is a priority direction for us.

Well, if you are wondering how Dell Force10 switches could help your business, please contact us. Our experts can advise you, visit your site to see what is what, and think through a Force10 deployment for your tasks. All questions can be sent directly to Marat Rakayev of Dell: marat_rakaev@dell.com.

UPDATE: All information in the article is fully up to date.

Caution! Many pictures and text.

A brief historical digression


The name Force10 comes mainly from one of the founders, Som Sikdar, an avid yachtsman and lover of big waves, who commemorated his respect for a force 10 wind on the Beaufort scale. That is, in fact, a very strong storm. Since its founding, the company has specialized in solutions for high-performance computing clusters and corporate data centers. Its “biography” spans just over 10 years, yet it includes half a dozen milestones for the global networking industry: the first 5 Tbit/s passive backplane, the first multiprocessor switch, the first rack-mount Gigabit switch for the data center, the first modular switch with 1260 GE ports, the first 10/40 GE fabric based on open standards, and the first 2.5 Tbit/s core switch in a 2RU form factor.

Force10 customers include large Internet companies, such as the social network Facebook, and Internet exchange points (the German DE-CIX and the Moscow MSK-IX). A separate customer segment is high-performance computing clusters, in particular the supercomputer industry. According to analysts, 30% of the systems on the world's top40 supercomputer list use Force10 equipment. In particular, the SKIF MSU “Chebyshev” supercomputer was built with Force10 technology as part of the SKIF-GRID supercomputer program of the Union State of Russia and Belarus and the national project “Education”. This joint development of Moscow State University, the Program Systems Institute of the RAS and T-Platforms ranked second in the fall 2009 Top 50 list of the most powerful computers in the CIS. T-Platforms is a long-standing partner of the Force10 team and uses these high-performance switches in many of its projects.

In addition, many corporate networks that need high-performance switching are built on Force10 products. A striking example is the network of CERN, the European Organization for Nuclear Research. Experiments in the 27-kilometer tunnel of the Large Hadron Collider, where protons are accelerated, generate about 15 petabytes of data annually. All of it is immediately distributed to computing laboratories at approximately 500 research institutes and universities around the world. The backbone of the CERN laboratory campus runs on a core built from 10 GE Force10 E-series switches.

In August 2011, Dell acquired the company, and the product line was renamed Dell Force10, which made Dell the global market leader in the key 40 GE segment. The current positioning of Dell Force10 solutions, which combine Dell and Force10 technologies, can be summarized as follows: high-performance, open data center solutions that deliver maximum functionality, flexibility, scalability and trouble-free operation for any data center, whether it serves a small workgroup or the largest Internet search site.

Open Solutions and Storm Architecture

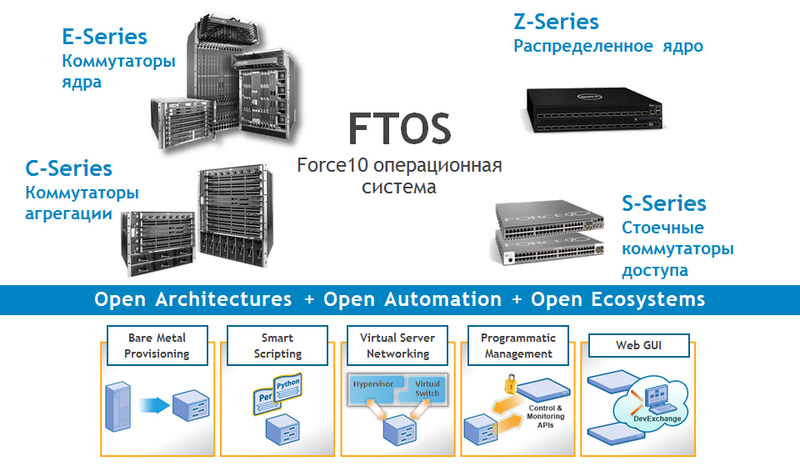

Two key words define the niche of Dell Force10 products: "high-performance" and "open." The Dell Force10 family is a set of high-performance data center switching solutions based on an open architecture. This means support for open standards and the ability to integrate with equipment from other manufacturers, which protects customer investments.

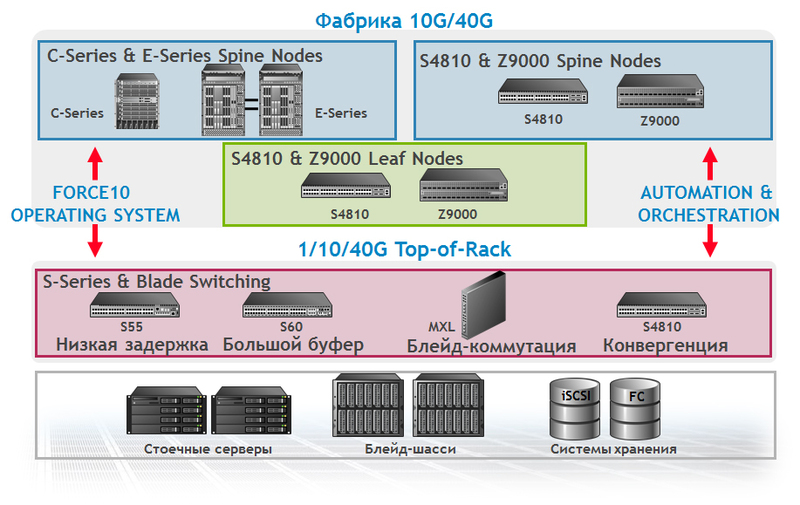

All Dell Force10 switches run a single modular operating system, FTOS (Force Ten Operating System), which supports Layer 2 and Layer 3 switching with high performance and fault tolerance. It implements a hardware abstraction layer, which allows the same applications to run on different products of the Force10 family. In other words, the same code can be reused, which speeds up the deployment of applications and system functionality.

FTOS is based on NetBSD, a free, open source, modular operating system that is highly flexible, reliable, portable and efficient. FTOS is a carrier-class operating system that runs even on the entry-level Dell Force10 switches.

Dell network solutions are managed with Dell OpenManage Network Manager, which has a graphical user interface. In addition, in large installations and virtualized environments, FTOS is complemented by Open Automation, data center automation software that simplifies management of the entire system and increases its efficiency.

As virtualization spreads, data centers must respond to change faster. At the same time, management tasks are becoming more complex: IT managers have to deal with hundreds or thousands of virtual machines along with their storage and network resources. The data center infrastructure must adapt quickly to the requirements of applications. In addition, servers, storage systems and networks can no longer be managed separately: a single dynamic environment is needed. Open Automation is aimed at exactly these problems.

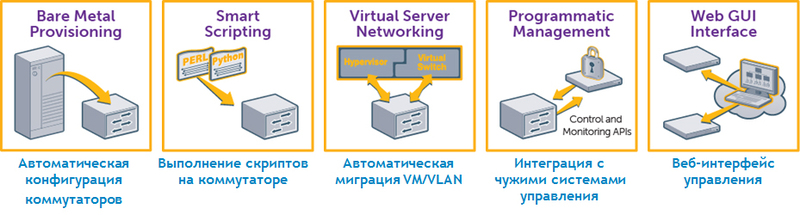

In essence, Open Automation is a collection of interconnected network management tools that can be used together or separately:

- Automatic switch configuration (Bare Metal Provisioning) - reduces installation time, eliminates configuration errors and standardizes configurations by automatically configuring network switches.

- Switch script execution (Smart Scripting) - improves network monitoring and management with a powerful Perl / Python scripting environment.

- VM / VLAN Automatic Migration (Virtual Server Networking) - simplifies network management in a virtualized environment, providing automatic migration of virtual local area networks (VLANs) along with virtual machines (VM).

- Integration with management systems (Programmatic Management) - management of switches using third-party tools and support for standard management interfaces.

Dell Force10 product line

The Dell Force10 product line consists of four main categories (series):

1) E-series modular switches;

2) C-series modular switches;

3) rack mount Top-of-Rack S-series switches;

4) Z-series switches for building a distributed core.

Dell Force10 E-series switches

The E-series consists of high-end modular switches that provide up to 140 10 GE ports without oversubscription, or 560 10 GE ports with 4:1 oversubscription. Notably, a single chassis can provide 1260 GE ports, a scaling figure unique among modular switches in the industry. Thanks to their high 10 GE port density, these switches are well suited to serve as a data center core.
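The relationship between the two port counts can be checked with a short calculation. This is only a sketch: it assumes the line-rate capacity equals the 140 non-oversubscribed 10 GE ports quoted above, and the function name is illustrative.

```python
def oversubscription_ratio(ports: int, port_gbps: float, line_rate_gbps: float) -> float:
    """Ratio of offered port bandwidth to the bandwidth served at line rate."""
    return (ports * port_gbps) / line_rate_gbps


# Assumption: line-rate capacity is taken from the 140-port figure in the text.
LINE_RATE_GBPS = 140 * 10

print(oversubscription_ratio(140, 10, LINE_RATE_GBPS))  # 1.0, no oversubscription
print(oversubscription_ratio(560, 10, LINE_RATE_GBPS))  # 4.0, i.e. 4:1
```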

The modular chassis of the ExaScale E1200i E-series switch, in a 24RU form factor, includes:

- 5 Tbit/s passive copper backplane.

- 3.5 Tbit/s modular switching fabric.

- 14 slots for interface cards, 125 Gbit/s per slot.

- 6 AC power supplies.

Chassis fault tolerance is provided by redundant power supplies and fans, redundant processor modules (1 + 1) and redundant switching fabric modules (9 + 1).

The chassis is based on a distributed architecture: processing is distributed across the interface cards, and separate processors handle Layer 2 protocols, Layer 3 protocols and control.

The ExaScale E600i modular chassis, in a 16RU form factor, has a 2.7 Tbit/s passive backplane and 7 slots for interface cards (125 Gbit/s per slot). The switching fabric modules (4 + 1, 1.75 Tbit/s), processor modules (1 + 1) and power supplies (3 + 1 AC) are redundant.

Dell Force10 C-series switches

The C-series comprises modular chassis for data center aggregation and corporate network cores. They are characterized by a low cost per port and support up to 64 10 GE ports and 384 GE ports without oversubscription. Customers have nicknamed this switch “the tractor”: turn it on, configure it once, and it just keeps plowing.

The C150 and C300 models have a passive copper backplane and four or eight slots for interface cards, respectively (96 Gbit/s per slot). Their capacities are 768 Gbit/s and 1.536 Tbit/s, respectively. The power supplies, the processor modules with integrated switching fabric, and the fans are redundant.
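The quoted capacities are consistent with the slot counts if each slot's 96 Gbit/s is counted in both directions. That full-duplex counting is an inference from the numbers, not something the article states, so treat this as a sketch.

```python
def chassis_capacity_gbps(slots: int, per_slot_gbps: int = 96, directions: int = 2) -> int:
    """Aggregate chassis capacity; directions=2 assumes full-duplex counting."""
    return slots * per_slot_gbps * directions


for model, slots in {"C150": 4, "C300": 8}.items():
    print(model, chassis_capacity_gbps(slots), "Gbit/s")
```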

The list of C-series interface cards includes an interesting option, the FlexMedia card, which combines different port types on one card: 36 10/100/1000 ports, 8 GE SFP ports and 2 10 GE SFP+ ports.

C-series modular switches work well in data centers as end-of-row and aggregation switches. Since all C-series systems support Power over Ethernet, they can also be deployed in campus networks and wiring closets.

Dell Force10 S-series switches

The S-series is made up of top-of-rack switches with stacking capability that provide server connections over Gigabit Ethernet and 10GE.

The S-series consists of 1/10/40 GE fixed-configuration systems designed primarily as top-of-rack access switches for the data center. The line includes the S55, with 44 10/100/1000Base-T ports and low switching latency; the S60, with an enlarged packet buffer; and the S4810, which targets the highest port density in a 1/10 GE top-of-rack switch and also supports 40 GE.

The S55 and S60, both top-of-rack Gigabit switches, have 44 10/100/1000 copper ports for connecting servers and up to four 10 GE ports. Both models support Open Automation.

The S60's packet buffer is 1.25 GB, a figure unique among rack-mount Gigabit switches in the networking industry. Such a switch is particularly well suited to applications that generate large traffic bursts yet are sensitive to packet loss, such as video, iSCSI, Memcache and Hadoop.

The S4810 top-of-rack switch has a non-blocking architecture and provides 640 Gbit/s of bandwidth (full-duplex) and a low latency of 700 ns, with 48 10 GE ports for connecting servers and 4 40 GE ports. With splitter cables, each 40 GE port can be turned into four 10 GE ports, giving the switch 64 10 GE ports that run at full speed without oversubscription. It supports 128K MAC addresses, Layer 2 and Layer 3 switching, and IPv4 and IPv6 routing protocols. It has native support for automation and virtualization (Open Automation) as well as for SAN and LAN convergence (DCB, FCoE).
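The S4810 port arithmetic can be reproduced in a few lines. The sketch below simply sums nominal port speeds, which happens to match the 640 Gbit/s figure; the constant and function names are illustrative.

```python
NATIVE_10GE_PORTS = 48
QSFP_40GE_PORTS = 4
LANES_PER_40GE = 4  # one 40 GE port splits into four 10 GE ports


def total_port_bandwidth_gbps() -> int:
    """Sum of nominal port speeds across all front-panel ports."""
    return NATIVE_10GE_PORTS * 10 + QSFP_40GE_PORTS * 40


def ten_ge_ports_with_splitters() -> int:
    """10 GE port count when every 40 GE port uses a splitter cable."""
    return NATIVE_10GE_PORTS + QSFP_40GE_PORTS * LANES_PER_40GE


print(total_port_bandwidth_gbps(), ten_ge_ports_with_splitters())
```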

S4810 switches can be stacked over 10 GE or 40 GE ports (the maximum stacking bandwidth is 160 Gbit/s). A stack supports up to 6 switches and requires no special stacking modules.

Virtual Link Trunking (VLT) technology is also supported. It allows servers and switches to be connected over aggregated links to two S4810 switches, which provides fault tolerance and balances traffic across all links in the network.

The Dell Force10 MXL blade switch for the Dell PowerEdge M1000e blade chassis, announced in late April and first shown at Interop 2012 in Las Vegas, is the industry's first 40 GE switch in a blade form factor. It was designed as an integral part of the Virtual Network Architecture, with high performance and high density in mind, and can support modern workloads in both traditional and virtual network structures, as well as in public and private cloud environments.

The Dell Force10 MXL offers high port density in a single device: 32 1/10 GE ports for connecting blade servers, with non-blocking local switching for all blade servers connected to the switch. It scales to six external 40 GE ports or up to 24 external 10 GE ports.

The Dell Force10 MXL supports stacking: up to six switches can be joined into a stack that is managed through a single IP address. The 40 GE ports are used for stacking.

In addition, the MXL switch can easily be expanded with interface modules that add 40 GE QSFP+, 10 GE SFP+ or 10GBase-T ports. The Dell Force10 MXL blade switch is a good way to build a converged SAN / LAN network: it fully supports converged storage solutions based on DCB and FCoE. The MXL switch is available now.

Dell Force10 Z-series switches

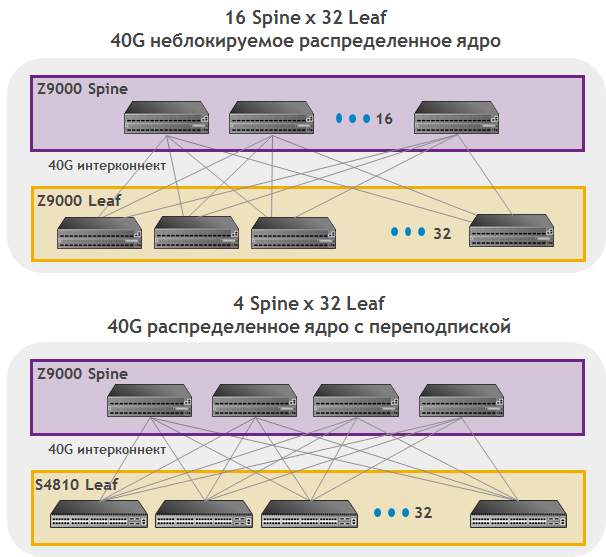

The Z9000 switch is designed for building a distributed core. In a 2RU form factor it provides 32 40 GE ports or, with splitter cables, 128 10 GE ports. Its non-blocking fabric delivers 2.5 Tbit/s of throughput with a latency of under 3 µs.

At the same time, the switch consumes very little power: up to 800 W, or 6.25 W per 10 GE port. It is the typical building block of the new Distributed Core architecture developed by Dell.
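The Z9000 figures follow from the port count. In this sketch the raw full-duplex sum of 2.56 Tbit/s is assumed to be what the article rounds to 2.5 Tbit/s; the names are illustrative.

```python
PORTS_40GE = 32
MAX_POWER_W = 800

ports_10ge = PORTS_40GE * 4                    # each 40 GE port splits into four 10 GE ports
watts_per_10ge_port = MAX_POWER_W / ports_10ge
# Assumption: throughput is counted in both directions (full duplex).
throughput_tbps = PORTS_40GE * 40 * 2 / 1000

print(ports_10ge, watts_per_10ge_port, throughput_tbps)
```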

Distributed core

The distributed core architecture consists of two tiers: Spine (the upper one) and Leaf (the lower one). An analogy with a modular chassis is helpful: think of the distributed core as one large modular chassis in which the Spine switches play the role of the switching fabric and the Leaf switches play the role of interface cards.

Spine and Leaf switches are interconnected over 40 GE interfaces; 10 GE can also be used with splitter cables. The control protocol of this “modular chassis” lives on every switch, both Spine and Leaf; that is, it is distributed. All switches interact with one another using open standards. The fabric scales by adding Spine and Leaf switches in a plug-and-play fashion. A distributed core can be built as a Layer 3 fabric based on the OSPF and BGP routing protocols, with traffic balanced across multiple paths by ECMP (Equal Cost Multipathing), so that all links in the fabric are used. Support for the TRILL protocol (Transparent Interconnection of Lots of Links) is also planned, which will make it possible to build a Layer 2 fabric with load balancing across all available links.
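ECMP is usually implemented by hashing packet header fields so that every packet of a flow follows the same path while different flows spread across all equal-cost next hops. The sketch below illustrates that general idea only; the CRC32 hash, the 5-tuple layout and the spine names are assumptions for illustration, not how FTOS implements it.

```python
import zlib


def ecmp_next_hop(flow: tuple, next_hops: list) -> str:
    """Pick one equal-cost next hop by hashing the flow's 5-tuple."""
    key = "|".join(str(field) for field in flow).encode()
    return next_hops[zlib.crc32(key) % len(next_hops)]


spines = ["spine1", "spine2", "spine3", "spine4"]  # hypothetical Spine switches

# (src IP, dst IP, protocol, src port, dst port)
flow = ("10.0.0.1", "10.0.1.7", "tcp", 40000, 80)
print(ecmp_next_hop(flow, spines))

# Many flows between the same hosts spread across several Spine switches.
used = {ecmp_next_hop(("10.0.0.1", "10.0.1.7", "tcp", port, 80), spines)
        for port in range(40000, 41000)}
print(sorted(used))
```

Because the hash depends only on the flow's header fields, packets of one flow are not reordered, while the aggregate load is shared by the whole Spine tier.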

Depending on the design goals, a distributed core can be built with or without oversubscription.

A fabric for a data center of any size

| Fabric size | Small | Medium | Large |
|---|---|---|---|
| Spine nodes | S4810 | Z9000 | Z9000 |
| Leaf nodes | S4810 | S4810 | Z9000 |
| Number of nodes | 4 spine / 12 leaf | 4 spine / 32 leaf | 16 spine / 32 leaf |
| Fabric interconnect | 10 GbE | 40 GbE | 40 GbE |
| Fabric performance | 3.84 Tbit/s | 10.24 Tbit/s | 40.96 Tbit/s |
| Available 10 GbE ports | 576 with 3:1 oversubscription | 1,536 with 3:1 oversubscription | 2,048 non-blocking |
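The port counts and oversubscription ratios in the table can be reproduced with a short calculation. The per-leaf port split used below is an assumption chosen to match the table, not a figure stated in this article: an S4810 leaf is taken to offer 48 access ports of 10 GE and 160 Gbit/s of uplink capacity, and a Z9000 leaf is taken to use half of its 40 GE ports as uplinks and split the other half into 64 access ports of 10 GE. `LeafProfile` and `fabric_summary` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class LeafProfile:
    access_ports_10ge: int   # 10 GE ports facing servers
    uplink_gbps: int         # total capacity toward the Spine tier

def fabric_summary(leaf_count, profile):
    """Return (total 10 GE access ports, oversubscription ratio)."""
    access_ports = leaf_count * profile.access_ports_10ge
    ratio = profile.access_ports_10ge * 10 / profile.uplink_gbps
    return access_ports, ratio

# Assumed per-leaf splits (see the paragraph above).
s4810_leaf = LeafProfile(access_ports_10ge=48, uplink_gbps=160)
z9000_leaf = LeafProfile(access_ports_10ge=64, uplink_gbps=16 * 40)

for name, leaves, profile in [("small", 12, s4810_leaf),
                              ("medium", 32, s4810_leaf),
                              ("large", 32, z9000_leaf)]:
    ports, ratio = fabric_summary(leaves, profile)
    print(f"{name}: {ports} ports, {ratio:g}:1")
```

A ratio of 1:1 corresponds to the non-blocking case; 3:1 means the access ports of a leaf can offer three times more traffic than its uplinks can carry.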

This architecture provides high reliability. Each Spine node carries 100/n percent of the bandwidth (where n is the number of Spine switches). This means that with 16 Spine nodes, if one of them fails, 93.75% of the bandwidth remains available, compared with 50% for a traditional design built on two modular switches.
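The reliability figures follow directly from the 100/n rule. A minimal sketch, assuming traffic is spread evenly over all Spine nodes (the function name is hypothetical):

```python
def remaining_bandwidth_pct(spines, failed=1):
    """Percentage of fabric bandwidth left after `failed` Spine nodes go down,
    assuming each Spine node carries an equal 100/n share."""
    if spines <= 0 or not 0 <= failed <= spines:
        raise ValueError("need spines > 0 and 0 <= failed <= spines")
    return 100 * (spines - failed) / spines

print(remaining_bandwidth_pct(16))  # 16 Spine nodes, one fails -> 93.75
print(remaining_bandwidth_pct(2))   # two modular switches, one fails -> 50.0
```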

In addition, the system can be scaled as it grows: start with a minimal number of Spine and Leaf nodes and add more as needed (up to 8192 access ports of 10 GE without oversubscription in the fabric). Compared with a network built on a modular chassis, the distributed core consumes less power and takes up less rack space.

To simplify building a distributed core, Dell has developed a dedicated software tool, Dell Fabric Manager. It helps to design, build and manage a distributed core. In particular, it automates a number of labor-intensive tasks, such as producing the wiring diagram for the ports between fabric switches or configuring a system of multiple switches. Dell Fabric Manager also supports remote monitoring of the distributed core switches through a graphical view of the network topology.

Thus, the distributed core architecture provides an ultra-scalable Leaf-Spine fabric for the data center, with minimal switching latency at speeds of up to 160 Tbit/s and with moderate size, power requirements and price.

What will happen next?

The virtualization trend is clearly spreading to networks: networking capabilities for virtual machines are now being built into hypervisor environments. The first step was the emergence of embedded virtual switches (vSwitch), such as vDS for VMware ESX. Dell has included virtualization support in Open Automation, its software for automating data center management. The next logical step toward deeper virtualization in the network, and toward virtualization across data centers, is associated with the VxLAN (Virtual Extensible Local Area Network) and NVGRE (Network Virtualization using Generic Routing Encapsulation) technologies.

In August last year, a group of companies led by VMware announced, within the IETF, a draft RFC for VxLAN, virtual extensible local area networks. In essence, it is a proposed virtualization standard that will allow virtual machines to move across Layer 3 boundaries by using an abstraction of the Layer 2 identifier (Layer 2 ID). In September, another group of companies, led by Microsoft, announced another IETF draft RFC, for NVGRE. The network virtualization environment proposed by NVGRE supports secure sharing of resources (multi-tenancy) and mobility of virtual machines in private and public clouds, using the Generic Routing Encapsulation (GRE) protocol.

Dell intends to support both specifications, VxLAN and NVGRE, and is working on supporting the corresponding technologies in its Dell Force10 products. Dell customers will therefore be able to keep using the hypervisors they are used to. Moreover, Dell intends to work closely with leading hypervisor and operating system vendors to further improve the VxLAN and NVGRE specifications.

We believe that, in addition to virtual networks in the hypervisor environment, the physical network itself must also evolve toward open, standards-based Software Defined Networking (SDN). The SDN architecture rests on two fundamental principles: (1) separation of the control plane from the data plane and (2) compatibility with virtual networks in next-generation hypervisor environments. In the future network architecture, applications are network-aware, run in a virtualized environment and, when necessary, dynamically create logical networks. These logical networks are overlays: they run on top of a software-controlled physical network infrastructure and are designed to deliver all the necessary data, applications and additional services to any user, regardless of whether the environment is physical or virtual.

At Dell, this architecture is called the Virtual Network Architecture (VNA), and we develop our products and solutions in line with SDN, which is a priority direction for our company.

Well, if you're wondering how Dell Force10 switches can help your business, please contact us. Our experts can advise you, come over to see what's what, and think through how Force10 could be deployed to solve your problems. All questions can be sent directly to Marat Rakayev of Dell: marat_rakaev@dell.com.

UPDATE: all information in the article is fully up to date.

Source: https://habr.com/ru/post/145742/
