Replication from OLTP to OLAP database

My friend Robert Hodges recently published an article about replication from OLTP to an OLAP database (namely, from MySQL to Vertica), which his company built on its Tungsten product. The most interesting thing is the data conversion that occurs during the replication process. The approach is quite general, and can be used for other systems.

The usual approach to replication is synchronous or asynchronous transfer of a binary log from one database (master) to others (slaves). In a binary log, all operations that modify data are strictly sequentially recorded. If it is “played” on another system from the same starting point, then it should be exactly the same data state as on the original one. "Playing" occurs in one operation or in a single transaction, that is, in very small pieces.

This approach does not work well with OLAP-specific, and especially column-oriented databases that store data physically not in rows, but in columns. Such databases are optimized for writing, reading and sorting large data arrays, which is typical for analytical tasks, but not for small operations on single records, because any operation involves many columns that are physically stored in different files (and sometimes different disks) . The worst is the case with data changes. Of course, all databases support the SQL standard and the UPDATE statement, but at the physical level, it is usually translated into the fact that the updated record is marked as deleted, and a modified copy is inserted instead. Then, someday, the "garbage collector" shakes up the table and deleted records will be deleted forever. In addition to poor performance, it follows that frequent deletions and updates lead to database clogging, which reduces its performance, including reading.

')

Robert suggested, it seems to me, a new, albeit a natural approach to solving the problem of data replication for such cases. The binary log is converted into a sequence of partially ordered sets of DELETE / INSERT operations for each table, and so the word “set” implies that it is enough to perform “identical” operations in some sense once. Let me explain a little more.

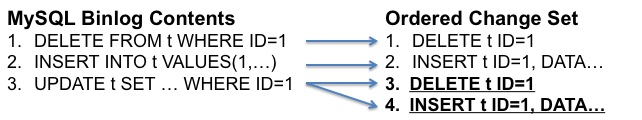

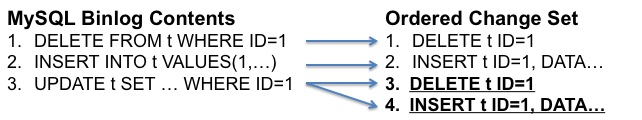

First, instead of replicating individual changes or tables, it is suggested that replication be done in fairly large chunks or data packets. It is natural to load data into OLAP databases. For example, all changes over a period of time: minute, 5 minutes, etc. Secondly, all changes that fall into the package are divided into tables. Then, each UPDATE is translated into a DELETE and INSERT pair. And, finally, for each table, the reduction is carried out according to certain rules: only one DELETE is left for each key, and only the last INSERT is left for each key if it is not followed by DELETE. This reduction turns the flow of changes into a minimal set (set) of operations like DELETE and INSERT, with DELETE being executed before INSERT. For example:

Three operations in the binary log were transferred to 4 operations at the intermediate stage, and after the reduction only the last two remained.

What does this give? This gives the most efficient way to change data from an OLAP database point of view: first, all deleted or changed records in the package are deleted, and nothing more, and then one piece for each table - new and changed records are added, which can be done very efficiently through package operators data downloads. That is, a strictly ordered linear sequence is transformed into a partially ordered set (into a sequence of partially ordered sets). This is the fundamental meaning.

Obviously, there is a limitation. If the UPDATE or DELETE in the binary log does not include the condition on the primary key of the table, then this approach does not work, and in this case you will have to replicate the data in the traditional way.

In conclusion, I note that this is not an empty theory, but a working implementation, about which Robert writes in the next article . Why do you need it? The client wanted to get fast analytics for their data stored in MySQL. And Vertica is a very good tool for this. I know firsthand.

The usual approach to replication is synchronous or asynchronous transfer of a binary log from one database (master) to others (slaves). In a binary log, all operations that modify data are strictly sequentially recorded. If it is “played” on another system from the same starting point, then it should be exactly the same data state as on the original one. "Playing" occurs in one operation or in a single transaction, that is, in very small pieces.

This approach does not work well with OLAP-specific, and especially column-oriented databases that store data physically not in rows, but in columns. Such databases are optimized for writing, reading and sorting large data arrays, which is typical for analytical tasks, but not for small operations on single records, because any operation involves many columns that are physically stored in different files (and sometimes different disks) . The worst is the case with data changes. Of course, all databases support the SQL standard and the UPDATE statement, but at the physical level, it is usually translated into the fact that the updated record is marked as deleted, and a modified copy is inserted instead. Then, someday, the "garbage collector" shakes up the table and deleted records will be deleted forever. In addition to poor performance, it follows that frequent deletions and updates lead to database clogging, which reduces its performance, including reading.

')

Robert suggested, it seems to me, a new, albeit a natural approach to solving the problem of data replication for such cases. The binary log is converted into a sequence of partially ordered sets of DELETE / INSERT operations for each table, and so the word “set” implies that it is enough to perform “identical” operations in some sense once. Let me explain a little more.

First, instead of replicating individual changes or tables, it is suggested that replication be done in fairly large chunks or data packets. It is natural to load data into OLAP databases. For example, all changes over a period of time: minute, 5 minutes, etc. Secondly, all changes that fall into the package are divided into tables. Then, each UPDATE is translated into a DELETE and INSERT pair. And, finally, for each table, the reduction is carried out according to certain rules: only one DELETE is left for each key, and only the last INSERT is left for each key if it is not followed by DELETE. This reduction turns the flow of changes into a minimal set (set) of operations like DELETE and INSERT, with DELETE being executed before INSERT. For example:

Three operations in the binary log were transferred to 4 operations at the intermediate stage, and after the reduction only the last two remained.

What does this give? This gives the most efficient way to change data from an OLAP database point of view: first, all deleted or changed records in the package are deleted, and nothing more, and then one piece for each table - new and changed records are added, which can be done very efficiently through package operators data downloads. That is, a strictly ordered linear sequence is transformed into a partially ordered set (into a sequence of partially ordered sets). This is the fundamental meaning.

Obviously, there is a limitation. If the UPDATE or DELETE in the binary log does not include the condition on the primary key of the table, then this approach does not work, and in this case you will have to replicate the data in the traditional way.

In conclusion, I note that this is not an empty theory, but a working implementation, about which Robert writes in the next article . Why do you need it? The client wanted to get fast analytics for their data stored in MySQL. And Vertica is a very good tool for this. I know firsthand.

Source: https://habr.com/ru/post/145734/

All Articles