What is Intel's Random Number Generator silent about?

I have long wanted to take a few kicks at Intel's RNG, but could never find a free moment. I finally forced myself, and the text below is the result.

The format of the text is banter and criticism, with respect for the opponent.

I doubt this post will interest the developers of this RNG (they received their award for it long ago), but it may be useful to someone else as general background.

So what is Intel's RNG silent about? Quite a lot, but first we need to settle the terminology.

The general term is Random Number Generator (RNG). RNGs are divided into PRNGs (Pseudo Random Number Generators) and TRNGs (True RNGs). Every self-respecting company that ships crypto IP is simply obliged to have a True RNG in its arsenal.

Even better if your True RNG is compact, fast and, most importantly, digital (that is, free of analog headaches such as Schottky diodes, noisy resistors and other exotic beasts that are hard to “maintain”).

In a word, there is no getting by without a True RNG, because cryptography needs real entropy, full stop.

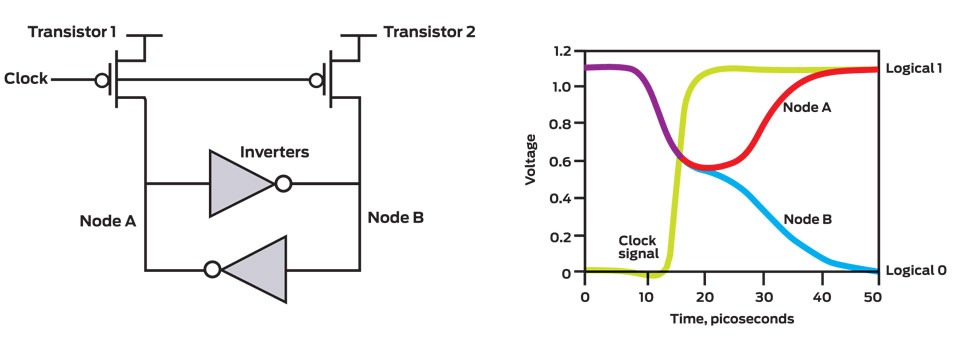

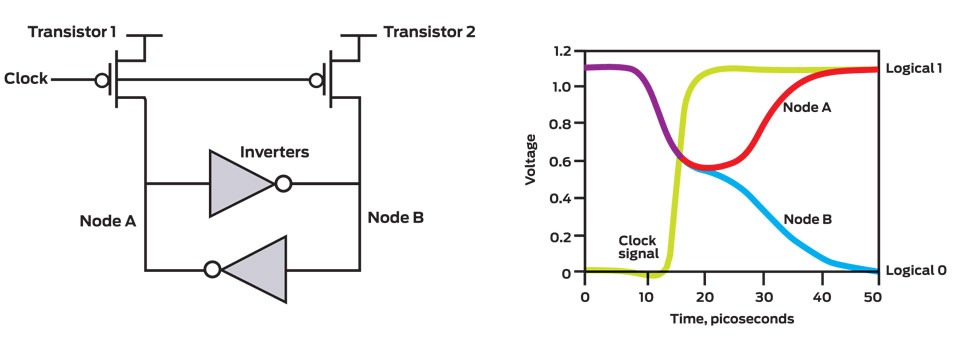

Anyone who has taken an interest in True RNGs should know the material in which the first Intel RNG was originally described.

There, the entropy source was a "noisy resistor" combined with a pair of oscillators. This was Intel's first True RNG and, until recently (before the release of Ivy Bridge products), its only one. In principle it worked properly (if not brilliantly), but it was allergic (my personal guess) to being ported between process technologies, it needed a special power supply, and it had other restrictions of that kind. So it was “kept” tucked away in the chipset, which for various reasons lagged behind the processors in process technology by a year or two.

Intel decided to put an end to this masochism and in 2008 tasked two groups of developers with building a new True RNG: one that would be digital and fast, would not waste precious energy, and would pass the various certifications.

Two publications on the topic appeared in the course of development, and I simply have to dwell on them in detail. Unfortunately, they are not freely available (IEEE only), but those who seek will find.

The first is "A 4Gbps 0.57pJ/bit Process-Voltage-Temperature Variation Tolerant All-Digital True Random Number Generator in 45nm CMOS".

From the title and abstract it was immediately clear that Intel does not joke around and was "tearing everyone to shreds" without even asking their names. But when I read to the end, I laughed for a long time. The main points that caused the laughter:

- We tested everything with the NIST STS test suite, and our RNG passes all the tests.

Well, okay, the first test is always a simulation, and one can live with that, but one cannot accept the claim that

- The entropy source, according to the developers, is completely digital.

In principle, the central element of the entropy source is a bistable cell, which is essentially digital, but the way it was then worked over with a file goes well beyond the definition of all-digital.

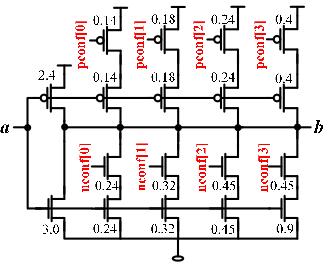

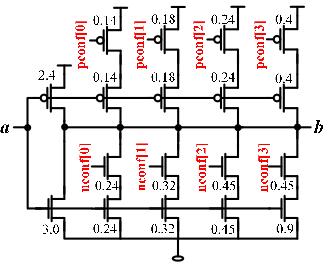

Here is an example of one of the inverters used (there are 2 in total), which has 8 specially selected taps for power and ground.

By switching them on and off you can adjust the inverter's switching threshold (in a real chip, all the transistors will differ). The developers' aim was simple: to get the same switching threshold in both inverters of the bistable element. They probably achieved it (compensating for process variation), but calling this ancient solution (older than I am) all-digital is just blasphemy, IMHO.

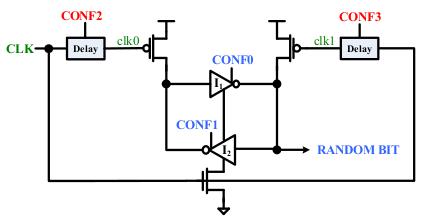

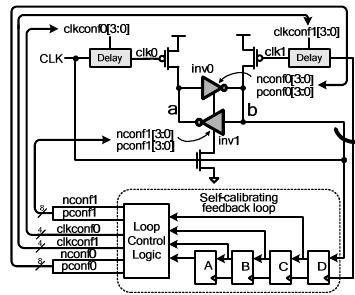

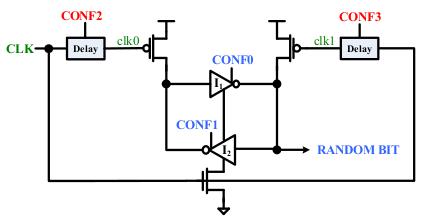

In addition to the “special-digital inverters”, which are clearly not part of any standard digital cell library, the researchers from Intel added another all-digital solution to compensate for the parasitic capacitance of the connecting element (delay element); it works on the same tune-and-compensate principle. The result is this “all-digital” circuit, in which CONF0-1 are 8-bit registers that adjust the switching thresholds of the inverters, and CONF2-3 are 4-bit registers that compensate for the parasitic capacitance of the connecting lines (delay settings). In general, CONF0-3 are used to balance the system, because without them it is a matter of luck (every chip differs from the next): either the cell will not work at all, if the noise is smaller than the difference between the inverters' switching thresholds, or it will work but badly, with an uneven distribution of zeros and ones. Properly working instances are possible too (everything follows a Gaussian distribution).
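Why the balancing matters can be seen in a toy model. The sketch below is not Intel's circuit: it simply assumes the cell resolves to '1' whenever a fixed threshold mismatch plus Gaussian noise is positive. The names `p_one`, `sample_bits`, `offset` and `sigma` are invented for this illustration.

```python
import math
import random

def p_one(offset, sigma):
    """Analytic probability of a '1' in the toy model:
    P(offset + N(0, sigma^2) > 0) = Phi(offset / sigma)."""
    return 0.5 * (1.0 + math.erf(offset / (sigma * math.sqrt(2.0))))

def sample_bits(offset, sigma, n, rng):
    """Draw n bits from the toy cell: '1' if mismatch + noise > 0."""
    return [1 if offset + rng.gauss(0.0, sigma) > 0 else 0 for _ in range(n)]

if __name__ == "__main__":
    rng = random.Random(1)  # fixed seed so the run is repeatable
    sigma = 1.0             # noise level; the mismatch is measured in units of it
    for offset in (0.0, 0.5, 2.0, 5.0):
        bits = sample_bits(offset, sigma, 100_000, rng)
        measured = sum(bits) / len(bits)
        print(f"mismatch={offset} sigma: analytic P(1)={p_one(offset, sigma):.6f}, "
              f"measured={measured:.4f}")
```

Under these assumptions a mismatch of a few noise sigmas already pins the output at almost all ones, which is the "will not work" case above; a small mismatch gives the "works but badly" case with a skewed distribution.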

There is still plenty to find fault with (how process variation was simulated, how the results of the statistical tests and the “low-power” claim were evaluated, and so on), but it is better to move on to the second publication.

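The second one, “2.4GHz 7mW All-Digital PVT-Variation Tolerant True Random Number Generator in 45nm CMOS”, repeats the first in all the key points and even adds a few “controversial evaluation points”; only in performance did they curb their appetite, from 4 Gbit/s down to 2.4 Gbit/s:

- the same incomplete set of statistical tests, the same entropy source, and so on;

- an added self-calibration mechanism, which deserves a closer look.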

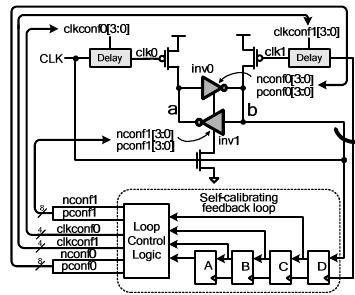

The self-calibration system works as follows: it checks the generated bit and, depending on its value, retunes the entropy source so as to get the inverse value at the output next time. For example, if the current (generated) value is '0', the entropy source is “pushed” in the opposite direction (through a new combination of loads on the inverters and connecting lines) so that '1' is generated with greater probability.

Yes, the example above is rather simplified because, according to the developers, the self-calibration system evaluates 1, 2 and 4 consecutive bits when making a decision (no details are given). All in all, I can agree that this solution achieves Uniformity at the output (an even distribution of '1' and '0' values), but what about Unpredictability and Independence? After all, even Mr. Jesse Volker mentioned Unpredictability and Independence as important parameters of Random Number Generators.
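The worry about Independence can be made concrete with a toy simulation. This is a sketch under loud assumptions: the paper gives no details of the real 1, 2 and 4-bit decision logic, so the model below uses the simplified one-bit rule from the example above, and `generate`, `lag1_correlation`, `mismatch`, `step` and `trim` are all hypothetical names.

```python
import random

def generate(n, mismatch, step, rng, sigma=1.0):
    """Toy cell: bit = 1 if (mismatch + trim + Gaussian noise) > 0.
    After every bit the trim is nudged against that bit by `step`;
    step=0 switches the feedback off."""
    trim = 0.0
    bits = []
    for _ in range(n):
        bit = 1 if mismatch + trim + rng.gauss(0.0, sigma) > 0 else 0
        bits.append(bit)
        trim += -step if bit else step  # push toward the opposite value
    return bits

def lag1_correlation(bits):
    """Sample correlation between each bit and the next one."""
    mean = sum(bits) / len(bits)
    var = sum((b - mean) ** 2 for b in bits) / len(bits)
    if var == 0:
        return 0.0
    pairs = len(bits) - 1
    cov = sum((bits[i] - mean) * (bits[i + 1] - mean) for i in range(pairs)) / pairs
    return cov / var

if __name__ == "__main__":
    for step in (0.0, 1.0):
        bits = generate(100_000, mismatch=3.0, step=step, rng=random.Random(7))
        print(f"step={step}: share of ones={sum(bits) / len(bits):.4f}, "
              f"lag-1 correlation={lag1_correlation(bits):+.3f}")
```

In this toy model the feedback does pull the share of ones close to one half, but the lag-1 correlation comes out clearly negative: each bit makes the opposite value more likely next time, so Uniformity is bought at the cost of Independence. Whether the real circuit behaves the same way cannot be judged from the published description.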

Another reasonable question concerns the tests: for how long, and on how many samples, was the generator tested?

In the end there is a table showing 5 tests passed (Frequency, Block Frequency, Runs, Cumulative Sums and FFT), while the suite contains 15. In the first article the data came from analog simulation, but in the second they clearly state that the RNG was implemented in hardware (fabricated), which means there can be no valid excuse for incomplete testing (along the lines of "the data is hard to generate").
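To show why a partial test set proves little, here is a minimal Python sketch of two of the listed tests, Frequency (monobit) and Runs, written from the formulas in NIST SP 800-22. The function names are my own, and this is an illustration rather than a replacement for the official suite.

```python
import math
import random

def monobit_p_value(bits):
    """NIST SP 800-22 Frequency (monobit) test: p = erfc(|S| / sqrt(2n)),
    where S is the sum of the bits mapped to +1 / -1."""
    n = len(bits)
    s = sum(1 if b else -1 for b in bits)
    return math.erfc(abs(s) / math.sqrt(2.0 * n))

def runs_p_value(bits):
    """NIST SP 800-22 Runs test. Returns 0.0 if the prerequisite
    frequency check |pi - 1/2| < 2 / sqrt(n) fails."""
    n = len(bits)
    pi = sum(bits) / n
    if abs(pi - 0.5) >= 2.0 / math.sqrt(n):
        return 0.0
    # V = number of runs = 1 + number of positions where the bit changes
    v = 1 + sum(1 for i in range(n - 1) if bits[i] != bits[i + 1])
    num = abs(v - 2.0 * n * pi * (1.0 - pi))
    den = 2.0 * math.sqrt(2.0 * n) * pi * (1.0 - pi)
    return math.erfc(num / den)

if __name__ == "__main__":
    alternating = [0, 1] * 500  # perfectly predictable sequence
    rng = random.Random(3)
    pseudo = [rng.getrandbits(1) for _ in range(1000)]
    for name, seq in (("alternating", alternating), ("PRNG", pseudo)):
        print(f"{name}: monobit p={monobit_p_value(seq):.4f}, "
              f"runs p={runs_p_value(seq):.4g}")
```

The alternating sequence 0101... is perfectly balanced, so the Frequency test passes it with flying colors, and only the Runs test rejects it. Each test catches one kind of defect, which is why passing 5 tests out of 15 says little about the remaining ten.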

With this, the science-like campaign ended and what remained was almost pure advertising.

At IDF 2011 the RNG in Ivy Bridge was announced in passing; more detail appeared only in the IEEE Spectrum publication on the topic.

A translation of that article has long been available on Habr here, where in the comments people thoroughly mocked the double whitening with AES of this "almost random" data from the entropy source.
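The commenters' point about whitening can be sketched in a few lines of Python. Note the substitution: the standard library has no AES, so SHA-256 from `hashlib` stands in for the AES-based conditioning; the "entropy source" is a plain counter with no entropy at all, and every function name here is hypothetical.

```python
import hashlib

def whiten(block: bytes) -> bytes:
    """Stand-in conditioner: SHA-256, NOT the AES-based scheme from the article."""
    return hashlib.sha256(block).digest()

def bits_of(data: bytes) -> list:
    """Unpack bytes into a flat list of 0/1 values."""
    return [(byte >> k) & 1 for byte in data for k in range(8)]

def ones_fraction(bits) -> float:
    return sum(bits) / len(bits)

def counter_blocks(count: int) -> list:
    """A zero-entropy 'source': consecutive 64-bit counter values."""
    return [i.to_bytes(8, "big") for i in range(count)]

if __name__ == "__main__":
    blocks = counter_blocks(2000)
    raw = bits_of(b"".join(blocks))
    white = bits_of(b"".join(whiten(b) for b in blocks))
    print(f"raw counter:      share of ones = {ones_fraction(raw):.4f}")
    print(f"after 'whitening': share of ones = {ones_fraction(white):.4f}")
```

The whitened output looks balanced even though the input is fully predictable, so statistics measured after a cryptographic conditioner say nothing about the quality of the raw entropy source behind it.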

The same picture (only with more details) was shown at IDF 2012.

I would like to single out one phrase from that article: "Inverters 512 bits at a time.". I could not come up with an unambiguous picture of how this is implemented. Oh well.

What was all this for?

In any case, the Intel developers did well: they managed to ship such a “digital solution” on a 22nm process, and that is very cool.

P.S. If the presentation seemed confusing to anyone, or if there are any questions, I will be happy to clarify or answer.

P.S. 2. Thanks for the edits.

Source: https://habr.com/ru/post/145188/