Limit the number of requests - Raterlimiter

If you fear that your web service may be ignored by careless users, or you just have a weak server, then you have already thought about limiting the number of requests from each user. In a good way - this is only one of the necessary echelons of defense. Of course, such a restriction will not save from a serious attack, but from the point of view of price / quality is quite suitable

Recently, I began to actively engage in Erlang. Well and, as usual, for fixing the material implemented a simple web service on Mochiweb . Mochiweb is quite a decent framework for creating web applications, but I did not find the ability to limit the number of requests from one client. So did it yourself.

Because The functionality of limiting the speed of requests is completely self-contained and not tied to a specific task, I selected the module made in an independent application and decided to post its source code.

')

So, we have Erlang / OTP , Mochiweb, rebar . I would like to count the number of requests from a particular user and give him 413 error code if the requests go too often. The client is identified by its IP address. Thereby, which gives

The task is not so difficult, but perhaps a ready-made solution will save someone time.

I decided to use the token bucket algorithm.

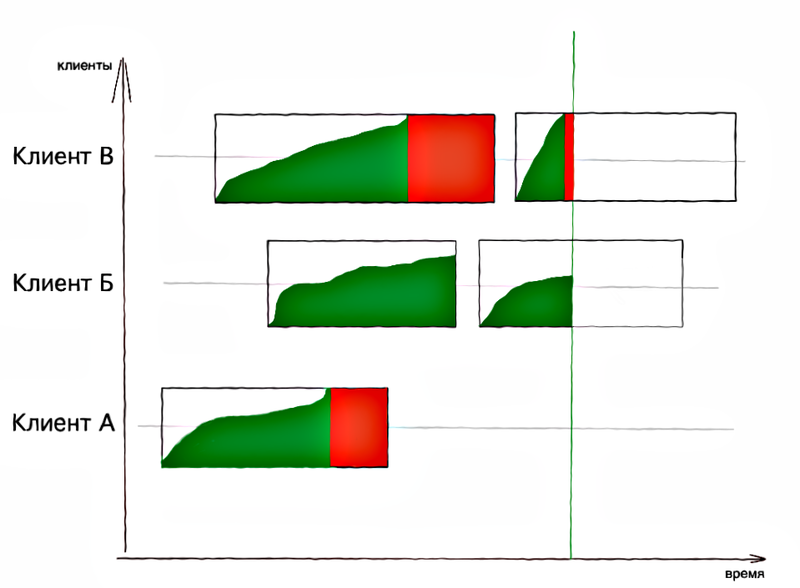

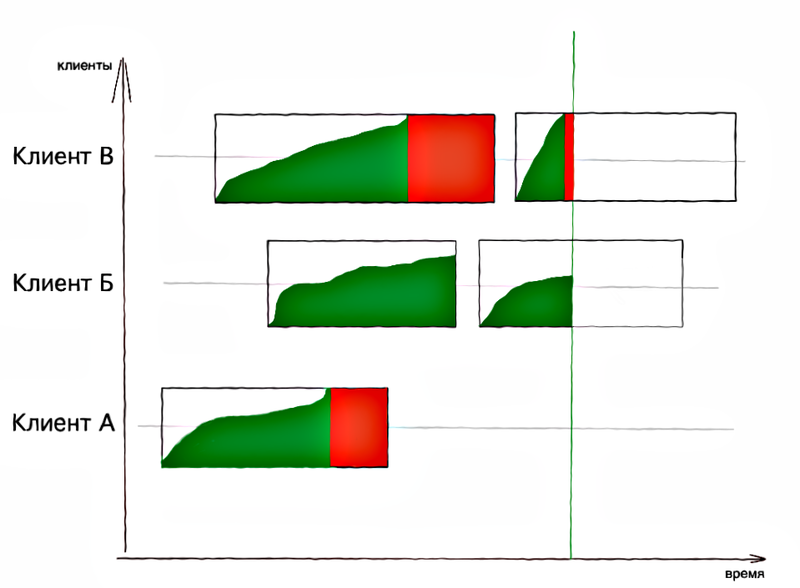

If we describe it briefly - we consider certain time intervals for each client as baskets, in which we will add user requests for this time interval. Baskets have a limited volume. As soon as the basket is filled with requests to the limit, we stop serving the client. Because as time goes on, baskets for the customer are constantly changing, and the customer has a chance to be served in a new time interval.

In the picture above, red indicates the time when each of the customers was not served.

As can be seen from the picture, Client A received a restriction of requests some time ago and did not send them anymore, Client B works quietly and does not exceed the limit, and client C has chosen the current limit of requests and receives refusals.

We will create baskets as requests from the client appear and with due account of time. Each client can have their own time scale, read the basket lifetime. The volume of the basket, that is, the limit of requests, can be set for each client separately.

Store information about customer baskets will be in the dictionary:

The client API consists of a single

Where

Calling this function increments the counter and returns to the client whether to service the request.

For storing data, I use an ETS table of type

Where

Creation time is more for debugging, you can opt out

Main function:

First, we check if the basket we need is {IP, BucketNumber}.

In the case of a new basket, everything is pretty trite - we create a new basket with initial values and say OK.

Adding a counter to an existing basket is a little more interesting. The ets module allows you to modify the counters according to the specified rules. I liked how gracefully you can combine the increment of a counter with a change in the date of the last access.

So the code:

increments the counter # 2 by 1 (this is just the hit counter), adds 0 to the creation time, adds 1 to counter # 4 with the maximum value checked. Because the maximum value is indicated as 0, the fourth counter is always set to Stamp (current time). At the output we get a list of all the variable counters in order, i.e.

As a result, in two appeals to the tables, we updated or created a basket and received the number of hits. In the current implementation of the raterlimiter, the atomicity of changing tables is not critical, it just works synchronously (calling gen_server: call / 2 is synchronous).

Over time, the customer baskets are piling up and piling up. It is necessary to remove them.

Deletion occurs periodically. Simply remove all baskets older than the specified limit.

I must say, on the Erlang everything looks nice and brief -

ets: select_delete deletes all entries matching the matching functions ( MatchSpec ). Writing these match_spec manually is hell. It is much easier to use the ets: fun2ms function , which converts the normal Erlang function into a matching function. For example, the above function

in the final form in the MatchSpec format, for NowStamp = 1000, Timeout = 0 looks like

The main thing when using ets: fun2ms do not forget to include an additional file in the source file -

The use boils down to a single check_rate call, not counting, of course, the code and settings for running the raterlimiter application itself. Here is an example from my Mochiweb-based application:

Full code is available on Github . There you can also report errors, or make a pull request.

Recently, I began to actively engage in Erlang. Well and, as usual, for fixing the material implemented a simple web service on Mochiweb . Mochiweb is quite a decent framework for creating web applications, but I did not find the ability to limit the number of requests from one client. So did it yourself.

Because The functionality of limiting the speed of requests is completely self-contained and not tied to a specific task, I selected the module made in an independent application and decided to post its source code.

')

Task

So, we have Erlang / OTP , Mochiweb, rebar . I would like to count the number of requests from a particular user and give him 413 error code if the requests go too often. The client is identified by its IP address. Thereby, which gives

mochiweb_request:get(peer).The task is not so difficult, but perhaps a ready-made solution will save someone time.

An approach

I decided to use the token bucket algorithm.

If we describe it briefly - we consider certain time intervals for each client as baskets, in which we will add user requests for this time interval. Baskets have a limited volume. As soon as the basket is filled with requests to the limit, we stop serving the client. Because as time goes on, baskets for the customer are constantly changing, and the customer has a chance to be served in a new time interval.

In the picture above, red indicates the time when each of the customers was not served.

As can be seen from the picture, Client A received a restriction of requests some time ago and did not send them anymore, Client B works quietly and does not exceed the limit, and client C has chosen the current limit of requests and receives refusals.

We will create baskets as requests from the client appear and with due account of time. Each client can have their own time scale, read the basket lifetime. The volume of the basket, that is, the limit of requests, can be set for each client separately.

Store information about customer baskets will be in the dictionary:

IP + № as a keycounter - actually the query counterThe client API consists of a single

check_rate(IP, TimeScale, Limit) function check_rate(IP, TimeScale, Limit) .Where

IP is the client's ip address (however, it can be any identifier)TimeScale - Basket lifetime in ms.Limit - the maximum number of requests in the basketCalling this function increments the counter and returns to the client whether to service the request.

Implementation

For storing data, I use an ETS table of type

ordered_set . It is sorted by key and does not allow duplication of keys. The table is a record [BucketID, Counter, CreatedAt, ModifiedAt] Where

BucketID - {IP, BucketNum}, is also the key for ordered_setCounter - the number of requests from the clientCreatedAt - Basket creation time (in ms)ModifiedAt - Time to change the basket (in ms)Creation time is more for debugging, you can opt out

Main function:

-spec count_hit(Id::binary(), Scale::integer(), Limit::integer()) -> {ok, continue} | {fail, Count::integer()}. %% Counts request by ID and blocks it if rate limiter fires %% ID of the client %% Scale of time (1000 = new bucket every second, 60000 = bucket every minute) %% Limit - max size of bucket count_hit(Id, Scale, Limit) -> Stamp = timestamp(), %% milliseconds since 00:00 GMT, January 1, 1970 BucketNumber = trunc(Stamp / Scale), %% with scale=1 bucket changes every millisecond Key = {BucketNumber, Id}, case ets:member(?RATERLIMITER_TABLE, Key) of false -> ets:insert(?RATERLIMITER_TABLE, {Key, 1, Stamp, Stamp }), {ok, continue}; true -> % increment counter by 1, created_time by 0, and changed_time by current one Counters = ets:update_counter(?RATERLIMITER_TABLE, Key, [{2,1},{3,0},{4,1,0, Stamp}]), % Counter, created at, changed at [BucketSize, _, _] = Counters, if (BucketSize > Limit) -> {fail, Limit}; true -> {ok, continue} end end. First, we check if the basket we need is {IP, BucketNumber}.

In the case of a new basket, everything is pretty trite - we create a new basket with initial values and say OK.

Adding a counter to an existing basket is a little more interesting. The ets module allows you to modify the counters according to the specified rules. I liked how gracefully you can combine the increment of a counter with a change in the date of the last access.

So the code:

Counters = ets:update_counter(?RATERLIMITER_TABLE, Key, [{2,1},{3,0},{4,1,0, Stamp}]) increments the counter # 2 by 1 (this is just the hit counter), adds 0 to the creation time, adds 1 to counter # 4 with the maximum value checked. Because the maximum value is indicated as 0, the fourth counter is always set to Stamp (current time). At the output we get a list of all the variable counters in order, i.e.

[Counter, CreatedTime, ChangedTime]As a result, in two appeals to the tables, we updated or created a basket and received the number of hits. In the current implementation of the raterlimiter, the atomicity of changing tables is not critical, it just works synchronously (calling gen_server: call / 2 is synchronous).

Removing old baskets

Over time, the customer baskets are piling up and piling up. It is necessary to remove them.

Deletion occurs periodically. Simply remove all baskets older than the specified limit.

I must say, on the Erlang everything looks nice and brief -

remove_old_limiters(Timeout) -> NowStamp = timestamp(), Matcher = ets:fun2ms(fun ({_,_,_,Accessed}) when Accessed < (NowStamp - Timeout) -> true end), ets:select_delete(?RATERLIMITER_TABLE, Matcher). ets: select_delete deletes all entries matching the matching functions ( MatchSpec ). Writing these match_spec manually is hell. It is much easier to use the ets: fun2ms function , which converts the normal Erlang function into a matching function. For example, the above function

fun ({_,_,_,Accessed}) when Accessed < (NowStamp - Timeout) -> true end in the final form in the MatchSpec format, for NowStamp = 1000, Timeout = 0 looks like

[{{'_','_','_','$1'},[{'<','$1',{'-',1000,0}}],[true]}] The main thing when using ets: fun2ms do not forget to include an additional file in the source file -

-include_lib("stdlib/include/ms_transform.hrl"). Using

The use boils down to a single check_rate call, not counting, of course, the code and settings for running the raterlimiter application itself. Here is an example from my Mochiweb-based application:

{Status, _Reason} = raterlimiter:check_rate(Req:get(peer), 30000, 500), % 500 requests per 30 seconds allowed case Status of ok -> serve_request(Req, DocRoot, Path); _ -> % client wants too much information Req:respond({413,[{"Content-Type", "text/html"}, {"Retry-After", "900 (Too many requests)"}], " IP , 15 ."}) end Full code is available on Github . There you can also report errors, or make a pull request.

Source: https://habr.com/ru/post/145105/

All Articles