Neural networks for dummies. Part 2 - Perceptron

In the previous article, we covered the basics needed to understand neural networks. The resulting system was not a full-fledged neural network and was merely exploratory. Its decision-making mechanisms were "black boxes" and were not described in detail.

This article discusses those mechanisms. By the end, we will have a full-fledged neural network made of a single perceptron that can recognize input data and can be trained.

This time the programming language is C#.


I will keep the explanation simple, so I ask experts not to be too strict about terminology.

In the first article, the element responsible for recognizing a particular digit was a kind of "neuron" (strictly speaking, it was not really one, but we called it that for simplicity).

Let us look at what it is.

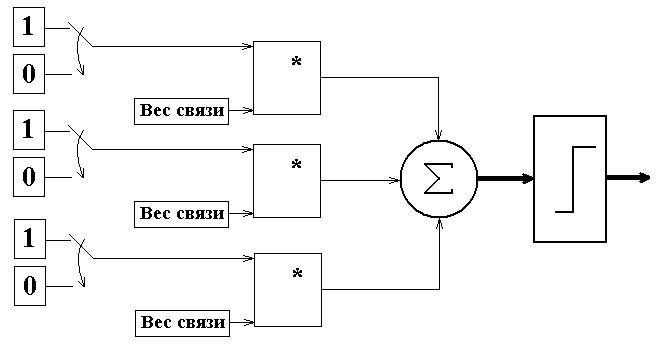

As before, the input is an image, so the input data is a two-dimensional array. Each element of the input is connected to the neuron through a special connection (a synapse), shown as red lines in the figure. These are not plain connections, though: each one has a certain significance for the neuron.

Here is an analogy with a human. We perceive information through different senses: we see, hear, smell, and touch. Some senses have higher priority (vision) and some lower (intuition, touch). Moreover, in each situation we prioritize the senses based on experience. Before eating an unfamiliar food, we give sight and smell top priority, while hearing does not matter. When looking for a friend in a crowd, we pay attention to what our eyes and ears tell us, and everything else fades into the background.

The simplest counterpart of an individual sense organ in a neuron is the synapse. In different situations, different synapses have different significance. This significance is called the weight of the connection.

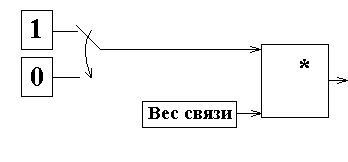

Since the input is a black-and-white picture, each axon can receive only 1 or 0.

Its output is therefore either the weight value or 0.

Roughly speaking, if there is something at the input, the "leg" starts to "twitch", telling the neuron that it carries information. How strongly it twitches depends on its weight, which is set by the network.

The number of axons corresponds to the number of elements in the input array. In this article I will use 3x5 pixel images as input. Accordingly, the number of connections coming into the neuron will be 3 x 5 = 15.

The signal, scaled by the weight of its connection, reaches the neuron, where it is added to the signals from the other synapses.

The result is a single number. This number is compared with a predetermined threshold: if it reaches or exceeds the threshold, the neuron is considered to output one; otherwise it outputs zero.
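The neuron's computation can be written compactly. Here $i_{x,y} \in \{0,1\}$ is the pixel in column $x$ and row $y$ of the 3x5 picture, $w_{x,y}$ is the weight of its connection, and $\theta$ is the threshold (the `limit` field in the code, equal to 9; the comparison uses `>=`):

$$
S = \sum_{x=0}^{2} \sum_{y=0}^{4} w_{x,y}\, i_{x,y},
\qquad
\text{output} =
\begin{cases}
1, & S \ge \theta,\\
0, & S < \theta.
\end{cases}
$$

Because every $i_{x,y}$ is 0 or 1, each term of the sum is either $w_{x,y}$ or 0.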

The threshold is a trade-off: the higher it is, the more accurate the neuron, but the longer it takes to train.

Let us turn to the software implementation.

Create a neuron class:

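```csharp
class Web
{
    public int[,] mul;      // signals after scaling by the weights
    public int[,] weight;   // connection weights
    public int[,] input;    // input data (the picture as 0/1 values)
    public int limit = 9;   // threshold, chosen experimentally so that training is fast
    public int sum;         // sum of the scaled signals

    public Web(int sizex, int sizey, int[,] inP) // set up the neuron when the object is created
    {
        weight = new int[sizex, sizey]; // one weight per input (the number of connections)
        mul = new int[sizex, sizey];
        input = inP; // keep a reference to the caller's array, so later changes to it are seen here
    }

    // mul_w, Sum, Rez, incW and decW shown in this article are also members of this class.
}
```

Yes, I know that multiplying the signals by the weights, summing them, comparing the sum with the threshold, and outputting the result could all be done in one place. But I thought it would be clearer to perform each operation separately and call them one after another: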

Scaling:

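```csharp
public void mul_w()
{
    for (int x = 0; x < weight.GetLength(0); x++)
    {
        for (int y = 0; y < weight.GetLength(1); y++) // go through every axon
        {
            // multiply its signal (0 or 1) by its own weight and store the result
            mul[x, y] = input[x, y] * weight[x, y];
        }
    }
}
```

Addition: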

```csharp
public void Sum()
{
    sum = 0;
    for (int x = 0; x < mul.GetLength(0); x++)
    {
        for (int y = 0; y < mul.GetLength(1); y++)
        {
            sum += mul[x, y]; // add up the scaled signals from all synapses
        }
    }
}
```

Comparison:

```csharp
public bool Rez()
{
    return sum >= limit; // true: the neuron "fires" (outputs one)
}
```

The program opens a 3x5 image file, runs through all its pixels, and tries to determine whether the figure is the one it was trained to recognize.

Since we have only one neuron, we can recognize only one character. I chose the digit 5. In other words, the program will tell us whether the picture fed to it shows the digit 5 or not.

So that the program keeps what it has learned between runs, I will save the weights in a text file.
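The weights file is plain text: five lines (rows `y`), each holding three space-separated integers (columns `x`), which is what the loading code splits on. The article does not show the saving code, so here is a minimal sketch, assuming that format, of reading and writing the file outside of WinForms. The class `WeightsFile` and its methods are hypothetical names, not part of the author's program.

```csharp
using System;
using System.IO;
using System.Linq;
using System.Text;

// Hypothetical helpers (not from the original program) for the assumed
// weights file format: one line per row y, values separated by spaces.
static class WeightsFile
{
    // Parses text such as "0 0 0\n0 0 0\n..." into weight[x, y].
    // Blank lines are skipped; extra values on a line are ignored.
    public static int[,] Parse(string text, int sizex = 3, int sizey = 5)
    {
        var weight = new int[sizex, sizey];
        string[] lines = text.Split(new[] { '\r', '\n' }, StringSplitOptions.RemoveEmptyEntries);
        for (int y = 0; y < sizey && y < lines.Length; y++)
        {
            string[] values = lines[y].Split(new[] { ' ' }, StringSplitOptions.RemoveEmptyEntries);
            for (int x = 0; x < sizex && x < values.Length; x++)
                weight[x, y] = int.Parse(values[x]);
        }
        return weight;
    }

    // Formats weight[x, y] back into the same text layout.
    public static string Format(int[,] weight)
    {
        var sb = new StringBuilder();
        for (int y = 0; y < weight.GetLength(1); y++)
        {
            var row = Enumerable.Range(0, weight.GetLength(0)).Select(x => weight[x, y].ToString());
            sb.AppendLine(string.Join(" ", row));
        }
        return sb.ToString();
    }

    public static int[,] Load(string path) => Parse(File.ReadAllText(path));

    public static void Save(string path, int[,] weight) => File.WriteAllText(path, Format(weight));
}
```

For example, `WeightsFile.Save("w.txt", new int[3, 5])` would write the all-zero starting file. Skipping blank lines is a deliberate choice: the original loader passes every line to `Convert.ToInt32` and would throw on an empty one.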

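```csharp
private void Form1_Load(object sender, EventArgs e)
{
    NW1 = new Web(3, 5, input); // create our neuron; it shares the form's input array

    openFileDialog1.Title = "Select the weights file";
    if (openFileDialog1.ShowDialog() != DialogResult.OK) return; // no file chosen
    string s = openFileDialog1.FileName;

    using (StreamReader sr = File.OpenText(s)) // load the weights file
    {
        string line;
        string[] s1;
        int k = 0; // row index (y)
        while ((line = sr.ReadLine()) != null)
        {
            s1 = line.Split(' ');
            listBox1.Items.Add(""); // one list box row per file line
            for (int i = 0; i < s1.Length; i++) // i is the column index (x)
            {
                if (k < 5)
                {
                    NW1.weight[i, k] = Convert.ToInt32(s1[i]); // give each connection its saved weight
                    listBox1.Items[k] += Convert.ToString(NW1.weight[i, k]); // show the weights for clarity
                }
            }
            k++;
        }
    }
}
```

Next, we need to build the input data array from the picture: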

```csharp
Bitmap im = pictureBox1.Image as Bitmap;
for (var i = 0; i <= 5; i++) listBox1.Items.Add(" ");
for (var x = 0; x <= 2; x++)
{
    for (var y = 0; y <= 4; y++)
    {
        int n = im.GetPixel(x, y).R;
        if (n >= 250) n = 0; // decide whether the pixel is filled: near-white is 0
        else n = 1;          // anything darker is 1
        listBox1.Items[y] = listBox1.Items[y] + " " + Convert.ToString(n); // show the input for clarity
        input[x, y] = n; // store the value in the matching cell of the input array
    }
}
```

We recognize the symbol by calling the class methods described above:

```csharp
public void recognize()
{
    NW1.mul_w(); // scale the signals by the weights
    NW1.Sum();   // add them up
    if (NW1.Rez()) listBox1.Items.Add(" - True, Sum = " + Convert.ToString(NW1.sum));
    else listBox1.Items.Add(" - False, Sum = " + Convert.ToString(NW1.sum));
}
```

That's all. Our program can now be called a neural network, and it can already recognize something.

However, it is still completely dumb and always answers False.
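To see why the untrained network always answers False, here is a minimal console sketch of the same pipeline (binarize, scale, sum, compare) that does not depend on WinForms. The class `Perceptron` and its methods are hypothetical names, and the pixels are assumed to arrive as red-channel values 0..255 in a `red[x, y]` array, mimicking `GetPixel(x, y).R`.

```csharp
using System;

// Console-only sketch of the recognition pipeline (not the author's class).
static class Perceptron
{
    public const int Limit = 9; // same threshold as Web.limit

    // Same rule as the form code: R >= 250 (near-white) is 0, anything darker is 1.
    public static int[,] Binarize(int[,] red)
    {
        var input = new int[red.GetLength(0), red.GetLength(1)];
        for (int x = 0; x < red.GetLength(0); x++)
            for (int y = 0; y < red.GetLength(1); y++)
                input[x, y] = red[x, y] >= 250 ? 0 : 1;
        return input;
    }

    // mul_w and Sum combined: the weighted sum of all inputs.
    public static int WeightedSum(int[,] input, int[,] weight)
    {
        int sum = 0;
        for (int x = 0; x < input.GetLength(0); x++)
            for (int y = 0; y < input.GetLength(1); y++)
                sum += input[x, y] * weight[x, y];
        return sum;
    }

    // Rez: the neuron fires when the sum reaches the threshold.
    public static bool Recognize(int[,] input, int[,] weight) =>
        WeightedSum(input, weight) >= Limit;
}
```

With the all-zero weights file, `WeightedSum` returns 0 for any picture, and 0 never reaches the threshold of 9, so `Recognize` is always false.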

We need to train it, and we will use the simplest possible algorithm.

If the network gives the correct answer, we are happy and do nothing.

If it makes a mistake, we punish it accordingly:

- If it wrongly answers False, add the input values to the weights of the corresponding legs (leg 1 gets the value at point [0,0] of the picture, and so on):

```csharp
public void incW(int[,] inP)
{
    for (int x = 0; x < weight.GetLength(0); x++)
    {
        for (int y = 0; y < weight.GetLength(1); y++)
        {
            weight[x, y] += inP[x, y]; // strengthen the legs that carried a signal
        }
    }
}
```

- If it wrongly answers True, subtract the input values from the weights of the corresponding legs:

```csharp
public void decW(int[,] inP)
{
    for (int x = 0; x < weight.GetLength(0); x++)
    {
        for (int y = 0; y < weight.GetLength(1); y++)
        {
            weight[x, y] -= inP[x, y]; // weaken the legs that carried a signal
        }
    }
}
```

Then we store the resulting changes in the weights array and continue training.
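The two punishment rules can be folded into one step that also decides whether a correction is needed. Below is a minimal sketch, assuming the correct answer is known in advance (in the original program the user supplies it by clicking "Not true"). `TrainingRule` and `Step` are hypothetical names; the update itself is exactly `incW` on a wrong False and `decW` on a wrong True.

```csharp
using System;

// Sketch of the training rule as a single method (not the author's code).
static class TrainingRule
{
    const int Limit = 9; // same threshold as Web.limit

    static int WeightedSum(int[,] input, int[,] weight)
    {
        int sum = 0;
        for (int x = 0; x < input.GetLength(0); x++)
            for (int y = 0; y < input.GetLength(1); y++)
                sum += input[x, y] * weight[x, y];
        return sum;
    }

    // Applies one correction if the answer is wrong.
    // Returns true if the weights were changed.
    public static bool Step(int[,] weight, int[,] input, bool expected)
    {
        bool answer = WeightedSum(input, weight) >= Limit;
        if (answer == expected) return false; // correct answer: do nothing

        // wrong False: add the inputs (incW); wrong True: subtract them (decW)
        int sign = expected ? +1 : -1;
        for (int x = 0; x < input.GetLength(0); x++)
            for (int y = 0; y < input.GetLength(1); y++)
                weight[x, y] += sign * input[x, y];
        return true;
    }
}
```

Starting from all-zero weights and showing a five once, `Step` adds the picture to the weights. The weights then equal the picture itself, which is what the author's file shows after the first correction.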

As I said, I chose the digit 5 as the target.

In addition, I prepared the other digits and several variants of the digit 5 itself.

As expected, the most problematic digit turns out to be 6, but we will overcome this through training.

Create a text file filled with 3x5 zeros. This is the clean memory of our network:

```
0 0 0
0 0 0
0 0 0
0 0 0
0 0 0
```

Run the program and point it to this file.

Load a picture of the digit 5.

Naturally, the answer is incorrect. Click "Not true."

The weights are recalculated (you can see the result in the file):

```
1 1 1
1 0 0
1 1 1
0 0 1
1 1 1
```

Now the program will correctly recognize this picture.
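Here is a quick check of why one correction is enough for this particular picture. After the update, the weights equal the picture itself, so each dark pixel contributes $1 \cdot 1 = 1$. Summing row by row over the weights listed above:

$$
S = \underbrace{3}_{\text{row }0} + \underbrace{1}_{\text{row }1} + \underbrace{3}_{\text{row }2} + \underbrace{1}_{\text{row }3} + \underbrace{3}_{\text{row }4} = 11 \ge 9 = \theta
$$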

We keep feeding the program pictures and punishing it for wrong answers.

Eventually, it recognizes every picture in the set without error.
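The manual cycle (show a picture, click "Not true" when the answer is wrong) can be automated. Below is a minimal sketch, not the author's program, that makes repeated passes over a labeled set and applies the same add/subtract rule until one pass produces no mistakes. The class `TrainingLoop`, the 3x5 glyphs used in the example, and the `maxEpochs` cap are assumptions for illustration. The cap simply stops the loop if no error-free pass is reached.

```csharp
using System;
using System.Collections.Generic;

// Sketch: automated training loop (hypothetical, not from the article).
static class TrainingLoop
{
    const int Limit = 9; // same threshold as Web.limit

    // Converts rows such as "111" into input[x, y] (x = column, y = row).
    public static int[,] Pattern(params string[] rows)
    {
        var p = new int[rows[0].Length, rows.Length];
        for (int y = 0; y < rows.Length; y++)
            for (int x = 0; x < rows[y].Length; x++)
                p[x, y] = rows[y][x] == '1' ? 1 : 0;
        return p;
    }

    public static bool Recognize(int[,] input, int[,] weight)
    {
        int sum = 0;
        for (int x = 0; x < input.GetLength(0); x++)
            for (int y = 0; y < input.GetLength(1); y++)
                sum += input[x, y] * weight[x, y];
        return sum >= Limit;
    }

    // Returns the number of passes made, the last of which had no errors,
    // or -1 if no error-free pass happened within maxEpochs.
    public static int Train(int[,] weight, List<(int[,] Input, bool IsFive)> samples, int maxEpochs = 100)
    {
        for (int epoch = 1; epoch <= maxEpochs; epoch++)
        {
            int errors = 0;
            foreach (var (input, isFive) in samples)
            {
                if (Recognize(input, weight) == isFive) continue; // correct: do nothing
                errors++;
                int sign = isFive ? +1 : -1; // incW on a wrong False, decW on a wrong True
                for (int x = 0; x < input.GetLength(0); x++)
                    for (int y = 0; y < input.GetLength(1); y++)
                        weight[x, y] += sign * input[x, y];
            }
            if (errors == 0) return epoch;
        }
        return -1;
    }
}
```

Assume a five drawn as `111 100 111 001 111` and a six as `111 100 111 101 111`, shown in that order starting from zero weights. Under those assumptions, `Train` returns 5. The five's own pixels end with weight 1, and the pixel [0, 3] that only the six has ends at -3. This keeps the six's sum at 8, below the threshold.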

Here are the final weights saved to the file by my fully trained network:

```
1 2 1
1 0 -4
1 2 1
-4 0 1
1 1 0
```

With these weights, the six is also handled correctly: it is not mistaken for a five.
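To see how these weights separate the two digits, the sketch below plugs them into the weighted sum for a five and a six. The article's pictures are not reproduced here, so the 3x5 glyphs passed in are an assumption. `FinalWeightsCheck` is a hypothetical helper, not part of the author's program.

```csharp
using System;

// Sketch: weighted sums for the published final weights (hypothetical helper).
static class FinalWeightsCheck
{
    // Weight rows exactly as saved in the file: row index = y, column = x.
    static readonly string[] WeightRows = { "1 2 1", "1 0 -4", "1 2 1", "-4 0 1", "1 1 0" };

    // pixelRows: five strings of three '0'/'1' characters describing the picture.
    public static int Sum(params string[] pixelRows)
    {
        int sum = 0;
        for (int y = 0; y < 5; y++)
        {
            string[] w = WeightRows[y].Split(' ');
            for (int x = 0; x < 3; x++)
                sum += (pixelRows[y][x] == '1' ? 1 : 0) * int.Parse(w[x]);
        }
        return sum;
    }
}
```

With the assumed five `111 100 111 001 111`, the sum is 12, which reaches the threshold of 9. With the assumed six `111 100 111 101 111`, it is 8. The -4 weight on the lower-left pixel [0, 3], lit only in the six, is what pulls the six below the threshold.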

The program's source code, the executable, the weights files, and the 3x5 pictures can be downloaded here.

If you want to experiment with other symbols or retrain the network, do not forget to reset all the numbers in the w.txt file to zero.

This concludes the article, dear Habr readers. We learned how to create, configure, and train the simplest perceptron.

If the article is well received, next time we will try to implement something more complex and multi-layered. The question of optimal (fast) network training also remains to be covered.

With that, I take my leave. Thanks for reading.

Source: https://habr.com/ru/post/144881/
