Using SURF to create augmented reality marker

This post continues the topic of augmented reality. Here is the first part. In the discussion under that post, the user Inco showed interesting results of his work on recognizing an augmented reality marker (video). I had no time back then, but a couple of months later I became curious about how it all works and how stable the approach is, and I found some free hours. Here is my implementation of the idea, which grew into a talk at this event.

This post gives a brief summary of my talk and covers what there was not enough time for at the meeting: the source code of the program. Those who want to watch and listen to the full presentation can use the screencast.

What is augmented reality?

First of all, I would like to explain what augmented reality is.

There is our reality: what we see with our eyes, how we interpret it, and how it really is. There is also virtual reality: what is generated, what does not exist, what someone wants to show you.

Augmented reality sits at the junction of these two realities. It can lean towards our reality, and then it is augmented reality proper, or it can lean towards virtuality, and then it is virtuality supplemented with our reality.

What is augmented reality used for now?

Today it is used for:

- Presentations and demonstrations. The first photo, which shows a dam, is from a conference devoted to AutoCAD.

- New interfaces that do not need to be projected. Put a hand or something else (a piece of paper) under the camera, and you have a ready interface for controlling an object.

- Socialization. This is the case where you sweep your phone across a landscape and are shown additional information about it.

How does augmented reality work?

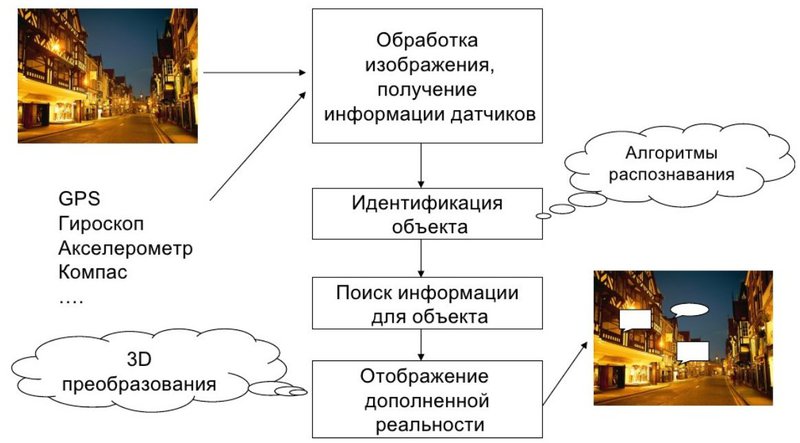

First of all, we have an image. Why an image? Because a person receives 70% of information through the eyes, and here that means the image from the camera. Next come various sensors: GPS, gyroscopes, accelerometers, a compass. They all provide information about the device, its orientation and its position in space.

For example, knowing where you are and where your phone is pointing, we can work out your viewpoint and what you see and, using this information, display augmented reality about what we assume you see.

Next comes image processing and the acquisition of sensor data. In practice it is not that simple. The image from the camera often needs pre-processing. The sensor data is inaccurate: the accelerometer, for instance, is terribly noisy at high frequencies, i.e. it constantly gives us data other than what we wanted. GPS likewise has an accuracy limit, as anyone who has worked with GPS on mobile phones knows. As for the gyroscope, everyone imagines a gyroscope like the one in a jet aircraft, which gives orientation in three planes. It does give orientation in three planes, but without a compass and an accelerometer it is not accurate enough. Again, augmented reality is not only an image; it takes into account everything that happens to the device.

Object identification. This answers the question: what do I see? It is the most interesting part of augmented reality. Without it, everything is just a cool toy: you tilt the phone, and your car or motorcycle turns left or right. But if we identify:
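The high-frequency accelerometer noise mentioned above is commonly reduced with a low-pass filter. As a minimal sketch, assuming a single axis of samples, an exponential moving average could look like this. The function name `lowPass` and the parameter `alpha` are illustrative and do not come from the talk or its source code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Exponential moving average: y[i] = y[i-1] + alpha * (x[i] - y[i-1]).
// alpha must be in (0, 1]; a smaller alpha means stronger smoothing.
std::vector<double> lowPass(const std::vector<double>& samples, double alpha)
{
    assert(alpha > 0.0 && alpha <= 1.0);
    std::vector<double> out;
    out.reserve(samples.size());
    double y = 0.0;
    for (std::size_t i = 0; i < samples.size(); ++i) {
        // Seed the filter with the first sample to avoid a start-up ramp.
        y = (i == 0) ? samples[i] : y + alpha * (samples[i] - y);
        out.push_back(y);
    }
    return out;
}
```

A small `alpha` suppresses more noise but makes the output lag behind real motion, so the value has to be tuned for the particular sensor and use case.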

- where we are, we can draw conclusions about what is in our field of view;

- what we see, we can show the person something on top of the image he sees.

The key problem of augmented reality is what to augment. Something has to be identified. For this, mathematics and computer science offer recognition algorithms. Suppose we have found an image. Now we try to find it in a database of images: say we photographed a painting and would like to know which painting it is. This is also a difficult task. How to build the index is a very interesting area of computer science in itself, and of course there are many open problems.

And then, how do we actually present the result so that the user understands it? This raises the question of usability.

How to identify an object?

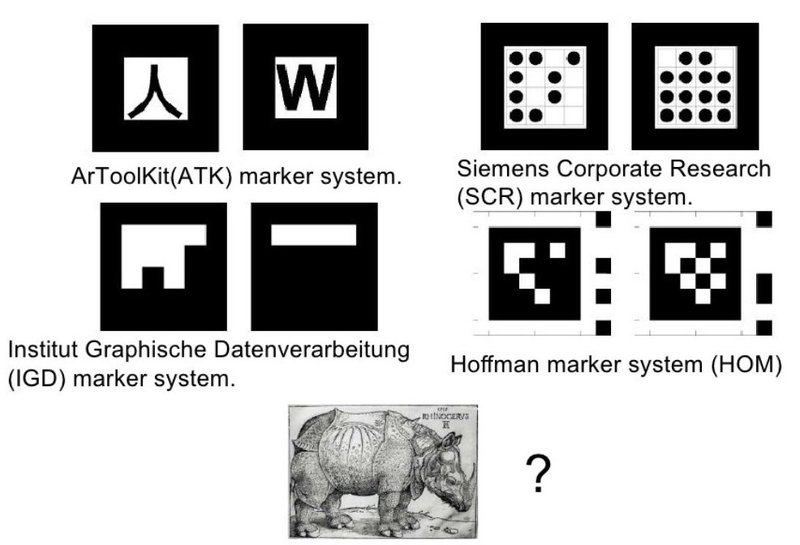

So we have the image and need to identify the object, the marker, i.e. the thing we will augment. This can be done with or without markers. Any barcode, any sign, any QR code is already a marker. The phone can recognize it and augment reality with, for example, a translation of ordinary text, or information found on the Internet for a car number plate, a house number, etc.

Geometric figure. The classic augmented reality marker is a square. Why? Because it makes it fairly easy to construct the plane, find a homography and recover the position in three coordinates.

If the graphic marker we create has a distinct shape, such as a square, it stands out from reality and so introduces dissonance into the interior. The closer the marker is to reality, the better it blends in: a photograph is better than a drawn square, a painting is better than a QR code. Without markers, we can still obtain our coordinates and position in space, and of course use a graphic marker that strictly speaking is not a marker at all: we recognize a part of our reality and then declare that it was our marker. Alternatively, we can prepare an image in advance if we want people to recognize something: we create a marker, for example a picture that we hang on the wall.

What can be a marker?

As for augmented reality markers, several systems have been developed since the early nineties. Here is a link to the topic where I described augmented reality marker technologies in detail.

Here is Dürer's engraving of a rhinoceros. Can it be a marker? Yes, it can.

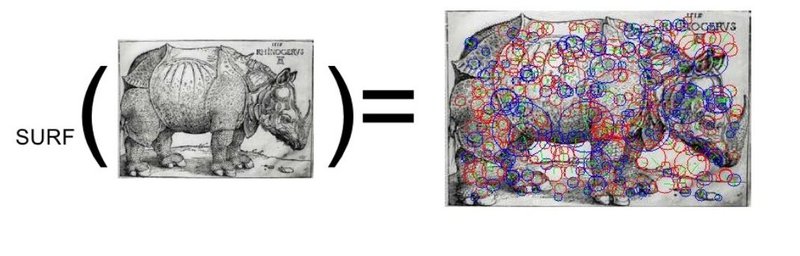

How? For this there is the SURF method. Here is a great article that describes the technique of finding stable features very well.

If you study the method thoughtfully and look at the source publications (one, two, three), you arrive at the following conclusions (for more details, see the screencast):

- The method has a sensitivity that needs to be adjusted.

- The method gives false positives.

- In constructing the method, empirical coefficients are used, which also need to be adjusted for the particular case.

- The method lends itself to parallelization and optimization.

And most importantly, for a finished augmented reality application it is not enough to recognize the marker: you need to be able to follow it (do “tracking”) and suppress false alarms and anomalous outlier points that SURF can give us if we track with these methods.
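One common way to suppress false matches before tracking is the nearest-neighbour distance ratio test: a match is kept only if its best candidate is clearly closer than the second best. The sketch below illustrates that idea under stated assumptions and is not the code from the talk; `Descriptor`, `squaredDistance`, `ratioTestMatches` and the `ratio` parameter are hypothetical names chosen for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <utility>
#include <vector>

using Descriptor = std::vector<float>;
using IndexPair = std::pair<std::size_t, std::size_t>;

// Squared Euclidean distance between two descriptors of equal length.
float squaredDistance(const Descriptor& a, const Descriptor& b)
{
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const float d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

// Returns (frame index, reference index) pairs that pass the ratio test:
// the best distance must be below `ratio` times the second-best distance.
std::vector<IndexPair> ratioTestMatches(const std::vector<Descriptor>& frame,
                                        const std::vector<Descriptor>& reference,
                                        float ratio)
{
    std::vector<IndexPair> matches;
    if (reference.size() < 2) return matches;  // the test needs two candidates
    for (std::size_t i = 0; i < frame.size(); ++i) {
        float best = std::numeric_limits<float>::max();
        float second = std::numeric_limits<float>::max();
        std::size_t bestIdx = 0;
        for (std::size_t j = 0; j < reference.size(); ++j) {
            const float d = squaredDistance(frame[i], reference[j]);
            if (d < best) { second = best; best = d; bestIdx = j; }
            else if (d < second) { second = d; }
        }
        // Distances are squared, so the ratio is squared as well.
        if (best < ratio * ratio * second)
            matches.push_back(std::make_pair(i, bestIdx));
    }
    return matches;
}
```

A lower `ratio` rejects more ambiguous matches at the cost of fewer points, which is one form of the sensitivity trade-off listed above.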

How to find the coordinates of the object in the frame and superimpose video?

Here is an article that describes the mathematics for the three-dimensional case (when we try to place a 3D model on our marker). The two-dimensional case is much simpler.

From several point correspondences (four or more) we need to find the parameters of the projective transformation (a homography, which the code below finds with cvFindHomography). It tells us how to compress, stretch and distort the image so that the key points we found coincide.
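To make the two-dimensional case concrete, here is a minimal sketch of estimating such a transformation from exactly four point pairs by solving an 8x8 linear system. It assumes the last matrix element can be fixed to 1 and is not how OpenCV implements it; `Point`, `Homography`, `homographyFrom4` and `applyHomography` are hypothetical names for this example.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <utility>

struct Point { double x, y; };
using Homography = std::array<double, 9>;  // row-major 3x3, h[8] fixed to 1

// Finds H such that dst[i] ~ H * src[i] for exactly four correspondences.
// Returns false if the system is degenerate (e.g. three collinear points).
bool homographyFrom4(const std::array<Point, 4>& src,
                     const std::array<Point, 4>& dst,
                     Homography& h)
{
    double a[8][9] = {};  // augmented matrix: 8 equations, 8 unknowns
    for (int i = 0; i < 4; ++i) {
        const double x = src[i].x, y = src[i].y, u = dst[i].x, v = dst[i].y;
        const double r0[9] = { x, y, 1, 0, 0, 0, -u * x, -u * y, u };
        const double r1[9] = { 0, 0, 0, x, y, 1, -v * x, -v * y, v };
        for (int c = 0; c < 9; ++c) { a[2 * i][c] = r0[c]; a[2 * i + 1][c] = r1[c]; }
    }
    // Gauss-Jordan elimination with partial pivoting.
    for (int col = 0; col < 8; ++col) {
        int pivot = col;
        for (int r = col + 1; r < 8; ++r)
            if (std::fabs(a[r][col]) > std::fabs(a[pivot][col])) pivot = r;
        if (std::fabs(a[pivot][col]) < 1e-12) return false;
        for (int c = 0; c < 9; ++c) std::swap(a[col][c], a[pivot][c]);
        for (int r = 0; r < 8; ++r) {
            if (r == col) continue;
            const double f = a[r][col] / a[col][col];
            for (int c = col; c < 9; ++c) a[r][c] -= f * a[col][c];
        }
    }
    for (int i = 0; i < 8; ++i) h[i] = a[i][8] / a[i][i];
    h[8] = 1.0;
    return true;
}

// Applies H to a point, including the perspective division.
Point applyHomography(const Homography& h, Point p)
{
    const double w = h[6] * p.x + h[7] * p.y + h[8];
    assert(w != 0.0);
    return { (h[0] * p.x + h[1] * p.y + h[2]) / w,
             (h[3] * p.x + h[4] * p.y + h[5]) / w };
}
```

With more than four noisy correspondences a least-squares or robust estimator is needed instead of an exact solve.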

This is where false positives become dangerous. If we take the “wrong” points, the transformation matrix comes out wrong and our image ends up in the wrong place. There are several approaches to dealing with this, but they all boil down to detecting anomalous values (outliers) in the observations, i.e. grouping and clustering.
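One simple outlier check, used inside RANSAC-style estimators, is the reprojection error: project each marker point through a candidate transformation and keep only the matches that land close to the observed point. The following is a sketch of that check alone, not a full robust estimator; `Vec2`, `Mat3`, `Match`, `project` and `findInliers` are hypothetical names, and the pixel threshold is something to tune.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec2 { double x, y; };
struct Match { Vec2 marker, frame; };  // marker point and where it was seen
using Mat3 = std::array<double, 9>;    // row-major 3x3 transformation

// Projects p through h with perspective division.
Vec2 project(const Mat3& h, Vec2 p)
{
    const double w = h[6] * p.x + h[7] * p.y + h[8];
    assert(w != 0.0);
    return { (h[0] * p.x + h[1] * p.y + h[2]) / w,
             (h[3] * p.x + h[4] * p.y + h[5]) / w };
}

// Returns indices of matches whose reprojection error is at most
// `threshold` pixels; everything else is treated as an outlier.
std::vector<std::size_t> findInliers(const Mat3& h,
                                     const std::vector<Match>& matches,
                                     double threshold)
{
    assert(threshold >= 0.0);
    std::vector<std::size_t> inliers;
    for (std::size_t i = 0; i < matches.size(); ++i) {
        const Vec2 q = project(h, matches[i].marker);
        const double dx = q.x - matches[i].frame.x;
        const double dy = q.y - matches[i].frame.y;
        if (std::sqrt(dx * dx + dy * dy) <= threshold)
            inliers.push_back(i);
    }
    return inliers;
}
```

A robust estimator repeats this for many candidate transformations and keeps the one with the most inliers. The last argument of the cvFindHomography call in the code below plays the role of `threshold`.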

Code

To build this program you need the OpenCV library installed. The code was written for Linux but should also compile under Visual C. Link to the source: Opensurf.zip

In short, what the code does:

The source code is based on the OpenSurf project; the main.cpp file has been modified.

Here is what I would like to touch on (fragments of the main.cpp file):

Initial data:

    // Video whose frames will be overlaid on the marker
    CvCapture* capture1 = cvCaptureFromFile("imgs/ribky.avi");
    // Video (capture) in which we look for the marker
    CvCapture* capture = cvCaptureFromFile("imgs/child_book.avi");
    // Alternative: take the frames from a camera instead of a file
    //CvCapture* capture = cvCaptureFromCAM( CV_CAP_ANY );
    // This is the reference object we wish to find in video frame
    IplImage *img = cvLoadImage("imgs/marker6.png");

We get the key points:

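    // Grab the next frame of the video being searched
    img = cvQueryFrame(capture);
    frm_id++;
    // Detect and describe the SURF interest points of the frame
    surfDetDes(img, ipts, false, 4, 4, 2, 0.001f);
    // Match them against the interest points of the reference marker
    getMatches(ipts,ref_ipts,matches);

Drawing the outline and the green dots during the first forty frames is self-explanatory, so let us go straight to overlaying frames from the video: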

    // Grab the next frame of the video to overlay
    frame = cvQueryFrame(capture1);
    // Allocate room for the matched point coordinates
    pt1.resize(n);
    pt2.resize(n);
    // pt1: points on the reference marker, pt2: the same points in the frame
    for(int i = 0; i < n; i++ )
    {
        pt1[i] = cvPoint2D32f(matches[i].second.x, matches[i].second.y);
        pt2[i] = cvPoint2D32f(matches[i].first.x, matches[i].first.y);
    }
    _pt1 = cvMat(1, n, CV_32FC2, &pt1[0] );
    _pt2 = cvMat(1, n, CV_32FC2, &pt2[0] );
    // Find the transformation (homography) between the two point sets
    cvFindHomography(&_pt1, &_pt2, &_h, CV_RANSAC, 5);
    // Clear the destination frame
    cvZero(frame1);
    // Warp the overlay frame; pixels outside it are filled with 0,255,0
    cvWarpPerspective(frame, frame1, &_h, CV_WARP_FILL_OUTLIERS,cvScalar(0,255,0) );
    // Build the mask: mask = not (0,253..255,0)
    imagegr=cvCreateImage(cvGetSize(frame1), frame1->depth, 1);
    cvInRangeS(frame1, cvScalar(0, 253, 0), cvScalar(0, 255, 0), imagegr );
    cvNot(imagegr, imagegr);
    // Copy every pixel except the 0,253..255,0 fill into the scene frame
    cvCopy(frame1,img,imagegr);

In light of the creation of Google glasses and the growing interest in augmented reality, the goal of the presentation was to describe the method and to share ideas and thoughts that multiply many times over in discussion.

Source: https://habr.com/ru/post/144845/