Android + Arduino + 4 wheels. Part 3 - Video and Audio Transmission

Finally, progress. That's it: from now on nobody at home dares to call Mitya the robot a "radio-controlled toy car"!

Finding a reasonably simple, working way to transfer video and audio streams from an Android device to the control application on a PC turned out to be hard. I flatly refused to move on without this step, so my stubbornness kept me stuck for quite a long time.

Many thanks to everyone who helped me; without that help I would not have cracked this puzzle. Corresponding with like-minded people introduced me to some very pleasant and interesting people, a side of the hobby I had somehow not considered before. It turns out that Mitya the robot helped me find kindred spirits, and that was a completely unexpected bonus. Perhaps this is the main "profit" of the hobby? Only now have I realized that communication matters more to me than the “tinkering” itself. What is all this for, if there is nobody to share with, consult, or boast to?

Time to wrap up the lyrical part I love so much. This article continues the story of Mitya the robot. In the first part I described how I built the robot, and in the second what it took to program it. Here I focus on transmitting video and sound from the robot to the operator. I will not repeat the description of the project as a whole or of its software architecture; both are covered in detail in the first part.

The most pressing question: what is the result, and how much do the picture and sound lag? Here is my answer on video:

I apologize for the tips, but I really needed to demonstrate both the original sound and the sound reproduced on the PC. There is a delay, of course. Moreover, on playback the sound lags slightly behind the picture. The video lags slightly behind reality (bear in mind that this is not an analog system, and a phone runs the whole show, albeit a powerful one). I tried driving the robot and felt no discomfort with this delay. So I am completely satisfied with the result, and I am quite ready to accept the slight lag in the sound.

The quality of the picture shown to the operator is whatever the front-facing camera of the HTC Sensation is capable of. I could use the main camera, but then I would have to give up Mitya's facial expressions, and I am not willing to do that.

For ease of navigation, here is the outline of the rest of the article:

1. The evolution of the solution (only for the curious)

2. IP Webcam

3. Video playback in a Windows application

4. Audio playback in a Windows application

5. Summary

The evolution of the solution (only for the curious)

So that nobody accuses me of verbosity, I propose a compromise: readers who are curious, sympathetic to the project, or simply compassionate (I did not write all this for nothing, after all) can read here how I struggled and which solutions I found and discarded.

But seriously, I want to share as much of the experience I gained as possible, because I am sure that negative experience is as valuable as positive experience. What if there are people who can learn from the mistakes of others? Besides, I would be very grateful if someone found or showed a way out of the dead ends I ran into. Then it would be a real exchange of experience.

In this section I write about dead ends. If you are only looking for a recipe for transferring video and sound from a robot to a PC, you can skip to the next section.

The idea was simple: build the code that broadcasts the video and audio streams into the Android application, and build the code that receives and plays those streams into the Windows control application.

I immediately dismissed all makeshift solutions built on ready-made products that cannot be integrated into my code: ugly.

I started with the video. For some reason I was deeply convinced that producing and broadcasting a video stream would be no problem. It is the 21st century, after all; some people already have quad-core smartphones... But no. Even though every phone has two cameras on board, turning a phone into a webcam is not that easy. Surprisingly, Android has no ready-made built-in tools for this task. I do not know the reason, since the task is hardly a problem for phone manufacturers, but I suspect it comes down to how hard it is in some countries to certify devices that ship with such software. What if it is spyware? This is the only explanation I could come up with. But Mitya's motors are so loud that he makes about as good a spy as a hippopotamus makes a balloon. So my conscience does not keep me awake at night.

Digging through google, code.google, stackoverflow, android developers and everything else, I found several very interesting solutions that nonetheless led me nowhere. And I spent a lot of time on them. In any case, I will describe them and explain why I rejected each one. The experience may be useful. These solutions are quite suitable for some tasks, but I did not get along with them. I will omit the most hopeless options and keep only those that stand a chance.

Option one: use the MediaRecorder class, which is part of Android. I thought this was the solution to all my problems, since it handles both video and audio. At the output I would get a 3gp stream and send it over UDP to the PC. But alas, MediaRecorder does not work with streams that easily: it can only write to files. Googling, I found a very interesting discussion on the groups.google.com forum. A similar problem was discussed there, and in the course of the discussion a link to another interesting post came up. It describes a kind of "hack": how to trick MediaRecorder into thinking it is working with a file while actually making it write its output to a socket. I will not repeat the implementation details; everything is written there.

This option fully satisfied the participants of the discussion. Their task was to record video from the phone to a file on a remote computer, bypassing the phone's memory card. To a file, precisely: after studying this option more deeply, I became convinced that it is not suitable for live broadcasting. The point is that MediaRecorder on my device works as follows: when recording starts, a file is created and space is reserved at its beginning for the size of the stream. This field is filled in only when recording ends, and for that the MediaRecorder output stream must support positioning (the Seek operation). A file stream can do this, but when broadcasting over a network the stream is naturally strictly sequential and cannot jump back to its beginning to fill in the final size. The people in the discussion, however, needed to write to a file on a remote computer. So they first dumped the stream into a file, then opened that file for writing and entered the size in the right place. After that, the file could be played with anything.

My task is different: there is no moment at which recording ends, and I cannot determine the size of the video stream. That is, the MediaRecorder output stream is not intended for live broadcasting, at least on my device. This option had to be discarded.
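To make the seek requirement concrete, here is a minimal sketch in Python (chosen for brevity, it is not the project's language). The layout, a 4-byte size field followed by the payload, is a made-up toy format and not the real 3gp structure; `record` and `NonSeekableSink` are hypothetical names. It only illustrates why a size field that is patched at the end works on a file but not on a socket-like stream.

```python
import io
import struct


class NonSeekableSink(io.RawIOBase):
    """Mimics a network socket: accepts bytes in order, cannot seek."""

    def __init__(self):
        super().__init__()
        self.received = bytearray()

    def writable(self):
        return True

    def seekable(self):
        return False

    def write(self, data):
        self.received += data
        return len(data)


def record(out, chunks):
    """Write a toy recording: a 4-byte size placeholder, then the payload.

    The size is known only at the end, so it is patched by seeking back
    to offset 0. Returns True if the header could be patched.
    """
    out.write(struct.pack(">I", 0))       # placeholder for the final size
    total = 0
    for chunk in chunks:
        out.write(chunk)
        total += len(chunk)
    if not out.seekable():
        return False                      # header stays zero on a live stream
    out.seek(0)
    out.write(struct.pack(">I", total))   # fill in the real size
    return True
```

With an `io.BytesIO` (which behaves like a file) the header ends up holding the payload size; with `NonSeekableSink` the receiver only ever sees the zero placeholder.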

Well, I decided to try doing everything myself. Android lets you get raw frames from the device's camera. This feature is well documented everywhere; the Internet is full of examples that convert a frame received from the camera into a JPEG file and save it to the memory card. In my case the stream of JPEG frames could be sent over UDP to the PC. But what if the stream breaks up (this is UDP, after all)? The frames would have to be separated somehow with markers, or the JPEG header would have to be used for that. On the PC the frames would then have to be extracted from the stream somehow. All of this is rather low-level, so I did not like it at all. I definitely needed a ready-made codec, and it would be great to use some standard media protocol. I began collecting information in this direction. So the second option was dropped before it was born, and a third one appeared: use ffmpeg.
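As an illustration of the frame-extraction problem described above, here is a naive Python sketch that cuts complete JPEG images out of a byte buffer using the standard JPEG start-of-image (FF D8) and end-of-image (FF D9) markers. `extract_frames` is a hypothetical helper, not code from the project, and the approach is deliberately simplistic: real JPEG files may embed thumbnails with their own markers, and lost UDP datagrams would still corrupt frames.

```python
SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def extract_frames(buffer):
    """Return (frames, remainder) for a bytes buffer.

    frames    -- list of complete JPEG images found in the buffer
    remainder -- trailing bytes that may be the start of the next frame
    """
    frames = []
    pos = 0
    while True:
        start = buffer.find(SOI, pos)
        if start < 0:
            # No frame start left; keep a trailing 0xFF, it may be
            # the first half of a marker split across two reads.
            tail = buffer[-1:] if buffer.endswith(b"\xff") else b""
            return frames, tail
        end = buffer.find(EOI, start + 2)
        if end < 0:
            return frames, buffer[start:]   # incomplete frame, wait for more
        frames.append(buffer[start:end + 2])
        pos = end + 2
```

The caller would append each newly received chunk to the remainder and call the function again, which is exactly the kind of bookkeeping that made this option feel too low-level.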

ffmpeg is deservedly the best-known and most popular product in this area. Thanks to its well-developed community it can be relied on, so I considered the direction of further work settled. And then I got stuck for two months. It turns out that you have to compile ffmpeg for Android yourself. You also have to get acquainted with the Android NDK, since ffmpeg is written in C. Building ffmpeg for Android on Windows is extremely difficult. Actually, “difficult” is not entirely honest: I have not come across a single mention of a successful build on Windows. There are plenty of questions on the topic, but every forum unanimously recommends setting up Linux. Perhaps the situation has changed by now: here is a question asked under the article on my blog, and my answer. I had no experience with Linux. But I was curious what kind of beast Linux is, so I had to install Ubuntu. It then turned out that there is no up-to-date information on compiling ffmpeg on the Internet. After much suffering, I managed to build the coveted ffmpeg. I described the build process in some detail on my blog.

By this time Mitya had long been gathering dust in the corner, abandoned and forgotten. Ahead of me loomed only new and utterly bleak battles with the Android NDK, the complete lack of API documentation for ffmpeg, immersion in the mysteries of codecs, and compiling ffserver (a streaming video server that is part of the ffmpeg project). With ffserver you can broadcast video and audio from the robot to any PC on the network. My friends already thought I had burned out on robotics, while I cried and pricked myself but kept gnawing at the granite of ffmpeg. I am not sure I could have followed this path to the end.

And then help arrived. Remember the wonderful like-minded people I mentioned, brought together by a common hobby? I must say that by this time Mitya the robot was no longer alone. In another city a twin brother had already appeared: very similar on the outside, but different in places on the inside, sometimes even for the better. I correspond with Luke_Skypewalker, the author of that robot. In one of his letters I found a tip to look at an Android application called IP Webcam. I also learned that this application streams video and sound from an Android device over HTTP, has an API, and can run in the background!

I must admit that after the first part of the article about Mitya was published, the user kib.demon left a comment on the project site advising me to take a closer look at IP Webcam. I looked, but I missed the main thing: the fact that this application has a programming API. I thought I had no use for an application that is itself a webcam, and quickly forgot about it. There was also a comment on the article itself. It did not mention the API, and I overlooked it as well. This mistake cost Mitya three months in the corner.

I do not think all that digging in ffmpeg was in vain. After my posts (there was also an English version), people send me many questions, apparently taking me for an expert in this field. I try to answer as best I can, but most of the questions are still left hanging. The topic remains relevant. I would be very happy if someone continued this work and described it. There is still almost nothing on the Internet about working with ffmpeg programmatically, and what exists is several years out of date. Thanks to its decent codecs, ffmpeg makes it possible to squeeze the maximum of video out of a minimum of traffic. For many projects this would be very useful.

IP Webcam

So, for the Android side of Mitya the decision was made: the IP Webcam application will broadcast the video and audio.

The developer's website has a page describing the functions available through the API. You can also download the source code of a tiny Android project that demonstrates how to interact with IP Webcam programmatically.

Actually, here is the key part of this example:

Remarkably, you can control the orientation of the broadcast video, its resolution, and the picture quality. You can switch between the rear and front cameras and turn the flash LED off. All this can be set in the settings before the broadcast starts, or programmatically using the cheat codes provided by the project's author, as shown in the example.

A small digression: unfortunately, I hit a snag with the flash (Mitya's headlight). It has nothing to do with IP Webcam; I had already run into this problem in my own code. On my device, once the front camera is activated, the flash can no longer be controlled. I suspect this is not limited to the HTC Sensation.

Once the broadcast starts, IP Webcam shows the video from the active camera on the screen. Two customizable buttons are displayed on top of the video. By default, one opens a help dialog and the other opens a menu of available actions. In the example, the author hides the first button and reconfigures the second to send the application to the background.

You can check the audio and video broadcast in a browser, for example, or in VLC media player. Once IP Webcam is broadcasting, the “Smartphone Camera Service” page becomes available in the browser at http://<phone IP>:<port>. There you can view individual frames and play video and sound. To view the video stream in VLC media player, for example, open the URL http://<phone IP>:<port>/videofeed. Two URLs are available for audio playback: http://<phone IP>:<port>/audio.wav and http://<phone IP>:<port>/audio.ogg. The sound is noticeably delayed, but as it turned out later, this is caused by caching during playback. In VLC media player you can set caching to 20 ms, and the lag becomes insignificant.
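The addresses listed above can be collected in a small helper. This is only a sketch in Python; the function name `ipwebcam_urls` and the host and port in the usage note are assumptions for illustration, while the paths are the ones mentioned in the text.

```python
def ipwebcam_urls(host, port):
    """Build the IP Webcam addresses mentioned in the article.

    host -- IP address of the phone (example value, supply your own)
    port -- port configured in IP Webcam (supply your own)
    """
    base = f"http://{host}:{port}"
    return {
        "page": base,                        # "Smartphone Camera Service" page
        "video": f"{base}/videofeed",        # video stream
        "audio_wav": f"{base}/audio.wav",    # audio as WAV
        "audio_ogg": f"{base}/audio.ogg",    # audio as Ogg
    }
```

For example, `ipwebcam_urls("192.168.1.5", 8080)["video"]` gives an address that can be pasted into VLC media player, provided the phone really is reachable at that (made-up) address and port.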

That was the good news; now the bad: I did, of course, run into difficulties. On my device IP Webcam stopped broadcasting video when running in the background. I read the comments on Google Play and found that this happens to all the lucky owners of Android 4. I do not know about Android 3. And just before "discovering" IP Webcam, I had updated Android from 2.3.4 to 4.0.3. The lack of background operation was fatal, because I cannot keep the video-broadcasting activity in the foreground and at the same time open my own activity with Mitya's face, which also controls him. Opening the face activity sent the IP Webcam activity to the background, and the broadcast stopped. Yes, I could turn the part of the application that controls the robot into a service, but what about Mitya's face?

Not finding a way out by myself, I decided to write to the author of the IP Webcam project. The author is "one of ours", in the sense that you can write to him in Russian. Three things bothered me:

I have to thank the author of IP Webcam, Pavel Khlebovich: another great acquaintance. In the course of the correspondence that followed, he not only answered all my questions but also sent me another demo project showing how to work around the background-mode problem on my Android 4.0.3.

Most of all, of course, I was worried about the first two questions. On the second, Pavel told me everything about audio caching in the browser and in VLC media player.

And here is what he wrote about the first question: “It seems that all 4.x phones already use the V4L driver, which does not allow capturing video without a surface to display it on. That is why the video does not work in the background. As a workaround, you can try to put your own Activity on top of mine, declare it as translucent so that the IP Webcam surface is not destroyed, but actually make it opaque and show whatever you need.”

That seems clear enough, but how can one activity be opened on top of another so that both keep working? I thought only one activity can be active at a time (pardon the pun). I ran several experiments, but onPause always fired for the "lower" activity. Pavel helped again: he made a demo project for me. Surprisingly, the idea of placing one activity on top of another works! I polished Pavel's demo project a little (cosmetically) and uploaded it to Mitya's site.

I will describe what was done in this demo.

1. Button1 was added to the layout of the main activity.

2. Another layout, “imageoverlay.xml”, was added with the image “some_picture.png”. It contains nothing else.

3. OverlayActivity.java was added; it displays the contents of imageoverlay.xml. This is the activity that is shown on top of the IP Webcam video. In my main project the activity that controls the robot takes its place. It is also Mitya's face.

4. The following was added to the manifest:

a) The declaration of OverlayActivity:

The launchMode and theme attributes must be set to the specified values.

b) Permission to access the device camera:

5. MainActivity.java was extended:

Clicking the button launches IP Webcam; 4 seconds later our activity with the picture opens on top of it. The picture is deliberately smaller than the screen resolution (at least on the HTC Sensation) so that both activities are visible. 20 seconds after launch, IP Webcam, which has been working in the background, stops, while our "main" activity keeps running.

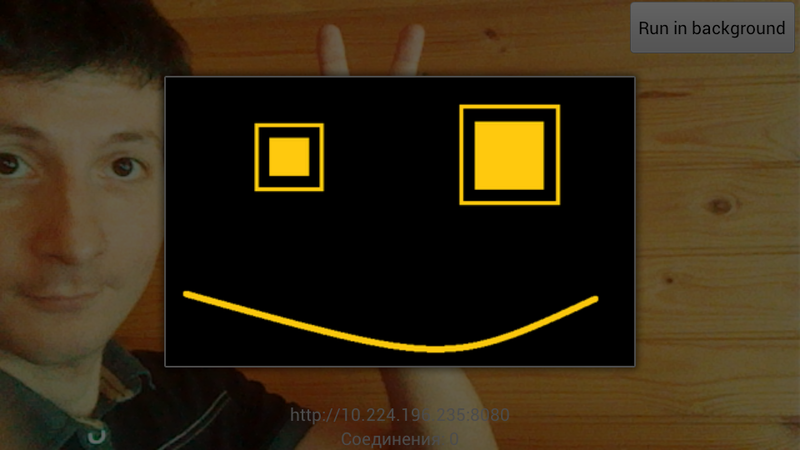

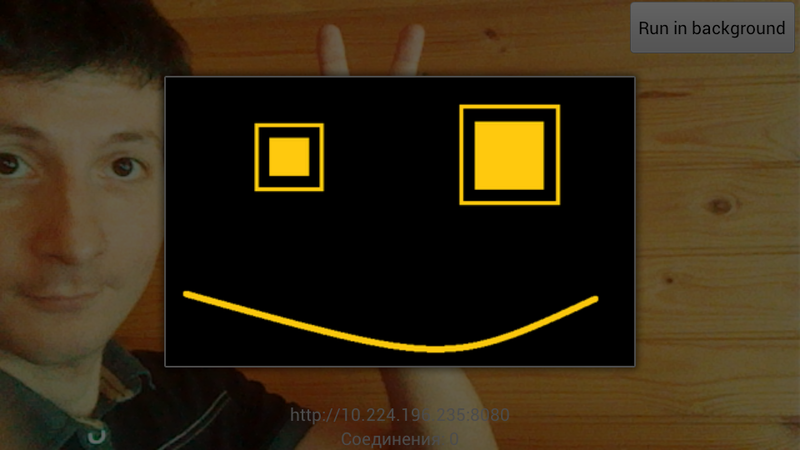

Here’s how it looks on the phone:

After that I only had to change Mitya's Android application very slightly, and I could already see through his camera and hear through his microphone in a browser on the PC.

Video playback in a Windows application

I had not thought about it before, and only at this stage did I realize that the video from IP Webcam is not exactly video. It is MJPEG. That is, the stream is a sequence of frames in JPEG format transmitted one after another. I am rather weak in matters of video transmission, so I did not expect MJPEG to be fast. I was wrong: the result suited me completely.
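MJPEG over HTTP is commonly delivered as a multipart response in which every part is one JPEG frame. The Python sketch below assumes such a layout, with a boundary line and a Content-Length header before each frame; whether IP Webcam formats its stream exactly this way is an assumption, and `read_mjpeg_parts` is a hypothetical name.

```python
import io


def read_mjpeg_parts(stream, boundary):
    """Yield JPEG payloads from a multipart MJPEG stream.

    stream   -- binary file-like object positioned at the start of the body
    boundary -- boundary token from the Content-Type header, without dashes
    """
    marker = b"--" + boundary
    while True:
        line = stream.readline()
        if not line:
            return                       # end of stream
        if line.strip() != marker:
            continue                     # skip blank lines between parts
        length = None
        while True:                      # part headers end with an empty line
            header = stream.readline().strip()
            if not header:
                break
            name, _, value = header.partition(b":")
            if name.strip().lower() == b"content-length":
                length = int(value.strip())
        if length is None:
            return                       # this sketch requires Content-Length
        yield stream.read(length)        # exactly one JPEG frame
```

Each yielded payload is a complete JPEG image that a decoder can turn into a texture or bitmap, which is why an MJPEG player needs no video codec at all.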

To play the MJPEG stream I found a wonderful free product, MJPEG Decoder. Finding a tool for displaying MJPEG was complicated by the fact that the Windows application that controls Mitya uses the XNA framework. MJPEG Decoder supports XNA 4.0, and it also works with WinForms, WPF, Silverlight and Windows Phone 7 applications.

Mitya's source code is still open, but there is a lot going on in it, so here I will give a separate demo example of using MJPEG Decoder in an XNA application to play the video stream from IP Webcam. In the next section I will extend this example with code for playing audio from IP Webcam.

So, create a Windows Game (4.0) project. Add the “MjpegProcessorXna4” library to the project references (download it from the project site or from me).

In the Game1.cs file, declare the use of the MjpegProcessor namespace:

Declare the private field mjpeg:

Declare a texture for video output:

Extend the Initialize method:

Extend the Update method:

1. Pressing the spacebar starts playback of the video stream:

2. Pressing Esc stops playback:

3. Each call to Update fetches the next frame:

Extend the Draw method:

That is almost all. Almost, because everything already works, but the frames change very rarely during playback: once every 1-2 seconds in my case. To fix this, it remains to change the class constructor as follows:

I will give the full contents of the Game1.cs file at the end of the next section, once I have extended it with the streaming audio playback code.

Audio playback in a Windows application

I did not manage to find a way to play the audio streams from IP Webcam using standard XNA tools or even .NET: neither "audio.wav" nor "audio.ogg". I found examples where streaming audio was played with the static MediaPlayer class from the XNA framework, but our streams were too much for it.

The web is full of links to the open-source projects NAudio and SlimDX. But when I ran the demo application of the first one, it failed as well, and I did not manage to master the second. I really did not want to go down to the DirectX level. Quite by chance I found a wonderful way out: it turns out that the same excellent VLC media player ships with an ActiveX library! That is, under Windows I can work with VLC through COM interfaces. The interface description is very sparse, and the VLC project wiki contains mostly outdated information. Unpleasant, but everything worked out.

To work with the library's ActiveX interfaces, you must first register it. Installing VLC media player does not register it, and as I understand it, it just sits as dead weight in the Vlc folder. To register it, run the console (cmd.exe) and type:

Use your own path, of course. If you have Windows Vista or Windows 7, run the console as an administrator.

Now the sound from IP Webcam can be played from anywhere. I practiced in Excel. VBScript would work too, but in VBA the class members show up in autocomplete hints. If you want to try, here is a quick example. Open Excel and press Alt + F11. In the Microsoft Visual Basic for Applications window, choose Tools -> References... and add a reference to “VideoLAN VLC ActiveX Plugin”. Now you can add a module and enter the macro text there:

Start IP Webcam, run the macro, and there will be sound! By the way, video playback can be done the same way.

Returning to our test XNA application: to play streaming audio, do the following.

Add a reference to the VideoLAN VLC ActiveX Plugin COM component to the project. In Solution Explorer it will appear as “AXVLC”. Select the “AXVLC” reference and, in the “Properties” window, set the “Embed Interop Types” property to False.

Declare the use of the AXVLC namespace:

Declare the object:

Extend the Initialize method:

Extend the Update method:

1. Pressing the spacebar starts playback of the audio stream:

2. Pressing Esc stops playback of the audio stream:

So, this is what the result should look like:

Summary

Having completed this stage, I came away with both technical and non-technical conclusions.

Now my friends can travel around my apartment “in” Mitya without leaving their own homes. More precisely, they could if they had a gamepad. It seems I need to support control with more common input devices.

Mitya sees and hears everything but cannot say anything. First I need to expand his facial expressions and teach him some basic gestures, such as “yes”, “no”, and “wag the tail”. Then I will need to add sound in the opposite direction, from the operator to the robot.

Another problem that has surfaced is the constant buzzing of the vertical servo that moves Mitya's head. Because of the weight of the phone, the servo has to work to hold a given angle, so it constantly buzzes and vibrates slightly. This does not affect the video, but it badly drowns out the microphone. As a result, the operator hears nothing but the buzz. In some positions of the head the noise stops, and then everything is perfectly audible. I do not yet know how to solve this problem.

My non-technical takeaway is that I keep discovering new sides of my hobby. Standing in his corner, Mitya the robot seems to live a life of his own: he introduces me to interesting people, some of them very much like me, he manages to bring me job offers, and every evening I hurry home to get something else done on the project. I hope there are no psychiatrists here... In short, I never expected such a return from an evening pastime (both on my part and on Mitya's).

And here is another conclusion that I will try to remember. I understand perfectly well that nothing motivates like victories, and nothing kills interest like their long absence. I think not everyone is as stubbornly tedious as I am. And even I was on the verge of giving it all up. I told myself that I would take up another project for a while, take a break from this one, and then definitely come back. But deep down I knew what that meant, and I tried not to look into that corner. Solving the video transmission problem was a big step in the project, and I will try not to take big steps anymore. A big step is a big risk. I almost took up another project that seemed more attractive, but now I do not even remember it. My head is full of plans for Mitya. So from now on I will set myself smaller tasks. I want many victories; they are what feeds my enthusiasm! I will try to do without big victories in the future. I choose many, many small ones.

It turned out to be difficult to find a relatively easy and working way to transfer video and audio streams from an Android gadget to a remote control application on a PC. Without this step, I categorically did not want to move on, so for quite a long time I was stuck in my stubbornness.

Many thanks to all who helped me, without help I would not have mastered this puzzle. Correspondence with like-minded people brought me to very pleasant and interesting people, I somehow did not consider this side of the hobby before. It turned out the robot Mitya helped me find people close in spirit. And it was a completely unexpected bonus for me. Perhaps this is the main "profit" hobby? Only now I realized that communication is more important to me than just “handicraft”. Why is all this necessary, if not with whom to share, consult, boast?

')

I will wrap up with so beloved lyric component. This article is a continuation of the story about the robot Mitya. In the first part I described how I built my robot, in the second I described what it would take to program it. In this article I will focus on the issue of video and sound transmission from the robot to the operator. I will not repeat here about the project in general and its software architecture in particular - these issues are described in detail in the first part.

The most exciting question: what is the result, how much will the picture and sound slow down? Here is my video response:

I apologize for the tips, but I definitely needed to demonstrate both the original sound and the sound reproduced on the PC. The delay, of course, is. Moreover, when playing, the sound is a little behind the picture. The video lags slightly behind reality (after all, it’s necessary to take into account that this is not an analog system, and it’s the phone that runs the power, though powerful). I tried to control the robot, and I did not experience discomfort with such a delay. So I was completely satisfied with the result - with a slight lag of sound, I am quite ready to accept it.

The quality of the picture displayed by the operator corresponds to what the front-facing camera on HTC Sensation is capable of. I could use the main camera, but then I would have to abandon Miti's mimicry, and I can't go for that.

For ease of navigation I will give a plan for the further content of the article:

1. The evolution of the solution (only for the curious)

2. IP Webcam

3. Video playback in a Windows application

4. Playback sound in a Windows application

5. Summary

Solution evolution (curious only)

So that I am not accused of “multi-lettering” I propose a compromise: people are curious, or sympathizing with the project, or simply compassionate (it’s not for nothing that I wrote all this) can read how I got out and what solutions I found and discarded.

But seriously, I want to share, whenever possible, all the acquired experience, because I am sure that value is not only a positive experience, but also a negative one. What if there are people who can learn from the mistakes of others? In addition, I would be very grateful if someone found or showed a way out of the dead ends that I met. And then it would be an exchange of experience.

In this section, I am writing about dead ends. If you are just looking for a recipe for transferring video and sound from a robot to a PC, you can skip to the next section.

The idea was simple: to embed code that implements the translation of video and audio streams to the level of an Android application, and to embed code that receives and reproduces these streams to the Windows-level management application.

I dismissed all kinds of “knee” solutions using ready-made, non-integrable products into my code right away - ugly.

I started with the video. And for some reason he was deeply convinced that there would be no problems with the formation and translation of the video stream. XXI century after all, someone already has four-core smartphones ... But no. Despite the fact that each phone on board has two cameras, it is not so easy to make a webcam out of the phone. Surprisingly, there are no ready-made software tools built into Android to solve this problem. I don’t know what is the reason, as this task is not a problem for phone manufacturers, but I suspect that everything is explained by the complexity of certification in some countries of devices with such software. Suddenly this is spyware? This is the only explanation I could come up with. But Mitya rings so like motors that a spy from him, like a balloon from a hippopotamus. Therefore, conscience with insomnia does not torment me.

Digging up google, code.google, stackoverflow, android developers and all-all-all, I found some very interesting, but I have not brought anywhere, solutions. And I spent a lot of time on them. In any case, I will describe them and why I refused each of them. Experience may be helpful. For some problems, these solutions are quite suitable, but I did not make friends with them. I will omit the most dead-end options. Leave only those who have a chance.

Option one: use the MediaRecorder class, which is part of Android. I had thought that this was the solution to all my problems - here both video and audio. At the output, I will receive a 3gp stream, transfer it via UDP to PC. But alas. MediaRecorder will not work with streams so easily - it can only with files. Googling, I found a very interesting discussion on the groups.google.com forum. A similar problem was discussed here, and during its discussion a link to another interesting post came up . It describes a sort of "hack", how to deceive MediaRecorder, so that he thinks he is working with the file, and actually slip him to write the output stream to the socket. I will not repeat the implementation description, everything is written there. This option is quite satisfied with the discussion participants. The task was to record video from the phone to a file on a remote computer, bypassing the phone's memory card. It was in the file - by studying this option deeper, I was convinced that it is not suitable for webcasting. The fact is that MediaRecorder on my device works as follows: when a video recording starts, a file is created and a space is reserved in its head for recording the size of the stream. This field is filled only upon completion of the video. And for this, the output stream of MediaPlayer must support positioning (Seek operation). A file stream, for example, is capable of this, and when broadcasting over a network, the stream, naturally, is strictly sequential and will not jump to its beginning to fill the field with the final size. But the guys had a task to write to a file on a remote computer. Therefore, they first "merged" their stream into a file, and then opened this file for writing and entered its size in the right place. After that, such a file could already be played with anything.

The task in my project is different: I do not have a moment to complete the recording. And I can not determine the size of the video stream. Those. MediaPlayer output stream is not intended for webcast. At least on my machine. This option had to be thrown away.

Well, I decided to try to do everything myself. Android provides the ability to get raw footage from the camera of the device. This function is described perfectly everywhere, the Internet is filled with examples of converting the received stream with a frame from the device’s camera to a jpeg-file and saving this file to a memory card. In my case, the stream of jpeg-frames could be driven over UDP on a PC. But what if the stream is broken (after all, this is UDP)? It is necessary to somehow separate the frames with labels or use the jpeg header for this. On the PC, you have to somehow extract the frames from the stream. All this is somehow low-level, and therefore I did not like it at all. Definitely need to use a ready-made codec. And it would be great to use some standard media protocol. And I began to collect information in this direction. So the second option was dropped without being born and the third one appeared: use ffmpeg.

ffmpeg is deservedly the best-known and most popular product in this field. With its well-developed community it seemed safe to rely on, so I considered the direction of further work settled. And then I got stuck for two months. It turned out that ffmpeg for Android has to be compiled by hand. Along the way you have to get acquainted with the Android NDK, since ffmpeg is written in C. Building ffmpeg for Android is extremely difficult on Windows. "Extremely difficult" is not entirely honest, in fact: I did not come across a single mention of a successful build on Windows. There are plenty of questions on this topic, but every forum unanimously recommends setting up Linux. The situation may have changed by now: here is a question under the article on my blog and my answer. I had no experience with Linux, but I was curious what kind of beast it was, so I installed Ubuntu. It then turned out that there was no up-to-date information on compiling ffmpeg on the Internet. After a lot of suffering, I managed to build the coveted ffmpeg. I described the build process in some detail on my blog.

By this time Mitya had long been gathering dust in the corner, abandoned and forgotten. Ahead of me loomed only new and utterly bleak battles with the Android NDK, the complete lack of API documentation for ffmpeg, immersion in the mysteries of codecs, and compiling ffserver (a streaming video server that is part of the ffmpeg project). With ffserver you can broadcast video and audio from the robot to any PC on the network. My friends already thought I had given up on robotics, while I cried and pricked myself but kept gnawing at the granite of ffmpeg. I am not sure I could have followed this path to the end.

And then help arrived. Remember I mentioned the wonderful like-minded people I came to know through our shared hobby? I should say that by this time the robot Mitya was no longer alone. In another city a twin brother had appeared: very similar on the outside, but different in places on the inside, and sometimes for the better. I correspond with Luke_Skypewalker, the author of that robot. In one of his letters I found a tip to look at an Android application called IP Webcam. I also learned that this application streams video and sound from an Android device over HTTP, has an API and can work in the background!

I must admit that after the first part of the article about the robot Mitya was published, I received a comment on the project site from the user kib.demon advising me to take a closer look at IP Webcam. I looked, but missed the main thing: that the application has a programmatic API. I thought I had no use for an application that merely implements a webcam, and quickly forgot about it. There was also a comment on the article itself; it said nothing about the API, and I overlooked it too. This mistake cost Mitya three months in the corner.

I do not think all that digging into ffmpeg was in vain. After my posts (there was an English version too) I get a lot of questions from people who apparently take me for an expert in this field. I try to answer as best I can, but most of the questions still hang in the air. The topic remains relevant, and I would be very glad if someone continued this work and wrote it up. There is still almost nothing on the Internet about working with ffmpeg programmatically, and what there is has been out of date for several years. Thanks to its decent codecs, ffmpeg can squeeze the most video out of the least traffic. For many projects that would be very useful.

IP Webcam

So, I settled the Android side of the robot Mitya: the IP Webcam application will be used to broadcast video and audio.

On the developer’s website there is a page describing the functions available in the API. You can also download the source code of a tiny Android project that demonstrates how to programmatically interact with IP Webcam.

Actually, here is the key part of this example:

    Intent launcher = new Intent().setAction(Intent.ACTION_MAIN).addCategory(Intent.CATEGORY_HOME);
    Intent ipwebcam = new Intent()
        .setClassName("com.pas.webcam", "com.pas.webcam.Rolling")
        .putExtra("cheats", new String[] {
            "set(Photo,1024,768)",         // set photo resolution to 1024x768
            "set(DisableVideo,true)",      // Disable video streaming (only photo and immediate photo)
            "reset(Port)",                 // Use default port 8080
            "set(HtmlPath,/sdcard/html/)", // Override server pages with ones in this directory
        })
        .putExtra("hidebtn1", true)                // Hide help button
        .putExtra("caption2", "Run in background") // Change caption on "Actions..."
        .putExtra("intent2", launcher)             // And give button another purpose
        .putExtra("returnto", new Intent().setClassName(ApiTest.this, ApiTest.class.getName())); // Set activity to return to
    startActivity(ipwebcam);

Remarkably, you can control the orientation of the broadcast video, its resolution and picture quality. You can switch between the rear and front cameras and turn the flash LED on and off. All of this can be set in the settings before starting the broadcast, or programmatically using the cheat codes provided by the author of the project, as shown in the example.

A small digression: unfortunately, I hit a snag with the flash (Mitya's headlight). It has nothing to do with IP Webcam; I ran into this problem earlier in my own code. On my device, once the front camera is activated, the flash can no longer be controlled. I suspect this is not limited to the HTC Sensation.

Once the broadcast starts, IP Webcam displays the video from the active camera on the screen. Two customizable buttons are shown on top of the video. By default, one opens a help dialog and the other opens a menu of available actions. In the example, the author hides the first button and reconfigures the second to send the application to the background.

You can check the audio and video broadcast in a browser, for example, or in the VLC media player. Once the IP Webcam broadcast is started, the "Smartphone Camera Service" page becomes available in the browser at http://<phone IP>:<port>. There you can view individual frames and play video and sound. To watch the video stream in, say, VLC media player, open the URL http://<phone IP>:<port>/videofeed. Two URLs are available for audio playback: http://<phone IP>:<port>/audio.wav and http://<phone IP>:<port>/audio.ogg. The sound is noticeably delayed, but as it turned out later, this is caused by caching during playback. In VLC media player you can set caching to 20 ms, and the lag becomes negligible.
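The addresses above are easy to mistype, so here is a tiny Java sketch that builds them from the phone's IP address and port. The class IpWebcamUrls and its method names are hypothetical helpers invented for this illustration; only the paths (/videofeed, /audio.wav, /audio.ogg) come from the description above, and 192.168.1.40:8080 is just the address used elsewhere in this article.

```java
import java.net.URI;

final class IpWebcamUrls {
    private final String base;

    IpWebcamUrls(String host, int port) {
        this.base = "http://" + host + ":" + port;
    }

    /** The "Smartphone Camera Service" page. */
    URI servicePage() { return URI.create(base); }

    /** The MJPEG video stream. */
    URI videoFeed() { return URI.create(base + "/videofeed"); }

    /** The audio stream in WAV format. */
    URI audioWav() { return URI.create(base + "/audio.wav"); }

    /** The audio stream in Ogg format. */
    URI audioOgg() { return URI.create(base + "/audio.ogg"); }

    public static void main(String[] args) {
        IpWebcamUrls phone = new IpWebcamUrls("192.168.1.40", 8080);
        System.out.println(phone.servicePage());
        System.out.println(phone.videoFeed());
        System.out.println(phone.audioWav());
        System.out.println(phone.audioOgg());
    }
}
```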

That was the good news; now the bad: I ran into difficulties, of course. On my device, IP Webcam stopped streaming video when it was in the background. I read the comments on Google Play and found that this happens to all the lucky owners of Android 4. I do not know about Android 3. And right before "discovering" IP Webcam, I had updated Android from 2.3.4 to 4.0.3. The inability to work in the background was fatal, because I could not keep the activity broadcasting video on top while also opening my own activity with Mitya's face, which also controls the robot. Launching the activity with the face sent the IP Webcam activity to the background, and the broadcast stopped. Yes, I could move the part of the application that controls the robot into a service, but what about Mitya's face?

Unable to find a way out on my own, I decided to write to the author of the IP Webcam project. The author is "one of ours", meaning I could write to him in Russian. Three things bothered me:

- The broadcast stops in the background.

- The sound lags behind the picture.

- IP Webcam displays the phone's IP address incorrectly. This is not critical, but confusing at first; it is only a matter of wrong text on the phone screen.

I have to thank the author of IP Webcam, Pavel Khlebovich. Another great acquaintance. In the correspondence that followed, he not only answered all my questions but also sent me another demo project showing how to get around the background-mode problem on my Android 4.0.3.

I was, of course, most worried about the first two issues. On the second, Pavel explained everything about audio caching in the browser and in VLC media player.

On the first issue he wrote: “It seems that all 4.x phones already use the V4L driver, which does not allow capturing video without a surface to display it on. That is why video does not work in the background. As a workaround, you can try putting your own activity on top of mine and declaring it translucent, so that the IP Webcam surface is not destroyed, while actually making it opaque and showing whatever you need.”

That seems clear enough, but how can one activity be opened on top of another so that both keep working? I thought only one activity could be active at a time (pardon the pun). I ran several experiments, but the "lower" activity always got onPause. Pavel helped again: he made a demo project for me. Surprisingly, the idea of placing one activity on top of another works! I polished Pavel's demo project a little (cosmetically) and uploaded it to the robot Mitya site.

I will describe what has been done in this demonstration.

1. Button1 is added to the layout of the main activity.

2. Added another layout “imageoverlay.xml” with the image “some_picture.png”. There is nothing more in it.

3. Added OverlayActivity.java, which displays the contents of imageoverlay.xml. This is the activity that will be displayed on top of the IP Webcam video. In my main project, its place will be taken by the activity that controls the robot, which is also Mitya's face.

4. The following should be added to the manifest:

a) The OverlayActivity declaration:

    <activity android:name="OverlayActivity"
              android:launchMode="singleInstance"
              android:theme="@android:style/Theme.Dialog">
    </activity>

The launchMode and theme attributes must be set to exactly these values.

b) Permission to access the device camera:

    <uses-permission android:name="android.permission.CAMERA"/>

5. Supplement MainActivity.java:

    package ru.ipwebcam.android4.demo;

    import android.app.Activity;
    import android.content.Intent;
    import android.os.Bundle;
    import android.os.Handler;
    import android.util.Log;
    import android.view.View;
    import android.view.View.OnClickListener;
    import android.widget.Button;

    public class MainActivity extends Activity {
        static String TAG = "IP Webcam demo";
        Handler h = new Handler();

        public void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            setContentView(R.layout.main);

            final Button videoButton = (Button)findViewById(R.id.button1);
            videoButton.setOnClickListener(new OnClickListener() {
                public void onClick(View v) {
                    Intent launcher = new Intent().setAction(Intent.ACTION_MAIN).addCategory(Intent.CATEGORY_HOME);
                    Intent ipwebcam = new Intent()
                        .setClassName("com.pas.webcam", "com.pas.webcam.Rolling")
                        .putExtra("hidebtn1", true)                // Hide help button
                        .putExtra("caption2", "Run in background") // Change caption on "Actions..."
                        .putExtra("intent2", launcher);            // And give button another purpose

                    // After 4 seconds, open our own activity on top of IP Webcam
                    h.postDelayed(new Runnable() {
                        public void run() {
                            startActivity(new Intent(MainActivity.this, OverlayActivity.class));
                            Log.i(TAG, "OverlayActivity started");
                        }
                    }, 4000);

                    startActivityForResult(ipwebcam, 1);

                    // After 20 seconds, tell IP Webcam to stop
                    h.postDelayed(new Runnable() {
                        public void run() {
                            sendBroadcast(new Intent("com.pas.webcam.CONTROL").putExtra("action", "stop"));
                            Log.i(TAG, "Video is stopped");
                        }
                    }, 20000);

                    Log.i(TAG, "Video is started");
                }
            });
        }
    }

Clicking the button launches IP Webcam; 4 seconds later our activity with the picture opens on top of it. The picture is deliberately made smaller than the screen resolution (at least for the HTC Sensation) so that both activities are visible. 20 seconds after launch, IP Webcam running in the background is stopped, while our "main" activity keeps working.

Here’s how it looks on the phone:

After that I only had to change Mitya's Android application very slightly, and I could already see through its camera and hear through its microphone in a browser on the PC.

Playing video in a Windows application

I had not thought about it before, and only at this stage did I realize that the video from IP Webcam is not exactly video. It is MJPEG, that is, the stream is a sequence of frames in JPEG format. I am rather weak on video transmission, so I did not expect much speed from MJPEG. I was wrong: the result suited me completely.

To play the MJPEG stream I found a wonderful free product, MJPEG Decoder. Finding a tool for displaying MJPEG was complicated by the fact that the Windows application controlling Mitya uses the XNA framework. MJPEG Decoder supports XNA 4.0, and it also works with WinForms, WPF, Silverlight and Windows Phone 7 applications.

Mitya's source code is still open, but there is a lot going on in it, so here I will give a separate demo of using MJPEG Decoder in an XNA application to play the video stream from IP Webcam. In the next section I will extend this example with code for playing audio from IP Webcam.

So, create a Windows Game (4.0) project. Add the "MjpegProcessorXna4" library to the project references (download it from the project site or from me).

In the Game1.cs file, declare the use of the MjpegProcessor namespace:

    using MjpegProcessor;

Declare the private mjpeg field:

    private MjpegDecoder mjpeg;

Declare a texture for video output:

    private Texture2D videoTexture;

Supplement the Initialize method:

    this.mjpeg = new MjpegDecoder();

Supplement the Update method:

1. On pressing the spacebar, start playback of the video stream:

    this.mjpeg.ParseStream(new Uri(@"http://192.168.1.40:8080/videofeed"));

2. On pressing Esc, stop playback:

    this.mjpeg.StopStream();

3. Fetch the next frame on every call to Update:

    this.videoTexture = this.mjpeg.GetMjpegFrame(this.GraphicsDevice);

Supplement the Draw method:

    if (this.videoTexture != null)
    {
        this.spriteBatch.Begin();
        Rectangle rectangle = new Rectangle(
            0,
            0,
            this.graphics.PreferredBackBufferWidth,
            this.graphics.PreferredBackBufferHeight);
        this.spriteBatch.Draw(this.videoTexture, rectangle, Color.White);
        this.spriteBatch.End();
    }

That is almost all. Almost, because everything already works, but the frames change very rarely during playback: every 1-2 seconds for me. To fix this, change the class constructor as follows:

    public Game1()
    {
        this.IsFixedTimeStep = false;
        this.graphics = new GraphicsDeviceManager(this);
        this.graphics.SynchronizeWithVerticalRetrace = false;
        Content.RootDirectory = "Content";
    }

I will give the full contents of the Game1.cs file at the end of the next section, once I have added the code for playing streaming audio.

Sound playback in a Windows application

I could not find a way to play the audio streams from IP Webcam using standard XNA or even .NET tools, neither "audio.wav" nor "audio.ogg". I found examples where streaming audio was played with the static MediaPlayer class from the XNA framework, but our streams proved too tough for it.

The web is full of links to the open-source projects NAudio and SlimDX. But for the first, I ran the demo application and it failed as well, and the second I could not get the hang of. I really did not want to drop down to the DirectX level. Quite by accident I found a wonderful way out. It turns out that the same great VLC media player ships with an ActiveX library! That is, under Windows I can work with VLC through COM interfaces. The interface description is very sparse, and the VLC project wiki contains mostly outdated information. Unpleasant, but everything worked out.

To work with the library's ActiveX interfaces, you must first register it. It is not registered when VLC media player is installed and, as far as I understand, just sits as dead weight in the VLC folder. To register it, open the console (cmd.exe) and type:

    regsvr32 "c:\Program Files (x86)\VideoLAN\VLC\axvlc.dll"

Adjust the path as needed, of course. On Windows Vista or Windows 7, run the console as administrator.

Now we can play the sound from IP Webcam from anywhere. I practiced in Excel. VBScript would work too, but in VBA class members show up in autocompletion hints. Here is a quick example if you want to try. Open Excel and press Alt + F11. In the Microsoft Visual Basic for Applications window, go to Tools -> References... and tick "VideoLAN VLC ActiveX Plugin". Now you can add a module and enter the macro text:

    Sub TestAxLib()
        Dim vlc As New AXVLC.VLCPlugin2
        vlc.Visible = False
        vlc.playlist.items.Clear
        vlc.AutoPlay = True
        vlc.Volume = 200
        vlc.playlist.Add "http://192.168.1.40:8080/audio.wav", Null, Array(":network-caching=5")
        vlc.playlist.playItem (0)
        MsgBox "Hello world!"
        vlc.playlist.stop
    End Sub

Start IP Webcam, run the macro, and there will be sound! By the way, video playback can be done the same way.

Returning to our test XNA application: to play streaming audio, do the following.

Add a reference to the VideoLAN VLC ActiveX Plugin COM component to the project. In Solution Explorer it will appear as "AXVLC". Select the "AXVLC" reference and, in the Properties window, set the "Embed Interop Types" property to False.

Declare the use of the AXVLC namespace:

    using AXVLC;

Declare the object:

    private AXVLC.VLCPlugin2 audio;

Supplement the Initialize method:

    this.audio = new AXVLC.VLCPlugin2Class();

Supplement the Update method:

1. On pressing the spacebar, start playing the audio stream:

    this.audio.Visible = false;
    this.audio.playlist.items.clear();
    this.audio.AutoPlay = true;
    this.audio.Volume = 200;
    string[] options = new string[] { @":network-caching=20" };
    this.audio.playlist.add(
        @"http://192.168.1.40:8080/audio.wav",
        null,
        options);
    this.audio.playlist.playItem(0);

2. On pressing Esc, stop playback of the audio stream:

    if (this.audio.playlist.isPlaying)
    {
        this.audio.playlist.stop();
    }

So, this is what the result should look like:

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using Microsoft.Xna.Framework;
    using Microsoft.Xna.Framework.Audio;
    using Microsoft.Xna.Framework.Content;
    using Microsoft.Xna.Framework.GamerServices;
    using Microsoft.Xna.Framework.Graphics;
    using Microsoft.Xna.Framework.Input;
    using Microsoft.Xna.Framework.Media;
    using AXVLC;
    using MjpegProcessor;

    namespace WindowsGame1
    {
        /// <summary>
        /// Main game class.
        /// </summary>
        public class Game1 : Microsoft.Xna.Framework.Game
        {
            GraphicsDeviceManager graphics;
            SpriteBatch spriteBatch;

            /// <summary>
            /// Texture for video output.
            /// </summary>
            private Texture2D videoTexture;

            /// <summary>
            /// MJPEG decoder.
            /// Plays the video stream (more precisely, MJPEG) broadcast by IP Webcam.
            /// </summary>
            /// <remarks>
            /// Requires a reference to the .NET library "MjpegProcessorXna4".
            /// </remarks>
            private MjpegDecoder mjpeg;

            /// <summary>
            /// VLC plugin object.
            /// Plays the audio stream broadcast by IP Webcam.
            /// </summary>
            /// <remarks>
            /// Uses the ActiveX library of VLC (www.videolan.org).
            /// Requires VLC to be installed, the ActiveX library axvlc.dll to be registered,
            /// and a reference to the COM component "VideoLAN VLC ActiveX Plugin" (shown as "AXVLC").
            /// </remarks>
            private AXVLC.VLCPlugin2 audio;

            /// <summary>
            /// Constructor.
            /// </summary>
            public Game1()
            {
                this.IsFixedTimeStep = false;
                this.graphics = new GraphicsDeviceManager(this);
                this.graphics.SynchronizeWithVerticalRetrace = false;
                Content.RootDirectory = "Content";
            }

            /// <summary>
            /// Initialization.
            /// </summary>
            protected override void Initialize()
            {
                base.Initialize();

                // Create the MJPEG decoder for video:
                this.mjpeg = new MjpegDecoder();

                // Create the COM object for audio playback:
                this.audio = new AXVLC.VLCPlugin2Class();
            }

            /// <summary>
            /// LoadContent will be called once per game and is the place to load
            /// all of your content.
            /// </summary>
            protected override void LoadContent()
            {
                // Create a new SpriteBatch, which can be used to draw textures.
                spriteBatch = new SpriteBatch(GraphicsDevice);
            }

            /// <summary>
            /// Allows the game to run logic such as updating the world,
            /// checking for collisions, gathering input, and playing audio.
            /// </summary>
            /// <param name="gameTime">Provides a snapshot of timing values.</param>
            protected override void Update(GameTime gameTime)
            {
                // Space starts playback:
                if (Keyboard.GetState().IsKeyDown(Keys.Space))
                {
                    // Start the video stream:
                    this.mjpeg.ParseStream(new Uri(@"http://192.168.1.40:8080/videofeed"));

                    // Start the audio stream:
                    this.audio.Visible = false;
                    this.audio.playlist.items.clear();
                    this.audio.AutoPlay = true;
                    this.audio.Volume = 200;
                    string[] options = new string[] { @":network-caching=20" };
                    this.audio.playlist.add(
                        @"http://192.168.1.40:8080/audio.wav",
                        null,
                        options);
                    this.audio.playlist.playItem(0);
                }

                // Esc stops playback:
                if (Keyboard.GetState().IsKeyDown(Keys.Escape))
                {
                    // Stop the video stream:
                    this.mjpeg.StopStream();

                    // Stop the audio stream:
                    if (this.audio.playlist.isPlaying)
                    {
                        this.audio.playlist.stop();
                    }
                }

                // Fetch the next video frame:
                this.videoTexture = this.mjpeg.GetMjpegFrame(this.GraphicsDevice);

                base.Update(gameTime);
            }

            /// <summary>
            /// This is called when the game should draw itself.
            /// </summary>
            /// <param name="gameTime">Provides a snapshot of timing values.</param>
            protected override void Draw(GameTime gameTime)
            {
                GraphicsDevice.Clear(Color.CornflowerBlue);

                if (this.videoTexture != null)
                {
                    this.spriteBatch.Begin();
                    Rectangle rectangle = new Rectangle(
                        0,
                        0,
                        this.graphics.PreferredBackBufferWidth,
                        this.graphics.PreferredBackBufferHeight);
                    this.spriteBatch.Draw(this.videoTexture, rectangle, Color.White);
                    this.spriteBatch.End();
                }

                base.Draw(gameTime);
            }
        }
    }

Summary

Closing this stage left me with both technical and non-technical conclusions.

Now my friends can travel around my apartment "in" Mitya without leaving their own homes. Or rather, they could if they had a gamepad. It seems I need to add control through more common input devices.

Mitya sees and hears everything but cannot say anything. First I need to expand his facial expressions and teach him some basic gestures such as "yes", "no" and "wag the tail". After that I will need to do sound playback in the opposite direction, from the operator to the robot.

Another problem that has come up is the constant buzzing of the vertical servo that drives Mitya's head. Because of the phone's weight, the servo has to work to hold the given angle, so it buzzes constantly and vibrates slightly. This does not affect the video, but it drowns out the microphone: as a result, the operator hears nothing but the buzz. In some head positions the buzzing stops, and then everything is perfectly audible. I do not yet know how to solve this problem.

My non-technical experience is that I keep discovering new sides of my hobby. While standing in the corner, the robot Mitya seems to live a life of his own: he introduces me to interesting people, some of them very much like me, he manages to find me job offers, and every evening I hurry home to do something more on this project. I hope there are no psychiatrists here... In short, I never expected such a return from an evening pastime (both on my side and on Mitya's).

And here is one more conclusion that I will try to remember. I understand perfectly well that nothing motivates like winning, and nothing kills interest like a long absence of victories. I think not everyone is as stubbornly tedious as I am, and even I was on the verge of giving it all up. I told myself that I would take up another project for a while, take a break from this one, and then definitely come back. But deep down I knew what that meant and tried not to look at that corner. Solving the video transmission problem was a big step in the project for me, and I will try not to take big steps anymore. A big step is a big risk. I almost took up another project that seemed more attractive to me, yet now I do not even remember it. My head is full of plans for Mitya. So from now on I will set myself smaller tasks. I want many victories; they are what feeds my enthusiasm! I will try to do without big victories in the future. I choose many, many small ones.

Source: https://habr.com/ru/post/144748/
