Stream encryption at 10 Gbit/s? Yes, in parallel

Over the past couple of decades the IT industry has made huge progress: many new technologies, services and programming languages have appeared. Most importantly, the number of IT users has grown to gigantic proportions. This is especially noticeable in traffic volumes: large services such as Google, Facebook and Twitter process petabytes of traffic, and everyone knows what kind of data centers they run. However, I am not going to talk about cloud technologies and NoSQL solutions here. I would like to look at this situation from a different angle, namely from the point of view of security.

Imagine that you have a data center with a fat pipe of traffic coming into it. How safe do you think the traffic reaching you is? I would not be naive about it: not safe at all. There are too many articles on the Internet about how to write viruses and network worms and how to mount DoS and DDoS attacks, the number of script kiddies is going through the roof, and the ability to hire professional hackers no longer surprises anyone.

At this point you start thinking about how to protect yourself from all this horror, and the brave guys from some IT consultancy tell you: install an intrusion detection system, a firewall, an IPS and antivirus absolutely everywhere. To the reasonable question “How many of these boxes do we need?” you are told 10, 20, 100 or 500, depending on how fat your pipe is.

And here the question I would like to talk about arises: how do you parallelize traffic processing in order to protect it? This question is very important for several reasons:

1. Security is an integral part of the foundation on which your system / service / anything should be based.

2. Security mechanisms are very resource-intensive (remember Kaspersky on a home computer).

3. Security mechanisms gain almost nothing from techniques such as caching, execution prediction, etc.

On the one hand, to make our lives better, we can invent new ways to improve the performance of the protection tools themselves. On the other hand, we can get a little creative: leave the protection tools as they are and, instead of improving them, come up with a way to distribute traffic processing across them.

At this point the most impatient people suggest installing something like F5 Big-IP, which will distribute traffic to servers running Checkpoint Security Gateway, and then enjoying life. In principle this is a good solution, but it stops working when:

• you want to reconfigure the protection settings easily and effortlessly (change firewall rules, add IP addresses to VPNs, etc.);

• you want to seamlessly add another protection instance;

• one or, even worse, several protection instances have failed.

Plus, you are still limited to the existing protection products, because if you want to write your own, then you will... well... simply wear yourself out.

But do not be afraid, we will help you! Our company has a cool thing called Crossbeam. And it does not just sit there: we actively use it to develop and port our own protection tools. Let me briefly explain what it is.

Crossbeam is a platform designed for end-to-end traffic processing by security tools. It provides:

• powerful hardware configuration;

• mechanisms for the distribution and balancing of traffic;

• tools for developing your own applications.

The platform consists of the following components:

1. Hardware

1) Chassis

2) Power and ventilation units

3) Modules:

i. Network (NPM - network processing module)

ii. Applications (APM - application processing module)

iii. Management (CPM - control processing module)

2. Software

1) XOS operating system

2) Virtual Application Processors (VAP)

3) Applications

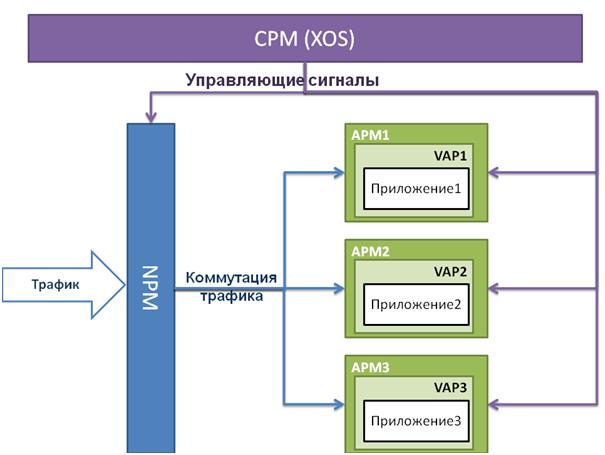

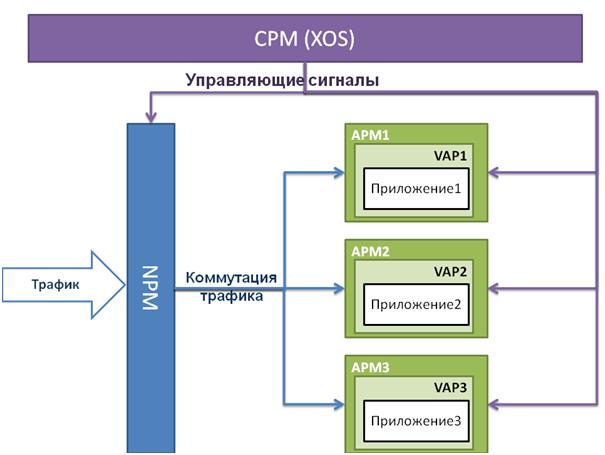

Traffic processing can be described as follows:

Incoming traffic arrives at a port of the network module (NPM), which distributes it across the application modules (APM). In short, it works like this:

1. NPM parses the IP packet header.

2. The following data is extracted from the header:

a. Source IP address

b. Destination IP address

c. Source TCP port

d. Destination TCP port

These values are combined into a tuple. All packets with the same tuple make up a traffic flow.

3. The resulting tuple is looked up in the so-called active network flow table (AFT). The lookup result is the number of the APM that will process the traffic.

4. If the lookup succeeds, the packet is sent to the corresponding APM.

5. If the lookup fails, the NPM asks the CPM, which stores the configuration of the entire platform, to add a new entry to the AFT.

This algorithm answers the question raised at the beginning: how to parallelize traffic processing in order to protect it. Note that this way of distributing traffic is fine-grained enough to balance the load, yet it never splits a user session across several compute nodes.
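The lookup steps above can be sketched in a few lines of Python. This is only a toy model: the class name `FlowTable`, the round-robin choice of APM on a miss and the sample addresses are assumptions made for illustration, since the article does not describe how the CPM actually picks an APM for a new flow.

```python
from typing import Dict, List, Tuple

# (source IP, destination IP, source TCP port, destination TCP port)
FlowKey = Tuple[str, str, int, int]


class FlowTable:
    """Toy model of the active flow table (AFT) consulted by the NPM."""

    def __init__(self, apm_ids: List[int]) -> None:
        self.apm_ids = list(apm_ids)
        self.table: Dict[FlowKey, int] = {}
        # Assumption: new flows are assigned round-robin; the real policy
        # used by the CPM is not described in the article.
        self.next_index = 0

    def lookup(self, key: FlowKey) -> int:
        """Return the APM number for a packet with the given tuple."""
        apm = self.table.get(key)
        if apm is None:
            # Miss: stands in for the NPM asking the CPM to add an entry.
            apm = self.apm_ids[self.next_index % len(self.apm_ids)]
            self.next_index += 1
            self.table[key] = apm
        return apm


aft = FlowTable([0, 1, 2])
flow_a = ("10.0.0.1", "192.0.2.10", 40000, 80)
flow_b = ("10.0.0.2", "192.0.2.10", 40001, 80)
# Packets of the same flow always land on the same APM.
print(aft.lookup(flow_a), aft.lookup(flow_b), aft.lookup(flow_a))
```

Because the table is keyed on the whole tuple, different flows can go to different APMs while every packet of one flow stays on a single APM.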

In addition, the platform tools allow us to:

• reconfigure the protection settings easily and naturally, since this is done once, in one place, and applies to all instances;

• automatically transfer the load to the remaining protection instances (APMs) if one or more instances fail.

All in all, a very interesting piece of hardware with good functionality.

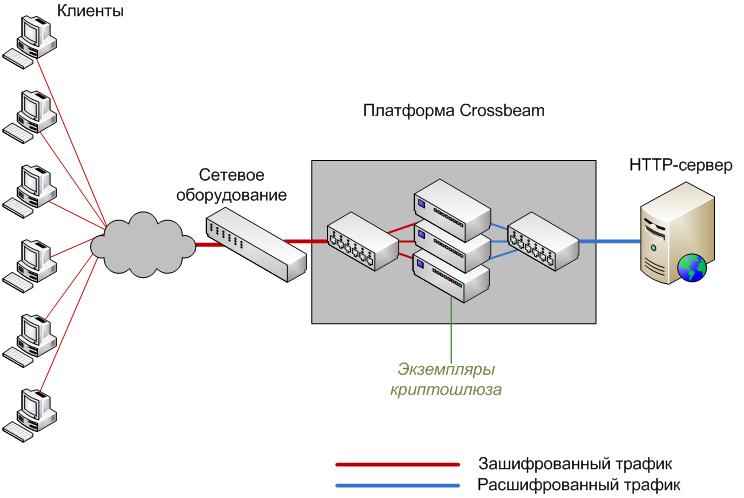

When it arrived, we decided it would be a shame to let such a thing go to waste and developed a cryptographic gateway specifically for it. As we understand it, this is a program that sits in front of our data center and:

• decrypts packets in traffic going to the data center, thereby offloading demanding computation from the servers;

• encrypts packets in traffic coming from the data center, thereby ensuring secure transmission of information to clients.

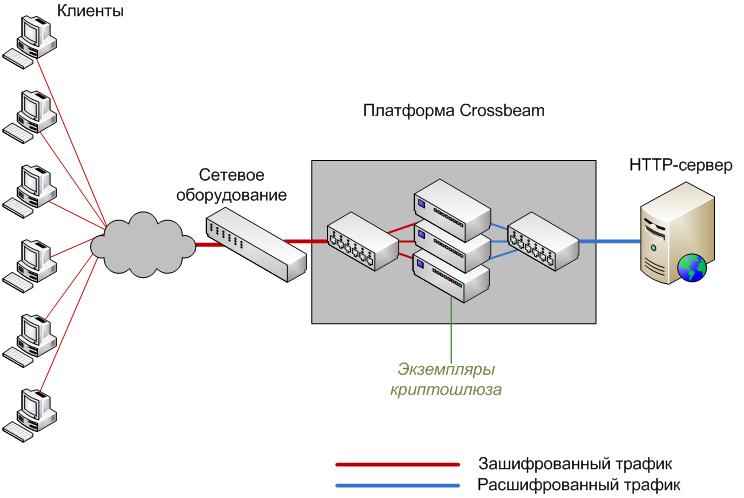

For clarity, I will give the scheme

This gateway does the following:

• distributes incoming packets across the APMs;

• encrypts the contents of each packet using the GOST 28147-89 algorithm;

• sends the packet on to its destination;

• does the same for packets going in the opposite direction, except that it decrypts the contents.

Each client (in fact, each IP address) must have its own key.
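A minimal sketch of the per-client key selection might look as follows. Everything here is hypothetical: the `Gateway` class, the sample addresses and keys, and above all the cipher, which is a plain XOR stand-in used only so the example runs. It is not GOST 28147-89 and is not secure. The sketch also assumes that the client is the source address of traffic going to the data center and the destination address of traffic coming from it.

```python
from itertools import cycle
from typing import Dict


def xor_stand_in(data: bytes, key: bytes) -> bytes:
    """Placeholder for the real cipher. XOR is NOT GOST 28147-89."""
    return bytes(b ^ k for b, k in zip(data, cycle(key)))


class Gateway:
    """Picks a key by client IP address and transforms the packet payload."""

    def __init__(self, client_keys: Dict[str, bytes]) -> None:
        self.client_keys = client_keys

    def to_datacenter(self, src_ip: str, payload: bytes) -> bytes:
        # Traffic going to the data center is decrypted with the key
        # of the client that sent it.
        return xor_stand_in(payload, self.client_keys[src_ip])

    def from_datacenter(self, dst_ip: str, payload: bytes) -> bytes:
        # Traffic leaving the data center is encrypted with the key
        # of the client it is addressed to.
        return xor_stand_in(payload, self.client_keys[dst_ip])


gateway = Gateway({
    "198.51.100.7": bytes(range(32)),       # hypothetical 256-bit keys
    "198.51.100.8": bytes(range(32, 64)),
})
sealed = gateway.from_datacenter("198.51.100.7", b"<html>hello</html>")
print(gateway.to_datacenter("198.51.100.7", sealed))
```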

We started development on plain CentOS, because what runs on an APM is nothing more than a trimmed-down and patched RHEL. Having written and debugged our crypto gateway under CentOS, we began porting it to Crossbeam. Armed with the SDK, the documentation and contact with the Crossbeam developers themselves, we spent about half a year on it. The essence of the port is to implement the interface through which the application communicates with the platform and to take the traffic redistribution capabilities into account.

The first problem we ran into was debugging. There is no gdb on the APM, so at first we did not know how to debug. But after thinking hard and looking closely at the APMs, we spotted a COM port. The solution then came quickly: remote debugging over the COM port. We set the kernel parameters for the APM's Linux image, enabled kdb debugging via proc, and it worked. By the way, you can read about debugging in our colleagues' post (http://habrahabr.ru/company/neobit/blog/141067).

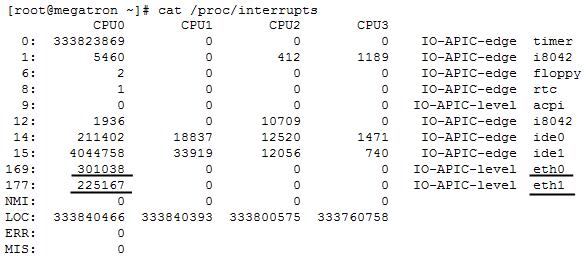

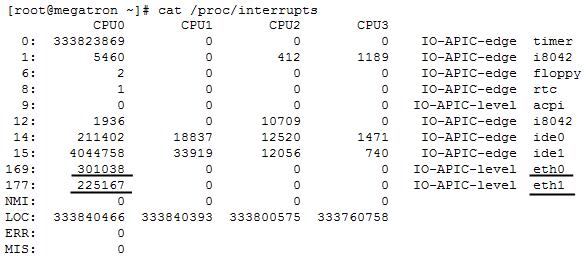

The second problem was distributing the load evenly across the processor cores. Initially the crypto gateway was a kernel module that registered a hook in the netfilter subsystem. When we started sending traffic, we saw that of the 16 cores available on the APM one was 99% loaded and the rest were idle. Packet loss at the same time was merciless. The reason is this: netfilter hooks in the Linux kernel are called from software interrupts of the network interface. You can look in /proc/interrupts to check whether this is the case on your system.
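As a rough illustration of that check, the sketch below parses text in the format of /proc/interrupts and shows how the interrupts of one device are spread across the CPUs. The helper names, the device name `eth0` and the sample numbers are invented for the example; on a real system the text would come from reading /proc/interrupts.

```python
from typing import Dict, List, Optional, Tuple

# Invented sample in the layout of /proc/interrupts (4 CPUs).
SAMPLE = """\
           CPU0       CPU1       CPU2       CPU3
  0:         46          0          0          0   IO-APIC-edge      timer
 24:     990000       5000       3000       2000   PCI-MSI-edge      eth0
ERR:          0
"""


def parse_interrupts(text: str) -> Dict[str, Tuple[List[int], str]]:
    """Map each interrupt label to (per-CPU counts, description)."""
    lines = text.strip().splitlines()
    ncpu = len(lines[0].split())  # header row: CPU0 CPU1 ...
    result: Dict[str, Tuple[List[int], str]] = {}
    for line in lines[1:]:
        label, _, rest = line.partition(":")
        fields = rest.split()
        counts: List[int] = []
        for field in fields[:ncpu]:
            if not field.isdigit():
                break
            counts.append(int(field))
        if len(counts) != ncpu:
            continue  # summary rows such as ERR carry a single counter
        result[label.strip()] = (counts, " ".join(fields[ncpu:]))
    return result


def irq_shares(text: str, device: str) -> Optional[List[float]]:
    """Fraction of a device's interrupts handled by each CPU."""
    for counts, description in parse_interrupts(text).values():
        if device in description:
            total = sum(counts)
            return [c / total if total else 0.0 for c in counts]
    return None


shares = irq_shares(SAMPLE, "eth0")
print([f"{share:.1%}" for share in shares])
```

A distribution in which one CPU takes nearly all of the interrupts matches the picture described above: one core saturated, the rest idle.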

There are two options for solving this problem:

1. Setting the SMP_AFFINITY kernel parameter.

2. Rebuilding the Linux kernel with the CONFIG_HOTPLUG_CPU parameter (starting with kernel version 2.6.24.3).

In the first case, a bitmask is written to the file /proc/irq/<interrupt number>/smp_affinity, in which the set bits correspond to the cores allowed to handle this interrupt. In most cases, however, this only moves the load from one core to another. For example, when mask 4 (000100) is written to smp_affinity, the interrupt is handled on the third core, but when mask 7 (000111) is written, the interrupt is handled only on the first core. So this solution works only on a very limited set of hardware. The second option is more universal but not always applicable: many manufacturers heavily modify the Linux kernel for their hardware without providing the source code needed to rebuild it, and Crossbeam is among them. We had to rely on some secret knowledge from the Crossbeam developers: they wrote a Crossbeam-specific driver that performs the initial traffic processing before a packet reaches the Linux kernel.
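The mask arithmetic for the first option is easy to get wrong by one bit, so here is a small sketch of it. The helper names and IRQ number 24 are hypothetical; cores are numbered from zero, so the "third core" is core 2. The sketch only builds the value and the shell command, it does not write to /proc.

```python
from typing import Iterable, List


def cores_to_mask(cores: Iterable[int]) -> int:
    """Build an smp_affinity bitmask with one bit set per allowed core."""
    mask = 0
    for core in cores:
        if core < 0:
            raise ValueError("core numbers start at 0")
        mask |= 1 << core
    return mask


def mask_to_cores(mask: int) -> List[int]:
    """List the zero-based core numbers whose bits are set in the mask."""
    return [bit for bit in range(mask.bit_length()) if (mask >> bit) & 1]


def affinity_command(irq: int, cores: Iterable[int]) -> str:
    """Shell command that would apply the mask (the file expects hex)."""
    return f"echo {cores_to_mask(cores):x} > /proc/irq/{irq}/smp_affinity"


print(mask_to_cores(4))                  # third core only
print(mask_to_cores(7))                  # first three cores allowed
print(affinity_command(24, range(16)))   # all 16 cores of an APM
```

Note that the mask only says which cores are allowed to handle the interrupt. As described above, on much hardware the interrupt still ends up on a single core from that set.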

As a result we achieved performance that greatly surprised us: streaming encryption in accordance with GOST 28147-89 at 0.95 Gbit/s. On a single APM. This is achieved by several things: first, parallelizing network traffic processing; second, distributing the load across the cores; and third, the powerful hardware platform.

Since traffic processing can be parallelized, the encryption rate grows almost linearly as APMs are added. That is, if you run the application on our maximum configuration of 3 APMs, you can encrypt at almost 3 Gbit/s. If you are rich, you can buy the top model and reach up to 10 Gbit/s. Not bad, huh?
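The arithmetic behind "almost 3 Gbit/s" can be written out as below. It is an idealized estimate that assumes perfectly linear scaling from the 0.95 Gbit/s measured on one APM; the function name is made up for the example, and real throughput will be somewhat lower.

```python
PER_APM_GBITS = 0.95  # measured on a single APM, per the article


def ideal_throughput(apm_count: int, per_apm: float = PER_APM_GBITS) -> float:
    """Upper-bound estimate assuming perfectly linear scaling."""
    return apm_count * per_apm


for apm_count in (1, 2, 3):
    print(apm_count, f"{ideal_throughput(apm_count):.2f} Gbit/s")
```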

By the way, these figures were obtained experimentally, not derived theoretically as most manufacturers do. We built a test bench that generated a load of 5 Gbit/s. As the HTTP server for which we decrypted packets we took nginx, tuned its settings and those of the Linux network stack, and made nginx serve static HTML at 5 Gbit/s.

To sum up, I would like to draw the following conclusions. Parallelizing traffic processing is a very effective way to overcome bottlenecks; however, this approach began to be applied to protection tools only recently, and for the GOST algorithm we were among the first. Our idea was to parallelize traffic flows together with the encryption of network connections in separate threads and to distribute the computation over a large number of cores on several independent compute modules. Naturally, separating the security functions from the application system's logic was not trivial, but our efforts were fully justified, as the figures show.

Source: https://habr.com/ru/post/144565/
