Mind Games: Getting to Grips with Intel HD Graphics. And Playing?

At the recent Game Developers Conference, while the exhibition floor featured girls even longer-legged and less dressed than the ones in the photo, I, an Intel software engineer, gave a talk “on the features of Intel's integrated graphics solutions and their effective use in game development.”

To my surprise, the audience for the talk was not limited to game developers.

So, at the numerous requests of the listeners, I am sharing this talk with you.

I give a talk on a similar topic every year; this is the sixth or seventh time. But, of course, I do not repeat the same story from year to year, and I do not even do remakes! Time goes by, Intel constantly introduces new graphics solutions, and accordingly new issues appear that need to be covered.

But one thing stays the same: the opening point that the market for Intel integrated graphics is already huge and will keep growing across all categories of users, so these GPUs must be taken into account when developing games and other graphics applications.

Previously, the only confirmation of these words was theoretical: a graph or chart from some serious source such as Mercury Research. Now I have practical, visible confirmation: ultrabooks. For users, the transition to this type of device is as inevitable as the recently completed transition from CRT televisions and monitors to LCDs.

Although the ultrabook specification does not require an integrated GPU, its strict size requirements leave no room for a discrete graphics card, unless that card has been flattened by a tank. That is why all ultrabooks (not just the ones the girls in the photo are holding) use a processor-integrated solution: Intel HD Graphics.


Intel HD Graphics. Curriculum vitae.

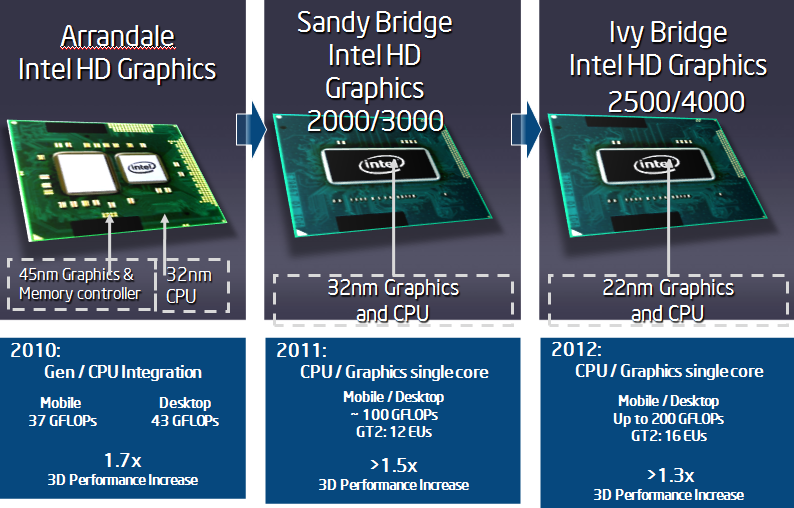

In 2010, Intel's graphics core moved for the first time from the motherboard's north bridge to the processor, and at the same time changed its name from Intel GMA (Graphics Media Accelerator) to Intel HD Graphics. But this was still a “common-law marriage”: the GPU was not fully integrated with the CPU and was even manufactured on a different process, 45 nm versus 32 nm for the CPU. Still, thanks to architectural improvements and a higher clock frequency, 3D performance increased by 70% compared with the previous Intel GMA.

In 2011, second-generation Intel HD Graphics was integrated into the Sandy Bridge die. Under the original plan, the GT1 variant, Intel HD 2000 with 6 execution units (EUs), was to go into mobile devices, and the GT2 variant, Intel HD 3000 with 12 EUs, into desktops. (Incidentally, the HD Graphics architecture was designed from the start so that adding EUs is technically simple.)

In the end, however, Intel HD 3000 went into mobile devices, and Intel HD 2000 overwhelmingly into desktops.

And finally, exactly a month ago, third-generation Intel HD Graphics arrived together with the Intel Ivy Bridge CPU. The third generation stands out not only for raising the number of EUs to 16 in the desktop version, HD Graphics 4000, but also for improved EUs capable of executing three instructions per clock, the addition of an L3 cache and an extra texture sampler, and full hardware support for DirectX 11. Another distinctive feature of the third generation is the variable SIMD width of EU instructions: the system can dispatch HLSL shader threads to the execution units as SIMD8, SIMD16 or SIMD32.

The slide below compares the Intel HD generations in more detail:

Why is the slide so gray? Because it is top secret! In its original form it was labeled Intel Confidential, meaning distribution is strictly forbidden, and it had four columns instead of three. The fourth column held the specifications of a new, not yet released Intel GPU. Next to that new, not yet existing GPU, everything else was shown simply in gray. I did not colorize the slide: this is not “17 Moments of Spring”.

Intel HD Graphics, third generation. References from the workplace.

Which games are playable on Intel HD 4000, that is, run at 25 frames per second or more? To quote the corresponding page on the Intel site: "most mainstream games at a screen resolution of 1280x720 or better." The same site has a list, far from complete, of more than one hundred modern games whose performance on Intel HD 4000/2500 has been verified by Intel engineers.
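The 25 fps threshold translates directly into a per-frame time budget of 1000 / 25 = 40 ms. Below is a minimal sketch of checking measured frame times against that budget; the function names and sample timings are hypothetical. Frame times are averaged (rather than per-frame fps values) because total time divided by frame count gives the true average frame rate.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Time available for one frame at the target frame rate."""
    return 1000.0 / target_fps


def is_playable(frame_times_ms, target_fps=25.0):
    """True if the average frame time fits the budget (25 fps -> 40 ms)."""
    average_ms = sum(frame_times_ms) / len(frame_times_ms)
    return average_ms <= frame_budget_ms(target_fps)


# Hypothetical frame-time samples in milliseconds, not real measurements.
print(frame_budget_ms(25))
print(is_playable([30.0, 35.0, 40.0]))  # average 35 ms
print(is_playable([38.0, 45.0, 52.0]))  # average 45 ms
```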

Now for independent external testing. The reputable international site www.notebookcheck.net, whose name speaks for itself and which tests ALL the graphics cards found in netbooks and ultrabooks, recently published test results for the Intel HD 4000, covering, for example, Metro 2033, Call of Duty: Modern Warfare 3, Battlefield 3, Fifa 2012 and the Unigine Heaven 2.1 benchmark. I will not retell the results; see the original.

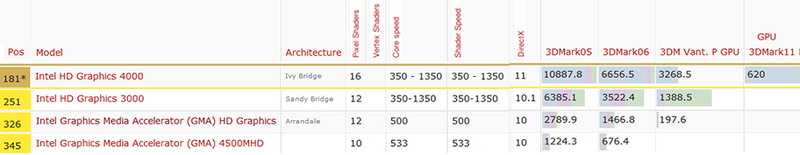

Finally, an excerpt from the overall table of synthetic benchmark results for various Intel GPUs. Note how the cards' positions in the performance ranking change:

The notebookcheck conclusion: “Overall, we are impressed with the new Intel graphics core. Performance has improved by 30% compared with the HD 3000. The difference can be even greater, up to 40%, if the GPU is paired with a powerful quad-core Ivy Bridge CPU such as the i7-3610QM.”

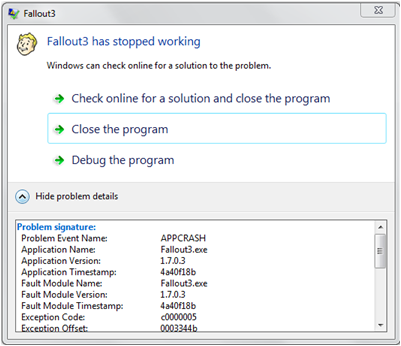

So what should you do if your favorite game does not run properly on Intel HD? The tips at www.intel.com/support/graphics/sb/cs-010486.htm look like Captain Obvious at first glance: change the game settings, check for new game patches, install the latest Intel driver. But in fact these tips work. Intel engineers work closely with game developers, including on patches for compatibility with Intel GPUs. Also, as notebookcheck noted, Intel is “slowly but surely” improving its drivers in terms of both correctness and performance, which resolves problems with games.

This is where the post for ordinary players ends (thank you for your attention, and you are welcome in the comments) and the part for developers begins:

Quick tips for game creators

1. Correctly detect the graphics system's parameters and capabilities: shader support, DirectX extensions and available video memory (note that the Intel GPU has no separate video memory; it shares system memory with the CPU).

For an example of correct and complete detection of the parameters of a system with an Intel GPU, see the GPU Detect sample (source code and application binary) here.

In addition, the Microsoft DirectX SDK (June 2010) includes a Video Memory example for determining the size of available video memory. We also recommend searching the Internet for "Get Video Memory Via WMI".
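A minimal sketch of the detection step, assuming the adapter description has already been read (on Windows, IDXGIAdapter::GetDesc fills a DXGI_ADAPTER_DESC whose VendorId, DedicatedVideoMemory and SharedSystemMemory fields are mirrored here). The AdapterInfo class, the memory-budget heuristic and the sample sizes are hypothetical; GPU Detect and the SDK sample remain the reference.

```python
from dataclasses import dataclass

INTEL_VENDOR_ID = 0x8086  # Intel's PCI vendor ID
MIB = 1024 * 1024


@dataclass
class AdapterInfo:
    """Hypothetical mirror of three DXGI_ADAPTER_DESC fields (values in bytes)."""
    vendor_id: int
    dedicated_video_memory: int
    shared_system_memory: int


def is_intel_gpu(adapter: AdapterInfo) -> bool:
    return adapter.vendor_id == INTEL_VENDOR_ID


def video_memory_budget(adapter: AdapterInfo) -> int:
    """Hypothetical heuristic: an integrated Intel GPU has little or no
    dedicated memory, so the shared system memory it can use is counted too."""
    if is_intel_gpu(adapter):
        return adapter.dedicated_video_memory + adapter.shared_system_memory
    return adapter.dedicated_video_memory


# Illustrative numbers only, not measurements of a real adapter.
intel_hd = AdapterInfo(INTEL_VENDOR_ID, 32 * MIB, 1632 * MIB)
print(is_intel_gpu(intel_hd), video_memory_budget(intel_hd) // MIB)
```

Counting shared memory is only a starting point: that memory is also used by the CPU side of the game, so a real budget would likely reserve part of it.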

2. Take Turbo Boost into account. Thanks to Turbo Boost, the Intel GPU frequency can double, giving a significant performance boost, but only if the thermal state of the system allows it. For obvious reasons, that happens only when the CPU, which shares the chip with the GPU, is not heavily loaded and therefore not very hot.

Hence the next piece of advice: query GPU status with GetData() as rarely as possible. Note that calling GetData() in a loop while waiting for the result loads the CPU to 100%. If you really must, issue the GPU queries at the beginning of frame rendering and give the CPU some useful work before fetching the GetData() results. That way the CPU spends minimal time waiting.
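In Direct3D 11, ID3D11DeviceContext::GetData returns S_FALSE while a query result is not yet available, so a naive loop simply spins. The sketch below simulates the recommended ordering without any graphics API: SimulatedQuery is a hypothetical stand-in whose result becomes ready after a fixed number of ticks, and each empty poll counts as one tick of wasted CPU spinning. It compares polling immediately with doing useful CPU work first.

```python
class SimulatedQuery:
    """Hypothetical stand-in for a GPU query: the result becomes
    available once the simulated GPU has run for `latency` ticks."""

    def __init__(self, latency):
        self.remaining = latency

    def advance(self, ticks):
        self.remaining = max(0, self.remaining - ticks)

    def get_data(self):
        """Non-blocking poll: None while the result is not ready."""
        return None if self.remaining > 0 else "result"


def poll_until_ready(query):
    """Busy-wait on the query; return how many polls came back empty."""
    wasted = 0
    while query.get_data() is None:
        wasted += 1       # CPU burns a tick doing nothing useful
        query.advance(1)  # meanwhile the GPU keeps working
    return wasted


def frame_spin_immediately(latency):
    query = SimulatedQuery(latency)  # query issued at the start of the frame
    return poll_until_ready(query)


def frame_with_useful_work(latency, work_items):
    query = SimulatedQuery(latency)  # query issued at the start of the frame
    for _ in range(work_items):      # e.g. AI, physics, preparing draw data
        query.advance(1)             # the GPU progresses during useful work
    return poll_until_ready(query)


print(frame_spin_immediately(5), frame_with_useful_work(5, 4))
```

Fewer empty polls means less pointless CPU load and heat, which, per the reasoning above, leaves more thermal headroom for Turbo Boost on the GPU.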

3. Take advantage of the Early Z rejection implemented in Intel GPUs. This technology discards fragments that fail the depth test because they are occluded by other objects before any further processing, that is, without running costly pixel shaders on them.

There are two ways to use Early Z efficiently:

- sorting and drawing objects from near to far in depth (front to back)

- a depth-only prepass that fills the depth buffer without drawing color, masking out the areas known to be invisible in the final image.

Clearly, the first method is not suitable for (semi-)transparent objects, and the second carries significant overhead.
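A minimal sketch of the first method, under simplifying assumptions: each object's depth is approximated by the view-space depth of its centre (distance along a unit camera forward vector), opaque objects are drawn front to back so Early Z can reject the fragments they hide, and transparent objects are drawn afterwards back to front for correct blending. The DrawItem class and the scene contents are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DrawItem:
    name: str
    position: tuple  # world-space centre (x, y, z), used as the depth proxy
    transparent: bool = False


def view_depth(pos, cam_pos, cam_forward):
    """Distance along the unit view direction; larger means farther away."""
    return sum((p - c) * f for p, c, f in zip(pos, cam_pos, cam_forward))


def build_draw_order(items, cam_pos, cam_forward):
    def depth(item):
        return view_depth(item.position, cam_pos, cam_forward)

    # Opaque: near to far, so hidden fragments fail the early depth test.
    opaque = sorted((i for i in items if not i.transparent), key=depth)
    # Transparent: far to near, as blending requires.
    transparent = sorted((i for i in items if i.transparent), key=depth, reverse=True)
    return opaque + transparent


scene = [
    DrawItem("far_wall", (0, 0, 50)),
    DrawItem("glass", (0, 0, 5), transparent=True),
    DrawItem("crate", (1, 0, 10)),
    DrawItem("smoke", (0, 1, 20), transparent=True),
    DrawItem("player", (0, 0, 3)),
]
order = build_draw_order(scene, cam_pos=(0, 0, 0), cam_forward=(0, 0, 1))
print([item.name for item in order])
```

The sort does not need to be exact: a roughly front-to-back order already lets Early Z skip most of the pixel shading of occluded objects.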

The source code for examples of using Early Z can be found here.

4. Common sense and general optimization tips for graphics applications. Nobody has repealed them for Intel GPUs. Minimize graphics state and shader changes, batch draw calls, avoid reading from render targets, and in general do not use more than three render buffers. Also optimize geometry for the vertex pre- and post-transform caches (in Direct3D, with D3DXOptimizeVertices and D3DXOptimizeFaces).
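One common way to reduce state and shader changes is to sort draw calls by a state key before submitting them. The sketch below counts how often the bound shader or texture changes before and after such a sort; the DrawCall class, the names and the choice of shader as the primary key (assuming shader switches cost more than texture switches) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DrawCall:
    shader: str
    texture: str
    mesh: str


def count_state_changes(calls):
    """Count how often the shader or texture differs from the previous draw."""
    changes = 0
    prev_shader = prev_texture = None
    for call in calls:
        if call.shader != prev_shader:
            changes += 1
            prev_shader = call.shader
        if call.texture != prev_texture:
            changes += 1
            prev_texture = call.texture
    return changes


def sort_by_state(calls):
    # Shader first (assumed most expensive to switch), then texture.
    return sorted(calls, key=lambda c: (c.shader, c.texture))


calls = [
    DrawCall("lit", "brick", "wall_a"),
    DrawCall("skinned", "hero", "hero"),
    DrawCall("lit", "wood", "crate"),
    DrawCall("lit", "brick", "wall_b"),
    DrawCall("skinned", "hero", "hero_cape"),
]
print(count_state_changes(calls), count_state_changes(sort_by_state(calls)))
```

Grouping by state can conflict with the front-to-back order that Early Z prefers (tip 3), so in practice a sort key often combines state with coarse depth.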

5. And finally, to find performance problems in DirectX applications on an Intel GPU effectively, use the free Intel GPA (Graphics Performance Analyzers) tool.

Source: https://habr.com/ru/post/144486/
