A dream within a dream: mixing virtual and real networks in the "cloud"

Imagine that you own a bank, a steamship and a newspaper, and you have a single “cloud” that has to serve all three companies. That obviously implies server virtualization, but network virtualization will be required as well.

Network virtualization is necessary so that the virtual machines of one user can see each other, while other users cannot see them at all and do not even know they exist.

The second task: imagine that you have a node that cannot be virtualized, for example a specialized storage system or something else that cannot be moved to the “cloud” without heavy losses. It would be good to keep this device visible from the same segment as the virtual machines.

All this is possible. In the first case, with segment separation, your bank would get one piece of the "cloud", the steamship a second, and the newspaper a third. Their information flows would be separated as reliably as if the machines stood in physically different places behind steel walls, although in practice they could sit in the same data center rack. This isolation of individual "cloud" segments gives the user more options and keeps his data more secure. In the second case a virtual network would also be used, but in a slightly different way.

Let's start with the layer at which the virtual network is deployed.

Several technologies make this possible, and each has its limitations. In our practice we settled on OpenFlow, one of the mechanisms for implementing SDN (Software Defined Networking): the network is configured in software and the equipment adjusts to that configuration, so nobody has to run around setting parameters by hand. The OpenFlow protocol itself deserves a separate article. For now the important point is that we use the implementation developed by Nicira, which was created specifically for network virtualization combined with server virtualization.
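To make the idea of "the network is configured in software" more concrete, here is a minimal sketch of a match-action flow table, the kind of state an OpenFlow-style controller installs into a switch. This is a toy model, not the OpenFlow wire protocol and not Nicira's implementation: the class names, the two match fields and the table-miss behavior are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class FlowRule:
    """One match-action entry; a match field left as None is a wildcard."""
    priority: int
    action: str                     # hypothetical action names, e.g. "output:2"
    in_port: Optional[int] = None
    dst_mac: Optional[str] = None

    def matches(self, in_port: int, dst_mac: str) -> bool:
        return (self.in_port in (None, in_port)
                and self.dst_mac in (None, dst_mac))


@dataclass
class FlowTable:
    """Toy switch state; in a real deployment a controller installs the rules."""
    rules: List[FlowRule] = field(default_factory=list)

    def install(self, rule: FlowRule) -> None:
        self.rules.append(rule)
        # Highest priority first, so lookup can stop at the first match.
        self.rules.sort(key=lambda r: r.priority, reverse=True)

    def lookup(self, in_port: int, dst_mac: str) -> str:
        for rule in self.rules:
            if rule.matches(in_port, dst_mac):
                return rule.action
        # Assumption of this sketch: unmatched packets go to the controller.
        return "send_to_controller"


table = FlowTable()
table.install(FlowRule(priority=0, action="drop"))  # catch-all rule
table.install(FlowRule(priority=10, action="output:2",
                       dst_mac="02:00:00:00:00:02"))

print(table.lookup(1, "02:00:00:00:00:02"))
print(table.lookup(1, "02:00:00:00:00:03"))
```

The point of the sketch is that forwarding behavior is plain data: changing the network means installing or removing rules, not touching the equipment by hand.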

The distinguishing feature of this implementation is that network virtualization starts right on the hypervisor, where the virtual machines run. It uses the overlay networking approach, in which the cloud does not care about the physical network topology at all. The only requirement is working TCP/IP connectivity, on top of which the logical networks are built. As long as that is in place, any network virtualization setup can be used, whether the machines sit in the same rack or are spread across different regions. The OpenFlow standard is well known to the large vendors: hardware is made by NEC, Extreme Networks and HP, and Google and Yahoo, for example, use the same technology in their own work.
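The overlay idea can be sketched in a few lines: a tenant's frame is wrapped with a logical network ID and sent to whichever hypervisor hosts the destination machine, so the physical topology in between does not matter. The 4-byte header, the `LOCATIONS` table and the addresses below are hypothetical and only illustrate the principle; this is not the tunnel format Nicira actually uses.

```python
import struct
from typing import Optional, Tuple

# Hypothetical encapsulation header: a 4-byte logical network ID.
HEADER = struct.Struct("!I")

# (logical network ID, VM MAC) -> IP of the hypervisor hosting that VM.
# All values are made up for the example.
LOCATIONS = {
    (1, "02:00:00:00:00:01"): "10.0.0.11",
    (2, "02:00:00:00:00:01"): "10.0.1.12",  # same MAC, different logical network
}


def encapsulate(network_id: int, frame: bytes) -> bytes:
    """Prefix the tenant's frame with its logical network ID."""
    return HEADER.pack(network_id) + frame


def decapsulate(payload: bytes) -> Tuple[int, bytes]:
    """Recover the logical network ID and the original frame."""
    (network_id,) = HEADER.unpack_from(payload)
    return network_id, payload[HEADER.size:]


def tunnel_endpoint(network_id: int, dst_mac: str) -> Optional[str]:
    """Find the hypervisor to send to; None means the VM is unknown here."""
    return LOCATIONS.get((network_id, dst_mac))


packet = encapsulate(2, b"tenant frame bytes")
print(tunnel_endpoint(2, "02:00:00:00:00:01"))
print(decapsulate(packet))
```

Because every lookup is keyed by the logical network ID as well as the MAC address, a frame from one logical network can never be delivered into another, even when the addresses inside them coincide.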

Why do we need a software switch to configure such networks? Initially we had other options. VLANs (802.1Q) could have been used, but they come with problems: every VLAN in use has to be registered on the switches, and the number of VLANs is limited by the hardware. So we could not run a large number of networks. While we were working on this problem, my colleagues suggested a software switch for Linux that uses OpenFlow. We contacted Nicira, and as a result we began working with them on building our own cloud.
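The VLAN ceiling follows directly from the tag format: the VLAN ID field in an 802.1Q tag is 12 bits wide, and two of its values are reserved. The short calculation below shows the resulting limit and compares it with a wider identifier; the 32-bit width is only an assumed example of what an overlay header could carry, not a statement about the format used in this cloud.

```python
VLAN_ID_BITS = 12               # width of the VLAN ID field in an 802.1Q tag
RESERVED_VLAN_IDS = {0, 4095}   # 0 marks a priority-only tag, 4095 is reserved


def id_space(bits: int) -> int:
    """Number of distinct values an identifier of the given width can hold."""
    return 2 ** bits


usable_vlans = id_space(VLAN_ID_BITS) - len(RESERVED_VLAN_IDS)
overlay_ids = id_space(32)      # assumed 32-bit logical network ID

print(usable_vlans)             # 4094
print(overlay_ids)              # 4294967296
```

In practice the limit is often lower still, because individual switches may support fewer active VLANs than the tag format allows.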

As a result, we can let users build networks of any complexity. A customer who moves into our “cloud” gets not only virtual machines but also real freedom of action, without strange restrictions. This is very convenient for the many companies that are not building their IT infrastructure from scratch.

Here are some examples of what network virtualization makes possible:

- The simplest example: last year we held an Olympiad for students. Two virtual machines ran, each in its own network. A third machine was connected to both networks and acted as a router between them. The students solved system administration tasks right in the “cloud”, as if they were working with real physical networks in different cities.

- By adding machines and switches like pieces of a construction set, we can build more complex networks. One interesting result is a network that runs only IPv6, with no IPv4 at all. A single machine faces the Internet and has a dual stack; the other machines have no IPv4 addresses, yet they can still reach the IPv4 Internet through that router. It was simply an interesting experiment.

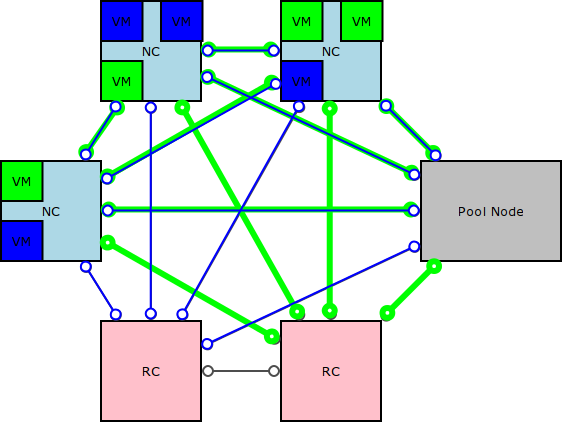

- The practical application is straightforward: in each network we give the user a dedicated L2 segment where he can run any traffic he likes, even IPX, for example. Isolation is complete: intercepting traffic between networks is simply not feasible. On top of that, we allow physical servers to be connected to the cloud network, for example those that cannot be virtualized for one reason or another. With the help of special software the physical network segment is extended into the “cloud”, and the virtual machines end up in the same segment as the hardware ones. This is very convenient when moving the infrastructure of a large company to the “cloud”.

- Not long ago we launched virtual private "clouds". This is an analogue of Amazon VPC, only more flexible. Normally a user starts virtual machines in a network that we manage: we run our DHCP server there, hand out addresses to the machines, route them to the Internet through our gateway, and so on. In that case the network addressing is determined by the “cloud” and does not always suit the user. So he can create a virtual private “cloud”, set up a network with arbitrary addressing for himself, and run his machines there. He can also establish a secure connection from the office to this “cloud” and work with his virtual machines. Moreover, two different users can create networks with identical addressing, and the networks will not overlap. This is made possible by Nicira network virtualization combined with smart routers, network stack isolation and similar solutions. Users can manage all of this themselves, without involving the data center administrator.

- The latest addition: we recently started using another Nicira feature, a high-availability cluster for connecting an external network to the “cloud”. Two cables come from the user's side, and we plug both into the "cloud". If one of the links fails (for example, a client switch goes down), everything keeps working through the second. This relies on the 802.1ag protocol: two nodes join the virtual and physical networks but work in active-passive mode, where one node carries traffic and the other stands by. When the first node fails, the second starts passing traffic through itself, and does so quickly enough that packets are not even lost.
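The active-passive behavior from the last example can be sketched as a simple liveness check: the preferred node carries traffic while its keepalives are fresh, and the standby takes over once they stop. This is a generic heartbeat model under assumed names and an assumed timeout, not the 802.1ag frame exchange and not Nicira's implementation.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Gateway:
    name: str
    last_heartbeat: float   # time of the most recent keepalive, in seconds


def pick_active(gateways: List[Gateway], now: float,
                timeout: float = 0.3) -> Optional[str]:
    """Return the first gateway, in preference order, that is still alive.

    A gateway counts as alive if its last keepalive is at most `timeout`
    seconds old. The 0.3 s default is an arbitrary value for this sketch.
    """
    for gw in gateways:
        if now - gw.last_heartbeat <= timeout:
            return gw.name
    return None             # no live gateway: the external link is down


# Both nodes healthy: the preferred one is active, the other stands by.
pair = [Gateway("gw-a", last_heartbeat=10.0),
        Gateway("gw-b", last_heartbeat=10.0)]
print(pick_active(pair, now=10.1))

# gw-a stops sending keepalives while gw-b keeps going: gw-b takes over.
pair[1].last_heartbeat = 10.5
print(pick_active(pair, now=10.6))
```

How quickly the standby takes over is governed by the keepalive interval and the timeout, which is why short intervals matter if traffic is to switch over without noticeable loss.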

Source: https://habr.com/ru/post/144454/
